Quantization in machine learning (Part 2): Data preprocessing, table selection, training CatBoost models

The article considers the practical application of quantization in the construction of tree models. The methods for selecting quantum tables and data preprocessing are considered. No complex mathematical equations are used.

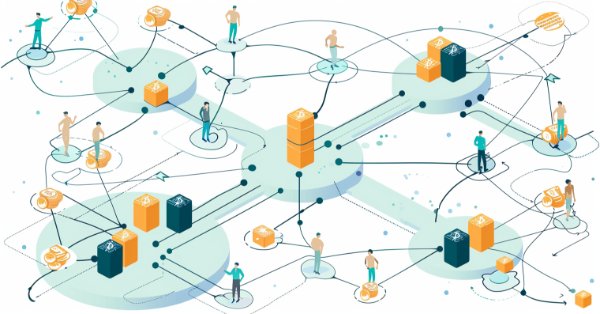

Hybridization of population algorithms. Sequential and parallel structures

Here we will dive into the world of hybridization of optimization algorithms by looking at three key types: strategy mixing, sequential and parallel hybridization. We will conduct a series of experiments combining and testing relevant optimization algorithms.

Neural networks made easy (Part 82): Ordinary Differential Equation models (NeuralODE)

In this article, we will discuss another type of models that are aimed at studying the dynamics of the environmental state.

The Group Method of Data Handling: Implementing the Multilayered Iterative Algorithm in MQL5

In this article we describe the implementation of the Multilayered Iterative Algorithm of the Group Method of Data Handling in MQL5.

Population optimization algorithms: Bird Swarm Algorithm (BSA)

The article explores the bird swarm-based algorithm (BSA) inspired by the collective flocking interactions of birds in nature. The different search strategies of individuals in BSA, including switching between flight, vigilance and foraging behavior, make this algorithm multifaceted. It uses the principles of bird flocking, communication, adaptability, leading and following to efficiently find optimal solutions.

Reimagining Classic Strategies in Python: MA Crossovers

In this article, we revisit the classic moving average crossover strategy to assess its current effectiveness. Given the amount of time time since its inception, we explore the potential enhancements that AI can bring to this traditional trading strategy. By incorporating AI techniques, we aim to leverage advanced predictive capabilities to potentially optimize trade entry and exit points, adapt to varying market conditions, and enhance overall performance compared to conventional approaches.

Neural networks made easy (Part 70): Closed-Form Policy Improvement Operators (CFPI)

In this article, we will get acquainted with an algorithm that uses closed-form policy improvement operators to optimize Agent actions in offline mode.

Population optimization algorithms: Evolution Strategies, (μ,λ)-ES and (μ+λ)-ES

The article considers a group of optimization algorithms known as Evolution Strategies (ES). They are among the very first population algorithms to use evolutionary principles for finding optimal solutions. We will implement changes to the conventional ES variants and revise the test function and test stand methodology for the algorithms.

Population optimization algorithms: Binary Genetic Algorithm (BGA). Part I

In this article, we will explore various methods used in binary genetic and other population algorithms. We will look at the main components of the algorithm, such as selection, crossover and mutation, and their impact on the optimization. In addition, we will study data presentation methods and their impact on optimization results.

Time series clustering in causal inference

Clustering algorithms in machine learning are important unsupervised learning algorithms that can divide the original data into groups with similar observations. By using these groups, you can analyze the market for a specific cluster, search for the most stable clusters using new data, and make causal inferences. The article proposes an original method for time series clustering in Python.

A feature selection algorithm using energy based learning in pure MQL5

In this article we present the implementation of a feature selection algorithm described in an academic paper titled,"FREL: A stable feature selection algorithm", called Feature weighting as regularized energy based learning.

Role of random number generator quality in the efficiency of optimization algorithms

In this article, we will look at the Mersenne Twister random number generator and compare it with the standard one in MQL5. We will also find out the influence of the random number generator quality on the results of optimization algorithms.

Neural networks made easy (Part 77): Cross-Covariance Transformer (XCiT)

In our models, we often use various attention algorithms. And, probably, most often we use Transformers. Their main disadvantage is the resource requirement. In this article, we will consider a new algorithm that can help reduce computing costs without losing quality.

Neural networks made easy (Part 69): Density-based support constraint for the behavioral policy (SPOT)

In offline learning, we use a fixed dataset, which limits the coverage of environmental diversity. During the learning process, our Agent can generate actions beyond this dataset. If there is no feedback from the environment, how can we be sure that the assessments of such actions are correct? Maintaining the Agent's policy within the training dataset becomes an important aspect to ensure the reliability of training. This is what we will talk about in this article.

Population optimization algorithms: Whale Optimization Algorithm (WOA)

Whale Optimization Algorithm (WOA) is a metaheuristic algorithm inspired by the behavior and hunting strategies of humpback whales. The main idea of WOA is to mimic the so-called "bubble-net" feeding method, in which whales create bubbles around prey and then attack it in a spiral motion.

Population optimization algorithms: Boids Algorithm

The article considers Boids algorithm based on unique examples of animal flocking behavior. In turn, the Boids algorithm serves as the basis for the creation of the whole class of algorithms united under the name "Swarm Intelligence".

Causal inference in time series classification problems

In this article, we will look at the theory of causal inference using machine learning, as well as the custom approach implementation in Python. Causal inference and causal thinking have their roots in philosophy and psychology and play an important role in our understanding of reality.

MQL5 Wizard Techniques you should know (Part 23): CNNs

Convolutional Neural Networks are another machine learning algorithm that tend to specialize in decomposing multi-dimensioned data sets into key constituent parts. We look at how this is typically achieved and explore a possible application for traders in another MQL5 wizard signal class.

Population optimization algorithms: Bacterial Foraging Optimization - Genetic Algorithm (BFO-GA)

The article presents a new approach to solving optimization problems by combining ideas from bacterial foraging optimization (BFO) algorithms and techniques used in the genetic algorithm (GA) into a hybrid BFO-GA algorithm. It uses bacterial swarming to globally search for an optimal solution and genetic operators to refine local optima. Unlike the original BFO, bacteria can now mutate and inherit genes.

Population optimization algorithms: Bird Swarm Algorithm (BSA)

The article explores the bird swarm-based algorithm (BSA) inspired by the collective flocking interactions of birds in nature. The different search strategies of individuals in BSA, including switching between flight, vigilance and foraging behavior, make this algorithm multifaceted. It uses the principles of bird flocking, communication, adaptability, leading and following to efficiently find optimal solutions.

Integrating MQL5 with data processing packages (Part 1): Advanced Data analysis and Statistical Processing

Integration enables seamless workflow where raw financial data from MQL5 can be imported into data processing packages like Jupyter Lab for advanced analysis including statistical testing.

Tuning LLMs with Your Own Personalized Data and Integrating into EA (Part 5): Develop and Test Trading Strategy with LLMs(I)-Fine-tuning

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

MQL5 Wizard Techniques you should know (Part 29): Continuation on Learning Rates with MLPs

We wrap up our look at learning rate sensitivity to the performance of Expert Advisors by primarily examining the Adaptive Learning Rates. These learning rates aim to be customized for each parameter in a layer during the training process and so we assess potential benefits vs the expected performance toll.

Eigenvectors and eigenvalues: Exploratory data analysis in MetaTrader 5

In this article we explore different ways in which the eigenvectors and eigenvalues can be applied in exploratory data analysis to reveal unique relationships in data.

MQL5 Wizard Techniques you should know (Part 30): Spotlight on Batch-Normalization in Machine Learning

Batch normalization is the pre-processing of data before it is fed into a machine learning algorithm, like a neural network. This is always done while being mindful of the type of Activation to be used by the algorithm. We therefore explore the different approaches that one can take in reaping the benefits of this, with the help of a wizard assembled Expert Advisor.

Integrating MQL5 with data processing packages (Part 2): Machine Learning and Predictive Analytics

In our series on integrating MQL5 with data processing packages, we delve in to the powerful combination of machine learning and predictive analysis. We will explore how to seamlessly connect MQL5 with popular machine learning libraries, to enable sophisticated predictive models for financial markets.

Reimagining Classic Strategies (Part VI): Multiple Time-Frame Analysis

In this series of articles, we revisit classic strategies to see if we can improve them using AI. In today's article, we will examine the popular strategy of multiple time-frame analysis to judge if the strategy would be enhanced with AI.

MQL5 Wizard Techniques you should know (Part 34): Price-Embedding with an Unconventional RBM

Restricted Boltzmann Machines are a form of neural network that was developed in the mid 1980s at a time when compute resources were prohibitively expensive. At its onset, it relied on Gibbs Sampling and Contrastive Divergence in order to reduce dimensionality or capture the hidden probabilities/properties over input training data sets. We examine how Backpropagation can perform similarly when the RBM ‘embeds’ prices for a forecasting Multi-Layer-Perceptron.

Neural Network in Practice: Secant Line

As already explained in the theoretical part, when working with neural networks we need to use linear regressions and derivatives. Why? The reason is that linear regression is one of the simplest formulas in existence. Essentially, linear regression is just an affine function. However, when we talk about neural networks, we are not interested in the effects of direct linear regression. We are interested in the equation that generates this line. We are not that interested in the line created. Do you know the main equation that we need to understand? If not, I recommend reading this article to understanding it.