Neural networks made easy (Part 73): AutoBots for predicting price movements

We continue to discuss algorithms for training trajectory prediction models. In this article, we will get acquainted with a method called "AutoBots".

Build Self Optimizing Expert Advisors in MQL5 (Part 8): Multiple Strategy Analysis

How best can we combine multiple strategies to create a powerful ensemble strategy? Join us in this discussion as we look to fit together three different strategies into our trading application. Traders often employ specialized strategies for opening and closing positions, and we want to know if our machines can perform this task better. For our opening discussion, we will get familiar with the faculties of the strategy tester and the principles of OOP we will need for this task.

Data label for timeseries mining (Part 2):Make datasets with trend markers using Python

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Fast trading strategy tester in Python using Numba

The article implements a fast strategy tester for machine learning models using Numba. It is 50 times faster than the pure Python strategy tester. The author recommends using this library to speed up mathematical calculations, especially the ones involving loops.

Integrate Your Own LLM into EA (Part 5): Develop and Test Trading Strategy with LLMs (III) – Adapter-Tuning

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Wrapping ONNX models in classes

Object-oriented programming enables creation of a more compact code that is easy to read and modify. Here we will have a look at the example for three ONNX models.

Integrate Your Own LLM into EA (Part 2): Example of Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Integrating MQL5 with data processing packages (Part 5): Adaptive Learning and Flexibility

This part focuses on building a flexible, adaptive trading model trained on historical XAUUSD data, preparing it for ONNX export and potential integration into live trading systems.

Build Self Optimizing Expert Advisors With MQL5 And Python (Part II): Tuning Deep Neural Networks

Machine learning models come with various adjustable parameters. In this series of articles, we will explore how to customize your AI models to fit your specific market using the SciPy library.

Neural networks made easy (Part 20): Autoencoders

We continue to study unsupervised learning algorithms. Some readers might have questions regarding the relevance of recent publications to the topic of neural networks. In this new article, we get back to studying neural networks.

Neural networks made easy (Part 53): Reward decomposition

We have already talked more than once about the importance of correctly selecting the reward function, which we use to stimulate the desired behavior of the Agent by adding rewards or penalties for individual actions. But the question remains open about the decryption of our signals by the Agent. In this article, we will talk about reward decomposition in terms of transmitting individual signals to the trained Agent.

Category Theory in MQL5 (Part 15) : Functors with Graphs

This article on Category Theory implementation in MQL5, continues the series by looking at Functors but this time as a bridge between Graphs and a set. We revisit calendar data, and despite its limitations in Strategy Tester use, make the case using functors in forecasting volatility with the help of correlation.

MQL5 Wizard Techniques you should know (Part 80): Using Patterns of Ichimoku and the ADX-Wilder with TD3 Reinforcement Learning

This article follows up ‘Part-74’, where we examined the pairing of Ichimoku and the ADX under a Supervised Learning framework, by moving our focus to Reinforcement Learning. Ichimoku and ADX form a complementary combination of support/resistance mapping and trend strength spotting. In this installment, we indulge in how the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm can be used with this indicator set. As with earlier parts of the series, the implementation is carried out in a custom signal class designed for integration with the MQL5 Wizard, which facilitates seamless Expert Advisor assembly.

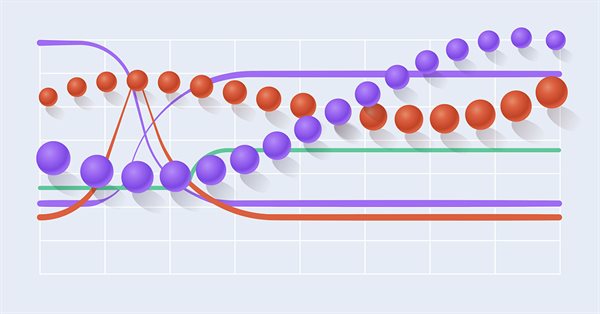

Data Science and ML (Part 26): The Ultimate Battle in Time Series Forecasting — LSTM vs GRU Neural Networks

In the previous article, we discussed a simple RNN which despite its inability to understand long-term dependencies in the data, was able to make a profitable strategy. In this article, we are discussing both the Long-Short Term Memory(LSTM) and the Gated Recurrent Unit(GRU). These two were introduced to overcome the shortcomings of a simple RNN and to outsmart it.

Example of Auto Optimized Take Profits and Indicator Parameters with SMA and EMA

This article presents a sophisticated Expert Advisor for forex trading, combining machine learning with technical analysis. It focuses on trading Apple stock, featuring adaptive optimization, risk management, and multiple strategies. Backtesting shows promising results with high profitability but also significant drawdowns, indicating potential for further refinement.

Data Science and ML(Part 30): The Power Couple for Predicting the Stock Market, Convolutional Neural Networks(CNNs) and Recurrent Neural Networks(RNNs)

In this article, We explore the dynamic integration of Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) in stock market prediction. By leveraging CNNs' ability to extract patterns and RNNs' proficiency in handling sequential data. Let us see how this powerful combination can enhance the accuracy and efficiency of trading algorithms.

Neural Networks Made Easy (Part 88): Time-Series Dense Encoder (TiDE)

In an attempt to obtain the most accurate forecasts, researchers often complicate forecasting models. Which in turn leads to increased model training and maintenance costs. Is such an increase always justified? This article introduces an algorithm that uses the simplicity and speed of linear models and demonstrates results on par with the best models with a more complex architecture.

Neural Networks in Trading: An Ensemble of Agents with Attention Mechanisms (Final Part)

In the previous article, we introduced the multi-agent adaptive framework MASAAT, which uses an ensemble of agents to perform cross-analysis of multimodal time series at different data scales. Today we will continue implementing the approaches of this framework in MQL5 and bring this work to a logical conclusion.

Integrating ML models with the Strategy Tester (Conclusion): Implementing a regression model for price prediction

This article describes the implementation of a regression model based on a decision tree. The model should predict prices of financial assets. We have already prepared the data, trained and evaluated the model, as well as adjusted and optimized it. However, it is important to note that this model is intended for study purposes only and should not be used in real trading.

Neural networks made easy (Part 66): Exploration problems in offline learning

Models are trained offline using data from a prepared training dataset. While providing certain advantages, its negative side is that information about the environment is greatly compressed to the size of the training dataset. Which, in turn, limits the possibilities of exploration. In this article, we will consider a method that enables the filling of a training dataset with the most diverse data possible.

Neural Networks in Trading: Optimizing the Transformer for Time Series Forecasting (LSEAttention)

The LSEAttention framework offers improvements to the Transformer architecture. It was designed specifically for long-term multivariate time series forecasting. The approaches proposed by the authors of the method can be applied to solve problems of entropy collapse and learning instability, which are often encountered with vanilla Transformer.

Population optimization algorithms: Artificial Bee Colony (ABC)

In this article, we will study the algorithm of an artificial bee colony and supplement our knowledge with new principles of studying functional spaces. In this article, I will showcase my interpretation of the classic version of the algorithm.

Data Science and Machine Learning(Part 14): Finding Your Way in the Markets with Kohonen Maps

Are you looking for a cutting-edge approach to trading that can help you navigate complex and ever-changing markets? Look no further than Kohonen maps, an innovative form of artificial neural networks that can help you uncover hidden patterns and trends in market data. In this article, we'll explore how Kohonen maps work, and how they can be used to develop smarter, more effective trading strategies. Whether you're a seasoned trader or just starting out, you won't want to miss this exciting new approach to trading.

Neural Networks in Trading: Scene-Aware Object Detection (HyperDet3D)

We invite you to get acquainted with a new approach to detecting objects using hypernetworks. A hypernetwork generates weights for the main model, which allows taking into account the specifics of the current market situation. This approach allows us to improve forecasting accuracy by adapting the model to different trading conditions.

Trading Insights Through Volume: Trend Confirmation

The Enhanced Trend Confirmation Technique combines price action, volume analysis, and machine learning to identify genuine market movements. It requires both price breakouts and volume surges (50% above average) for trade validation, while using an LSTM neural network for additional confirmation. The system employs ATR-based position sizing and dynamic risk management, making it adaptable to various market conditions while filtering out false signals.

Population optimization algorithms: Stochastic Diffusion Search (SDS)

The article discusses Stochastic Diffusion Search (SDS), which is a very powerful and efficient optimization algorithm based on the principles of random walk. The algorithm allows finding optimal solutions in complex multidimensional spaces, while featuring a high speed of convergence and the ability to avoid local extrema.

Experiments with neural networks (Part 4): Templates

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 as a self-sufficient tool for using neural networks in trading. Simple explanation.

Machine Learning Blueprint (Part 4): The Hidden Flaw in Your Financial ML Pipeline — Label Concurrency

Discover how to fix a critical flaw in financial machine learning that causes overfit models and poor live performance—label concurrency. When using the triple-barrier method, your training labels overlap in time, violating the core IID assumption of most ML algorithms. This article provides a hands-on solution through sample weighting. You will learn how to quantify temporal overlap between trading signals, calculate sample weights that reflect each observation's unique information, and implement these weights in scikit-learn to build more robust classifiers. Learning these essential techniques will make your trading models more robust, reliable and profitable.

Neural Networks Made Easy (Part 94): Optimizing the Input Sequence

When working with time series, we always use the source data in their historical sequence. But is this the best option? There is an opinion that changing the sequence of the input data will improve the efficiency of the trained models. In this article I invite you to get acquainted with one of the methods for optimizing the input sequence.

Data Science and Machine Learning (Part 25): Forex Timeseries Forecasting Using a Recurrent Neural Network (RNN)

Recurrent neural networks (RNNs) excel at leveraging past information to predict future events. Their remarkable predictive capabilities have been applied across various domains with great success. In this article, we will deploy RNN models to predict trends in the forex market, demonstrating their potential to enhance forecasting accuracy in forex trading.

Neural Networks in Trading: A Multimodal, Tool-Augmented Agent for Financial Markets (Final Part)

We continue to develop the algorithms for FinAgent, a multimodal financial trading agent designed to analyze multimodal market dynamics data and historical trading patterns.

Category Theory in MQL5 (Part 3)

Category Theory is a diverse and expanding branch of Mathematics which as of yet is relatively uncovered in the MQL5 community. These series of articles look to introduce and examine some of its concepts with the overall goal of establishing an open library that provides insight while hopefully furthering the use of this remarkable field in Traders' strategy development.

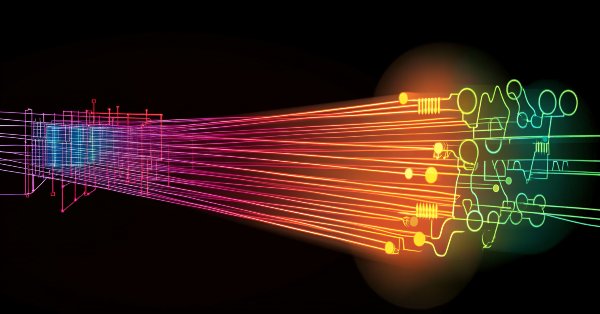

Analyzing all price movement options on the IBM quantum computer

We will use a quantum computer from IBM to discover all price movement options. Sounds like science fiction? Welcome to the world of quantum computing for trading!

Integrating ML models with the Strategy Tester (Part 3): Managing CSV files (II)

This material provides a complete guide to creating a class in MQL5 for efficient management of CSV files. We will see the implementation of methods for opening, writing, reading, and transforming data. We will also consider how to use them to store and access information. In addition, we will discuss the limitations and the most important aspects of using such a class. This article ca be a valuable resource for those who want to learn how to process CSV files in MQL5.

Population optimization algorithms: Firefly Algorithm (FA)

In this article, I will consider the Firefly Algorithm (FA) optimization method. Thanks to the modification, the algorithm has turned from an outsider into a real rating table leader.

Matrix Utils, Extending the Matrices and Vector Standard Library Functionality

Matrix serves as the foundation of machine learning algorithms and computers in general because of their ability to effectively handle large mathematical operations, The Standard library has everything one needs but let's see how we can extend it by introducing several functions in the utils file, that are not yet available in the library

Non-linear regression models on the stock exchange

Non-linear regression models on the stock exchange: Is it possible to predict financial markets? Let's consider creating a model for forecasting prices for EURUSD, and make two robots based on it - in Python and MQL5.

Neuro-symbolic systems in algorithmic trading: Combining symbolic rules and neural networks

The article describes the experience of developing a hybrid trading system that combines classical technical analysis with neural networks. The author provides a detailed analysis of the system architecture from basic pattern analysis and neural network structure to the mechanisms behind trading decisions, and shares real code and practical observations.

Neural networks made easy (Part 35): Intrinsic Curiosity Module

We continue to study reinforcement learning algorithms. All the algorithms we have considered so far required the creation of a reward policy to enable the agent to evaluate each of its actions at each transition from one system state to another. However, this approach is rather artificial. In practice, there is some time lag between an action and a reward. In this article, we will get acquainted with a model training algorithm which can work with various time delays from the action to the reward.

Data Science and Machine Learning (Part 19): Supercharge Your AI models with AdaBoost

AdaBoost, a powerful boosting algorithm designed to elevate the performance of your AI models. AdaBoost, short for Adaptive Boosting, is a sophisticated ensemble learning technique that seamlessly integrates weak learners, enhancing their collective predictive strength.