Сode Lock Algorithm (CLA)

Contents

1. Introduction

2. Algorithm implementation

3.Test results

1. Introduction

Code locks, also known as digital or combination locks, are security mechanisms used to control access to rooms, safes, cabinets, and other objects. They differ from ordinary locks in that instead of using a key, a certain number combination is inserted to open them.

Code locks are usually equipped with a keypad, special cylinders or other rotary mechanisms allowing a user to enter a code sequence. Once the correct combination is entered, the code lock activates a mechanism that unlocks the lock, allowing a user to open a door or access the contents of a safe. User can set their own codes or use the provided code to open the lock.

Code lock advantages:

- Security. Code locks can provide a high level of security, especially if the codes are changed regularly.

- Convenience. There is no need to carry the keys, which makes code locks convenient to use.

- Ability to set multiple codes. Some models allow setting several different codes for different users or for different time intervals.

Code locks are used in a variety of areas including home security, commercial buildings, hotels, schools, offices and other institutions where access control is required. Using code locks in the context of optimization algorithms can be an interesting idea. One possible application of code locks in optimization algorithms could be to create a system that uses the principles of code locks to solve optimization problems, such as finding optimal parameter values in machine learning problems, or to solve other optimization problems.

The code lock operation principle, based on entering the correct numerical combination to unlock, can be similar to the mechanisms of optimization algorithms that try to find the optimal solution by iteratively changing the parameters and evaluating their efficiency.

For example, one can imagine an optimization algorithm that uses the code lock analogy as follows:

- Combination as parameters. The parameters to be optimized can be represented as a "combination" of inputs.

- Checking the combination validity. The algorithm can change the values of the parameters and assess their efficiency, similar to how a user enters different combinations into a combination lock to check their validity.

- Optimal solution as unblocking. When the algorithm finds optimal parameter values, this can be viewed as "unlocking" the optimal solution.

Thus, code locks inspired me to develop an optimization algorithm, which can be used to find optimal solutions in various problems, including machine learning and trading systems development.

2. Algorithm implementation

Let's imagine a population of agents as a population of locks, where each lock represents a solution to a problem. Then we can sketch out an approximate pseudocode for the code lock algorithm:

1. Initialization:

Create an array of locks of the popSize size. Each lock has the coords set of disks, while each set has lockDiscs disks.

2. Selecting a combination of locks:

If this is the first step, initialize each disk of each set of each lock to a random number between 0 and 9.

Otherwise, for each lock:

- If a random number is less than copyProb, copy a set of disks from a randomly selected lock.

- Otherwise, for each set of disks:

- If a random number is less than rotateProb, set the disk to a random number from 0 to 9.

- Otherwise, copy the disk from a randomly selected lock.

3. Evaluating the lock combinations quality:

Update the combination quality of each lock.

Find the lock with the best combination quality and update the global best solution if necessary.

Copy all locks to the common lock basket.

Sort the basket of locks by fitness.

4. Repeat steps 2 and 3 for the specified number of epochs or until the stop criterion is reached.

The concept is quite exciting, but some aspects still raise questions. In particular, it is not entirely clear how the combination code in the lock can represent a solution to a problem. It would be helpful to have an illustration for a deeper understanding.

So, let's imagine that we represent the solution to a problem where we need to optimize one or more parameters in the form of a code lock. In this case, each parameter can be represented as a combination of numbers, where each number is located on a separate disk. There can be any number of disks. The number is specified as an external parameter of the algorithm. Thus, each parameter is represented by a set of disks with digital labels.

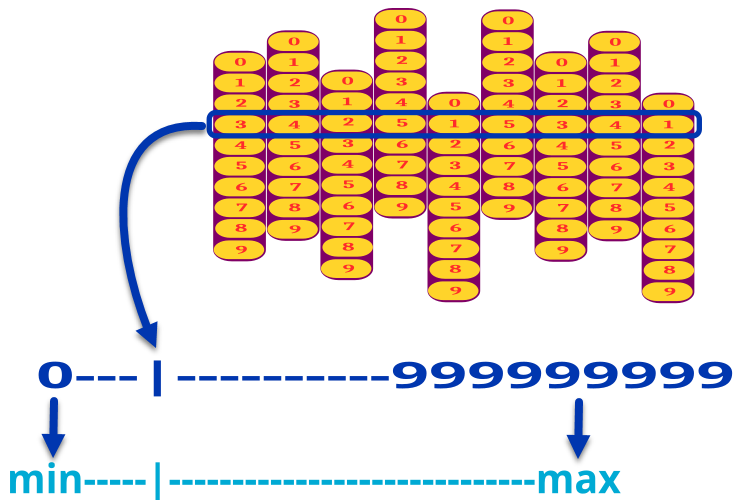

For example, if a task parameter has a set of 9 disks, each with numbers from 0 to 9, then the combination can be from 000000000 to 999999999. We scale the resulting number into the range from "min" to "max" of the corresponding parameter. Thus, if we have, for example, three optimized parameters, then our combination lock will have 3 x 9 = 27 digital disks. Figure 1 illustrates the coding mechanism for one parameter.

Figure 1. Decoding a task parameter from a digital code to a real value

The operators in optimization algorithms, such as crossover and mutation, are integrated into the CLA optimization algorithm using the code lock idea.

1. Crossover. Crossover in genetic algorithms is designed to combine the genetic material of two parents to create offspring.

- In the context of code locks we can imagine a crossover as a combination of disk values from different locks to create a new combination of encoded task parameters.

- This process provides combinatorial possibilities for the algorithm to explore new combinations of parameters, similar to how a user can try different combinations in a code lock, and still use the most successful combinations of numbers on different locks.

2. Mutation. Mutation in genetic algorithms is the random change in genetic material to create diversity in a population.

- In the context of code locks, mutation can be interpreted as randomly rotating the discs to any number position to change the combination in the code lock.

- This process helps the algorithm explore new parameter combinations throughout the solution space and avoid getting stuck in local optima.

In general, integrating crossover and mutation into the optimization algorithm can increase the diversity of solutions, speed up the convergence to the optimal solution, and help avoid premature convergence. This approach can be useful in optimization problems with large search spaces and complex objective functions. In fact, we have no other options except to rotate the disks, using the analogy with genetic operators.

To solve the problem, we need to select the correct combination of numbers on the code lock. We need to rotate the disks to find the correct code combination that will allow us to open the lock. Unlike genetic algorithms, where by "rotating" the binary code value in the genes the probability of getting a value is 50/50 (either 0 or 1), in the combination lock algorithm we can use probability distributions in the disk rotation strategy. Let's consider a few options.

We can use a Gaussian distribution where the combination parameters are mutated, each parameter changes to a random value selected from a normal (Gaussian) distribution. This allows some random change to be made to the parameters while still maintaining their current value to some extent.

Using a Gaussian distribution for mutation can be useful because it allows control over the degree of parameter changes. The parameters can be changed by small values close to the current ones, which helps to preserve the good properties of the current solution, while the random nature of the mutation allows the algorithm to explore new areas of the search space.

Thus, using a Gaussian distribution for mutation can help balance the exploration of new solutions with maintaining the good properties of current solutions, which will facilitate efficient search for the optimal solution in complex parameter spaces.

We can also use a power law distribution for the mutation process. Unlike the Gaussian distribution, the power law distribution has heavier tails, meaning that large parameter changes are more likely to occur, which can be useful in cases where more radical exploration of the parameter space is needed. A power distribution can help the algorithm "jump out" of local traps.

We can also try to use a third option - uniform distribution, which creates an equal probability of choosing any number on the lock disk.

Now we can move on from the theoretical part to writing code and practical research.

In the agent CLA algorithm representing the problem solution, describe the lock using the S_CLA_Agent structure. Here are its main components:

- f is an agent fitness. It is initialized as -DBL_MAX, which means the smallest possible value for the double type.

- struct S_Lock is a nested structure containing the lock array. This array is used to store the code combination of a single task parameter being optimized.

- S_Lock code [] is the S_Lock structure array. This entire array is a "lock".

- void Init (int coords, int lockDiscs) is an initialization function. It accepts two parameters: coords defines the number of parameter sets, while lockDiscs defines the number of disks in each set. The code array is initialized within the function.

Overall, this structure represents an agent of the CLA optimization algorithm. Each agent has its own fitness and lock combination description, which will be optimized during the execution of the algorithm.

//—————————————————————————————————————————————————————————————————————————————— struct S_CLA_Agent { double f; //fitness struct S_Lock { int lock []; }; S_Lock code []; void Init (int coords, int lockDiscs) { f = -DBL_MAX; ArrayResize (code, coords); for (int i = 0; i < coords; i++) { ArrayResize (code [i].lock, lockDiscs); } } }; //——————————————————————————————————————————————————————————————————————————————

Now let's describe the C_AO_CLA class derived from the C_AO class. Main class components:

- C_AO_CLA () - class constructor that initializes the class object. Such parameters as popSize, lockDiscs, copyProb, rotateProb and params are initialized within the constructor.

- SetParams () sets the algorithm parameters based on the values in the params array.

- Init () is an initialization function, which accepts the search ranges and the number of epochs as parameters.

- Moving () and Revision () are the functions used to execute the main logic in the algorithm.

- lockDiscs, copyProb and rotateProb are the variables used to store the algorithm parameters.

- S_CLA_Agent agent [] is an array of agents. Each agent is an object of the S_CLA_Agent structure.

- maxLockNumber, S_CLA_Agent parents [] and S_CLA_Agent parTemp [] are private variables and arrays used inside the class.

- ArrayToNumber () and LockToDouble () are private methods used to convert a code combination array into a number, as well as a lock into a floating-point number, respectively.

//—————————————————————————————————————————————————————————————————————————————— class C_AO_CLA : public C_AO { public: //-------------------------------------------------------------------- ~C_AO_CLA () { } C_AO_CLA () { ao_name = "CLA"; ao_desc = "Code Lock Algorithm"; ao_link = "https://www.mql5.com/ru/articles/14878"; popSize = 100; //population size lockDiscs = 8; //lock discs copyProb = 0.8; //copying probability rotateProb = 0.03; //rotate disc probability ArrayResize (params, 4); params [0].name = "popSize"; params [0].val = popSize; params [1].name = "lockDiscs"; params [1].val = lockDiscs; params [2].name = "copyProb"; params [2].val = copyProb; params [3].name = "rotateProb"; params [3].val = rotateProb; } void SetParams () { popSize = (int)params [0].val; lockDiscs = (int)params [1].val; copyProb = params [2].val; rotateProb = params [3].val; } bool Init (const double &rangeMinP [], //minimum search range const double &rangeMaxP [], //maximum search range const double &rangeStepP [], //step search const int epochsP = 0); //number of epochs void Moving (); void Revision (); void Injection (const int popPos, const int coordPos, const double value); //---------------------------------------------------------------------------- int lockDiscs; //lock discs double copyProb; //copying probability double rotateProb; //rotate disc probability S_CLA_Agent agent []; private: //------------------------------------------------------------------- int maxLockNumber; //max lock number S_CLA_Agent parents []; S_CLA_Agent parTemp []; int ArrayToNumber (int &arr []); double LockToDouble (int lockNum, int coordPos); }; //——————————————————————————————————————————————————————————————————————————————

Define the Init method in the C_AO_CLA class. This method initializes the algorithm with the given search ranges and number of epochs. Description of each step:

1. if (!StandardInit (rangeMinP, rangeMaxP, rangeStepP)) return false; - calling the StandardInit function woth the specified search ranges. If initialization fails, the function returns 'false'.

2. ArrayResize (agent, popSize); - resizes the 'agent' array to the popSize population size.

3. for (int i = 0; i < popSize; i++) agent [i].Init (coords, lockDiscs); - the loop initializes each agent in the population with the specified number of coords coordinates and lockDiscs lock disks.

4. ArrayResize (parents, popSize * 2); ArrayResize (parTemp, popSize * 2); - resize the parents and parTemp arrays to rwo population sizes.

5. for (int i = 0; i < popSize * 2; i++) { parents [i].Init (coords, lockDiscs); parTemp [i].Init (coords, lockDiscs); } - the loop initializes each parent and temporary parent in the parents and parTemp arrays.

6. maxLockNumber = 0; for (int i = 0; i < lockDiscs; i++) { maxLockNumber += 9 * (int)pow (10, i); } - the loop calculates the maximum number in the maxLockNumber lock combination using the number of lock disks (lockDiscs).

7. return true; - if all previous operations are successful, the function returns 'true'.

The function initializes agents and parents in the CLA code lock optimization algorithm and sets the maximum number of lock combinations based on the number of lock discs.

//—————————————————————————————————————————————————————————————————————————————— bool C_AO_CLA::Init (const double &rangeMinP [], //minimum search range const double &rangeMaxP [], //maximum search range const double &rangeStepP [], //step search const int epochsP = 0) //number of epochs { if (!StandardInit (rangeMinP, rangeMaxP, rangeStepP)) return false; //---------------------------------------------------------------------------- ArrayResize (agent, popSize); for (int i = 0; i < popSize; i++) agent [i].Init (coords, lockDiscs); ArrayResize (parents, popSize * 2); ArrayResize (parTemp, popSize * 2); for (int i = 0; i < popSize * 2; i++) { parents [i].Init (coords, lockDiscs); parTemp [i].Init (coords, lockDiscs); } maxLockNumber = 0; for (int i = 0; i < lockDiscs; i++) { maxLockNumber += 9 * (int)pow (10, i); } return true; } //——————————————————————————————————————————————————————————————————————————————

Selection of digital combinations in locks is described in the Moving method of the C_AO_CLA class. The process is as follows:

- val = 0.0; code = 0; pos = 0; - initializing the variables to be used in the method.

- if (!revision) {...} - the code block is executed if revision is 'false'. Inside this block of code, the agents and parents are initialized with random values.

- Then the code and val values are calculated for each agent and each parent and they are used to update the agent coordinates. After that, revision is set to 'true' and the function is complete.

- for (int i = 0; i < popSize; i++) {...} - the code block is executed if revision is 'true'. The agents are updated inside the code block. If a random number is less than copyProb, the lock code of one of the parents is copied to the agent. Otherwise, the agent lock code is updated with either a random value or the lock code of one of the parents.

- The code and val values are then calculated for each agent. These values are used to update the agent coordinates.

The function is used to update agents in the CLA optimization algorithm.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_CLA::Moving () { double val = 0.0; int code = 0; int pos = 0; //---------------------------------------------------------------------------- if (!revision) { for (int i = 0; i < popSize; i++) { for (int c = 0; c < coords; c++) { for (int l = 0; l < lockDiscs; l++) { agent [i].code [c].lock [l] = u.RNDminusOne (10); } code = ArrayToNumber (agent [i].code [c].lock); val = LockToDouble (code, c); a [i].c [c] = u.SeInDiSp (val, rangeMin [c], rangeMax [c], rangeStep [c]); } } for (int i = 0; i < popSize * 2; i++) { for (int c = 0; c < coords; c++) { for (int l = 0; l < lockDiscs; l++) { parents [i].code [c].lock [l] = u.RNDminusOne (10); } } } revision = true; return; } //---------------------------------------------------------------------------- for (int i = 0; i < popSize; i++) { for (int c = 0; c < coords; c++) { if (u.RNDprobab () < copyProb) { int pos = u.RNDminusOne (popSize); ArrayCopy (agent [i].code [c].lock, parents [pos].code [c].lock, 0, 0, WHOLE_ARRAY); } else { for (int l = 0; l < lockDiscs; l++) { if (u.RNDprobab () < rotateProb) { //pos = u.RNDminusOne (popSize); //agent [i].code [c].lock [l] = (int)round (u.GaussDistribution (agent [i].codePrev [c].lock [l], 0, 9, 8)); //agent [i].code [c].lock [l] = (int)round (u.PowerDistribution (agent [i].codePrev [c].lock [l], 0, 9, 20)); agent [i].code [c].lock [l] = u.RNDminusOne (10); } else { pos = u.RNDminusOne (popSize); agent [i].code [c].lock [l] = parents [pos].code [c].lock [l]; } } } code = ArrayToNumber (agent [i].code [c].lock); val = LockToDouble (code, c); a [i].c [c] = u.SeInDiSp (val, rangeMin [c], rangeMax [c], rangeStep [c]); } } } //——————————————————————————————————————————————————————————————————————————————The Revision method in the C_AO_CLA class in general is meant for updating the global solution. The method does the following:

- ind = -1; - initializtion of the 'ind' variable to be used to track the index of the agent with the best fitness.

- for (int i = 0; i < popSize; i++) {...} - the loop passes through each agent in the population and checks if the agent fitness exceeds the current fB best fitness value. If this is the case, then fB is updated, and ind is set to the current index.

- if (ind != -1) ArrayCopy (cB, a [ind].c, 0, 0, WHOLE_ARRAY); - if the agent with the best fitness was found, its coordinates are copied to cB.

- for (int i = 0; i < popSize; i++) { agent [i].f = a [i].f; } - the loop updates the fitness of each agent based on the current values in the a array.

- for (int i = 0; i < popSize; i++) { parents [i + popSize] = agent [i]; } - the loop copies each agent into the parents array starting from the popSize position, that is the second half of the parent population.

- u.Sorting (parents, parTemp, popSize * 2); - the string sorts the parents array using the parTemp array as a temporary storage.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_CLA::Revision () { //---------------------------------------------------------------------------- int ind = -1; for (int i = 0; i < popSize; i++) { if (a [i].f > fB) { fB = a [i].f; ind = i; } } if (ind != -1) ArrayCopy (cB, a [ind].c, 0, 0, WHOLE_ARRAY); //---------------------------------------------------------------------------- for (int i = 0; i < popSize; i++) { agent [i].f = a [i].f; } for (int i = 0; i < popSize; i++) { parents [i + popSize] = agent [i]; } u.Sorting (parents, parTemp, popSize * 2); } //——————————————————————————————————————————————————————————————————————————————

The ArrayToNumber method converts the characters of a code combination into a number. Method actions:

- result = 0; - initialization of the variable to be used to store the result.

- for (int i = 0; i < ArraySize (arr); i++) {...} - the loop passes through each element of the arr array. For each arr [i] element, it multiplies the current result value by 10 and adds arr [i].

- return result; - the string returns the final result value.

In general, this method converts an array of integers into a single integer by interpreting each element of the array as a digit in the decimal representation of the number.

For example, the values of the arr input array (code combination): |0|3|1|5|7|0|. We need to discard all zeros on the left of the combination and start forming the number from the value of 3. The resulting number is 31570. If all cells in the array contain zeros in the combination, the final number will be 0.

//—————————————————————————————————————————————————————————————————————————————— int C_AO_CLA::ArrayToNumber (int &arr []) { int result = 0; for (int i = 0; i < ArraySize (arr); i++) { result = result * 10 + arr [i]; } return result; } //——————————————————————————————————————————————————————————————————————————————

The LockToDouble method in the C_AO_CLA class converts the number of the code combination into the scale of the optimized parameter. Here is what this function does:

- LockToDouble (int lockNum, int coordPos) - the method takes two parameters: lockNum is the number of the coordPos lock and the coordinate position in the coordinate array (optimized parameter).

- return u.Scale (lockNum, 0, maxLockNumber, rangeMin [coordPos], rangeMax [coordPos]); - the string returns the value obtained by scaling lockNum from the [0, maxLockNumber] range to [rangeMin [coordPos], rangeMax [coordPos]].

In general, this method is used to convert the lock number into a floating point number based on a given range. In other words, the lock numbers are converted into the values of the optimized task parameters.

//—————————————————————————————————————————————————————————————————————————————— double C_AO_CLA::LockToDouble (int lockNum, int coordPos) { return u.Scale (lockNum, 0, maxLockNumber, rangeMin [coordPos], rangeMax [coordPos]); } //——————————————————————————————————————————————————————————————————————————————

3. Test results

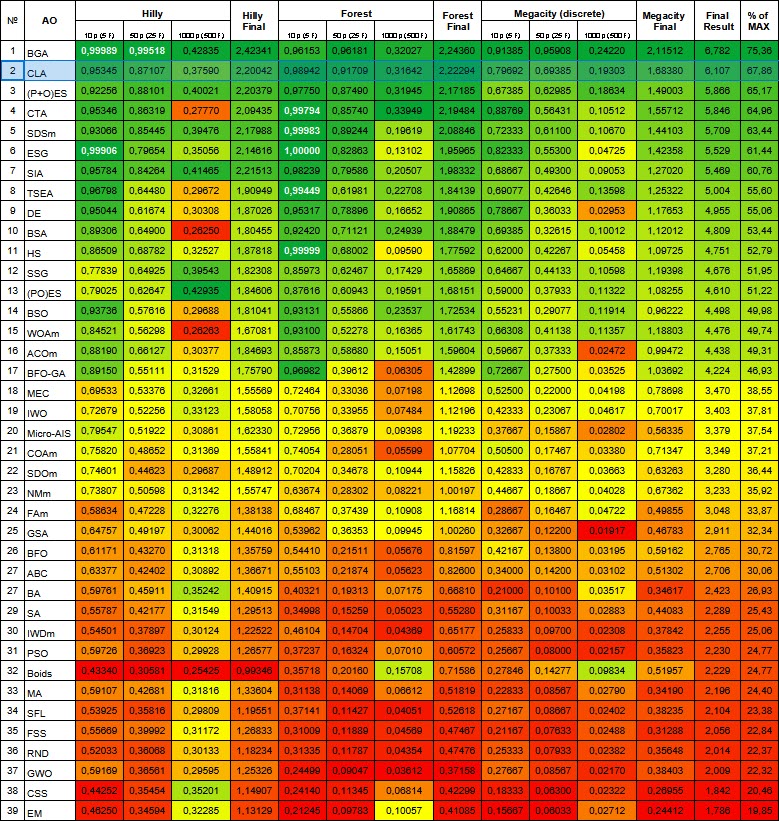

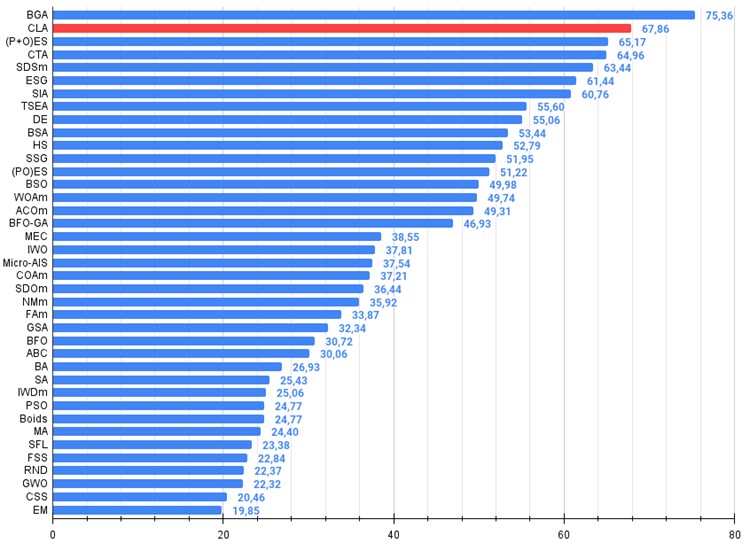

The results of the CLA optimization algorithm on the test functions look excellent. Reaching 67.86% of the maximum possible value is an excellent result. This demonstrates the high performance of the CLA algorithm in optimizing complex functions.

These results show that the CLA algorithm is quite effective and can be successfully applied to various problems where optimization of multidimensional functions is required. Its scalability and ability to handle complex problems make it a promising tool for solving a wide range of optimization problems.

CLA|Code Lock Algorithm|100.0|8.0|0.8|0.03|

=============================

5 Hilly's; Func runs: 10000; result: 0.9534490932631814

25 Hilly's; Func runs: 10000; result: 0.8710742215971827

500 Hilly's; Func runs: 10000; result: 0.375900385878165

=============================

5 Forest's; Func runs: 10000; result: 0.9894215656296362

25 Forest's; Func runs: 10000; result: 0.917091907561472

500 Forest's; Func runs: 10000; result: 0.3164221021938828

=============================

5 Megacity's; Func runs: 10000; result: 0.7969230769230768

25 Megacity's; Func runs: 10000; result: 0.693846153846154

500 Megacity's; Func runs: 10000; result: 0.19303076923077062

=============================

All score: 6.10716 (67.86%)

In the visualization of the algorithm operation on test functions, a spread of results is noticeable on functions of small dimension, however, with an increase in the number of task parameters, the spread of results disappears. In addition, the potential of the algorithm for large-scale problems (1000 parameters) is noticeable from the shape of the convergence curve (red line). The line grows with acceleration. If we remove the limitation on the number of fitness function runs (our test rules), the algorithm will continue to approach the global optimum.

CLA on the Hilly test function

CLA on the Forest test function

CLA on the Megacity test function

The code lock algorithm took second place in the ranking table. In the sharp Forest function with 10 parameters, CLA was even able to outpace the leader of the table - BGA. The color of the result cells for all test functions is green (comparison with all algorithms present in the table), which indicates very uniform performance on different types of tasks, without noticeable failures on individual tests.

| # | AO | Description | Hilly | Hilly final | Forest | Forest final | Megacity (discrete) | Megacity final | Final result | % of MAX | ||||||

| 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | ||||||||

| 1 | BGA | binary genetic algorithm | 0,99989 | 0,99518 | 0,42835 | 2,42341 | 0,96153 | 0,96181 | 0,32027 | 2,24360 | 0,91385 | 0,95908 | 0,24220 | 2,11512 | 6,782 | 75,36 |

| 2 | CLA | code lock algorithm | 0.95345 | 0.87107 | 0.37590 | 2.20042 | 0.98942 | 0.91709 | 0.31642 | 2.22294 | 0.79692 | 0.69385 | 0.19303 | 1.68380 | 6.107 | 67.86 |

| 3 | (P+O)ES | (P+O) evolution strategies | 0.92256 | 0.88101 | 0.40021 | 2.20379 | 0.97750 | 0.87490 | 0.31945 | 2.17185 | 0.67385 | 0.62985 | 0.18634 | 1.49003 | 5.866 | 65.17 |

| 4 | CTA | comet tail algorithm | 0.95346 | 0.86319 | 0.27770 | 2.09435 | 0.99794 | 0.85740 | 0.33949 | 2.19484 | 0.88769 | 0.56431 | 0.10512 | 1.55712 | 5.846 | 64.96 |

| 5 | SDSm | stochastic diffusion search M | 0.93066 | 0.85445 | 0.39476 | 2.17988 | 0.99983 | 0.89244 | 0.19619 | 2.08846 | 0.72333 | 0.61100 | 0.10670 | 1.44103 | 5.709 | 63.44 |

| 6 | ESG | evolution of social groups | 0.99906 | 0.79654 | 0.35056 | 2.14616 | 1.00000 | 0.82863 | 0.13102 | 1.95965 | 0.82333 | 0.55300 | 0.04725 | 1.42358 | 5.529 | 61.44 |

| 7 | SIA | simulated isotropic annealing | 0.95784 | 0.84264 | 0.41465 | 2.21513 | 0.98239 | 0.79586 | 0.20507 | 1.98332 | 0.68667 | 0.49300 | 0.09053 | 1.27020 | 5.469 | 60.76 |

| 8 | TSEA | turtle shell evolution algorithm | 0.96798 | 0.64480 | 0.29672 | 1.90949 | 0.99449 | 0.61981 | 0.22708 | 1.84139 | 0.69077 | 0.42646 | 0.13598 | 1.25322 | 5.004 | 55.60 |

| 9 | DE | differential evolution | 0.95044 | 0.61674 | 0.30308 | 1.87026 | 0.95317 | 0.78896 | 0.16652 | 1.90865 | 0.78667 | 0.36033 | 0.02953 | 1.17653 | 4.955 | 55.06 |

| 10 | BSA | bird swarm algorithm | 0.89306 | 0.64900 | 0.26250 | 1.80455 | 0.92420 | 0.71121 | 0.24939 | 1.88479 | 0.69385 | 0.32615 | 0.10012 | 1.12012 | 4.809 | 53.44 |

| 11 | HS | harmony search | 0.86509 | 0.68782 | 0.32527 | 1.87818 | 0.99999 | 0.68002 | 0.09590 | 1.77592 | 0.62000 | 0.42267 | 0.05458 | 1.09725 | 4.751 | 52.79 |

| 12 | SSG | saplings sowing and growing | 0.77839 | 0.64925 | 0.39543 | 1.82308 | 0.85973 | 0.62467 | 0.17429 | 1.65869 | 0.64667 | 0.44133 | 0.10598 | 1.19398 | 4.676 | 51.95 |

| 13 | (PO)ES | (PO) evolution strategies | 0.79025 | 0.62647 | 0.42935 | 1.84606 | 0.87616 | 0.60943 | 0.19591 | 1.68151 | 0.59000 | 0.37933 | 0.11322 | 1.08255 | 4.610 | 51.22 |

| 14 | BSO | brain storm optimization | 0.93736 | 0.57616 | 0.29688 | 1.81041 | 0.93131 | 0.55866 | 0.23537 | 1.72534 | 0.55231 | 0.29077 | 0.11914 | 0.96222 | 4.498 | 49.98 |

| 15 | WOAm | wale optimization algorithm M | 0.84521 | 0.56298 | 0.26263 | 1.67081 | 0.93100 | 0.52278 | 0.16365 | 1.61743 | 0.66308 | 0.41138 | 0.11357 | 1.18803 | 4.476 | 49.74 |

| 16 | ACOm | ant colony optimization M | 0.88190 | 0.66127 | 0.30377 | 1.84693 | 0.85873 | 0.58680 | 0.15051 | 1.59604 | 0.59667 | 0.37333 | 0.02472 | 0.99472 | 4.438 | 49.31 |

| 17 | BFO-GA | bacterial foraging optimization - ga | 0.89150 | 0.55111 | 0.31529 | 1.75790 | 0.96982 | 0.39612 | 0.06305 | 1.42899 | 0.72667 | 0.27500 | 0.03525 | 1.03692 | 4.224 | 46.93 |

| 18 | MEC | mind evolutionary computation | 0.69533 | 0.53376 | 0.32661 | 1.55569 | 0.72464 | 0.33036 | 0.07198 | 1.12698 | 0.52500 | 0.22000 | 0.04198 | 0.78698 | 3.470 | 38.55 |

| 19 | IWO | invasive weed optimization | 0.72679 | 0.52256 | 0.33123 | 1.58058 | 0.70756 | 0.33955 | 0.07484 | 1.12196 | 0.42333 | 0.23067 | 0.04617 | 0.70017 | 3.403 | 37.81 |

| 20 | Micro-AIS | micro artificial immune system | 0.79547 | 0.51922 | 0.30861 | 1.62330 | 0.72956 | 0.36879 | 0.09398 | 1.19233 | 0.37667 | 0.15867 | 0.02802 | 0.56335 | 3.379 | 37.54 |

| 21 | COAm | cuckoo optimization algorithm M | 0.75820 | 0.48652 | 0.31369 | 1.55841 | 0.74054 | 0.28051 | 0.05599 | 1.07704 | 0.50500 | 0.17467 | 0.03380 | 0.71347 | 3.349 | 37.21 |

| 22 | SDOm | spiral dynamics optimization M | 0.74601 | 0.44623 | 0.29687 | 1.48912 | 0.70204 | 0.34678 | 0.10944 | 1.15826 | 0.42833 | 0.16767 | 0.03663 | 0.63263 | 3.280 | 36.44 |

| 23 | NMm | Nelder-Mead method M | 0.73807 | 0.50598 | 0.31342 | 1.55747 | 0.63674 | 0.28302 | 0.08221 | 1.00197 | 0.44667 | 0.18667 | 0.04028 | 0.67362 | 3.233 | 35.92 |

| 24 | FAm | firefly algorithm M | 0.58634 | 0.47228 | 0.32276 | 1.38138 | 0.68467 | 0.37439 | 0.10908 | 1.16814 | 0.28667 | 0.16467 | 0.04722 | 0.49855 | 3.048 | 33.87 |

| 25 | GSA | gravitational search algorithm | 0.64757 | 0.49197 | 0.30062 | 1.44016 | 0.53962 | 0.36353 | 0.09945 | 1.00260 | 0.32667 | 0.12200 | 0.01917 | 0.46783 | 2.911 | 32.34 |

| 26 | BFO | bacterial foraging optimization | 0.61171 | 0.43270 | 0.31318 | 1.35759 | 0.54410 | 0.21511 | 0.05676 | 0.81597 | 0.42167 | 0.13800 | 0.03195 | 0.59162 | 2.765 | 30.72 |

| 27 | ABC | artificial bee colony | 0.63377 | 0.42402 | 0.30892 | 1.36671 | 0.55103 | 0.21874 | 0.05623 | 0.82600 | 0.34000 | 0.14200 | 0.03102 | 0.51302 | 2.706 | 30.06 |

| 28 | BA | bat algorithm | 0.59761 | 0.45911 | 0.35242 | 1.40915 | 0.40321 | 0.19313 | 0.07175 | 0.66810 | 0.21000 | 0.10100 | 0.03517 | 0.34617 | 2.423 | 26.93 |

| 29 | SA | simulated annealing | 0.55787 | 0.42177 | 0.31549 | 1.29513 | 0.34998 | 0.15259 | 0.05023 | 0.55280 | 0.31167 | 0.10033 | 0.02883 | 0.44083 | 2.289 | 25.43 |

| 30 | IWDm | intelligent water drops M | 0.54501 | 0.37897 | 0.30124 | 1.22522 | 0.46104 | 0.14704 | 0.04369 | 0.65177 | 0.25833 | 0.09700 | 0.02308 | 0.37842 | 2.255 | 25.06 |

| 31 | PSO | particle swarm optimisation | 0.59726 | 0.36923 | 0.29928 | 1.26577 | 0.37237 | 0.16324 | 0.07010 | 0.60572 | 0.25667 | 0.08000 | 0.02157 | 0.35823 | 2.230 | 24.77 |

| 32 | Boids | boids algorithm | 0.43340 | 0.30581 | 0.25425 | 0.99346 | 0.35718 | 0.20160 | 0.15708 | 0.71586 | 0.27846 | 0.14277 | 0.09834 | 0.51957 | 2.229 | 24.77 |

| 33 | MA | monkey algorithm | 0.59107 | 0.42681 | 0.31816 | 1.33604 | 0.31138 | 0.14069 | 0.06612 | 0.51819 | 0.22833 | 0.08567 | 0.02790 | 0.34190 | 2.196 | 24.40 |

| 34 | SFL | shuffled frog-leaping | 0.53925 | 0.35816 | 0.29809 | 1.19551 | 0.37141 | 0.11427 | 0.04051 | 0.52618 | 0.27167 | 0.08667 | 0.02402 | 0.38235 | 2.104 | 23.38 |

| 35 | FSS | fish school search | 0.55669 | 0.39992 | 0.31172 | 1.26833 | 0.31009 | 0.11889 | 0.04569 | 0.47467 | 0.21167 | 0.07633 | 0.02488 | 0.31288 | 2.056 | 22.84 |

| 36 | RND | random | 0.52033 | 0.36068 | 0.30133 | 1.18234 | 0.31335 | 0.11787 | 0.04354 | 0.47476 | 0.25333 | 0.07933 | 0.02382 | 0.35648 | 2.014 | 22.37 |

| 37 | GWO | grey wolf optimizer | 0.59169 | 0.36561 | 0.29595 | 1.25326 | 0.24499 | 0.09047 | 0.03612 | 0.37158 | 0.27667 | 0.08567 | 0.02170 | 0.38403 | 2.009 | 22.32 |

| 38 | CSS | charged system search | 0.44252 | 0.35454 | 0.35201 | 1.14907 | 0.24140 | 0.11345 | 0.06814 | 0.42299 | 0.18333 | 0.06300 | 0.02322 | 0.26955 | 1.842 | 20.46 |

| 39 | EM | electroMagnetism-like algorithm | 0.46250 | 0.34594 | 0.32285 | 1.13129 | 0.21245 | 0.09783 | 0.10057 | 0.41085 | 0.15667 | 0.06033 | 0.02712 | 0.24412 | 1.786 | 19.85 |

Summary

The binary genetic algorithm (BGA) played a certain role in the idea of creating the CLA algorithm. My goal was to develop a faster algorithm. I came up with the idea of replacing binary coding with decimal one. The code lock mechanism served as the prototype for creating the algorithm. This turned out to be a successful solution. CLA operates faster than BGA, while successfully competing with it in terms of efficiency. In addition, decimal encoding allows the use of different types of distributions when generating a numerical combination, which can be useful for some tasks (in the code, the power and normal distributions are commented out and can be used if necessary). Experiments have shown that using a simple uniform distribution turned out to be more effective in this case.

To summarize all of the above, the CLA combination lock algorithm showed excellent results, stability and efficiency on all test functions. This indicates the high scalability of the algorithm and its ability to cope with complex problems, as well as highlights the flexibility and promising nature of the CLA algorithm in various optimization scenarios.

Figure 2. Color gradation of algorithms according to relevant tests Results greater than or equal to 0.99 are highlighted in white

Figure 3. The histogram of algorithm test results (on a scale from 0 to 100, the more the better,

where 100 is the maximum possible theoretical result, the archive features a script for calculating the rating table)

CLA pros and cons:

Advantages:

- Excellent convergence on various types of functions.

- Simple implementation.

Disadvantages:

- Scatter of results on low-dimensional functions.

The article is accompanied by an archive with the current versions of the algorithm codes. The author of the article is not responsible for the absolute accuracy in the description of canonical algorithms. Changes have been made to many of them to improve search capabilities. The conclusions and judgments presented in the articles are based on the results of the experiments.

Translated from Russian by MetaQuotes Ltd.

Original article: https://www.mql5.com/ru/articles/14878

Features of Custom Indicators Creation

Features of Custom Indicators Creation

From Novice to Expert: Collaborative Debugging in MQL5

From Novice to Expert: Collaborative Debugging in MQL5

Features of Experts Advisors

Features of Experts Advisors

MQL5 Wizard Techniques you should know (Part 41): Deep-Q-Networks

MQL5 Wizard Techniques you should know (Part 41): Deep-Q-Networks

- Free trading apps

- Over 8,000 signals for copying

- Economic news for exploring financial markets

You agree to website policy and terms of use

Do all AOs make the same number of FF calculations?

Probably it would be useful to compare AOs by the average number of FF calculations when the optimum is reached.

This number is the speed of optimisation.

Do all AOs do the same number of FF calculations?

Perhaps it would be useful to compare AOs in terms of average number of FF calculations when the optimum is reached.

This number is the speed of optimisation.

Yes, all AOs perform the same number of FF calculations in tests - 10000. Different AOs have different populations, but here the number of epochs is simply changed: 10000 / population_size = number_epochs.

An interesting suggestion is to compare by the number of FF runs before reaching the maximum value that the algorithm was able to reach. However, in this case there is an unclear point: an algorithm stuck at the very beginning of optimisation on low FF values will show a high result on such a test....

an algorithm stuck at the very beginning of optimisation at low FF values will show a high result on such a test...

That's why I was talking about the average. Or the worst.

That's why I was talking about the average. Or the worst.