Ordinal Encoding for Nominal Variables

Introduction

When working with categorical data in machine learning, it is common to encounter nominal variables. Although these variables can be valuable sources of information for modeling, many machine learning algorithms—especially those that operate exclusively on numerical data—cannot process them directly. To address this, we often convert nominal variables into ordinal variables. In this article, we delve into the complexities of converting nominal variables into ordinal variables. We will explore the rationale behind such conversions, discuss various techniques for assigning ordinal values, and highlight the potential benefits and drawbacks of each approach. Additionally, we will demonstrate these methods primarily using Python code, while also implementing two versatile transformation methods in pure MQL5.

Understanding Nominal and Ordinal Variables

Nominal variables represent categorical data where no inherent order or ranking exists between the categories. Examples specific to financial time series datasets might include:

- Price bar types (e.g., pin bar, spinning top, hammer)

- Days of the week (e.g., Monday, Tuesday, Wednesday)

These variables are purely qualitative, meaning there is no implied hierarchy or sequence among the categories. For instance, a pin bar formation is not inherently superior to a spinning top, nor is a bullish bar better than a bearish bar.

In numerical computing, it is common practice to assign arbitrary integers to distinct categories. However, if these integers are used as inputs to a machine learning algorithm, there is a risk that the assigned values may distort the information conveyed by the original data. The algorithm might incorrectly infer that larger values imply a certain relationship or ranking, even if none was intended.
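
To make this risk concrete, the sketch below uses hypothetical toy data (the category names and return values are invented for illustration, not taken from the BTCUSD dataset). It codes the same three categories with two equally arbitrary integer assignments and compares how strongly each coding correlates with the target.

```python
import numpy as np

# Hypothetical toy data: three bar types with no natural order.
bar_types = np.array(["pin", "hammer", "top", "pin", "hammer", "top"])
returns = np.array([0.01, 0.03, 0.02, 0.01, 0.03, 0.02])

# Two equally arbitrary integer assignments for the same categories.
coding_a = {"pin": 0, "top": 1, "hammer": 2}
coding_b = {"pin": 0, "hammer": 1, "top": 2}

def correlation(coding):
    """Pearson correlation between the integer codes and the returns."""
    codes = np.array([coding[b] for b in bar_types], dtype=float)
    return float(np.corrcoef(codes, returns)[0, 1])

corr_a = correlation(coding_a)
corr_b = correlation(coding_b)
print(f"coding A: {corr_a:.2f}, coding B: {corr_b:.2f}")
```

The data are identical in both cases; only the arbitrary labels differ, yet a linear model would see a different relationship under each coding.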

On the other hand, ordinal variables are categorical data with an inherent order or ranking between categories. Examples include:

- Trend intensity (e.g., strong trend, mild trend, weak trend)

- Volatility (e.g., high volatility, low volatility)

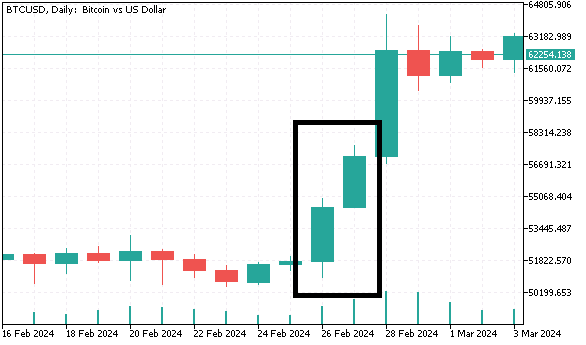

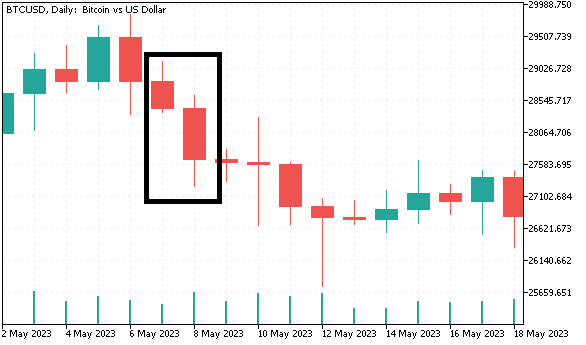

Understanding this distinction makes it clear why simply assigning integers to nominal categories may not always be appropriate. To gain a better understanding, we will create a dataset containing categorical variables that will be converted to ordinal format using different methods in the upcoming sections. We will collect daily bar data (open, high, low, close) for Bitcoin and generate nominal variables that will be used to predict next-day returns. The first nominal variable classifies bars as either bullish or bearish. The second nominal variable contains four distinct categories and groups bars based on the ratio of a candlestick's body size to its full size. For the last nominal variable, three categories are created:

- When both the current and previous bars are bullish, and the current bar's low and high are above the previous bar's low and high, we designate the current bar as a "higher high."

- The opposite scenario is labeled as a "lower low."

- Any other two-bar pattern falls into the third category.

The Python code that generates this dataset is provided below.

    # Copyright 2024, MetaQuotes Ltd.
    # https://www.mql5.com
    # imports
    from datetime import datetime
    import MetaTrader5 as mt5
    import pandas as pd
    import numpy as np
    import pytz
    import os
    from category_encoders import OrdinalEncoder, OneHotEncoder, BinaryEncoder,TargetEncoder, CountEncoder, HashingEncoder, LeaveOneOutEncoder,JamesSteinEncoder

    if not mt5.initialize():
        print("initialize() failed ")
        mt5.shutdown()
        exit()

    #set up timezone information
    tz=pytz.timezone("Etc/UTC")
    #use time zone to set correct date for history data extraction
    startdate = datetime(2023,12,31,hour=23,minute=59,second=59,tzinfo=tz)
    stopdate = datetime(2017,12,31,hour=23,minute=59,second=59,tzinfo=tz)
    #list the symbol
    symbol = "BTCUSD"
    #get price history
    prices = pd.DataFrame(mt5.copy_rates_range(symbol,mt5.TIMEFRAME_D1,stopdate,startdate))
    if len(prices) < 1:
        print(" Error downloading rates history ")
        mt5.shutdown()
        exit()
    #shutdown mt5 tether
    mt5.shutdown()
    #drop unnecessary columns
    prices.drop(labels=["time","tick_volume","spread","real_volume"],axis=1,inplace=True)
    #initialize categorical features
    #remaining columns by position: 0=open, 1=high, 2=low, 3=close, 4=bar_type, 5=body_type, 6=bar_pattern
    prices["bar_type"] = np.where(prices["close"]>=prices["open"],"bullish","bearish")
    prices["body_type"] = np.empty((len(prices),),dtype='str')
    prices["bar_pattern"] = np.empty((len(prices),),dtype='str')
    #set feature values
    for i in np.arange(len(prices)):
        bodyratio = np.abs(prices.iloc[i,3]-prices.iloc[i,0])/np.abs(prices.iloc[i,1]-prices.iloc[i,2])
        if bodyratio >= 0.75:
            prices.iloc[i,5] = ">=0.75"
        elif bodyratio < 0.75 and bodyratio >= 0.5:
            prices.iloc[i,5]=">=0.5<0.75"
        elif bodyratio < 0.5 and bodyratio >= 0.25:
            prices.iloc[i,5]=">=0.25<0.5"
        else:
            prices.iloc[i,5]="<0.25"
        #the first bar has no predecessor, so its pattern is left undefined
        if i < 1:
            prices.iloc[i,6] = None
            continue
        if(prices.iloc[i,4]=="bullish" and prices.iloc[i-1,4]=="bullish") and (prices.iloc[i,1]>prices.iloc[i-1,1]) and (prices.iloc[i,2]>prices.iloc[i-1,2]):
            prices.iloc[i,6] = "higherHigh"
        elif(prices.iloc[i,4]=="bearish" and prices.iloc[i-1,4]=="bearish") and (prices.iloc[i,2]<prices.iloc[i-1,2]) and (prices.iloc[i,1]<prices.iloc[i-1,1]):
            prices.iloc[i,6] = "lowerLow"
        else :
            prices.iloc[i,6] = "flat"
    #calculate target
    look_ahead = 1
    prices["target"] = np.log(prices["close"])
    prices["target"] = prices["target"].diff(look_ahead)
    prices["target"] = prices["target"].shift(-look_ahead)
    #drop rows with NA values
    prices.dropna(axis=0,inplace=True,ignore_index=True)
    print("Full feature matrix \n",prices.head())

Note that in Python, the categories are assigned literal names, whereas in the MQL5 code listing that follows, integers are used to distinguish between the categories.

    //get relative shift of is and oos sets
    int trainstart,trainstop;
    trainstart=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStartDate);
    trainstop=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStopDate);
    //check for errors from ibarshift calls
    if(trainstart<0 || trainstop<0)
    {
       Print(ErrorDescription(GetLastError()));
       return;
    }
    //---set the size of the sample sets
    size_insample=(trainstop - trainstart) + 1;
    //---check for input errors
    if(size_insample<=0)
    {
       Print("Invalid inputs ");
       return;
    }
    //---
    if(!predictors.Resize(size_insample,3))
    {
       Print("ArrayResize error ",ErrorDescription(GetLastError()));
       return;
    }
    //---
    if(!prices.CopyRates(SetSymbol,tf,COPY_RATES_VERTICAL|COPY_RATES_OHLC,TrainingSampleStartDate,TrainingSampleStopDate))
    {
       Print("Copyrates error ",ErrorDescription(GetLastError()));
       return;
    }
    //---
    targets = log(prices.Col(3));
    targets = np::diff(targets);
    //---
    double bodyratio = 0.0;
    for(ulong i = 0; i<prices.Rows(); i++)
    {
       //bar type: 0 = bearish, 1 = bullish
       if(prices[i][3]<prices[i][0])
          predictors[i][0] = 0.0;
       else
          predictors[i][0] = 1.0;
       //body type: 0..3 by body-to-range ratio
       bodyratio = MathAbs(prices[i][3]-prices[i][0])/MathAbs(prices[i][1]-prices[i][2]);
       if(bodyratio >=0.75)
          predictors[i][1] = 0.0;
       else if(bodyratio<0.75 && bodyratio>=0.5)
          predictors[i][1] = 1.0;
       else if(bodyratio<0.5 && bodyratio>=0.25)
          predictors[i][1] = 2.0;
       else
          predictors[i][1] = 3.0;
       if(i<1)
       {
          predictors[i][2] = 0.0;
          continue;
       }
       //bar pattern: 2 = higher high, 1 = lower low, 0 = anything else
       if(predictors[i][0]==1.0 && predictors[i-1][0]==1.0 && prices[i][1]>prices[i-1][1] && prices[i][2]>prices[i-1][2])
          predictors[i][2] = 2.0;
       //lower low: both the low and the high are below the previous bar's, matching the Python listing
       else if(predictors[i][0]==0.0 && predictors[i-1][0]==0.0 && prices[i][2]<prices[i-1][2] && prices[i][1]<prices[i-1][1])
          predictors[i][2] = 1.0;
       else
          predictors[i][2] = 0.0;
    }
    targets = np::sliceVector(targets,1);
    prices = np::sliceMatrixRows(prices,1,predictors.Rows()-1);
    predictors = np::sliceMatrixRows(predictors,1,predictors.Rows()-1);
    matrix fullFeatureMatrix(predictors.Rows(),predictors.Cols()+prices.Cols());
    if(!np::matrixCopyCols(fullFeatureMatrix,prices,0,prices.Cols()) || !np::matrixCopyCols(fullFeatureMatrix,predictors,prices.Cols()))
    {
       Print("Failed to merge matrices");
       return;
    }

A snippet of the dataset is given below.

Why convert nominal variables to ordinal

Some machine learning algorithms, such as decision trees, can handle nominal data directly. However, others, including linear models such as logistic regression as well as neural networks, require numerical inputs. Converting nominal variables to ordinal variables can make them interpretable by these models, allowing algorithms to learn from the data more effectively. While ordinal variables represent categories, they also provide a clear progression or ranking, giving algorithms more context to understand relationships. When a nominal variable shares substantial information with the target variable, it can often be advantageous to elevate its level of measurement. If the nominal variable has meaningful numeric values, these can be directly used as inputs to the model. However, even when the values lack intrinsic numerical meaning, we can often assign ordinal values based on their relationship to the target variable.

By elevating a nominal variable to an ordinal scale, we introduce a sense of order or ranking among the categories. This can enhance the model’s ability to capture underlying patterns and relationships between the variable and the target. While it is theoretically possible to elevate the nominal variable to the same level of measurement as the target variable, in practice, converting it to an ordinal scale is often sufficient. This approach strikes a balance between preserving the variable’s information content and minimizing noise. In the following sections, we explore common techniques for converting nominal variables into ordinal forms, along with key considerations to maintain the integrity of the data.

Nominal variable conversion techniques

We begin with the simplest method: ordinal encoding. In this method, we simply assign an integer value to each category. As mentioned earlier, this imposes a ranking on the categories. If the practitioner is familiar with the data and knows in advance how the categories relate to the target variable, this method should suffice. However, ordinal encoding should not be used in unsupervised learning, as it can easily introduce biases by implying an order where none exists.
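
When the order is known in advance, it can be stated explicitly rather than left to the encoder. The following is a minimal sketch using plain pandas. The short series is hypothetical, it reuses the body_type labels from the dataset script, and the chosen order (smallest body ratio first) is an assumption made for illustration.

```python
import pandas as pd

# Hypothetical sample of body_type labels (same label strings as the dataset script).
body_type = pd.Series(["<0.25", ">=0.75", ">=0.5<0.75", ">=0.25<0.5", "<0.25"])

# Assumed ranking: smallest body ratio first.
order = ["<0.25", ">=0.25<0.5", ">=0.5<0.75", ">=0.75"]

# Each label is replaced by its position in the chosen order.
ordinal_codes = pd.Categorical(body_type, categories=order, ordered=True).codes
print(list(ordinal_codes))
```

A label that is missing from `order` receives the code -1, which is a convenient way to spot categories the practitioner forgot to rank.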

To convert our dataset of nominal variables in Python, we will use the category_encoders package. This package provides a broad range of categorical transformation implementations, making it suitable for most tasks. Readers can find more information on the project’s GitHub repository.

Converting variables to ordinal numeric format requires the OrdinalEncoder object.

    #Ordinal encoding
    ord_encoder = OrdinalEncoder(cols = ["bar_type","body_type","bar_pattern"])
    ordinal_data = ord_encoder.fit_transform(prices)
    print(" ordinal encoding\n ", ordinal_data.head())

The transformed data:

Appropriate conversion techniques for unsupervised learning algorithms include binary encoding, one-hot encoding, and frequency encoding. One-hot encoding transforms each category into a binary column, where the presence of a category is marked with a 1, and its absence with a 0. The main drawback of this method is that it significantly increases the number of input variables, because a new variable is created for each category of a categorical variable. For example, one-hot encoding the months of the year replaces a single column with 12 binary columns, a net increase of 11 input variables.
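
The column growth is easy to see with a small example. The sketch below uses pandas' `get_dummies` on a hypothetical month series. It illustrates the idea only, and the column naming differs from what category_encoders produces.

```python
import pandas as pd

# Hypothetical nominal variable with 12 categories.
months = pd.Series(["Jan", "Feb", "Mar", "Apr", "May", "Jun",
                    "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"], name="month")

# One indicator column per category; dtype=int yields 0/1 instead of booleans.
one_hot = pd.get_dummies(months, prefix="month", dtype=int)

print(one_hot.shape)                # (12, 12): one column per month
print(one_hot.sum(axis=1).unique()) # every row contains exactly one 1
```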

The 'OneHotEncoder' object handles One-Hot encoding in the category_encoders package.

    #One-Hot encoding
    onehot_encoder = OneHotEncoder(cols = ["bar_type","body_type","bar_pattern"])
    onehot_data = onehot_encoder.fit_transform(prices)
    print(" one-hot encoding\n ", onehot_data.head())

The transformed data:

Binary encoding is a more efficient alternative, especially when dealing with numerous categories. In this method, each category is first converted to a unique integer, and then the integer is represented as a binary number. This binary representation is spread across multiple columns, which typically results in fewer columns compared to one-hot encoding. For example, to encode 12 months, only 4 binary columns would be needed. Binary encoding works well in scenarios where the categorical variable has many unique categories, and you want to limit the number of input variables.
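
The two steps described above can be sketched with NumPy and pandas. This is an illustration under stated assumptions: integer codes start at 1, bits are written most significant first, and the column names are invented. The layout produced by category_encoders may differ.

```python
import numpy as np
import pandas as pd

# Hypothetical nominal variable with 12 categories.
months = pd.Series(["Jan", "Feb", "Mar", "Apr", "May", "Jun",
                    "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"])

# Step 1: give every category a unique integer (1..12; starting at 1 is an assumption).
codes = pd.factorize(months)[0] + 1

# Step 2: write each integer in binary, one bit per column, most significant bit first.
n_bits = int(codes.max()).bit_length()
shifts = np.arange(n_bits - 1, -1, -1)
bits = (codes[:, None] >> shifts) & 1

binary = pd.DataFrame(bits, columns=[f"month_{i}" for i in range(n_bits)])
print(binary.shape)  # (12, 4): four columns instead of twelve
```

Every category still maps to a distinct row pattern, but individual columns no longer correspond to a single category, which makes the encoded features harder to interpret than one-hot columns.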

The snippet below applies binary encoding to our BTCUSD dataset.

    #Binary encoding
    binary_encoder = BinaryEncoder(cols = ["bar_type","body_type","bar_pattern"])
    binary_data = binary_encoder.fit_transform(prices)
    print(" binary encoding\n ", binary_data.head())

The transformed data:

Frequency encoding transforms a categorical variable by replacing each category with the frequency of its occurrence in the dataset. Instead of creating multiple columns, each category is replaced by the proportion or count of how often it appears. This approach is useful when there is a meaningful relationship between the frequency of a category and the target variable, as it preserves valuable information in a more compact form. However, it may introduce bias if certain categories dominate the dataset, and categories that occur equally often become indistinguishable after encoding. It is often used as a first step in a more complex feature engineering pipeline, particularly in unsupervised learning scenarios.
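
A minimal pandas sketch of both variants (counts and proportions) follows. The short series is hypothetical and only reuses the bar_pattern labels from the dataset script.

```python
import pandas as pd

# Hypothetical sample of bar_pattern labels.
bar_pattern = pd.Series(["flat", "flat", "higherHigh", "flat", "lowerLow", "higherHigh"])

# Replace each label by how often it occurs (count variant).
counts = bar_pattern.map(bar_pattern.value_counts())

# Replace each label by its share of all observations (proportion variant).
proportions = bar_pattern.map(bar_pattern.value_counts(normalize=True))

print(counts.tolist())
print(proportions.round(3).tolist())
```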

Here we use the 'CountEncoder' object.

    #Frequency encoding
    freq_encoder = CountEncoder(cols = ["bar_type","body_type","bar_pattern"])
    freq_data = freq_encoder.fit_transform(prices)
    print(" frequency encoding\n ", freq_data.head())

The transformed data:

Binary, one-hot, and frequency encoding are versatile techniques that can be applied to most categorical data without the risk of introducing unwarranted side effects that might affect the learning outcome. These transformations share the important characteristic of being independent of the target variable.

However, in some cases, a machine learning algorithm may benefit from transformations that reflect a variable's association with the target. These methods leverage the target variable to convert categorical data into numerical values that impart some level of associativity, potentially enhancing the model's predictive power.

One such method is target encoding, also known as mean encoding. This approach replaces each category with the mean of the target variable for that category. For instance, if we are predicting whether a stock closes higher (a binary target), we could replace each category of a nominal variable, such as a trading volume range, with the proportion of observations in that category that closed higher. Target encoding can be particularly powerful for high-cardinality categorical variables, as it consolidates useful information without increasing the dimensionality of the dataset. This technique helps capture relationships between the categorical variable and the target, making it effective for supervised learning tasks. It is most effective when categories are strongly associated with the target, but it must be paired with overfitting mitigation procedures, such as smoothing or cross-validation, to ensure better generalization.
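
In its plainest form, target encoding is a group-by followed by a lookup. The sketch below shows that unsmoothed form on hypothetical toy data. The TargetEncoder in category_encoders additionally applies smoothing, so its output will generally differ from these raw category means.

```python
import pandas as pd

# Hypothetical toy data: a nominal feature and a continuous target.
df = pd.DataFrame({
    "bar_type": ["bullish", "bearish", "bullish", "bearish", "bullish"],
    "target":   [0.02, -0.01, 0.04, -0.03, 0.00],
})

# Mean target per category, learned from the training data only.
category_means = df.groupby("bar_type")["target"].mean()

# Replace each label by the mean target of its category.
df["bar_type_encoded"] = df["bar_type"].map(category_means)
print(df)
```

Because every row's own target contributes to the value it is encoded with, the raw form leaks target information into the feature, which is why the mitigation procedures mentioned above matter.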

    #Target encoding
    target_encoder = TargetEncoder(cols = ["bar_type","body_type","bar_pattern"])
    target_data = target_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"])
    print(" target encoding\n ", target_data.head())

The transformed data:

Another target-dependent method is leave-one-out encoding. It works similarly to target encoding but adjusts the encoding by excluding the current row’s target value when calculating the mean for that category. This helps reduce overfitting, especially when the dataset is small or when certain categories are overrepresented. Leave-one-out encoding ensures that the encoded value of a training row does not depend on that row's own target, thereby maintaining the integrity of the learning process.
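
For a training row with target y in a category that has n rows and target sum S, the leave-one-out value is (S - y) / (n - 1). The sketch below applies this to hypothetical toy data. The fallback to the global mean for categories with a single row is an assumption made here for illustration, not necessarily what category_encoders does.

```python
import pandas as pd

# Hypothetical toy data: a nominal feature and a continuous target.
df = pd.DataFrame({
    "bar_type": ["bullish", "bearish", "bullish", "bearish", "bullish"],
    "target":   [0.02, -0.01, 0.04, -0.03, 0.00],
})

grouped = df.groupby("bar_type")["target"]
cat_sum = grouped.transform("sum")      # S, repeated on every row of the category
cat_count = grouped.transform("count")  # n, repeated on every row of the category

# Category mean with the current row's own target removed.
loo = (cat_sum - df["target"]) / (cat_count - 1)

# A category seen only once has no other rows; fall back to the global mean (assumption).
df["bar_type_loo"] = loo.where(cat_count > 1, df["target"].mean())
print(df)
```

Note that rows sharing a category now receive different encoded values, since each one excludes a different target.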

    #LeaveOneOut encoding
    oneout_encoder = LeaveOneOutEncoder(cols = ["bar_type","body_type","bar_pattern"])
    oneout_data = oneout_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"])
    print(" LeaveOneOut encoding\n ", oneout_data.head())

The transformed data:

James-Stein encoding is a shrinkage-based approach, often given an empirical Bayes interpretation, that pulls the estimate of a category’s target mean toward the overall mean, depending on how much reliable data is available for each category. This technique is particularly useful when some categories have few observations, where plain target encoding or leave-one-out encoding may overfit, especially in small datasets or when the category distribution is severely uneven. By adjusting the category means based on the overall mean, James-Stein encoding mitigates the risk of extreme values unduly influencing the model. This results in more stable and robust estimates, making it an effective alternative when dealing with sparse data or categories with few observations.
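
The shrinkage idea can be sketched as a weighted average of the category mean and the global mean. The weight used below, B = var(category) / (var(category) + var(all)), is a simplified rule chosen for illustration; the toy data and column names are hypothetical, and the exact formula used by JamesSteinEncoder may differ.

```python
import pandas as pd

# Hypothetical toy data: category "a" is tightly clustered, category "b" is noisy.
df = pd.DataFrame({
    "body_type": ["a", "a", "a", "b", "b", "b"],
    "target":    [0.010, 0.012, 0.011, -0.050, 0.060, 0.020],
})

global_mean = df["target"].mean()
global_var = df["target"].var()

# Per-category mean and sample variance of the target.
stats = df.groupby("body_type")["target"].agg(["mean", "var"])

# Shrinkage weight (illustrative assumption): noisier categories lean more on the global mean.
stats["B"] = stats["var"] / (stats["var"] + global_var)
stats["encoded"] = (1 - stats["B"]) * stats["mean"] + stats["B"] * global_mean

df["body_type_encoded"] = df["body_type"].map(stats["encoded"])
print(stats)
```

With this rule the weight depends on the variance within a category rather than directly on its row count, so a real implementation would also need a policy for categories with a single observation, whose sample variance is undefined.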

    #James Stein encoding
    james_encoder = JamesSteinEncoder(cols = ["bar_type","body_type","bar_pattern"])
    james_data = james_encoder.fit_transform(prices[["open","high","low","close","bar_type","body_type","bar_pattern"]], prices["target"])
    print(" James Stein encoding\n ", james_data.head())

Transformed data:

The category_encoders library offers a wide variety of encoding techniques tailored to different types of categorical data and machine learning tasks. The appropriate encoding method depends on the nature of the data, the machine learning algorithm being used, and the specific requirements of the task at hand. In summary, one-hot encoding is a versatile method suitable for many use cases, particularly when dealing with nominal variables. Target encoding, leave-one-out encoding, and James-Stein encoding should be employed when there is a need to emphasize the relationship between a variable and the target. Finally, binary encoding and hash encoding are useful techniques when the goal is to reduce dimensionality while still retaining meaningful information.

Nominal to ordinal conversion in MQL5

In this section, we implement two nominal variable encoding methods in MQL5, both of which are encapsulated in classes declared in the header file nom2ord.mqh. The class COneHotEncoder implements one-hot encoding for datasets and features two primary methods that users should be familiar with.

    //+------------------------------------------------------------------+
    //| one hot encoder class                                            |
    //+------------------------------------------------------------------+
    class COneHotEncoder
    {
    private:
       vector            m_mapping[];
       ulong             m_cat_cols[];
       ulong             m_vars,m_cols;
    public:
       //+------------------------------------------------------------------+
       //| Constructor                                                      |
       //+------------------------------------------------------------------+
       COneHotEncoder(void)
       {
       }
       //+------------------------------------------------------------------+
       //| Destructor                                                       |
       //+------------------------------------------------------------------+
       ~COneHotEncoder(void)
       {
          ArrayFree(m_mapping);
       }
       //+------------------------------------------------------------------+
       //| map categorical features of a training dataset                   |
       //+------------------------------------------------------------------+
       bool fit(matrix &in_data,ulong &cols[])
       {
          m_cols = in_data.Cols();
          matrix data = np::selectMatrixCols(in_data,cols);
          if(data.Cols()!=ulong(cols.Size()))
          {
             Print(__FUNCTION__, " invalid input data ");
             return false;
          }
          m_vars = ulong(cols.Size());
          if(ArrayCopy(m_cat_cols,cols)<=0 || !ArraySort(m_cat_cols))
          {
             Print(__FUNCTION__, " ArrayCopy or ArraySort failure ", GetLastError());
             return false;
          }
          if(ArrayResize(m_mapping,int(m_vars))<0)
          {
             Print(__FUNCTION__, " Vector array resize failure ", GetLastError());
             return false;
          }
          //store the unique category values of every selected column
          for(ulong i = 0; i<m_vars; i++)
          {
             vector unique = data.Col(i);
             m_mapping[i] = np::unique(unique);
          }
          return true;
       }
       //+------------------------------------------------------------------+
       //| Transform arbitrary feature matrix to learned category m_mapping |
       //+------------------------------------------------------------------+
       matrix transform(matrix &in_data)
       {
          if(in_data.Cols()!=m_cols)
          {
             Print(__FUNCTION__," Column dimension of input not equal to ", m_cols);
             return matrix::Zeros(1,1);
          }
          matrix out,input_copy;
          matrix data = np::selectMatrixCols(in_data,m_cat_cols);
          if(data.Cols()!=ulong(m_cat_cols.Size()))
          {
             Print(__FUNCTION__, " invalid input data ");
             return matrix::Zeros(1,1);
          }
          //collect the indices of the columns that are not categorical
          ulong unchanged_feature_cols[];
          for(ulong i = 0; i<in_data.Cols(); i++)
          {
             int found = ArrayBsearch(m_cat_cols,i);
             if(m_cat_cols[found]!=i)
             {
                if(!unchanged_feature_cols.Push(i))
                {
                   Print(__FUNCTION__, " Failed array insertion ", GetLastError());
                   return matrix::Zeros(1,1);
                }
             }
          }
          input_copy = unchanged_feature_cols.Size()?np::selectMatrixCols(in_data,unchanged_feature_cols):input_copy;
          //cumsum[i] holds the first output column of categorical variable i
          ulong numcols = 0;
          vector cumsum = vector::Zeros(ulong(MathMin(m_vars,data.Cols())));
          for(ulong i = 0; i<cumsum.Size(); i++)
          {
             cumsum[i] = double(numcols);
             numcols+=m_mapping[i].Size();
          }
          out = matrix::Zeros(data.Rows(),numcols);
          //set the indicator column that matches each observed category
          for(ulong i = 0;i<data.Rows(); i++)
          {
             vector row = data.Row(i);
             for(ulong col = 0; col<row.Size(); col++)
             {
                for(ulong k = 0; k<m_mapping[col].Size(); k++)
                {
                   if(MathAbs(row[col]-m_mapping[col][k])<=1.e-15)
                   {
                      out[i][ulong(cumsum[col])+k]=1.0;
                      break;
                   }
                }
             }
          }
          //unchanged columns come first, followed by the one-hot columns
          matrix newfeaturematrix(out.Rows(),input_copy.Cols()+out.Cols());
          if((input_copy.Cols()>0 && !np::matrixCopyCols(newfeaturematrix,input_copy,0,input_copy.Cols())) || !np::matrixCopyCols(newfeaturematrix,out,input_copy.Cols()))
          {
             Print(__FUNCTION__, " Failed matrix copy ");
             return matrix::Zeros(1,1);
          }
          return newfeaturematrix;
       }
    };
    //+------------------------------------------------------------------+

The first method is fit(), which should be called after creating an instance of the class. This method requires two inputs: a feature matrix (training data) and an array. The feature matrix can be the complete dataset, including both categorical and non-categorical variables, and the array should contain the column indices of the nominal variables within the matrix. Note that, in the listing above, fit() treats an empty array as an error because ArrayCopy() then copies no elements, so the indices should be supplied explicitly even when every column is nominal. After the fit() method executes successfully and returns a true value, the transform() method can be called to obtain the transformed dataset. This method requires a matrix that has the same number of columns as the matrix supplied to the fit() method. If the dimensions do not match, an error will be flagged.

Let us examine how the COneHotEncoder class works through a demonstration using the BTCUSD dataset prepared earlier in this text. The snippet below illustrates the conversion process. This code is sourced from the MetaTrader 5 script OneHotEncoding_demo.mq5.

    //+------------------------------------------------------------------+
    //|                                          OneHotEncoding_demo.mq5 |
    //|                                  Copyright 2024, MetaQuotes Ltd. |
    //|                                             https://www.mql5.com |
    //+------------------------------------------------------------------+
    #property copyright "Copyright 2024, MetaQuotes Ltd."
    #property link      "https://www.mql5.com"
    #property version   "1.00"
    #property script_show_inputs
    #include<np.mqh>
    #include<nom2ord.mqh>
    #include<ErrorDescription.mqh>
    //--- input parameters
    input datetime TrainingSampleStartDate=D'2023.12.31';
    input datetime TrainingSampleStopDate=D'2017.12.31';
    input ENUM_TIMEFRAMES tf = PERIOD_D1;
    input string SetSymbol="BTCUSD";
    //+------------------------------------------------------------------+
    //|global integer variables                                          |
    //+------------------------------------------------------------------+
    int size_insample,               //training set size
        size_observations,           //size of of both training and testing sets combined
        price_handle=INVALID_HANDLE; //log prices indicator handle
    //+------------------------------------------------------------------+
    //|double global variables                                           |
    //+------------------------------------------------------------------+
    matrix prices;     //array for log transformed prices
    vector targets;    //differenced prices kept here
    matrix predictors; //flat array arranged as matrix of all predictors ie size_observations by size_predictors
    //+------------------------------------------------------------------+
    //| Script program start function                                    |
    //+------------------------------------------------------------------+
    void OnStart()
    {
       //get relative shift of is and oos sets
       int trainstart,trainstop;
       trainstart=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStartDate);
       trainstop=iBarShift(SetSymbol!=""?SetSymbol:NULL,tf,TrainingSampleStopDate);
       //check for errors from ibarshift calls
       if(trainstart<0 || trainstop<0)
       {
          Print(ErrorDescription(GetLastError()));
          return;
       }
       //---set the size of the sample sets
       size_insample=(trainstop - trainstart) + 1;
       //---check for input errors
       if(size_insample<=0)
       {
          Print("Invalid inputs ");
          return;
       }
       //---
       if(!predictors.Resize(size_insample,3))
       {
          Print("ArrayResize error ",ErrorDescription(GetLastError()));
          return;
       }
       //---
       if(!prices.CopyRates(SetSymbol,tf,COPY_RATES_VERTICAL|COPY_RATES_OHLC,TrainingSampleStartDate,TrainingSampleStopDate))
       {
          Print("Copyrates error ",ErrorDescription(GetLastError()));
          return;
       }
       //---
       targets = log(prices.Col(3));
       targets = np::diff(targets);
       //---
       double bodyratio = 0.0;
       for(ulong i = 0; i<prices.Rows(); i++)
       {
          //bar type: 0 = bearish, 1 = bullish
          if(prices[i][3]<prices[i][0])
             predictors[i][0] = 0.0;
          else
             predictors[i][0] = 1.0;
          //body type: 0..3 by body-to-range ratio
          bodyratio = MathAbs(prices[i][3]-prices[i][0])/MathAbs(prices[i][1]-prices[i][2]);
          if(bodyratio >=0.75)
             predictors[i][1] = 0.0;
          else if(bodyratio<0.75 && bodyratio>=0.5)
             predictors[i][1] = 1.0;
          else if(bodyratio<0.5 && bodyratio>=0.25)
             predictors[i][1] = 2.0;
          else
             predictors[i][1] = 3.0;
          if(i<1)
          {
             predictors[i][2] = 0.0;
             continue;
          }
          //bar pattern: 2 = higher high, 1 = lower low, 0 = anything else
          if(predictors[i][0]==1.0 && predictors[i-1][0]==1.0 && prices[i][1]>prices[i-1][1] && prices[i][2]>prices[i-1][2])
             predictors[i][2] = 2.0;
          //lower low: both the low and the high are below the previous bar's, matching the Python listing
          else if(predictors[i][0]==0.0 && predictors[i-1][0]==0.0 && prices[i][2]<prices[i-1][2] && prices[i][1]<prices[i-1][1])
             predictors[i][2] = 1.0;
          else
             predictors[i][2] = 0.0;
       }
       targets = np::sliceVector(targets,1);
       prices = np::sliceMatrixRows(prices,1,predictors.Rows()-1);
       predictors = np::sliceMatrixRows(predictors,1,predictors.Rows()-1);
       matrix fullFeatureMatrix(predictors.Rows(),predictors.Cols()+prices.Cols());
       if(!np::matrixCopyCols(fullFeatureMatrix,prices,0,prices.Cols()) || !np::matrixCopyCols(fullFeatureMatrix,predictors,prices.Cols()))
       {
          Print("Failed to merge matrices");
          return;
       }
       if(predictors.Rows()!=targets.Size())
       {
          Print(" Error in aligning data structures ");
          return;
       }
       //columns 4, 5 and 6 of the merged matrix hold the three nominal variables
       COneHotEncoder enc;
       ulong selectedcols[] = {4,5,6};
       if(!enc.fit(fullFeatureMatrix,selectedcols))
          return;
       matrix transformed = enc.transform(fullFeatureMatrix);
       Print(" Original predictors \n", fullFeatureMatrix);
       Print(" transformed predictors \n", transformed);
    }
    //+------------------------------------------------------------------+

Feature matrix before:

    RQ 0 16:40:41.760 OneHotEncoding_demo (BTCUSD,D1) Original predictors
    ED 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,0,2,0]
    RN 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [13348,15381,12535.67,14689,1,2,0]
    DG 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,1,1,2]
    HH 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15114,15370,13786.18,15139,1,3,0]
    QN 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,1,1,2]
    RP 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,1,1,0]
    OI 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [16187,16258,13639.83,14900,0,2,0]
    ML 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14884,15334,13777.33,14405,0,2,0]
    GS 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14405,14876,12969.58,14876,1,3,0]
    KF 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [14876,14927,12417.22,13245,0,1,0]
    ON 0 16:40:41.761 OneHotEncoding_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,1,1,0…]]

Feature matrix after:

    PH 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) transformed predictors
    KP 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,1,0,1,0,0,0,1,0,0]
    KP 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [13348,15381,12535.67,14689,0,1,1,0,0,0,1,0,0]
    NF 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,0,1,0,1,0,0,0,1,0]
    JI 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15114,15370,13786.18,15139,0,1,0,0,1,0,1,0,0]
    CL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,0,1,0,1,0,0,0,1,0]
    RL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,0,1,0,1,0,0,1,0,0]
    IS 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [16187,16258,13639.83,14900,1,0,1,0,0,0,1,0,0]
    GG 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14884,15334,13777.33,14405,1,0,1,0,0,0,1,0,0]
    QK 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14405,14876,12969.58,14876,0,1,0,0,1,0,1,0,0]
    PL 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [14876,14927,12417.22,13245,1,0,0,1,0,0,1,0,0]
    GS 0 16:40:41.762 OneHotEncoding_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,0,1,0,1,0,0,1,0,0.]]

The second conversion method implemented in MQL5 operates in two modes, both of which are variations of target encoding modified to reduce the effects of overfitting. This technique is encapsulated in the class CNomOrd, defined in nom2ord.mqh. The class utilizes familiar methods, fit() and transform(), to convert variables without reducing the dimensionality of categorical inputs.

    public:
       //+------------------------------------------------------------------+
       //| constructor                                                      |
       //+------------------------------------------------------------------+
       CNomOrd(void)
       {
       }
       //+------------------------------------------------------------------+
       //| destructor                                                       |
       //+------------------------------------------------------------------+
       ~CNomOrd(void)
       {
       }
       //+------------------------------------------------------------------+
       //| fit mapping to training data                                     |
       //+------------------------------------------------------------------+
       bool fit(matrix &preds_in, ulong &cols[], vector &target)
       {
          m_dim_reduce = 0;
          mapped = false;
          //--- an empty cols array selects every column
          if(cols.Size()==0 && preds_in.Cols())
          {
             m_pred = int(preds_in.Cols());
             if(!np::arange(m_colindices,m_pred,ulong(0),ulong(1)))
             {
                Print(__FUNCTION__, " arange error ");
                return mapped;
             }
          }
          else
          {
             m_pred = int(cols.Size());
          }
          //---
          m_rows = int(preds_in.Rows()) ;
          m_cols = int(preds_in.Cols());
          //---
          if(ArrayResize(m_mean_rankings,m_pred)<0 ||
             ArrayResize(m_rankings,m_rows)<0 ||
             ArrayResize(m_indices,m_rows)<0 ||
             ArrayResize(m_mapping,m_pred)<0 ||
             ArrayResize(m_class_counts,m_pred)<0 ||
             !m_median.Resize(m_pred) ||
             !m_class_ids.Resize(m_rows,m_pred) ||
             !shuffle_target.Resize(m_rows,2) ||
             (cols.Size()>0 && ArrayCopy(m_colindices,cols)<0) ||
             !ArraySort(m_colindices))
          {
             Print(__FUNCTION__, " Memory allocation failure ", GetLastError());
             return mapped;
          }
          //--- record the class id of every row and the size of every class
          for(uint col = 0; col<m_colindices.Size(); col++)
          {
             vector var = preds_in.Col(m_colindices[col]);
             m_mapping[col] = np::unique(var);
             m_class_counts[col] = vector::Zeros(m_mapping[col].Size());
             for(ulong i = 0; i<var.Size(); i++)
             {
                for(ulong j = 0; j<m_mapping[col].Size(); j++)
                {
                   if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15)
                   {
                      m_class_ids[i][col]=double(j);
                      ++m_class_counts[col][j];
                      break;
                   }
                }
             }
          }
          m_target = target;
          for(uint i = 0; i<m_colindices.Size(); i++)
          {
             vector cid = m_class_ids.Col(i);
             vector ccounts = m_class_counts[i];
             m_mean_rankings[i] = train(cid,ccounts,m_target,m_median[i]);
          }
          mapped = true;
          return mapped;
       }
       //+------------------------------------------------------------------+
       //| transform nominal to ordinal based on learned mapping            |
       //+------------------------------------------------------------------+
       matrix transform(matrix &data_in)
       {
          if(m_dim_reduce)
          {
             Print(__FUNCTION__, " Invalid method call, Use fitTransform() or call fit() ");
             return matrix::Zeros(1,1);
          }
          if(!mapped)
          {
             Print(__FUNCTION__, " Invalid method call, training either failed or was not done. Call fit() first. ");
             return matrix::Zeros(1,1);
          }
          if(data_in.Cols()!=ulong(m_cols))
          {
             Print(__FUNCTION__, " Unexpected input data shape, does not match training data ");
             return matrix::Zeros(1,1);
          }
          //---
          matrix out = data_in;
          //--- replace every category by its learned mean ranking
          for(uint col = 0; col<m_colindices.Size(); col++)
          {
             vector var = data_in.Col(m_colindices[col]);
             for(ulong i = 0; i<var.Size(); i++)
             {
                for(ulong j = 0; j<m_mapping[col].Size(); j++)
                {
                   if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15)
                   {
                      out[i][m_colindices[col]]=m_mean_rankings[col][j];
                      break;
                   }
                }
             }
          }
          //---
          return out;
       }

The fit() method requires an additional input: a vector holding the target variable that corresponds to the rows of the predictor matrix. The encoding scheme differs from standard target encoding in order to reduce overfitting, which often arises from outliers in the distribution of the target. To address this, the class applies a percentile transformation. The target values are converted to a percentile-based scale on which the minimum value receives a percentile rank of 0, the maximum receives a rank of 100, and intermediate values are scaled proportionally. Each category is then represented by the mean percentile of the target values observed for that category. This approach preserves the ordinal relationship between target values while attenuating the influence of outliers.
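The idea described above can be sketched in a few lines of Python. This is a minimal illustration, not a port of CNomOrd: the function names are hypothetical, and the handling of tied target values (plain ordinal ranks here) is an assumption, since the internals of the class's train() method are not shown in this section. The transform step leaves values it has not seen during fitting unchanged, which mirrors the transform() listing, where the output starts as a copy of the input.

```python
import numpy as np

def percentile_ranks(target):
    """Map target values to a 0-100 scale by rank (min -> 0, max -> 100).

    Assumption: ties receive distinct consecutive ranks (stable ordinal ranking).
    """
    target = np.asarray(target, dtype=float)
    n = target.size
    if n < 2:
        return np.zeros(n)
    order = np.argsort(target, kind="stable")
    ranks = np.empty(n, dtype=float)
    ranks[order] = np.arange(n)
    return 100.0 * ranks / (n - 1)

def fit_mean_percentiles(categories, target):
    """Learn {category: mean target percentile} from training data."""
    categories = np.asarray(categories)
    pct = percentile_ranks(target)
    return {c.item(): float(pct[categories == c].mean())
            for c in np.unique(categories)}

def transform(categories, mapping):
    """Replace known categories by their learned value; pass others through."""
    values = np.asarray(categories).tolist()
    return np.array([mapping.get(v, v) for v in values], dtype=float)

# Toy data: category 1 contains an extreme target value (50.0).
cats = [0, 1, 0, 1, 0, 1]
y = [-1.0, 2.0, -0.5, 50.0, 0.1, 1.5]
mapping = fit_mean_percentiles(cats, y)   # category 0 -> 20.0, category 1 -> 80.0
encoded = transform([1, 0, 2], mapping)   # unseen category 2 is left as is
```

Note that the outlier 50.0 contributes a percentile of 100 just as any other maximum would, so it cannot drag the mean of its category arbitrarily far, which is the motivation given for the percentile transformation.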

    //+------------------------------------------------------------------+
    //| test for a genuine relationship between predictor and target     |
    //+------------------------------------------------------------------+
    double score(int reps, vector &test_target,ulong selectedVar=0)
    {
       if(!mapped)
       {
          Print(__FUNCTION__, " Invalid method call, training either failed or was not done. Call fit() first. ");
          return -1.0;
       }
       if(m_dim_reduce==0 && selectedVar>=ulong(m_colindices.Size()))
       {
          Print(__FUNCTION__, " invalid predictor selection ");
          return -1.0;
       }
       if(test_target.Size()!=m_rows)
       {
          Print(__FUNCTION__, " invalid targets parameter, Does not match shape of training data. ");
          return -1.0;
       }
       int i, j, irep, unif_error ;
       double dtemp, min_neg, max_neg, min_pos, max_pos, medn ;
       dtemp = 0.0;
       min_neg = 0.0;
       max_neg = -DBL_MIN;
       min_pos = DBL_MAX;
       max_pos = DBL_MIN ;
       vector id = (m_dim_reduce)?m_class_ids.Col(0):m_class_ids.Col(selectedVar);
       vector cc = (m_dim_reduce)?m_class_counts[0]:m_class_counts[selectedVar];
       int nclasses = int(cc.Size());
       if(reps < 1)
          reps = 1 ;
       for(irep=0 ; irep<reps ; irep++)
       {
          if(!shuffle_target.Col(test_target,0))
          {
             Print(__FUNCTION__, " error filling shuffle_target column ", GetLastError());
             return -1.0;
          }
          if(irep)
          {
             i = m_rows ;
             while(i > 1)
             {
                j = (int)(MathRandomUniform(0.0,1.0,unif_error) * i) ;
                if(unif_error)
                {
                   Print(__FUNCTION__, " mathrandomuniform() error ", unif_error);
                   return -1.0;
                }
                if(j >= i)
                   j = i - 1 ;
                dtemp = shuffle_target[--i][0] ;
                shuffle_target[i][0] = shuffle_target[j][0] ;
                shuffle_target[j][0] = dtemp ;
             }
          }
          vector totrain = shuffle_target.Col(0);
          vector m_ranks = train(id,cc,totrain,medn) ;
          if(irep == 0)
          {
             for(i=0 ; i<nclasses ; i++)
             {
                if(i == 0)
                   min_pos = max_pos = m_ranks[i] ;
                else
                {
                   if(m_ranks[i] > max_pos)
                      max_pos = m_ranks[i] ;
                   if(m_ranks[i] < min_pos)
                      min_pos = m_ranks[i] ;
                }
             } // For i<nclasses
             orig_max_class = max_pos - min_pos ;
             count_max_class = 1 ;
          }
          else
          {
             for(i=0 ; i<nclasses ; i++)
             {
                if(i == 0)
                   min_pos = max_pos = m_ranks[i];
                else
                {
                   if(m_ranks[i] > max_pos)
                      max_pos = m_ranks[i] ;
                   if(m_ranks[i] < min_pos)
                      min_pos = m_ranks[i] ;
                }
             } // For i<nclasses
             if(max_pos - min_pos >= orig_max_class)
                ++count_max_class ;
          }
       }
       if(reps <= 1)
          return -1.0;
       return double(count_max_class)/ double(reps);
    }

The score() method assesses the statistical significance of the relationship implied between an encoded variable and the target. It runs a Monte Carlo permutation test: the target values are shuffled repeatedly and the relationship is recalculated for each shuffle. The relationship is quantified as the difference between the maximum and minimum mean target percentiles across all categories of the nominal variable. The first replication uses the unshuffled target to obtain the observed difference, and the method then counts how many replications, including that first one, produce a difference at least as large. The returned value is this count divided by the number of replications, that is, a p-value for the null hypothesis that the observed difference could have arisen by chance from an unrelated nominal variable and target. A small value therefore suggests that the relationship is unlikely to be due to chance alone. The method returns -1 if an error occurs or if fewer than two replications are requested.
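The permutation test can be sketched in Python as follows. The function names are hypothetical, and one simplification is assumed: the sketch shuffles target percentiles that have already been computed, whereas the score() listing passes each shuffled target through train() again. The counting convention (the unshuffled arrangement counts as one replication) follows the listing.

```python
import numpy as np

def spread_of_class_means(class_ids, values, n_classes):
    """Difference between the largest and smallest per-class mean of `values`."""
    sums = np.bincount(class_ids, weights=values, minlength=n_classes)
    counts = np.bincount(class_ids, minlength=n_classes)
    present = counts > 0                      # ignore classes with no members
    means = sums[present] / counts[present]
    return means.max() - means.min()

def permutation_p_value(class_ids, target_pct, reps=1000, seed=0):
    """Monte Carlo permutation p-value for the spread of class means."""
    if reps < 2:
        raise ValueError("at least two replications are required")
    class_ids = np.asarray(class_ids, dtype=int)
    target_pct = np.asarray(target_pct, dtype=float)
    n_classes = int(class_ids.max()) + 1
    rng = np.random.default_rng(seed)

    observed = spread_of_class_means(class_ids, target_pct, n_classes)
    count = 1                                 # the unshuffled data counts once
    for _ in range(reps - 1):
        shuffled = rng.permutation(target_pct)
        if spread_of_class_means(class_ids, shuffled, n_classes) >= observed:
            count += 1
    return count / reps

# Two classes that separate the target perfectly: only the original
# arrangement reaches the observed spread, so p is about 1/reps.
ids = np.array([0] * 50 + [1] * 50)
pct = np.arange(100, dtype=float)
p_strong = permutation_p_value(ids, pct, reps=1000)
```

Because the original arrangement is always counted, the smallest value the test can report is 1/reps, so the number of replications sets the resolution of the p-value.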

Let’s see how this works by applying the class to our BTCUSD dataset. This demonstration is provided in the MQL5 script TargetBasedNominalVariableConversion_demo.mq5.

    CNomOrd enc;
    ulong selectedcols[] = {4,5,6};
    if(!enc.fit(fullFeatureMatrix,selectedcols,targets))
       return;
    matrix transformed = enc.transform(fullFeatureMatrix);
    Print(" Original predictors \n", fullFeatureMatrix);
    Print(" transformed predictors \n", transformed);
    for(uint i = 0; i<selectedcols.Size(); i++)
       Print(" Probability that predicator at ", selectedcols[i], " is associated with target ", enc.score(10000,targets,ulong(i)));

The transformed data:

    IQ 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) transformed predictors
    LM 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,52.28360492434251,50.66453470243147,50.45172139701621]
    CN 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [13348,15381,12535.67,14689,47.85025875164135,50.66453470243147,50.45172139701621]
    IH 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,47.85025875164135,49.77386885151457,48.16372967916465]
    FF 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15114,15370,13786.18,15139,47.85025875164135,49.23046392011166,50.45172139701621]
    HR 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,47.85025875164135,49.77386885151457,48.16372967916465]
    EM 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,47.85025875164135,49.77386885151457,50.45172139701621]
    RK 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [16187,16258,13639.83,14900,52.28360492434251,50.66453470243147,50.45172139701621]
    LG 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14884,15334,13777.33,14405,52.28360492434251,50.66453470243147,50.45172139701621]
    QD 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14405,14876,12969.58,14876,47.85025875164135,49.23046392011166,50.45172139701621]
    HP 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [14876,14927,12417.22,13245,52.28360492434251,49.77386885151457,50.45172139701621]
    PO 0 16:44:25.680 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,47.85025875164135,49.77386885151457,50.45172139701621…]]

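The p-values estimating the relationship between each transformed variable and the target are listed next. Despite the wording of the log message, the printed number is the value returned by score(), so smaller values indicate stronger evidence of a relationship.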

    NS 0 16:44:29.287 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 4 is associated with target 0.0005
    IR 0 16:44:32.829 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 5 is associated with target 0.7714
    JS 0 16:44:36.406 TargetBasedNominalVariableConversion_demo (BTCUSD,D1) Probability that predicator at 6 is associated with target 0.749

The results indicate that, among the three categorical variables, only the bullish/bearish classification (p-value of 0.0005) is significantly related to the target. The two-bar pattern and the candlestick body size both yield p-values above 0.7, so their converted values are statistically indistinguishable from those of a variable that is unrelated to the target.

The final demonstration uses the class to convert a set of nominal variables in combination with dimensionality reduction. This is done by calling fitTransform(), which accepts the same input parameters as the fit() method. It returns a matrix in which the selected nominal columns are replaced by a single representative variable, placed in the last column after the columns that were not selected. Any number of nominal variables is therefore reduced to one ordinal variable. If the input matrix has fewer than two columns, the method simply falls back to calling fit() followed by transform().

    //+------------------------------------------------------------------+
    //| categorical conversion with dimensionality reduction             |
    //+------------------------------------------------------------------+
    matrix fitTransform(matrix &preds_in, ulong &cols[], vector &target)
    {
       //---
       if(preds_in.Cols()<2)
       {
          if(!fit(preds_in,cols,target))
          {
             Print(__FUNCTION__, " error at ", __LINE__);
             return matrix::Zeros(1,1);
          }
          //---
          return transform(preds_in);
       }
       //---
       m_dim_reduce = 1;
       mapped = false;
       //---
       if(cols.Size()==0 && preds_in.Cols())
       {
          m_pred = int(preds_in.Cols());
          if(!np::arange(m_colindices,m_pred,ulong(0),ulong(1)))
          {
             Print(__FUNCTION__, " arange error ");
             return matrix::Zeros(1,1);
          }
       }
       else
       {
          m_pred = int(cols.Size());
       }
       //---
       m_rows = int(preds_in.Rows()) ;
       m_cols = int(preds_in.Cols());
       //---
       if(ArrayResize(m_mean_rankings,1)<0 ||
          ArrayResize(m_rankings,m_rows)<0 ||
          ArrayResize(m_indices,m_rows)<0 ||
          ArrayResize(m_mapping,m_pred)<0 ||
          ArrayResize(m_class_counts,1)<0 ||
          !m_median.Resize(m_pred) ||
          !m_class_ids.Resize(m_rows,m_pred) ||
          !shuffle_target.Resize(m_rows,2) ||
          (cols.Size()>0 && ArrayCopy(m_colindices,cols)<0) ||
          !ArraySort(m_colindices))
       {
          Print(__FUNCTION__, " Memory allocation failure ", GetLastError());
          return matrix::Zeros(1,1);
       }
       //---
       for(uint col = 0; col<m_colindices.Size(); col++)
       {
          vector var = preds_in.Col(m_colindices[col]);
          m_mapping[col] = np::unique(var);
          for(ulong i = 0; i<var.Size(); i++)
          {
             for(ulong j = 0; j<m_mapping[col].Size(); j++)
             {
                if(MathAbs(var[i]-m_mapping[col][j])<=1.e-15)
                {
                   m_class_ids[i][col]=double(j);
                   break;
                }
             }
          }
       }
       m_class_counts[0] = vector::Zeros(ulong(m_colindices.Size()));
       if(!m_class_ids.Col(m_class_ids.ArgMax(1),0))
       {
          Print(__FUNCTION__, " failed to insert new class id values ", GetLastError());
          return matrix::Zeros(1,1);
       }
       for(ulong i = 0; i<m_class_ids.Rows(); i++)
          ++m_class_counts[0][ulong(m_class_ids[i][0])];
       m_target = target;
       vector cid = m_class_ids.Col(0);
       m_mean_rankings[0] = train(cid,m_class_counts[0],m_target,m_median[0]);
       mapped = true;
       ulong unchanged_feature_cols[];
       for(ulong i = 0; i<preds_in.Cols(); i++)
       {
          int found = ArrayBsearch(m_colindices,i);
          if(m_colindices[found]!=i)
          {
             if(!unchanged_feature_cols.Push(i))
             {
                Print(__FUNCTION__, " Failed array insertion ", GetLastError());
                return matrix::Zeros(1,1);
             }
          }
       }
       matrix out(preds_in.Rows(),unchanged_feature_cols.Size()+1);
       ulong nfeatureIndex = unchanged_feature_cols.Size();
       if(nfeatureIndex)
       {
          matrix input_copy = np::selectMatrixCols(preds_in,unchanged_feature_cols);
          if(!np::matrixCopyCols(out,input_copy,0,nfeatureIndex))
          {
             Print(__FUNCTION__, " failed to copy matrix columns ");
             return matrix::Zeros(1,1);
          }
       }
       for(ulong i = 0; i<out.Rows(); i++)
       {
          ulong r = ulong(m_class_ids[i][0]);
          if(r>=m_mean_rankings[0].Size())
          {
             Print(__FUNCTION__, " critical error , index out of bounds ");
             return matrix::Zeros(1,1);
          }
          out[i][nfeatureIndex] = m_mean_rankings[0][r];
       }
       return out;
    }
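Reading the fitTransform() listing, the combined class of each row appears to be the index of the selected column that holds the largest class id in that row (the ArgMax(1) call), and the new variable is the mean target percentile of each combined class. The Python sketch below illustrates that reading under stated assumptions: the function names are hypothetical, the target is assumed to be already expressed as percentiles, and ties are resolved in favour of the first column as numpy.argmax does, which has not been verified against the MQL5 ArgMax method.

```python
import numpy as np

def combine_by_argmax(class_ids):
    """Per-row index of the column holding the largest class id.

    class_ids: (rows, n_vars) array of integer class ids, one column per
    nominal variable. Ties go to the first column (numpy behaviour).
    """
    return np.argmax(np.asarray(class_ids), axis=1)

def fit_transform_reduced(class_ids, target_pct):
    """Encode the combined class by its mean target percentile."""
    class_ids = np.asarray(class_ids)
    target_pct = np.asarray(target_pct, dtype=float)
    n_vars = class_ids.shape[1]

    combined = combine_by_argmax(class_ids)
    counts = np.bincount(combined, minlength=n_vars)
    sums = np.bincount(combined, weights=target_pct, minlength=n_vars)
    # Classes that never occur keep a mean of 0 instead of dividing by zero.
    means = np.divide(sums, counts, out=np.zeros(n_vars), where=counts > 0)
    return means[combined], means

# Four rows, three nominal variables already mapped to class ids.
ids = np.array([[0, 1, 2],
                [1, 0, 0],
                [0, 0, 0],
                [2, 2, 1]])
pct = np.array([90.0, 10.0, 30.0, 50.0])
encoded, class_means = fit_transform_reduced(ids, pct)
# combined classes are [2, 0, 0, 0], so three distinct rows share one value
```

In this toy example three rows with different category combinations collapse into the same combined class, which gives a sense of how much detail a reduction of this kind can discard.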

The script TargetBasedNominalVariableConversionWithDimReduc_demo illustrates how this process is implemented.

    CNomOrd enc;
    ulong selectedcols[] = {4,5,6};
    matrix transformed = enc.fitTransform(fullFeatureMatrix,selectedcols,targets);
    Print(" Original predictors \n", fullFeatureMatrix);
    Print(" transformed predictors \n", transformed);
    Print(" Probability that predicator is associated with target ", enc.score(10000,targets));

The transformed predictors, followed by the p-value of the new variable:

    JR 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) transformed predictors
    JO 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [[13743,13855,12362.69,13347,49.36939702213909]
    NS 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [13348,15381,12535.67,14689,49.36939702213909]
    OP 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14232.48,15408,14110.57,15130,49.36939702213909]
    RM 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15114,15370,13786.18,15139,50.64271980734179]
    RL 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15055.8,16894,14349.84,16725,49.36939702213909]
    ON 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [15699.53,16474,15672.99,16186,49.36939702213909]
    DO 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [16187,16258,13639.83,14900,49.36939702213909]
    JN 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14884,15334,13777.33,14405,49.36939702213909]
    EN 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14405,14876,12969.58,14876,50.64271980734179]
    PI 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [14876,14927,12417.22,13245,50.64271980734179]
    FK 0 16:51:06.137 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) [12776.79,14078.5,12355.38,13681,49.36939702213909…]]
    NQ 0 16:51:09.741 TargetBasedNominalVariableConversionWithDimReduc_demo (BTCUSD,D1) Probability that predicator is associated with target 0.4981

Checking the relationship of the new variable with the target yields a relatively high p-value (0.4981), even though one of the three source variables was significant on its own. This highlights one of the limitations of dimensionality reduction: the loss of information. This method should therefore be used with caution.

Conclusion

The conversion of nominal variables to ordinal variables for machine learning is a powerful yet nuanced process. It enables models to work with categorical data meaningfully, but it requires careful consideration to avoid introducing biases or misrepresentations. By employing an appropriate transformation technique (manual ordering, frequency-based encoding, clustering, or target encoding) you can help your machine learning models handle nominal variables effectively while preserving data integrity. This article is not an exhaustive survey of nominal variable conversion methods, and many more advanced techniques are available. The primary goal of this text is to introduce practitioners to the concept of ordinal encoding and the factors to consider when selecting the most appropriate method for their specific use case. By understanding the implications of different encoding techniques, practitioners can make more informed decisions and improve the performance and interpretability of their machine learning models. All the code files referenced in the article are attached below.

| File | Description |
|---|---|
| MQL5/scripts/CategoricalVariableConversion.py | Python script with examples of categorical conversion |
| MQL5/scripts/CategoricalVariableConversion.ipynb | Jupyter notebook of the Python script listed above |
| MQL5/scripts/OneHotEncoding_demo.mq5 | demo script for converting nominal variables using one-hot encoding in MQL5 |
| MQL5/scripts/TargetBasedNominalVariableConversion_demo.mq5 | demo script for converting nominal variables using a custom target-based encoding method |
| MQL5/scripts/TargetBasedNominalVariableConversionWithDimReduc_demo.mq5 | demo script for converting nominal variables using a custom target-based encoding method that implements dimensionality reduction |
| MQL5/include/nom2ord.mqh | header file containing definition of CNomOrd and COneHotEncoder classes |
| MQL5/include/np.mqh | header file of vector and matrix utility functions |

Warning: All rights to these materials are reserved by MetaQuotes Ltd. Copying or reprinting of these materials in whole or in part is prohibited.

This article was written by a user of the site and reflects their personal views. MetaQuotes Ltd is not responsible for the accuracy of the information presented, nor for any consequences resulting from the use of the solutions, strategies or recommendations described.
