Neural networks made easy (Part 49): Soft Actor-Critic

We continue our discussion of reinforcement learning algorithms for solving continuous action space problems. In this article, I will present the Soft Actor-Critic (SAC) algorithm. The main advantage of SAC is the ability to find optimal policies that not only maximize the expected reward, but also have maximum entropy (diversity) of actions.

Neural networks made easy (Part 48): Methods for reducing overestimation of Q-function values

In the previous article, we introduced the DDPG method, which allows training models in a continuous action space. However, like other Q-learning methods, DDPG is prone to overestimating Q-function values. This problem often results in training an agent with a suboptimal strategy. In this article, we will look at some approaches to overcome the mentioned issue.

Neural networks made easy (Part 47): Continuous action space

In this article, we expand the range of tasks of our agent. The training process will include some aspects of money and risk management, which are an integral part of any trading strategy.

MQL5 Wizard Techniques you should know (Part 07): Dendrograms

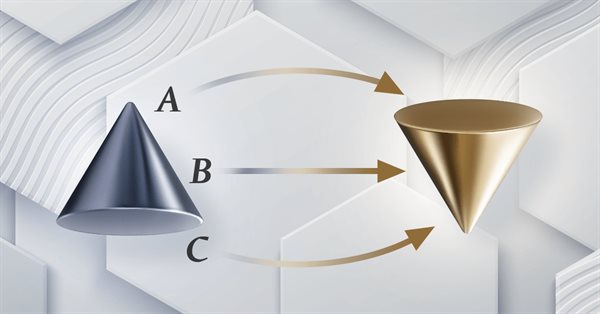

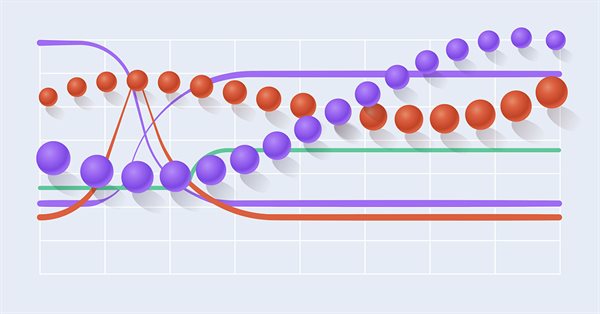

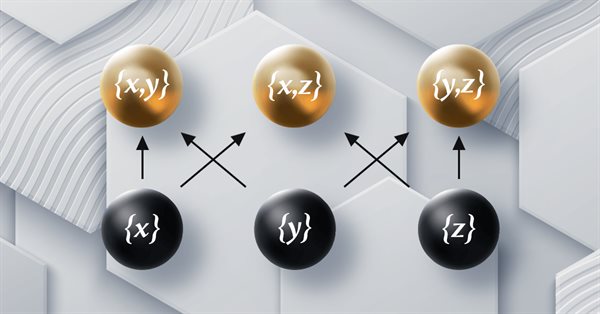

Data classification for purposes of analysis and forecasting is a very diverse arena within machine learning and it features a large number of approaches and methods. This piece looks at one such approach, namely Agglomerative Hierarchical Classification.

Neural networks made easy (Part 46): Goal-conditioned reinforcement learning (GCRL)

In this article, we will have a look at yet another reinforcement learning approach. It is called goal-conditioned reinforcement learning (GCRL). In this approach, an agent is trained to achieve different goals in specific scenarios.

Neural networks made easy (Part 45): Training state exploration skills

Training useful skills without an explicit reward function is one of the main challenges in hierarchical reinforcement learning. Previously, we already got acquainted with two algorithms for solving this problem. But the question of the completeness of environmental research remains open. This article demonstrates a different approach to skill training, the use of which directly depends on the current state of the system.

Neural networks made easy (Part 44): Learning skills with dynamics in mind

In the previous article, we introduced the DIAYN method, which offers the algorithm for learning a variety of skills. The acquired skills can be used for various tasks. But such skills can be quite unpredictable, which can make them difficult to use. In this article, we will look at an algorithm for learning predictable skills.

Neural networks made easy (Part 43): Mastering skills without the reward function

The problem of reinforcement learning lies in the need to define a reward function. It can be complex or difficult to formalize. To address this problem, activity-based and environment-based approaches are being explored to learn skills without an explicit reward function.

Neural networks made easy (Part 42): Model procrastination, reasons and solutions

In the context of reinforcement learning, model procrastination can be caused by several reasons. The article considers some of the possible causes of model procrastination and methods for overcoming them.

Integrate Your Own LLM into EA (Part 2): Example of Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

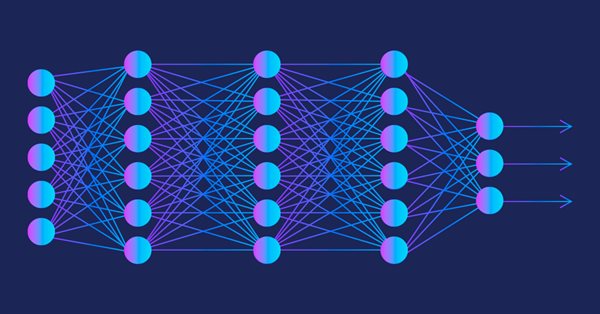

Neural networks made easy (Part 41): Hierarchical models

The article describes hierarchical training models that offer an effective approach to solving complex machine learning problems. Hierarchical models consist of several levels, each of which is responsible for different aspects of the task.

Neural networks made easy (Part 40): Using Go-Explore on large amounts of data

This article discusses the use of the Go-Explore algorithm over a long training period, since the random action selection strategy may not lead to a profitable pass as training time increases.

Integrate Your Own LLM into EA (Part 1): Hardware and Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Mastering ONNX: The Game-Changer for MQL5 Traders

Dive into the world of ONNX, the powerful open-standard format for exchanging machine learning models. Discover how leveraging ONNX can revolutionize algorithmic trading in MQL5, allowing traders to seamlessly integrate cutting-edge AI models and elevate their strategies to new heights. Uncover the secrets to cross-platform compatibility and learn how to unlock the full potential of ONNX in your MQL5 trading endeavors. Elevate your trading game with this comprehensive guide to Mastering ONNX

Data label for time series mining (Part 3):Example for using label data

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Category Theory in MQL5 (Part 23): A different look at the Double Exponential Moving Average

In this article we continue with our theme in the last of tackling everyday trading indicators viewed in a ‘new’ light. We are handling horizontal composition of natural transformations for this piece and the best indicator for this, that expands on what we just covered, is the double exponential moving average (DEMA).

Classification models in the Scikit-Learn library and their export to ONNX

In this article, we will explore the application of all classification models available in the Scikit-Learn library to solve the classification task of Fisher's Iris dataset. We will attempt to convert these models into ONNX format and utilize the resulting models in MQL5 programs. Additionally, we will compare the accuracy of the original models with their ONNX versions on the full Iris dataset.

Category Theory in MQL5 (Part 22): A different look at Moving Averages

In this article we attempt to simplify our illustration of concepts covered in these series by dwelling on just one indicator, the most common and probably the easiest to understand. The moving average. In doing so we consider significance and possible applications of vertical natural transformations.

Neural networks made easy (Part 39): Go-Explore, a different approach to exploration

We continue studying the environment in reinforcement learning models. And in this article we will look at another algorithm – Go-Explore, which allows you to effectively explore the environment at the model training stage.

Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement

One of the key problems within reinforcement learning is environmental exploration. Previously, we have already seen the research method based on Intrinsic Curiosity. Today I propose to look at another algorithm: Exploration via Disagreement.

Category Theory in MQL5 (Part 21): Natural Transformations with LDA

This article, the 21st in our series, continues with a look at Natural Transformations and how they can be implemented using linear discriminant analysis. We present applications of this in a signal class format, like in the previous article.

Evaluating ONNX models using regression metrics

Regression is a task of predicting a real value from an unlabeled example. The so-called regression metrics are used to assess the accuracy of regression model predictions.

Category Theory in MQL5 (Part 20): A detour to Self-Attention and the Transformer

We digress in our series by pondering at part of the algorithm to chatGPT. Are there any similarities or concepts borrowed from natural transformations? We attempt to answer these and other questions in a fun piece, with our code in a signal class format.

Data label for timeseries mining (Part 2):Make datasets with trend markers using Python

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Neural networks made easy (Part 37): Sparse Attention

In the previous article, we discussed relational models which use attention mechanisms in their architecture. One of the specific features of these models is the intensive utilization of computing resources. In this article, we will consider one of the mechanisms for reducing the number of computational operations inside the Self-Attention block. This will increase the general performance of the model.

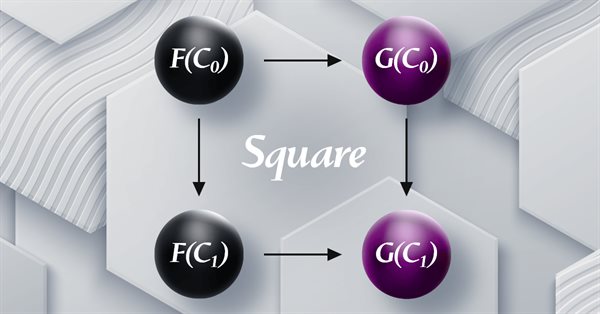

Category Theory in MQL5 (Part 19): Naturality Square Induction

We continue our look at natural transformations by considering naturality square induction. Slight restraints on multicurrency implementation for experts assembled with the MQL5 wizard mean we are showcasing our data classification abilities with a script. Principle applications considered are price change classification and thus its forecasting.

Data label for time series mining(Part 1):Make a dataset with trend markers through the EA operation chart

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

Category Theory in MQL5 (Part 18): Naturality Square

This article continues our series into category theory by introducing natural transformations, a key pillar within the subject. We look at the seemingly complex definition, then delve into examples and applications with this series’ ‘bread and butter’; volatility forecasting.

Wrapping ONNX models in classes

Object-oriented programming enables creation of a more compact code that is easy to read and modify. Here we will have a look at the example for three ONNX models.

Category Theory in MQL5 (Part 17): Functors and Monoids

This article, the final in our series to tackle functors as a subject, revisits monoids as a category. Monoids which we have already introduced in these series are used here to aid in position sizing, together with multi-layer perceptrons.

Category Theory in MQL5 (Part 16): Functors with Multi-Layer Perceptrons

This article, the 16th in our series, continues with a look at Functors and how they can be implemented using artificial neural networks. We depart from our approach so far in the series, that has involved forecasting volatility and try to implement a custom signal class for setting position entry and exit signals.

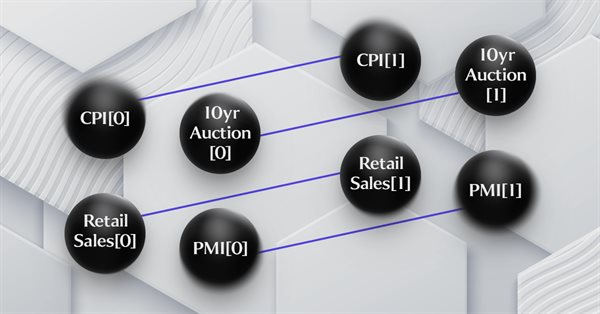

Category Theory in MQL5 (Part 15) : Functors with Graphs

This article on Category Theory implementation in MQL5, continues the series by looking at Functors but this time as a bridge between Graphs and a set. We revisit calendar data, and despite its limitations in Strategy Tester use, make the case using functors in forecasting volatility with the help of correlation.

Category Theory in MQL5 (Part 14): Functors with Linear-Orders

This article which is part of a broader series on Category Theory implementation in MQL5, delves into Functors. We examine how a Linear Order can be mapped to a set, thanks to Functors; by considering two sets of data that one would typically dismiss as having any connection.

Category Theory in MQL5 (Part 13): Calendar Events with Database Schemas

This article, that follows Category Theory implementation of Orders in MQL5, considers how database schemas can be incorporated for classification in MQL5. We take an introductory look at how database schema concepts could be married with category theory when identifying trade relevant text(string) information. Calendar events are the focus.

Integrating ML models with the Strategy Tester (Part 3): Managing CSV files (II)

This material provides a complete guide to creating a class in MQL5 for efficient management of CSV files. We will see the implementation of methods for opening, writing, reading, and transforming data. We will also consider how to use them to store and access information. In addition, we will discuss the limitations and the most important aspects of using such a class. This article ca be a valuable resource for those who want to learn how to process CSV files in MQL5.

Category Theory in MQL5 (Part 12): Orders

This article which is part of a series that follows Category Theory implementation of Graphs in MQL5, delves in Orders. We examine how concepts of Order-Theory can support monoid sets in informing trade decisions by considering two major ordering types.

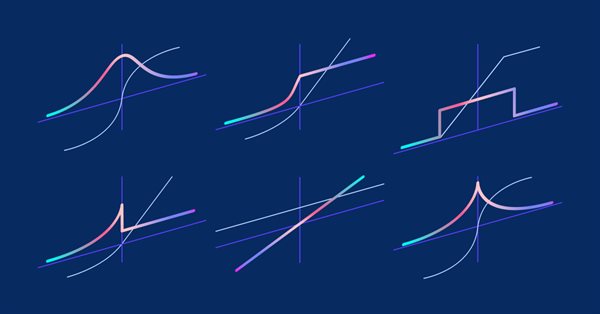

Matrices and vectors in MQL5: Activation functions

Here we will describe only one of the aspects of machine learning - activation functions. In artificial neural networks, a neuron activation function calculates an output signal value based on the values of an input signal or a set of input signals. We will delve into the inner workings of the process.

Category Theory (Part 9): Monoid-Actions

This article continues the series on category theory implementation in MQL5. Here we continue monoid-actions as a means of transforming monoids, covered in the previous article, leading to increased applications.

Experiments with neural networks (Part 6): Perceptron as a self-sufficient tool for price forecast

The article provides an example of using a perceptron as a self-sufficient price prediction tool by showcasing general concepts and the simplest ready-made Expert Advisor followed by the results of its optimization.

Frequency domain representations of time series: The Power Spectrum

In this article we discuss methods related to the analysis of timeseries in the frequency domain. Emphasizing the utility of examining the power spectra of time series when building predictive models. In this article we will discuss some of the useful perspectives to be gained by analyzing time series in the frequency domain using the discrete fourier transform (dft).