Population optimization algorithms: Monkey algorithm (MA)

In this article, I will consider the Monkey Algorithm (MA) optimization algorithm. The ability of these animals to overcome difficult obstacles and get to the most inaccessible tree tops formed the basis of the idea of the MA algorithm.

Deep Learning GRU model with Python to ONNX with EA, and GRU vs LSTM models

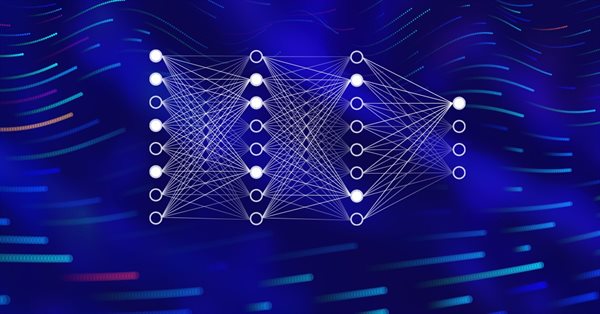

We will guide you through the entire process of DL with python to make a GRU ONNX model, culminating in the creation of an Expert Advisor (EA) designed for trading, and subsequently comparing GRU model with LSTN model.

Building Your First Glass-box Model Using Python And MQL5

Machine learning models are difficult to interpret and understanding why our models deviate from our expectations is critical if we want to gain any value from using such advanced techniques. Without comprehensive insight into the inner workings of our model, we might fail to spot bugs that are corrupting our model's performance, we may waste time over engineering features that aren't predictive and in the long run we risk underutilizing the power of these models. Fortunately, there is a sophisticated and well maintained all in one solution that allows us to see exactly what our model is doing underneath the hood.

Working with ONNX models in float16 and float8 formats

Data formats used to represent machine learning models play a crucial role in their effectiveness. In recent years, several new types of data have emerged, specifically designed for working with deep learning models. In this article, we will focus on two new data formats that have become widely adopted in modern models.

Neural networks made easy (Part 53): Reward decomposition

We have already talked more than once about the importance of correctly selecting the reward function, which we use to stimulate the desired behavior of the Agent by adding rewards or penalties for individual actions. But the question remains open about the decryption of our signals by the Agent. In this article, we will talk about reward decomposition in terms of transmitting individual signals to the trained Agent.

Mastering ONNX: The Game-Changer for MQL5 Traders

Dive into the world of ONNX, the powerful open-standard format for exchanging machine learning models. Discover how leveraging ONNX can revolutionize algorithmic trading in MQL5, allowing traders to seamlessly integrate cutting-edge AI models and elevate their strategies to new heights. Uncover the secrets to cross-platform compatibility and learn how to unlock the full potential of ONNX in your MQL5 trading endeavors. Elevate your trading game with this comprehensive guide to Mastering ONNX

Regression models of the Scikit-learn Library and their export to ONNX

In this article, we will explore the application of regression models from the Scikit-learn package, attempt to convert them into ONNX format, and use the resultant models within MQL5 programs. Additionally, we will compare the accuracy of the original models with their ONNX versions for both float and double precision. Furthermore, we will examine the ONNX representation of regression models, aiming to provide a better understanding of their internal structure and operational principles.

Data label for timeseries mining (Part 2):Make datasets with trend markers using Python

This series of articles introduces several time series labeling methods, which can create data that meets most artificial intelligence models, and targeted data labeling according to needs can make the trained artificial intelligence model more in line with the expected design, improve the accuracy of our model, and even help the model make a qualitative leap!

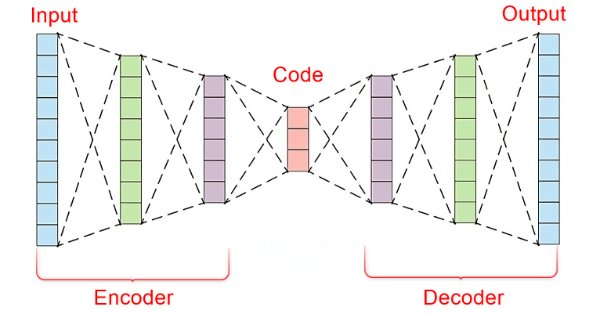

Neural networks made easy (Part 22): Unsupervised learning of recurrent models

We continue to study unsupervised learning algorithms. This time I suggest that we discuss the features of autoencoders when applied to recurrent model training.

Wrapping ONNX models in classes

Object-oriented programming enables creation of a more compact code that is easy to read and modify. Here we will have a look at the example for three ONNX models.

Category Theory in MQL5 (Part 3)

Category Theory is a diverse and expanding branch of Mathematics which as of yet is relatively uncovered in the MQL5 community. These series of articles look to introduce and examine some of its concepts with the overall goal of establishing an open library that provides insight while hopefully furthering the use of this remarkable field in Traders' strategy development.

Population optimization algorithms: Artificial Bee Colony (ABC)

In this article, we will study the algorithm of an artificial bee colony and supplement our knowledge with new principles of studying functional spaces. In this article, I will showcase my interpretation of the classic version of the algorithm.

Introduction to MQL5 (Part 7): Beginner's Guide to Building Expert Advisors and Utilizing AI-Generated Code in MQL5

Discover the ultimate beginner's guide to building Expert Advisors (EAs) with MQL5 in our comprehensive article. Learn step-by-step how to construct EAs using pseudocode and harness the power of AI-generated code. Whether you're new to algorithmic trading or seeking to enhance your skills, this guide provides a clear path to creating effective EAs.

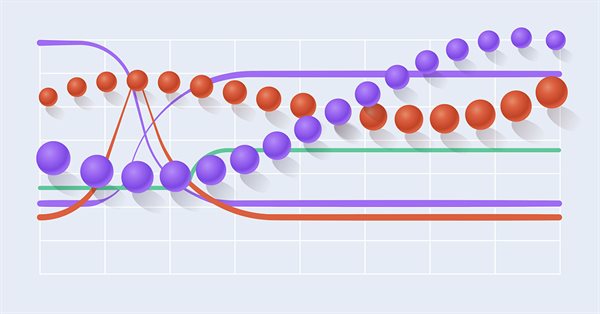

Data Science and Machine Learning(Part 14): Finding Your Way in the Markets with Kohonen Maps

Are you looking for a cutting-edge approach to trading that can help you navigate complex and ever-changing markets? Look no further than Kohonen maps, an innovative form of artificial neural networks that can help you uncover hidden patterns and trends in market data. In this article, we'll explore how Kohonen maps work, and how they can be used to develop smarter, more effective trading strategies. Whether you're a seasoned trader or just starting out, you won't want to miss this exciting new approach to trading.

Neural networks made easy (Part 44): Learning skills with dynamics in mind

In the previous article, we introduced the DIAYN method, which offers the algorithm for learning a variety of skills. The acquired skills can be used for various tasks. But such skills can be quite unpredictable, which can make them difficult to use. In this article, we will look at an algorithm for learning predictable skills.

Data Science and Machine Learning (Part 08): K-Means Clustering in plain MQL5

Data mining is crucial to a data scientist and a trader because very often, the data isn't as straightforward as we think it is. The human eye can not understand the minor underlying pattern and relationships in the dataset, maybe the K-means algorithm can help us with that. Let's find out...

Neural networks made easy (Part 23): Building a tool for Transfer Learning

In this series of articles, we have already mentioned Transfer Learning more than once. However, this was only mentioning. in this article, I suggest filling this gap and taking a closer look at Transfer Learning.

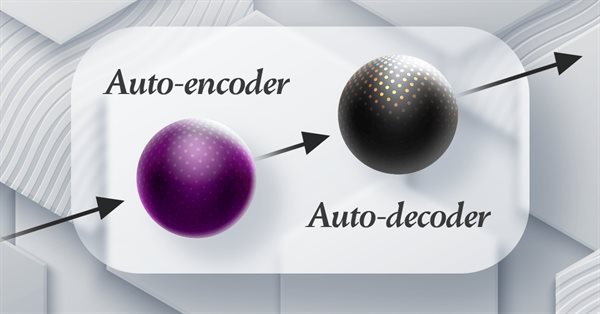

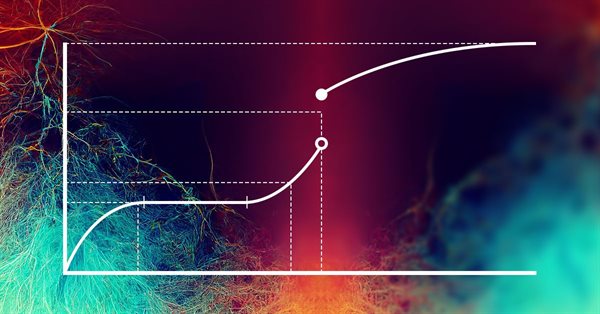

Neural networks made easy (Part 20): Autoencoders

We continue to study unsupervised learning algorithms. Some readers might have questions regarding the relevance of recent publications to the topic of neural networks. In this new article, we get back to studying neural networks.

Neural networks made easy (Part 50): Soft Actor-Critic (model optimization)

In the previous article, we implemented the Soft Actor-Critic algorithm, but were unable to train a profitable model. Here we will optimize the previously created model to obtain the desired results.

Neural networks made easy (Part 38): Self-Supervised Exploration via Disagreement

One of the key problems within reinforcement learning is environmental exploration. Previously, we have already seen the research method based on Intrinsic Curiosity. Today I propose to look at another algorithm: Exploration via Disagreement.

Population optimization algorithms: Stochastic Diffusion Search (SDS)

The article discusses Stochastic Diffusion Search (SDS), which is a very powerful and efficient optimization algorithm based on the principles of random walk. The algorithm allows finding optimal solutions in complex multidimensional spaces, while featuring a high speed of convergence and the ability to avoid local extrema.

Neural networks made easy (Part 43): Mastering skills without the reward function

The problem of reinforcement learning lies in the need to define a reward function. It can be complex or difficult to formalize. To address this problem, activity-based and environment-based approaches are being explored to learn skills without an explicit reward function.

Neural networks made easy (Part 66): Exploration problems in offline learning

Models are trained offline using data from a prepared training dataset. While providing certain advantages, its negative side is that information about the environment is greatly compressed to the size of the training dataset. Which, in turn, limits the possibilities of exploration. In this article, we will consider a method that enables the filling of a training dataset with the most diverse data possible.

Population optimization algorithms: Firefly Algorithm (FA)

In this article, I will consider the Firefly Algorithm (FA) optimization method. Thanks to the modification, the algorithm has turned from an outsider into a real rating table leader.

Population optimization algorithms: Cuckoo Optimization Algorithm (COA)

The next algorithm I will consider is cuckoo search optimization using Levy flights. This is one of the latest optimization algorithms and a new leader in the leaderboard.

Category Theory in MQL5 (Part 20): A detour to Self-Attention and the Transformer

We digress in our series by pondering at part of the algorithm to chatGPT. Are there any similarities or concepts borrowed from natural transformations? We attempt to answer these and other questions in a fun piece, with our code in a signal class format.

Neural networks made easy (Part 35): Intrinsic Curiosity Module

We continue to study reinforcement learning algorithms. All the algorithms we have considered so far required the creation of a reward policy to enable the agent to evaluate each of its actions at each transition from one system state to another. However, this approach is rather artificial. In practice, there is some time lag between an action and a reward. In this article, we will get acquainted with a model training algorithm which can work with various time delays from the action to the reward.

Population optimization algorithms: Saplings Sowing and Growing up (SSG)

Saplings Sowing and Growing up (SSG) algorithm is inspired by one of the most resilient organisms on the planet demonstrating outstanding capability for survival in a wide variety of conditions.

Experiments with neural networks (Part 4): Templates

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 as a self-sufficient tool for using neural networks in trading. Simple explanation.

Category Theory in MQL5 (Part 18): Naturality Square

This article continues our series into category theory by introducing natural transformations, a key pillar within the subject. We look at the seemingly complex definition, then delve into examples and applications with this series’ ‘bread and butter’; volatility forecasting.

Category Theory in MQL5 (Part 2)

Category Theory is a diverse and expanding branch of Mathematics which as of yet is relatively uncovered in the MQL5 community. These series of articles look to introduce and examine some of its concepts with the overall goal of establishing an open library that attracts comments and discussion while hopefully furthering the use of this remarkable field in Traders' strategy development.

Neural networks made easy (Part 48): Methods for reducing overestimation of Q-function values

In the previous article, we introduced the DDPG method, which allows training models in a continuous action space. However, like other Q-learning methods, DDPG is prone to overestimating Q-function values. This problem often results in training an agent with a suboptimal strategy. In this article, we will look at some approaches to overcome the mentioned issue.

Integrate Your Own LLM into EA (Part 2): Example of Environment Deployment

With the rapid development of artificial intelligence today, language models (LLMs) are an important part of artificial intelligence, so we should think about how to integrate powerful LLMs into our algorithmic trading. For most people, it is difficult to fine-tune these powerful models according to their needs, deploy them locally, and then apply them to algorithmic trading. This series of articles will take a step-by-step approach to achieve this goal.

Python, ONNX and MetaTrader 5: Creating a RandomForest model with RobustScaler and PolynomialFeatures data preprocessing

In this article, we will create a random forest model in Python, train the model, and save it as an ONNX pipeline with data preprocessing. After that we will use the model in the MetaTrader 5 terminal.

Integrating ML models with the Strategy Tester (Part 3): Managing CSV files (II)

This material provides a complete guide to creating a class in MQL5 for efficient management of CSV files. We will see the implementation of methods for opening, writing, reading, and transforming data. We will also consider how to use them to store and access information. In addition, we will discuss the limitations and the most important aspects of using such a class. This article ca be a valuable resource for those who want to learn how to process CSV files in MQL5.

Data Science and Machine Learning (Part 19): Supercharge Your AI models with AdaBoost

AdaBoost, a powerful boosting algorithm designed to elevate the performance of your AI models. AdaBoost, short for Adaptive Boosting, is a sophisticated ensemble learning technique that seamlessly integrates weak learners, enhancing their collective predictive strength.

Data Science and ML (Part 22): Leveraging Autoencoders Neural Networks for Smarter Trades by Moving from Noise to Signal

In the fast-paced world of financial markets, separating meaningful signals from the noise is crucial for successful trading. By employing sophisticated neural network architectures, autoencoders excel at uncovering hidden patterns within market data, transforming noisy input into actionable insights. In this article, we explore how autoencoders are revolutionizing trading practices, offering traders a powerful tool to enhance decision-making and gain a competitive edge in today's dynamic markets.

Neural networks made easy (Part 47): Continuous action space

In this article, we expand the range of tasks of our agent. The training process will include some aspects of money and risk management, which are an integral part of any trading strategy.

Neural networks made easy (Part 34): Fully Parameterized Quantile Function

We continue studying distributed Q-learning algorithms. In previous articles, we have considered distributed and quantile Q-learning algorithms. In the first algorithm, we trained the probabilities of given ranges of values. In the second algorithm, we trained ranges with a given probability. In both of them, we used a priori knowledge of one distribution and trained another one. In this article, we will consider an algorithm which allows the model to train for both distributions.

Introduction to MQL5 (Part 4): Mastering Structures, Classes, and Time Functions

Unlock the secrets of MQL5 programming in our latest article! Delve into the essentials of structures, classes, and time functions, empowering your coding journey. Whether you're a beginner or an experienced developer, our guide simplifies complex concepts, providing valuable insights for mastering MQL5. Elevate your programming skills and stay ahead in the world of algorithmic trading!