An example of how to ensemble ONNX models in MQL5

ONNX (Open Neural Network eXchange) is an open format built to represent neural networks. In this article, we will show how to use two ONNX models in one Expert Advisor simultaneously.

Neural networks made easy (Part 67): Using past experience to solve new tasks

In this article, we continue discussing methods for collecting data into a training set. Obviously, the learning process requires constant interaction with the environment. However, situations can be different.

Neural networks made easy (Part 32): Distributed Q-Learning

We got acquainted with the Q-learning method in one of the earlier articles within this series. This method averages rewards for each action. Two works were presented in 2017, which show greater success when studying the reward distribution function. Let's consider the possibility of using such technology to solve our problems.

Neural networks made easy (Part 21): Variational autoencoders (VAE)

In the last article, we got acquainted with the Autoencoder algorithm. Like any other algorithm, it has its advantages and disadvantages. In its original implementation, the autoencoder is used to separate the objects from the training sample as much as possible. This time we will talk about how to deal with some of its disadvantages.

Neural networks made easy (Part 16): Practical use of clustering

In the previous article, we have created a class for data clustering. In this article, I want to share variants of the possible application of obtained results in solving practical trading tasks.

Data Science and Machine Learning (Part 07): Polynomial Regression

Unlike linear regression, polynomial regression is a flexible model designed to perform better on tasks that the linear regression model cannot handle. Let's find out how to build polynomial models in MQL5 and make something positive out of it.

Backpropagation Neural Networks using MQL5 Matrices

The article describes the theory and practice of applying the backpropagation algorithm in MQL5 using matrices. It provides ready-made classes along with script, indicator and Expert Advisor examples.

Population optimization algorithms: Gravitational Search Algorithm (GSA)

GSA is a population optimization algorithm inspired by inanimate nature. Thanks to Newton's law of gravity implemented in the algorithm, the high reliability of modeling the interaction of physical bodies allows us to observe the enchanting dance of planetary systems and galactic clusters. In this article, I will consider one of the most interesting and original optimization algorithms. The simulator of the space objects movement is provided as well.

Population optimization algorithms: Grey Wolf Optimizer (GWO)

Let's consider one of the newest optimization algorithms: Grey Wolf Optimization. Its original behavior on test functions makes this algorithm one of the most interesting among the ones considered earlier. It is one of the top algorithms for training neural networks and for optimizing smooth functions with many variables.

Neural networks made easy (Part 49): Soft Actor-Critic

We continue our discussion of reinforcement learning algorithms for solving continuous action space problems. In this article, I will present the Soft Actor-Critic (SAC) algorithm. The main advantage of SAC is the ability to find optimal policies that not only maximize the expected reward, but also have maximum entropy (diversity) of actions.

Neural networks made easy (Part 25): Practicing Transfer Learning

In the last two articles, we developed a tool for creating and editing neural network models. Now it is time to evaluate the potential use of Transfer Learning technology using practical examples.

Data Science and Machine Learning (Part 06): Gradient Descent

Gradient descent plays a significant role in training neural networks and many machine learning algorithms. It is a quick and intelligent algorithm, yet despite its impressive work it is still misunderstood by many data scientists. Let's see what it is all about.

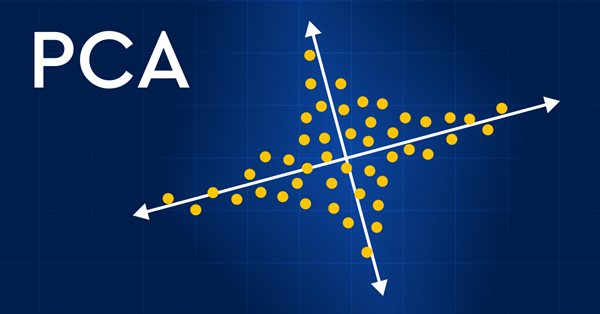

Data Science and Machine Learning (Part 13): Improve your financial market analysis with Principal Component Analysis (PCA)

Revolutionize your financial market analysis with Principal Component Analysis (PCA)! Discover how this powerful technique can unlock hidden patterns in your data, uncover latent market trends, and optimize your investment strategies. In this article, we explore how PCA can provide a new lens for analyzing complex financial data, revealing insights that would be missed by traditional approaches. Find out how applying PCA to financial market data can give you a competitive edge and help you stay ahead of the curve.

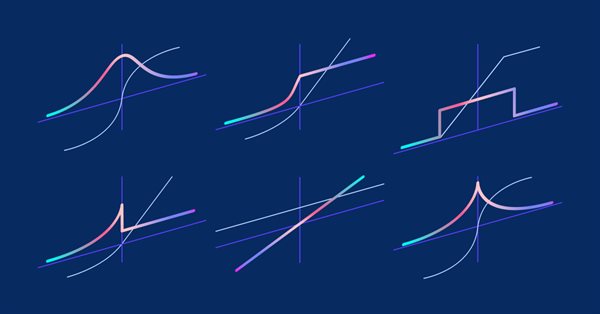

Matrices and vectors in MQL5: Activation functions

Here we will describe only one of the aspects of machine learning - activation functions. In artificial neural networks, a neuron activation function calculates an output signal value based on the values of an input signal or a set of input signals. We will delve into the inner workings of the process.
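As a rough illustration of the idea in this teaser, a neuron's output can be sketched as an activation function applied to a weighted sum of its inputs. The snippet below is a minimal Python sketch, not the article's MQL5 code; the names `sigmoid`, `relu`, `softmax` and `neuron_output` are hypothetical helpers chosen for this example.

```python
import math
from typing import Callable, List


def sigmoid(x: float) -> float:
    """Map a real input into (0, 1); branches avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x: float) -> float:
    """Pass positive inputs through unchanged and clamp negatives to zero."""
    return max(0.0, x)


def softmax(values: List[float]) -> List[float]:
    """Turn a set of input signals into weights that sum to 1."""
    shift = max(values)  # subtracting the maximum keeps exp() in a safe range
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def neuron_output(inputs: List[float], weights: List[float], bias: float,
                  activation: Callable[[float], float]) -> float:
    """Hypothetical single neuron: activation(weighted sum of inputs + bias)."""
    z = sum(i * w for i, w in zip(inputs, weights)) + bias
    return activation(z)
```

For example, `neuron_output([1.0, 2.0], [0.5, 0.25], 0.0, sigmoid)` applies the sigmoid to the weighted sum 1.0, while `softmax` operates on a whole set of input signals at once.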

Experiments with neural networks (Part 2): Smart neural network optimization

In this article, I will use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 is approached as a self-sufficient tool for using neural networks in trading.

Neural networks made easy (Part 31): Evolutionary algorithms

In the previous article, we started exploring non-gradient optimization methods. We got acquainted with the genetic algorithm. Today, we will continue this topic and will consider another class of evolutionary algorithms.

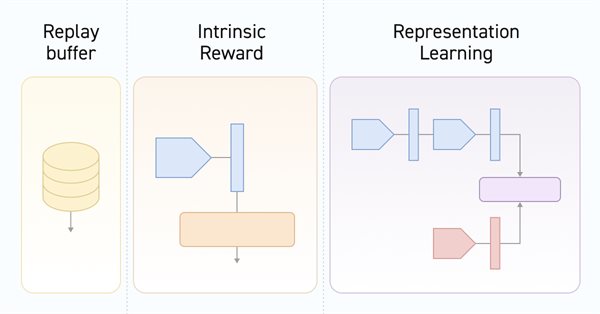

Neural networks made easy (Part 55): Contrastive intrinsic control (CIC)

Contrastive training is an unsupervised method of representation learning. Its goal is to train a model to highlight similarities and differences in data sets. In this article, we will talk about using contrastive training approaches to explore different Actor skills.

Neural networks made easy (Part 33): Quantile regression in distributed Q-learning

We continue studying distributed Q-learning. Today we will look at this approach from the other side. We will consider the possibility of using quantile regression to solve price prediction tasks.

Population optimization algorithms: Fish School Search (FSS)

Fish School Search (FSS) is a new optimization algorithm inspired by the behavior of fish in a school, most of which (up to 80%) swim in an organized community of relatives. It has been proven that fish aggregations play an important role in the efficiency of foraging and protection from predators.

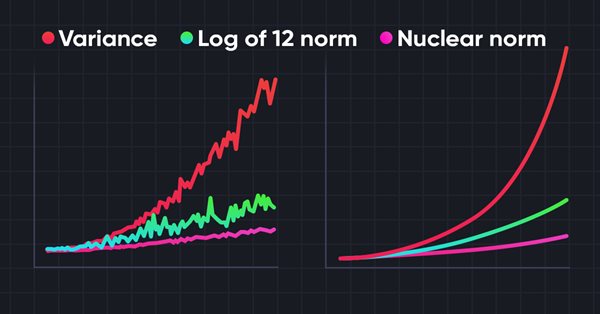

Neural networks made easy (Part 56): Using nuclear norm to drive research

The study of the environment in reinforcement learning is a pressing problem. We have already looked at some approaches previously. In this article, we will have a look at yet another method based on maximizing the nuclear norm. It allows agents to identify environmental states with a high degree of novelty and diversity.

Neural networks made easy (Part 54): Using random encoder for efficient research (RE3)

Whenever we consider reinforcement learning methods, we are faced with the issue of efficiently exploring the environment. Solving this issue often leads to complication of the algorithm and training of additional models. In this article, we will look at an alternative approach to solving this problem.

Experiments with neural networks (Part 3): Practical application

In this article series, I use experimentation and non-standard approaches to develop a profitable trading system and check whether neural networks can be of any help for traders. MetaTrader 5 is approached as a self-sufficient tool for using neural networks in trading.

Population optimization algorithms: Invasive Weed Optimization (IWO)

The amazing ability of weeds to survive in a wide variety of conditions has become the idea for a powerful optimization algorithm. IWO is one of the best algorithms among the previously reviewed ones.

Data Science and Machine Learning (Part 21): Unlocking Neural Networks, Optimization algorithms demystified

Dive into the heart of neural networks as we demystify the optimization algorithms used inside the neural network. In this article, discover the key techniques that unlock the full potential of neural networks, propelling your models to new heights of accuracy and efficiency.

Neural networks made easy (Part 37): Sparse Attention

In the previous article, we discussed relational models which use attention mechanisms in their architecture. One of the specific features of these models is the intensive utilization of computing resources. In this article, we will consider one of the mechanisms for reducing the number of computational operations inside the Self-Attention block. This will increase the general performance of the model.

Filtering and feature extraction in the frequency domain

In this article we explore the application of digital filters on time series represented in the frequency domain so as to extract unique features that may be useful to prediction models.

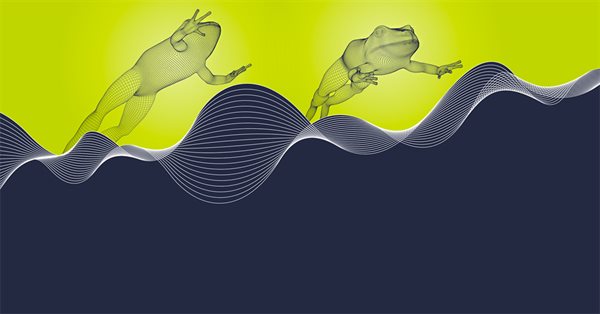

Population optimization algorithms: Shuffled Frog-Leaping algorithm (SFL)

The article presents a detailed description of the shuffled frog-leaping (SFL) algorithm and its capabilities in solving optimization problems. The SFL algorithm is inspired by the behavior of frogs in their natural environment and offers a new approach to function optimization. The SFL algorithm is an efficient and flexible tool capable of processing a variety of data types and achieving optimal solutions.

Population optimization algorithms: ElectroMagnetism-like algorithm (EM)

The article describes the principles, methods and possibilities of using the Electromagnetic Algorithm in various optimization problems. The EM algorithm is an efficient optimization tool capable of working with large amounts of data and multidimensional functions.

Frequency domain representations of time series: The Power Spectrum

In this article we discuss methods for analyzing time series in the frequency domain, emphasizing the utility of examining the power spectra of time series when building predictive models. We also discuss some of the useful perspectives gained by analyzing time series in the frequency domain using the discrete Fourier transform (DFT).

Data label for time series mining (Part 3): Example for using label data

This series of articles introduces several time series labeling methods that can create data suitable for most artificial intelligence models. Labeling data according to specific needs can bring the trained model more in line with the expected design, improve its accuracy, and even help the model make a qualitative leap!

MQL5 Wizard techniques you should know (Part 04): Linear Discriminant Analysis

Today's trader is a philomath who is almost always looking up new ideas, trying them out, and choosing to modify or discard them; an exploratory process that should cost a fair amount of diligence. This series of articles proposes that the MQL5 Wizard should be a mainstay for traders in this effort.

MQL5 Wizard Techniques you should know (Part 09): Pairing K-Means Clustering with Fractal Waves

K-Means clustering approaches the grouping of data points as a process that initially focuses on the macro view of a data set, using randomly generated cluster centroids, before zooming in and adjusting these centroids to accurately represent the data set. We will look at this and exploit a few of its use cases.

Neural networks made easy (Part 18): Association rules

As a continuation of this series of articles, let's consider another type of problems within unsupervised learning methods: mining association rules. This problem type was first used in retail, namely supermarkets, to analyze market baskets. In this article, we will talk about the applicability of such algorithms in trading.

Category Theory in MQL5 (Part 15) : Functors with Graphs

This article on Category Theory implementation in MQL5 continues the series by looking at functors, this time as a bridge between graphs and a set. We revisit calendar data and, despite its limitations in Strategy Tester use, make the case for using functors in forecasting volatility with the help of correlation.

Data Science and Machine Learning (Part 18): The battle of Mastering Market Complexity, Truncated SVD Versus NMF

Truncated Singular Value Decomposition (SVD) and Non-Negative Matrix Factorization (NMF) are dimensionality reduction techniques. They both play significant roles in shaping data-driven trading strategies. Discover the art of dimensionality reduction, unraveling insights, and optimizing quantitative analyses for an informed approach to navigating the intricacies of financial markets.

Neural networks made easy (Part 17): Dimensionality reduction

In this part we continue discussing Artificial Intelligence models. Namely, we study unsupervised learning algorithms. We have already discussed one of the clustering algorithms. In this article, I am sharing a variant of solving problems related to dimensionality reduction.

Measuring Indicator Information

Machine learning has become a popular method for strategy development. While there has been more emphasis on maximizing profitability and prediction accuracy, the importance of processing the data used to build predictive models has not received much attention. In this article, we consider using the concept of entropy to evaluate the appropriateness of indicators for predictive model building, as documented in the book Testing and Tuning Market Trading Systems by Timothy Masters.

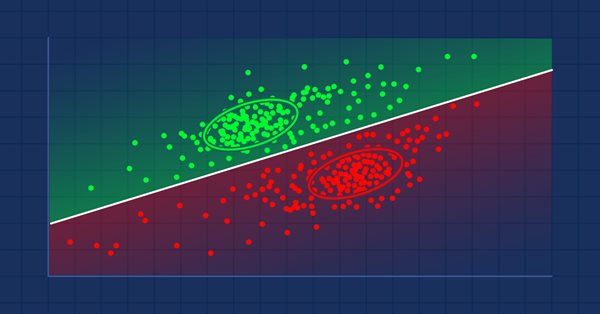

Data Science and Machine Learning (Part 15): SVM, A Must-Have Tool in Every Trader's Toolbox

Discover the indispensable role of Support Vector Machines (SVM) in shaping the future of trading. This comprehensive guide explores how SVM can elevate your trading strategies, enhance decision-making, and unlock new opportunities in the financial markets. Dive into the world of SVM with real-world applications, step-by-step tutorials, and expert insights. Equip yourself with the essential tool that can help you navigate the complexities of modern trading. Elevate your trading game with SVM—a must-have for every trader's toolbox.

Neural networks made easy (Part 58): Decision Transformer (DT)

We continue to explore reinforcement learning methods. In this article, I will focus on a slightly different algorithm that considers the Agent’s policy in the paradigm of constructing a sequence of actions.

Experiments with neural networks (Part 7): Passing indicators

Examples of passing indicators to a perceptron. The article describes general concepts and showcases the simplest ready-made Expert Advisor followed by the results of its optimization and forward test.