Regression models of the Scikit-learn Library and their export to ONNX

ONNX (Open Neural Network Exchange) is a format for describing and exchanging machine learning models, which makes it possible to transfer models between different machine learning frameworks. In deep learning and neural networks, the float32 data type is used most frequently, because it usually provides acceptable accuracy and good efficiency for training deep learning models.

Some classical machine learning models are difficult to represent with standard ONNX operators. Therefore, additional ML operators (ai.onnx.ml) were introduced to implement them in ONNX. It's worth noting that, according to the ONNX specification, the key operators in this set (LinearRegressor, SVMRegressor, TreeEnsembleRegressor) can accept various types of input data (tensor(float), tensor(double), tensor(int64), tensor(int32)), but they always return tensor(float) as output. The parameters of these operators are also stored as float (single-precision) numbers, which may limit the accuracy of calculations, especially if double-precision numbers were used to define the parameters of the original model.

This can lead to a loss of accuracy when models are converted or when different data types are used while converting and processing data in ONNX. Much depends on the converter, as we will see later: some models manage to bypass these limitations and ensure full portability of ONNX models, allowing them to work in double precision without losing accuracy. It's important to consider these characteristics when working with models and their ONNX representation, especially in cases where the accuracy of data representation matters.

Scikit-learn is one of the most popular and widely used libraries for machine learning in the Python community. It offers a wide range of algorithms, a user-friendly interface, and good documentation. The previous article, "Classification Models of the Scikit-learn Library and Their Export to ONNX", covered classification models.

In this article, we will explore the application of regression models in the Scikit-learn package, compute their parameters with double precision for the test dataset, attempt to convert them to the ONNX format for float and double precision, and use the obtained models in MQL5 programs. Additionally, we will compare the accuracy of the original models and their ONNX versions for float and double precision. Furthermore, we will examine the ONNX representation of regression models, which will provide a better understanding of their internal structure and operation.

Contents

- If it bothers you, welcome to contribute

- 1. Test Dataset

The script for displaying the test dataset

- 2. Regression Models

2.0. List of Scikit-learn Regression Models

- 2.1. Scikit-learn Regression Models that convert to ONNX models float and double

- 2.1.1. sklearn.linear_model.ARDRegression

2.1.1.1. Code for creating the ARDRegression model and exporting it to ONNX for float and double

2.1.1.2. MQL5 code for executing ONNX Models

2.1.1.3. ONNX representation of the ard_regression_float.onnx and ard_regression_double.onnx

- 2.1.2. sklearn.linear_model.BayesianRidge

2.1.2.1. Code for creating the BayesianRidge model and exporting it to ONNX for float and double

2.1.2.2. MQL5 code for executing ONNX Models

2.1.2.3. ONNX representation of the bayesian_ridge_float.onnx and bayesian_ridge_double.onnx

- 2.1.3. sklearn.linear_model.ElasticNet

2.1.3.1. Code for creating the ElasticNet model and exporting it to ONNX for float and double

2.1.3.2. MQL5 code for executing ONNX Models

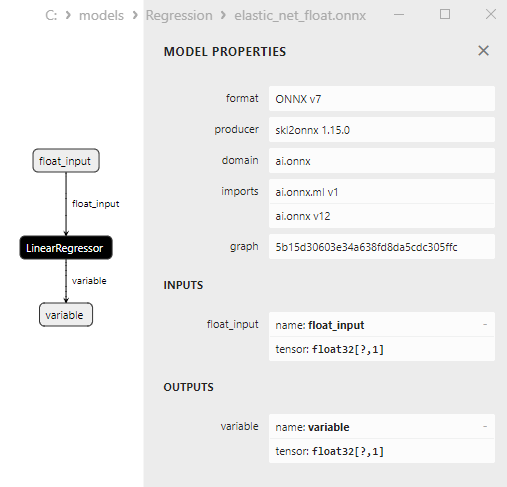

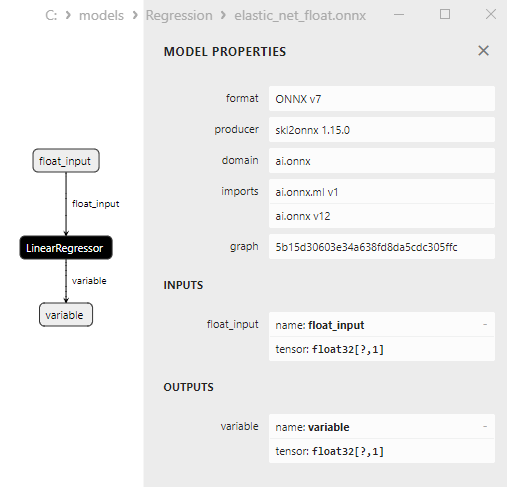

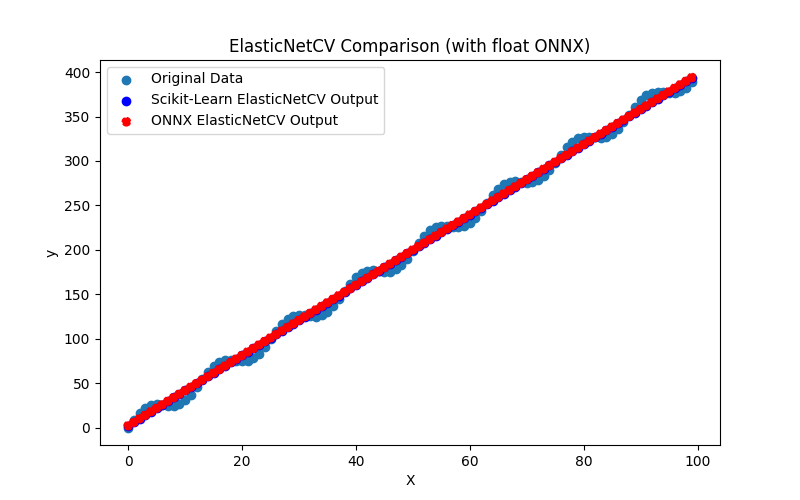

2.1.3.3. ONNX representation of the elastic_net_float.onnx and elastic_net_double.onnx

- 2.1.4. sklearn.linear_model.ElasticNetCV

2.1.4.1. Code for creating the ElasticNetCV model and exporting it to ONNX for float and double

2.1.4.2. MQL5 code for executing ONNX Models

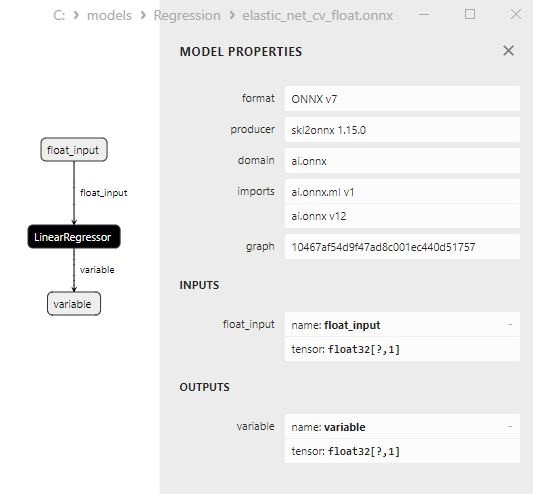

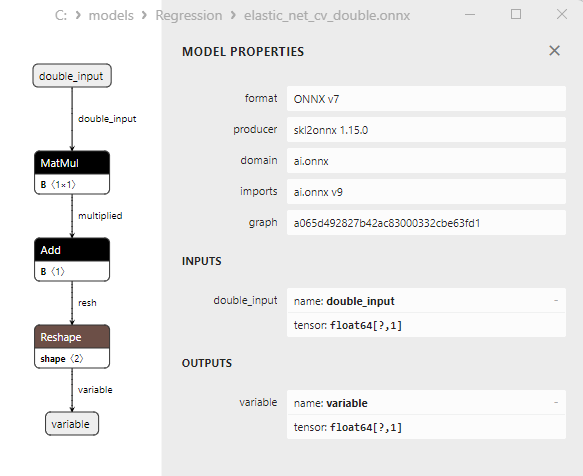

2.1.4.3. ONNX representation of the elastic_net_cv_float.onnx and elastic_net_cv_double.onnx

- 2.1.5. sklearn.linear_model.HuberRegressor

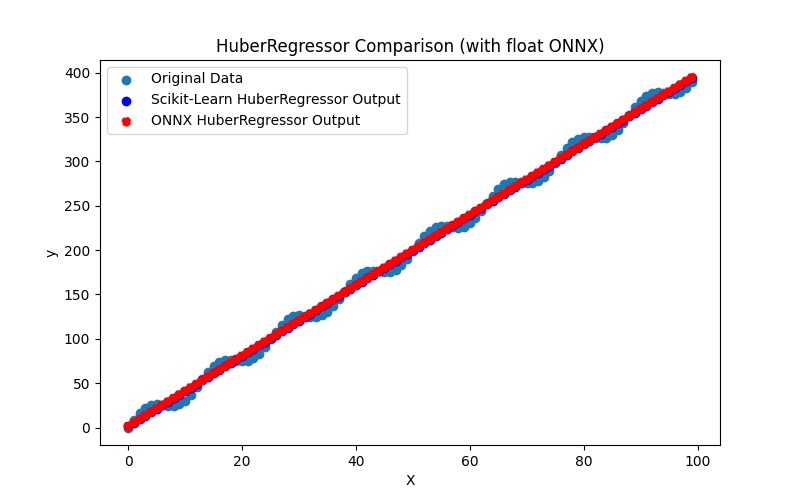

2.1.5.1. Code for creating the HuberRegressor model and exporting it to ONNX for float and double

2.1.5.2. MQL5 code for executing ONNX Models

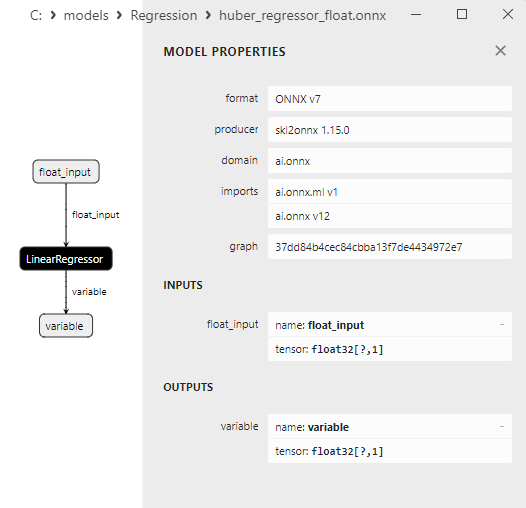

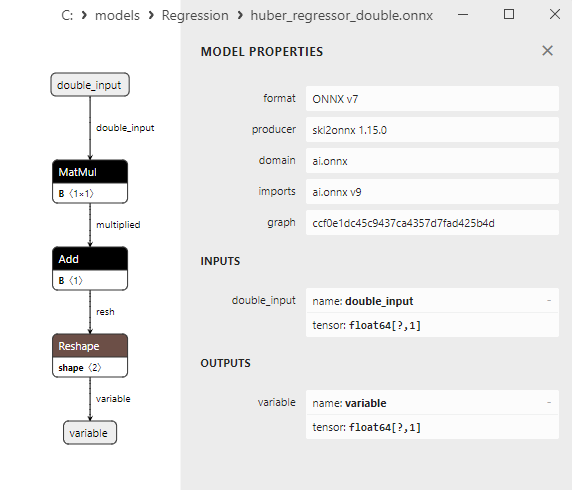

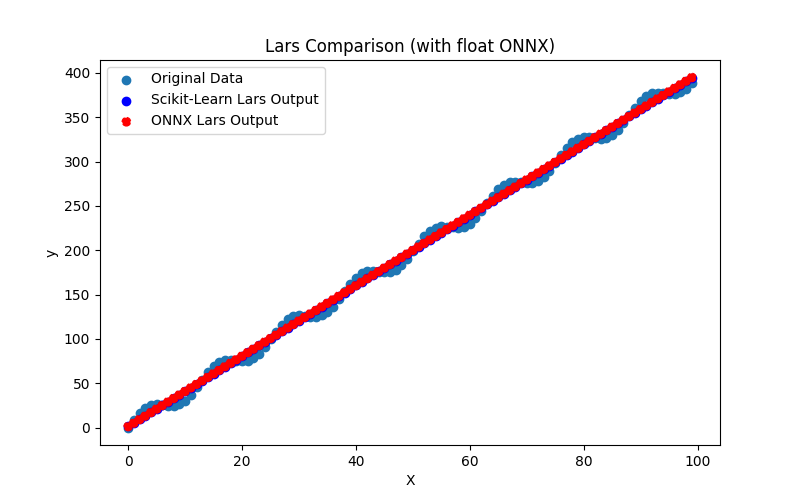

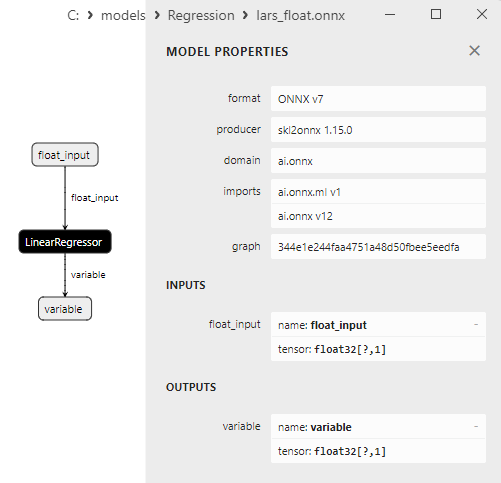

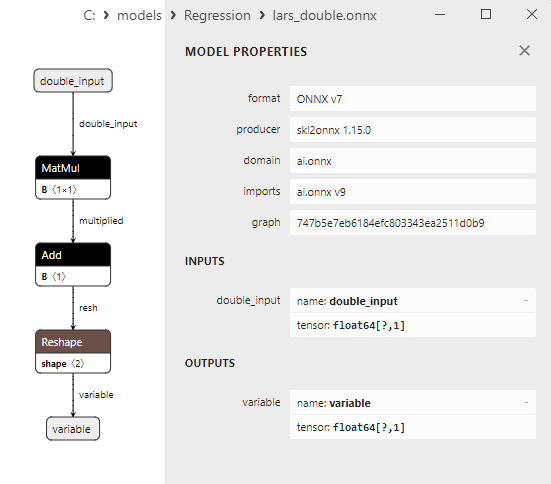

2.1.5.3. ONNX representation of the huber_regressor_float.onnx and huber_regressor_double.onnx

- 2.1.6. sklearn.linear_model.Lars

2.1.6.1. Code for creating the Lars model and exporting it to ONNX for float and double

2.1.6.2. MQL5 code for executing ONNX Models

2.1.6.3. ONNX representation of the lars_float.onnx and lars_double.onnx

- 2.1.7. sklearn.linear_model.LarsCV

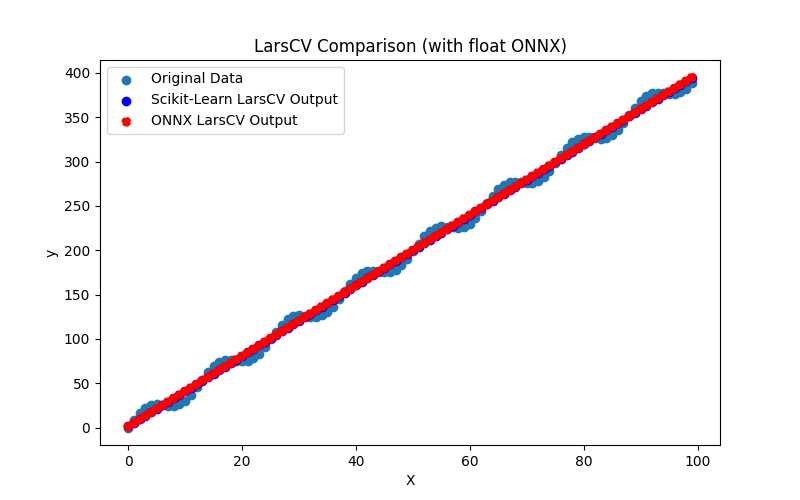

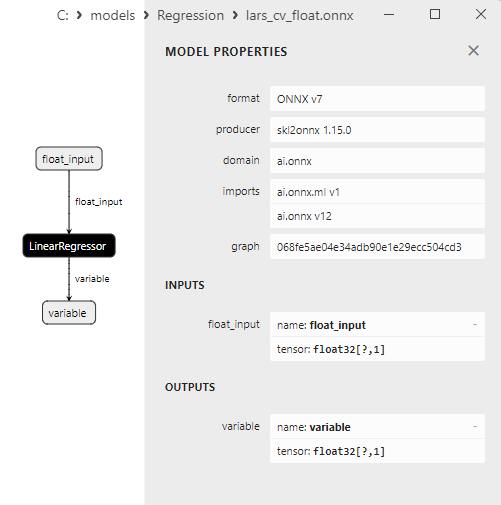

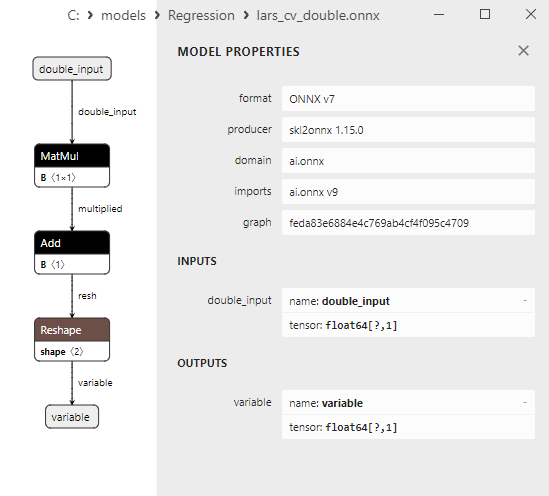

2.1.7.1. Code for creating the LarsCV model and exporting it to ONNX for float and double

2.1.7.2. MQL5 code for executing ONNX Models

2.1.7.3. ONNX representation of the lars_cv_float.onnx and lars_cv_double.onnx

- 2.1.8. sklearn.linear_model.Lasso

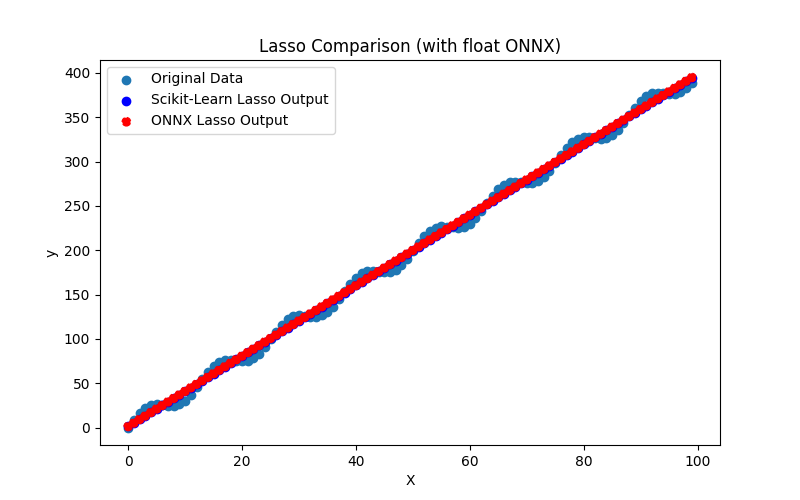

2.1.8.1. Code for creating the Lasso model and exporting it to ONNX for float and double

2.1.8.2. MQL5 code for executing ONNX Models

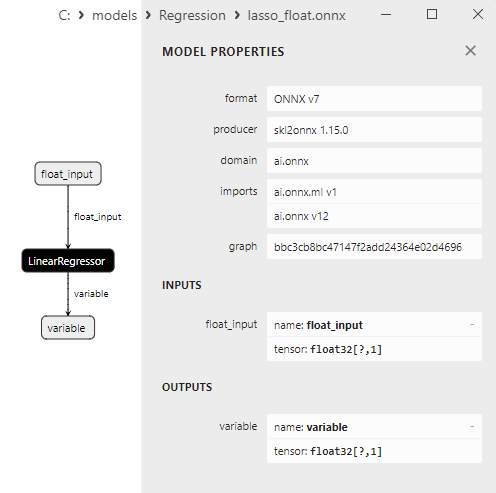

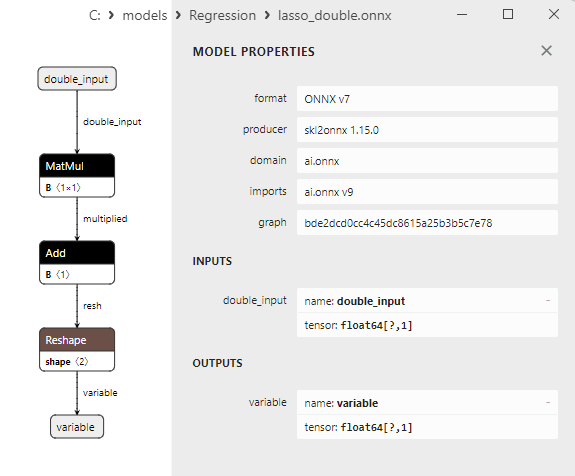

2.1.8.3. ONNX representation of the lasso_float.onnx and lasso_double.onnx

- 2.1.9. sklearn.linear_model.LassoCV

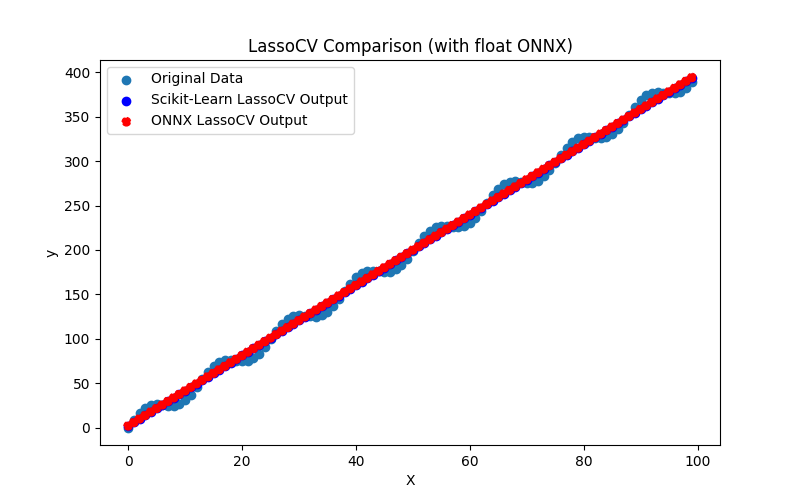

2.1.9.1. Code for creating the LassoCV model and exporting it to ONNX for float and double

2.1.9.2. MQL5 code for executing ONNX Models

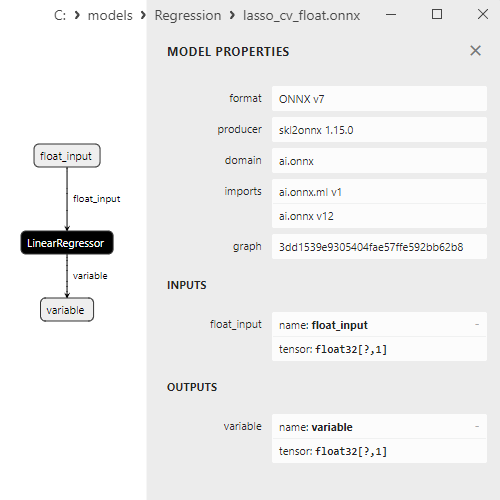

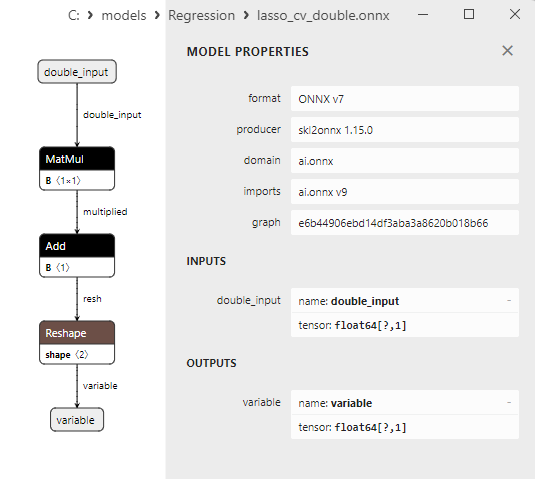

2.1.9.3. ONNX representation of the lasso_cv_float.onnx and lasso_cv_double.onnx

- 2.1.10. sklearn.linear_model.LassoLars

2.1.10.1. Code for creating the LassoLars model and exporting it to ONNX for float and double

2.1.10.2. MQL5 code for executing ONNX Models

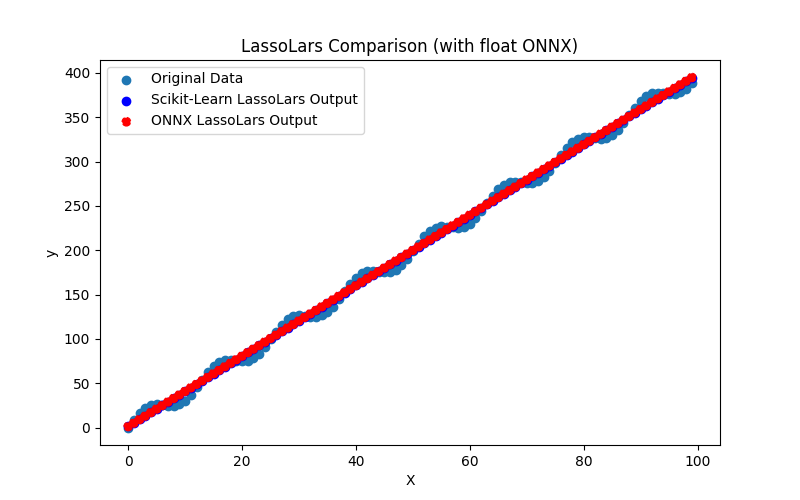

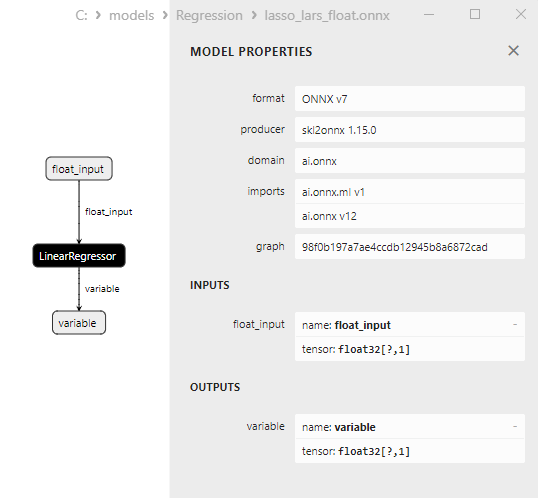

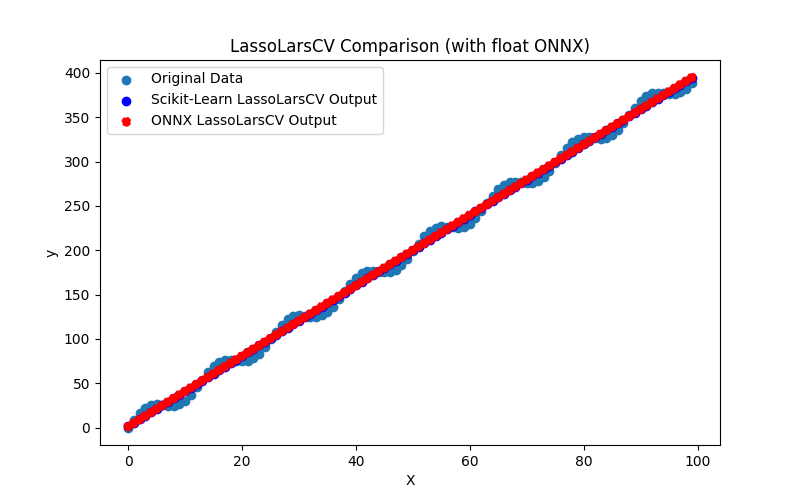

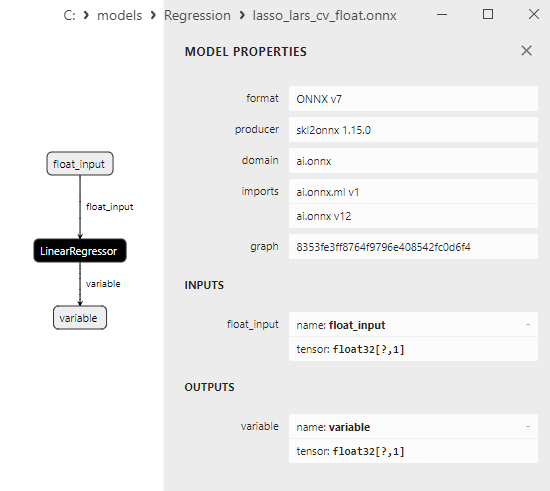

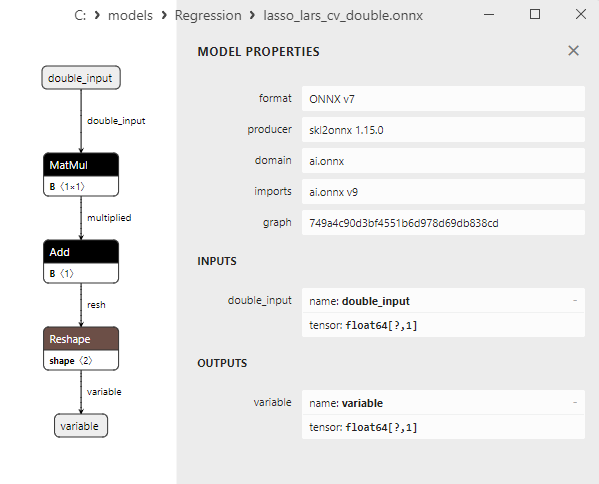

2.1.10.3. ONNX representation of the lasso_lars_float.onnx and lasso_lars_double.onnx

- 2.1.11. sklearn.linear_model.LassoLarsCV

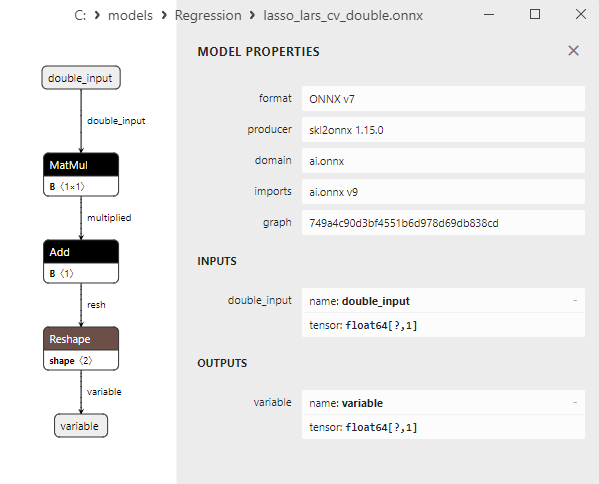

2.1.11.1. Code for creating the LassoLarsCV model and exporting it to ONNX for float and double

2.1.11.2. MQL5 code for executing ONNX Models

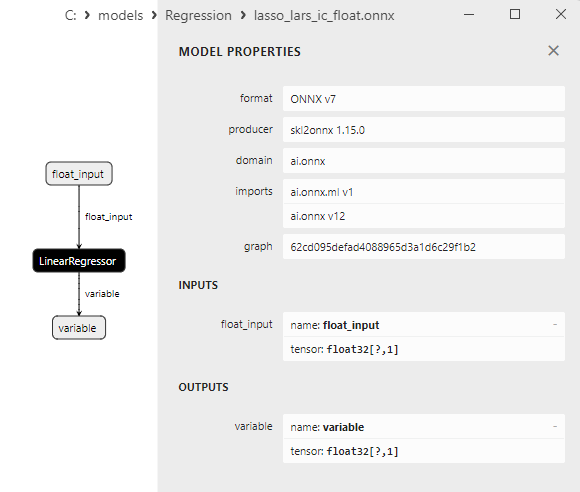

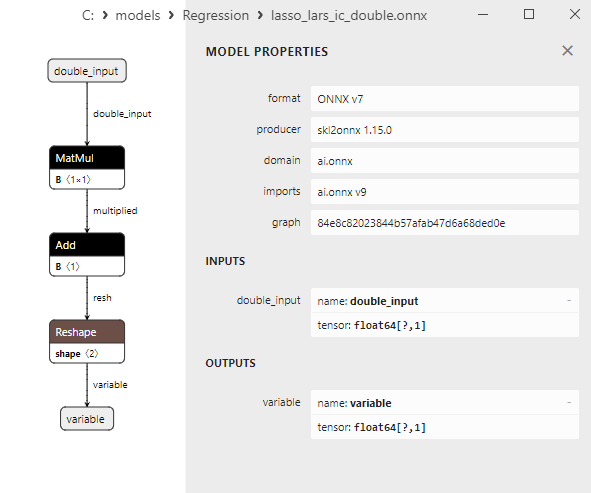

2.1.11.3. ONNX representation of the lasso_lars_cv_float.onnx and lasso_lars_cv_double.onnx

- 2.1.12. sklearn.linear_model.LassoLarsIC

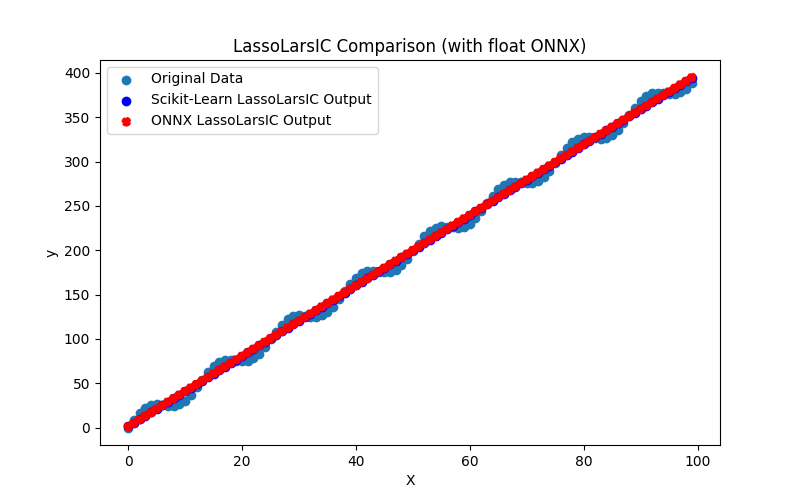

2.1.12.1. Code for creating the LassoLarsIC model and exporting it to ONNX for float and double

2.1.12.2. MQL5 code for executing ONNX Models

2.1.12.3. ONNX representation of the lasso_lars_ic_float.onnx and lasso_lars_ic_double.onnx

- 2.1.13. sklearn.linear_model.LinearRegression

2.1.13.1. Code for creating the LinearRegression model and exporting it to ONNX for float and double

2.1.13.2. MQL5 code for executing ONNX Models

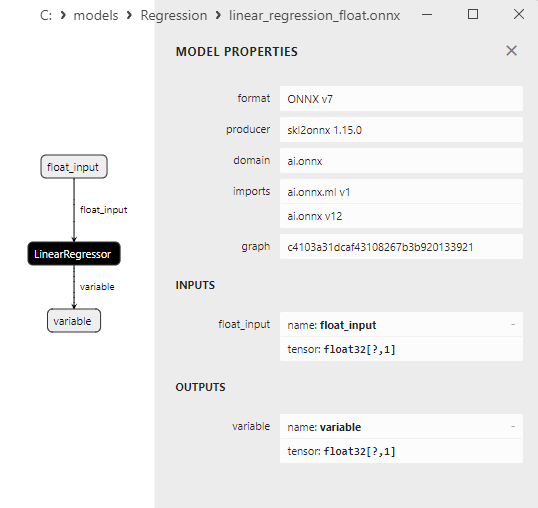

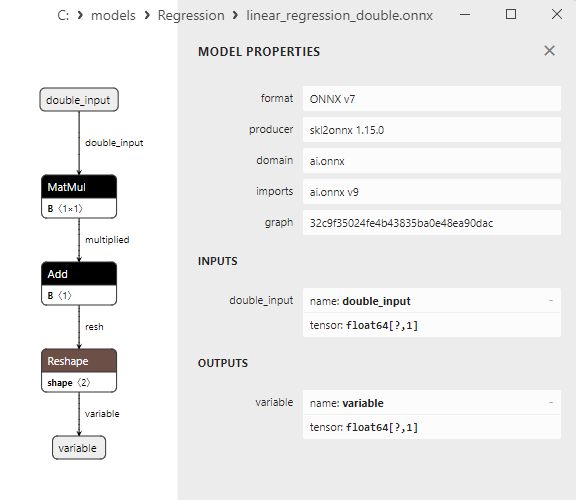

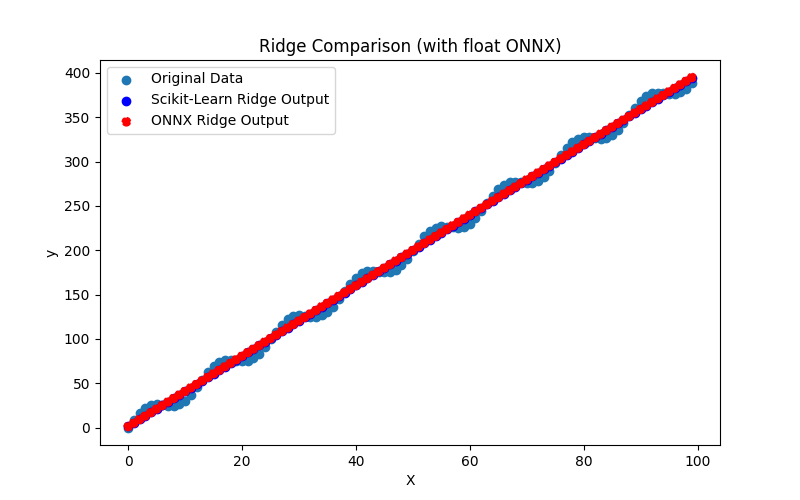

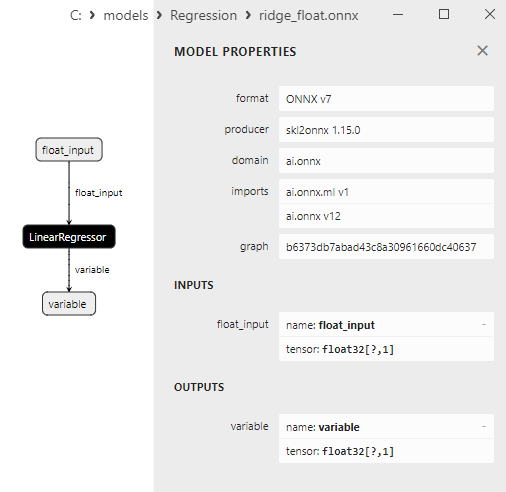

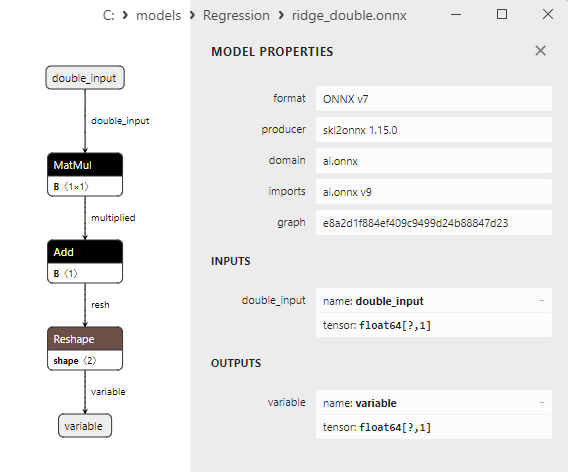

2.1.13.3. ONNX representation of the linear_regression_float.onnx and linear_regression_double.onnx

- 2.1.14. sklearn.linear_model.Ridge

2.1.14.1. Code for creating the Ridge model and exporting it to ONNX for float and double

2.1.14.2. MQL5 code for executing ONNX Models

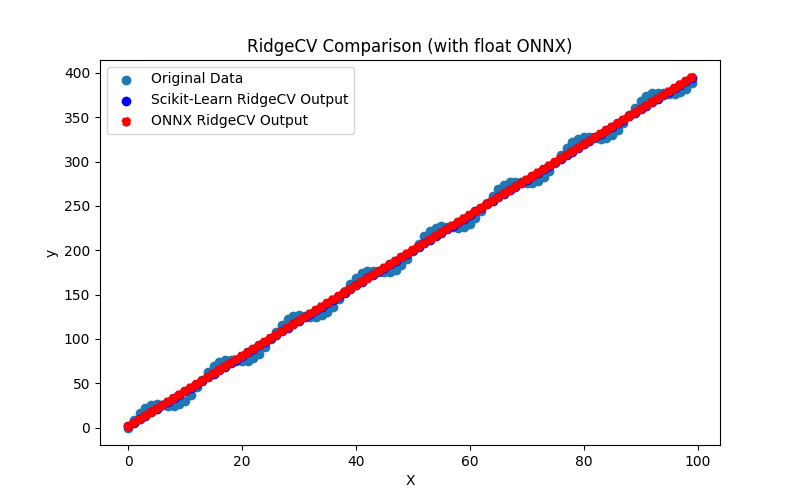

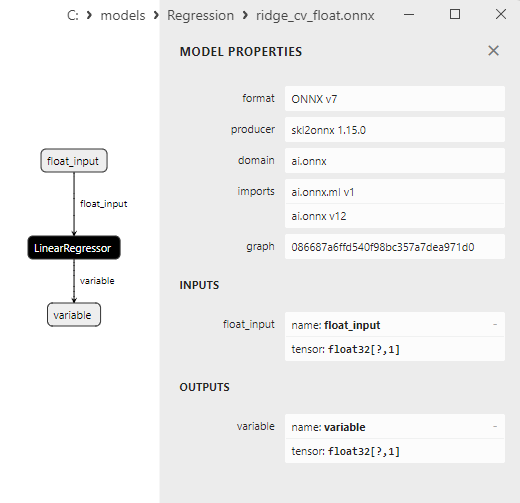

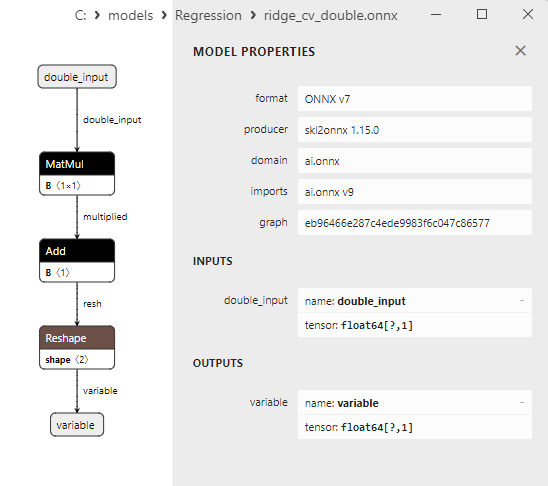

2.1.14.3. ONNX representation of the ridge_float.onnx and ridge_double.onnx

- 2.1.15. sklearn.linear_model.RidgeCV

2.1.15.1. Code for creating the RidgeCV model and exporting it to ONNX for float and double

2.1.15.2. MQL5 code for executing ONNX Models

2.1.15.3. ONNX representation of the ridge_cv_float.onnx and ridge_cv_double.onnx

- 2.1.16. sklearn.linear_model.OrthogonalMatchingPursuit

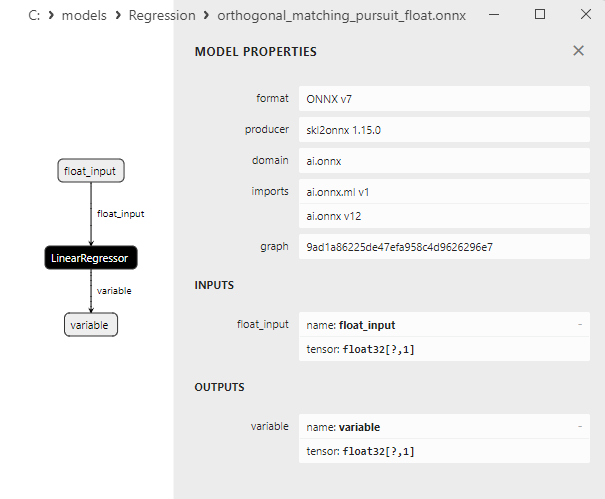

2.1.16.1. Code for creating the OrthogonalMatchingPursuit model and exporting it to ONNX for float and double

2.1.16.2. MQL5 code for executing ONNX Models

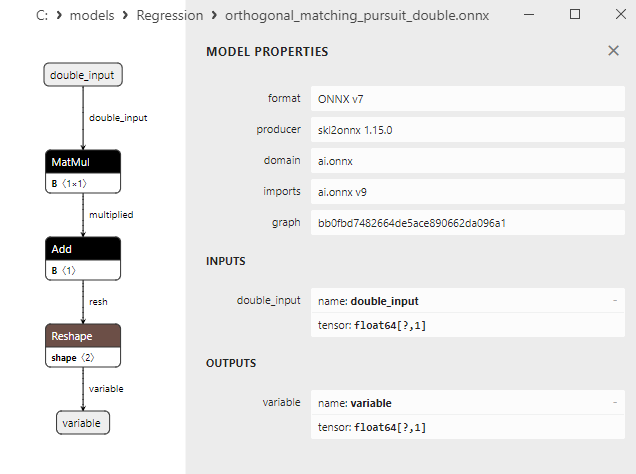

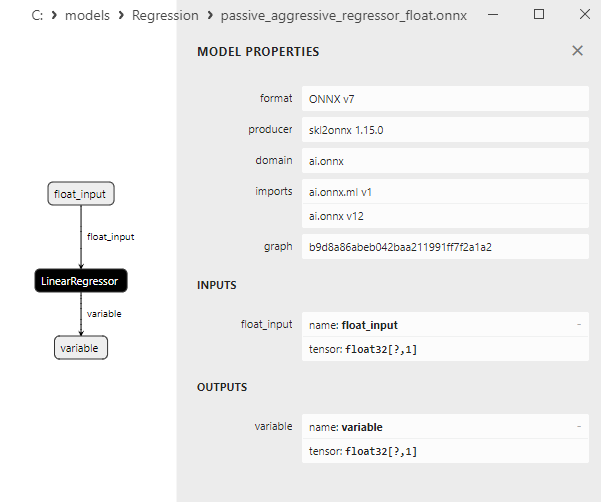

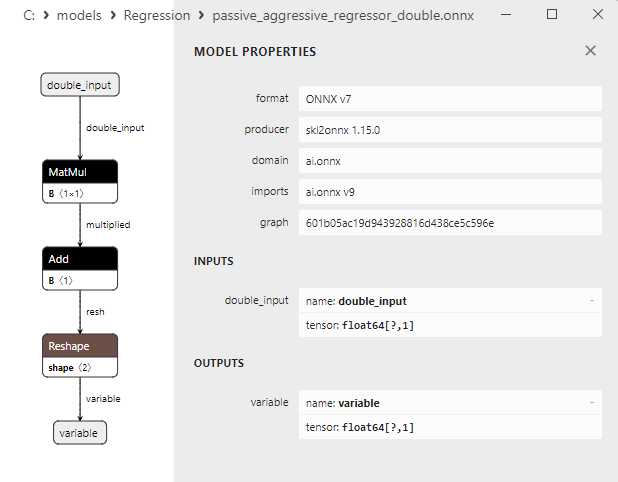

2.1.16.3. ONNX representation of the orthogonal_matching_pursuit_float.onnx and orthogonal_matching_pursuit_double.onnx

- 2.1.17. sklearn.linear_model.PassiveAggressiveRegressor

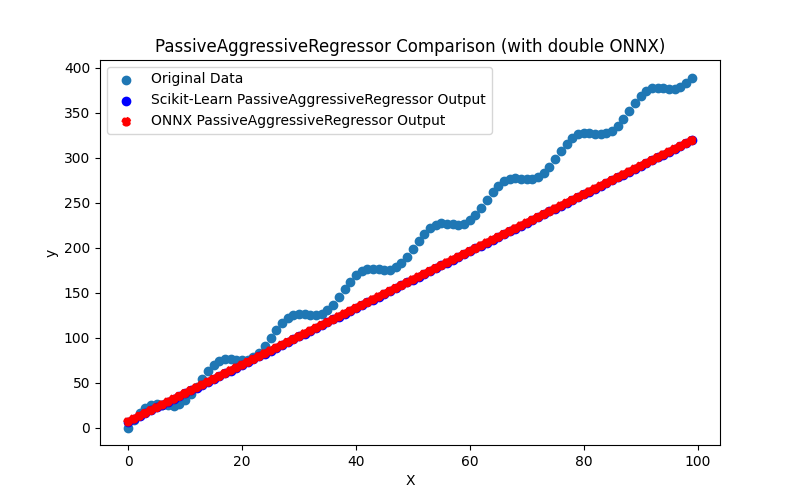

2.1.17.1. Code for creating the PassiveAggressiveRegressor model and exporting it to ONNX for float and double

2.1.17.2. MQL5 code for executing ONNX Models

2.1.17.3. ONNX representation of the passive_aggressive_regressor_float.onnx and passive_aggressive_regressor_double.onnx

- 2.1.18. sklearn.linear_model.QuantileRegressor

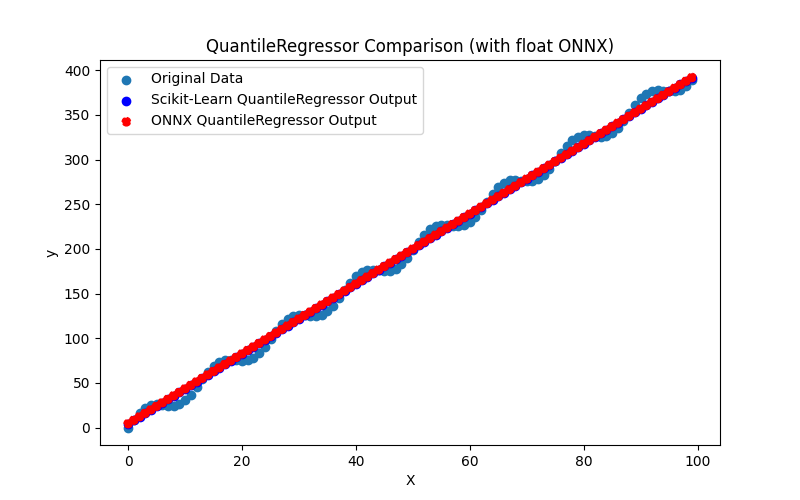

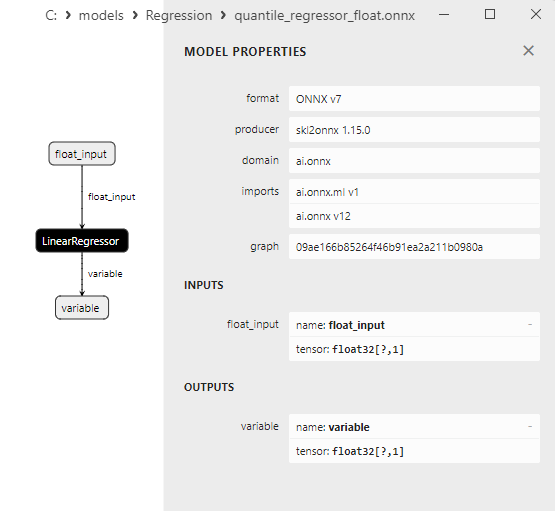

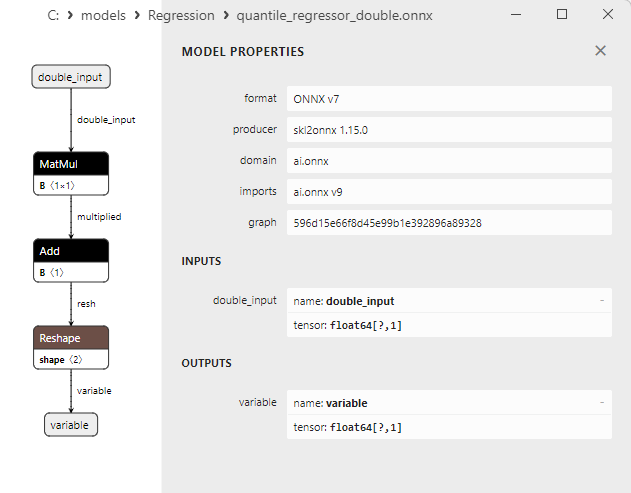

2.1.18.1. Code for creating the QuantileRegressor model and exporting it to ONNX for float and double

2.1.18.2. MQL5 code for executing ONNX Models

2.1.18.3. ONNX representation of the quantile_regressor_float.onnx and quantile_regressor_double.onnx

- 2.1.19. sklearn.linear_model.RANSACRegressor

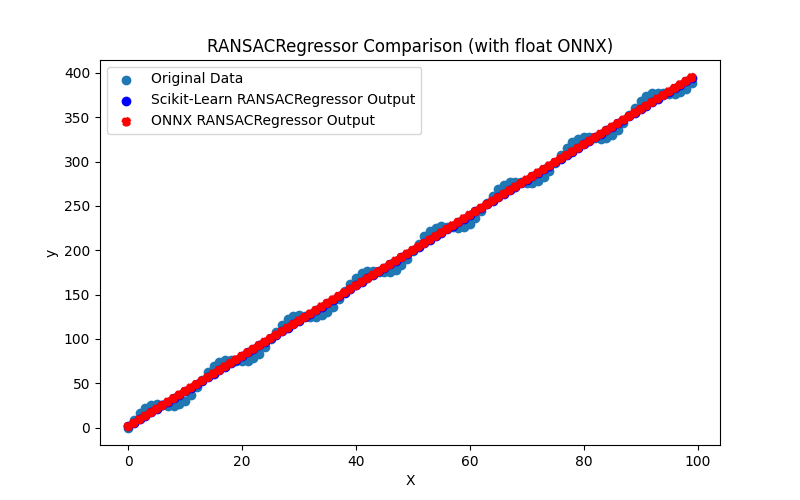

2.1.19.1. Code for creating the RANSACRegressor model and exporting it to ONNX for float and double

2.1.19.2. MQL5 code for executing ONNX Models

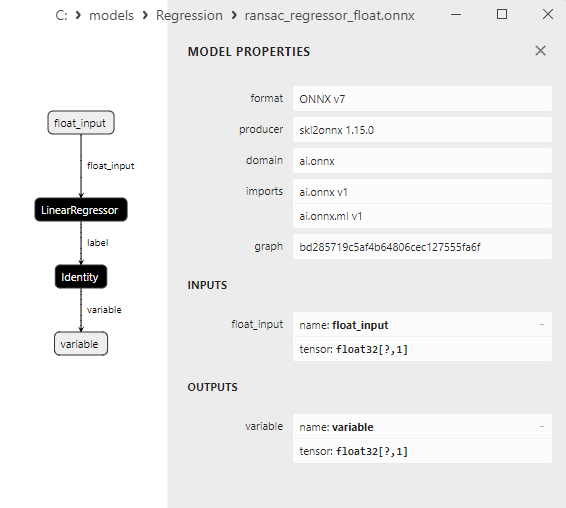

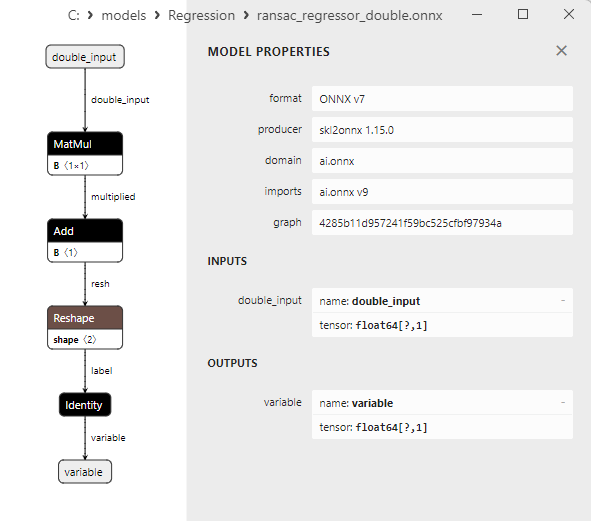

2.1.19.3. ONNX representation of the ransac_regressor_float.onnx and ransac_regressor_double.onnx

- 2.1.20. sklearn.linear_model.TheilSenRegressor

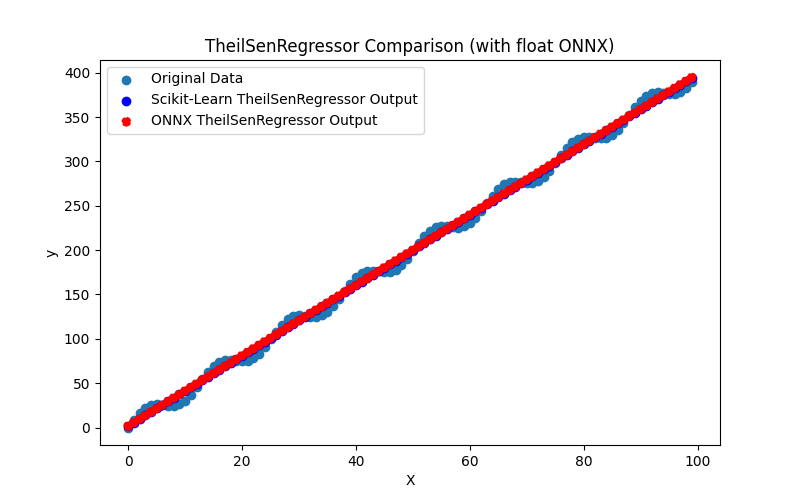

2.1.20.1. Code for creating the TheilSenRegressor model and exporting it to ONNX for float and double

2.1.20.2. MQL5 code for executing ONNX Models

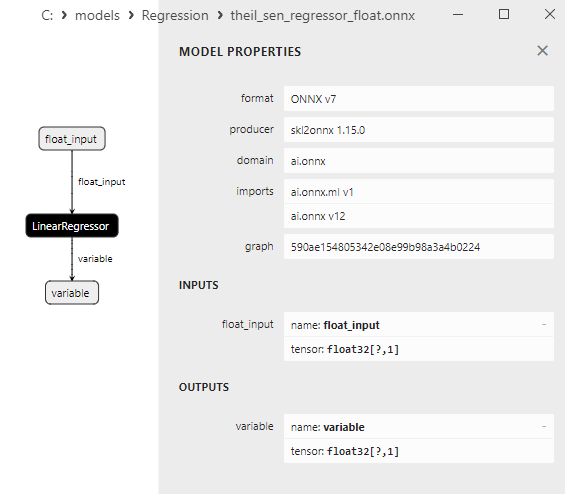

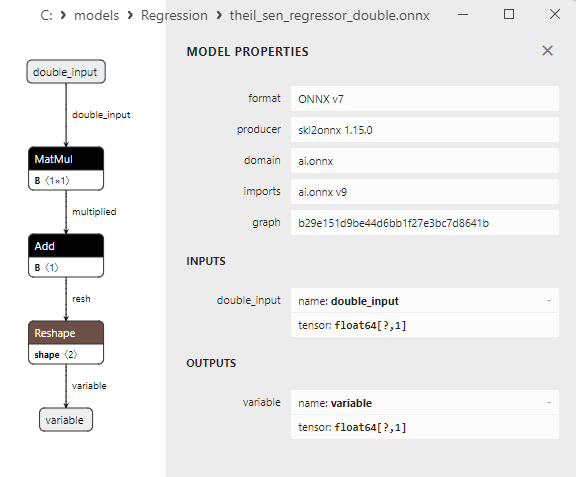

2.1.20.3. ONNX representation of the theil_sen_regressor_float.onnx and theil_sen_regressor_double.onnx

- 2.1.21. sklearn.svm.LinearSVR

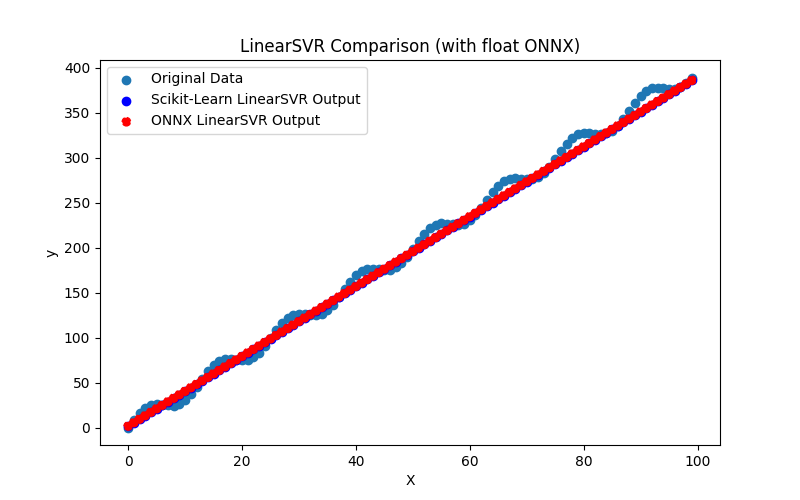

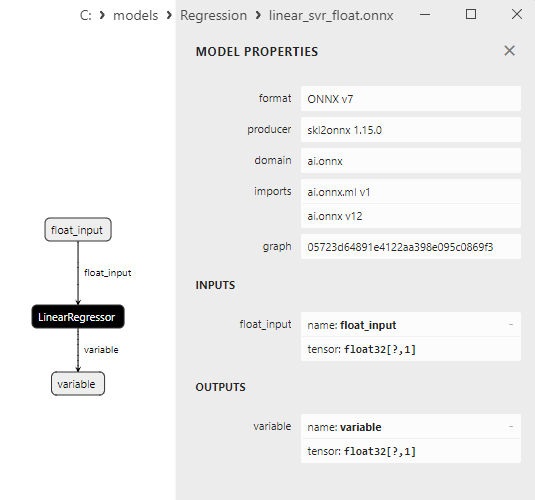

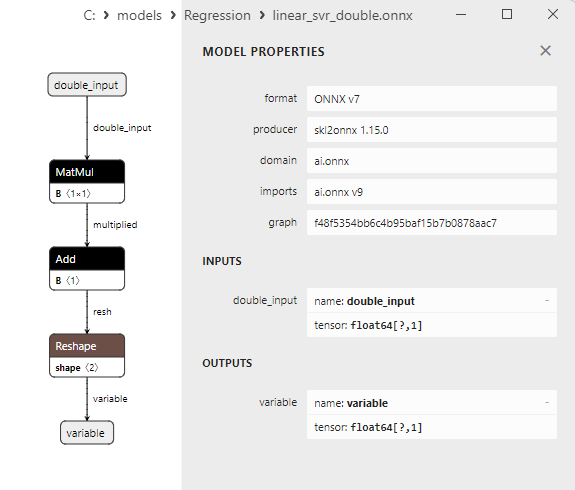

2.1.21.1. Code for creating the LinearSVR model and exporting it to ONNX for float and double

2.1.21.2. MQL5 code for executing ONNX Models

2.1.21.3. ONNX representation of the linear_svr_float.onnx and linear_svr_double.onnx

- 2.1.22. sklearn.neural_network.MLPRegressor

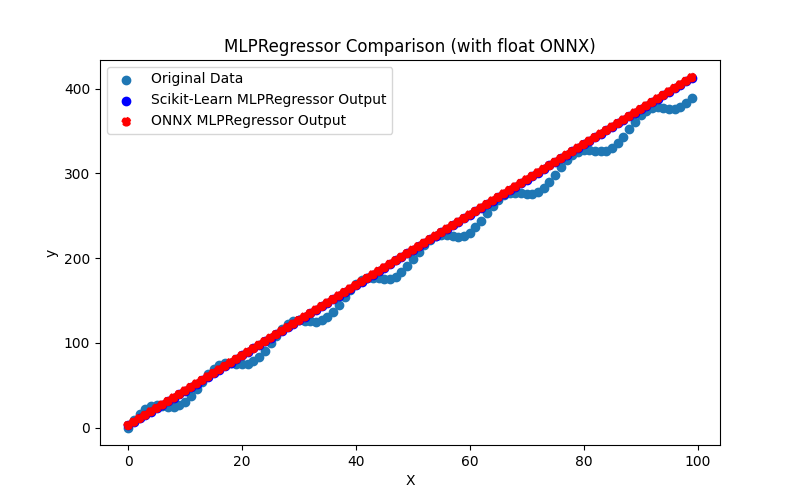

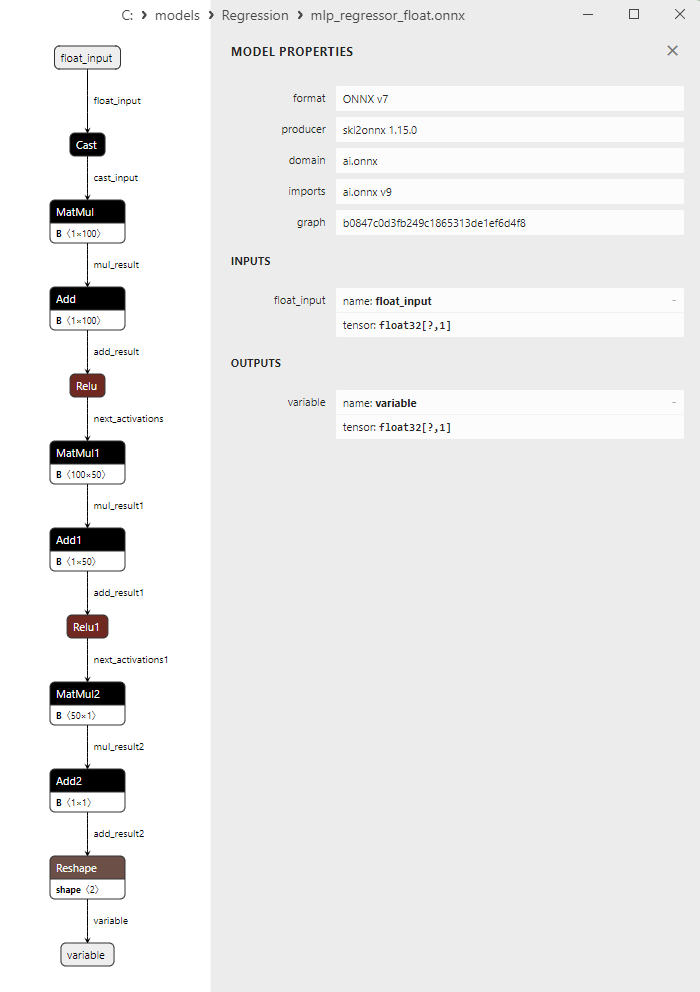

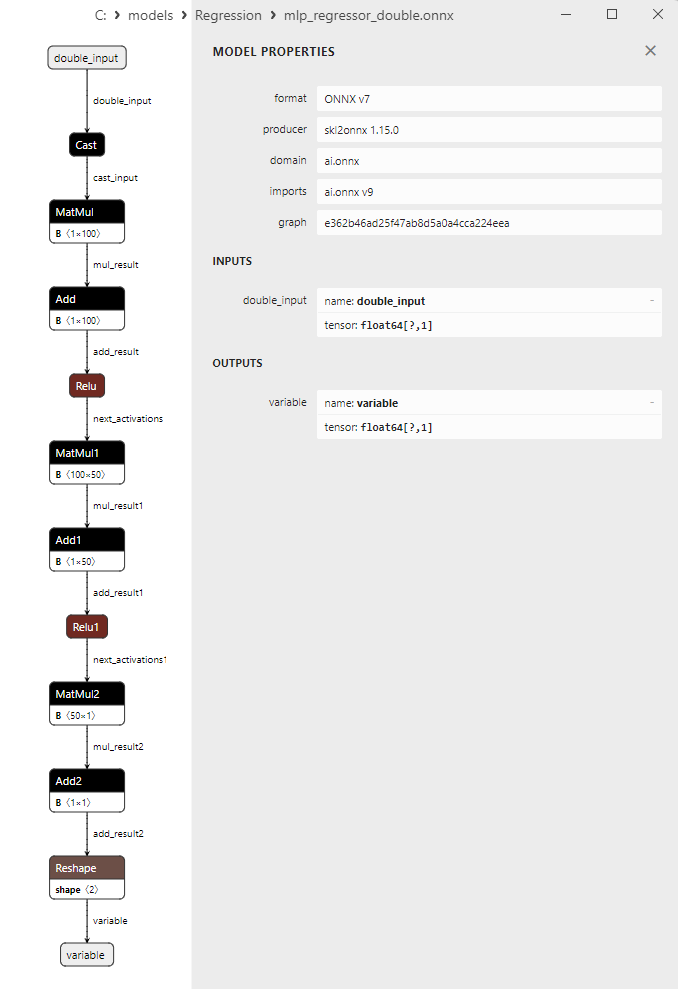

2.1.22.1. Code for creating the MLPRegressor model and exporting it to ONNX for float and double

2.1.22.2. MQL5 code for executing ONNX Models

2.1.22.3. ONNX representation of the mlp_regressor_float.onnx and mlp_regressor_double.onnx

- 2.1.23. sklearn.cross_decomposition.PLSRegression

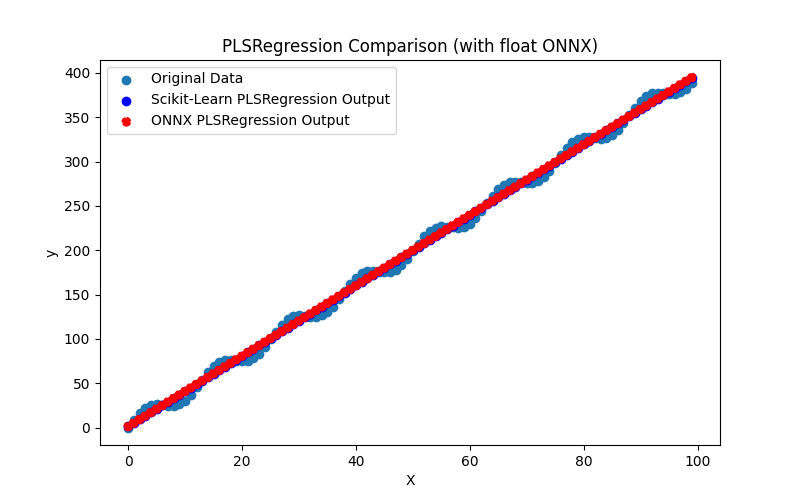

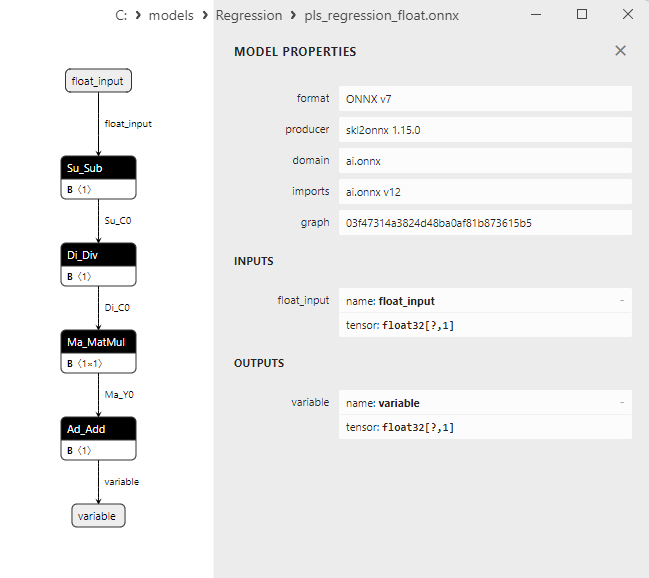

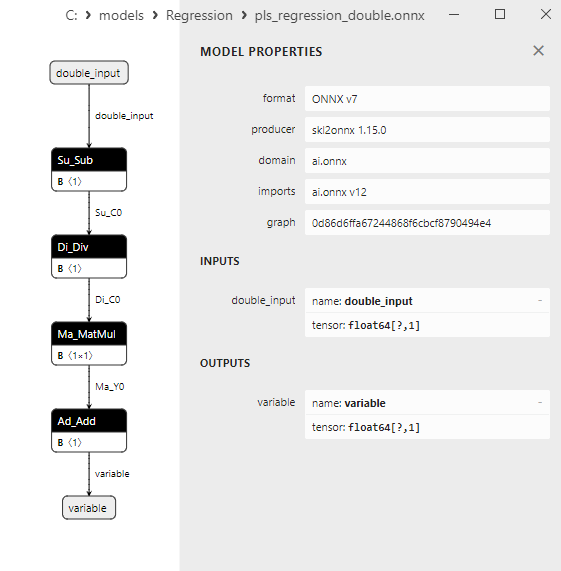

2.1.23.1. Code for creating the PLSRegression model and exporting it to ONNX for float and double

2.1.23.2. MQL5 code for executing ONNX Models

2.1.23.3. ONNX representation of the pls_regression_float.onnx and pls_regression_double.onnx

- 2.1.24. sklearn.linear_model.TweedieRegressor

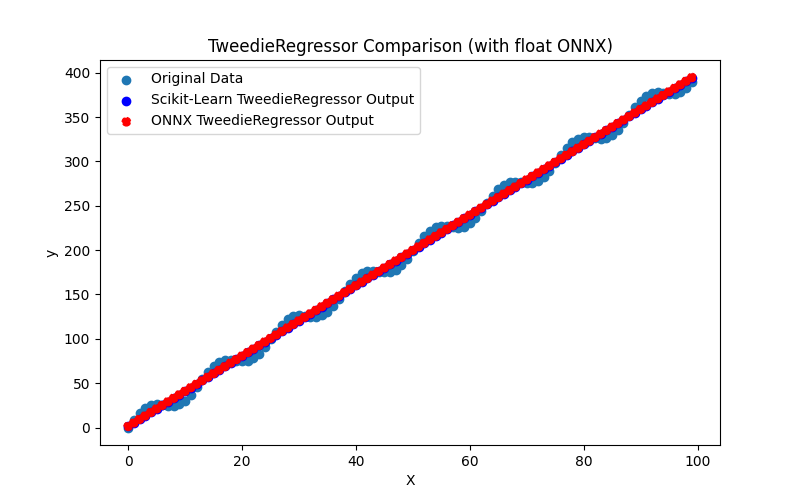

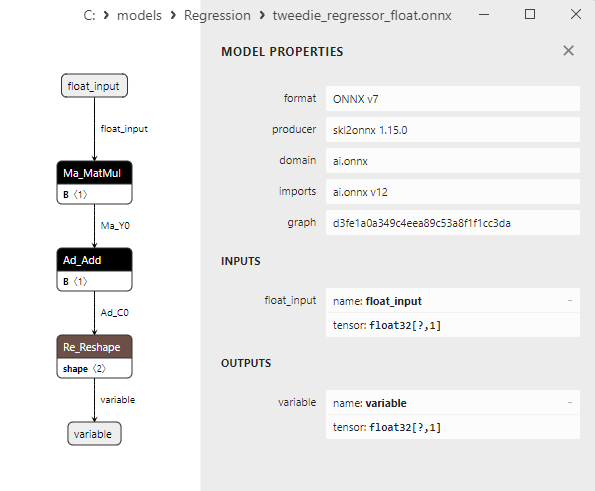

2.1.24.1. Code for creating the TweedieRegressor model and exporting it to ONNX for float and double

2.1.24.2. MQL5 code for executing ONNX Models

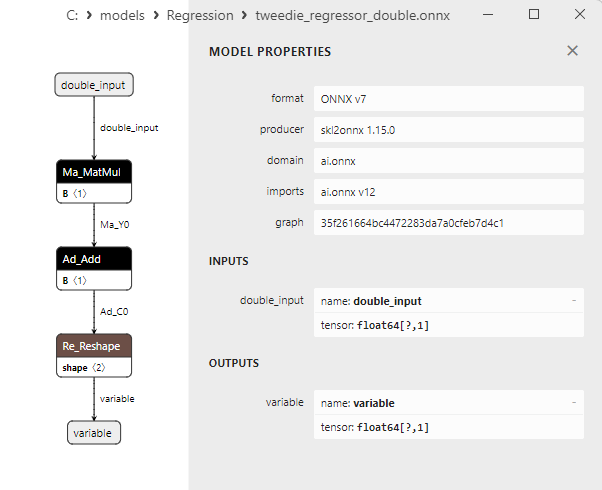

2.1.24.3. ONNX representation of the tweedie_regressor_float.onnx and tweedie_regressor_double.onnx

- 2.1.25. sklearn.linear_model.PoissonRegressor

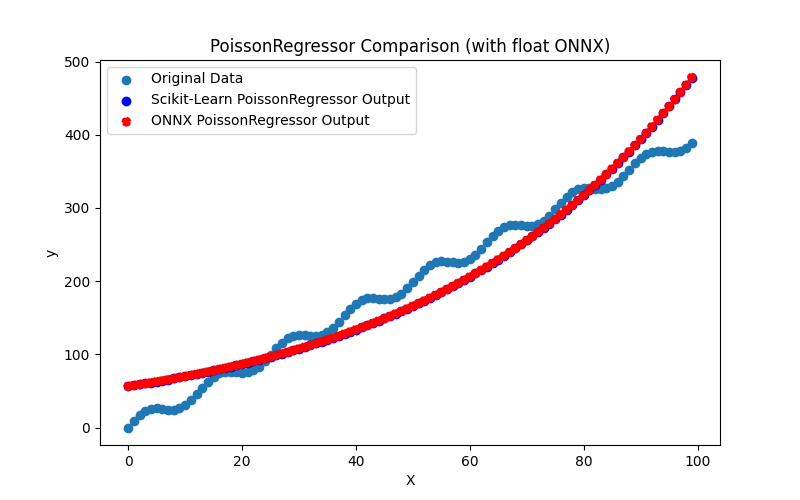

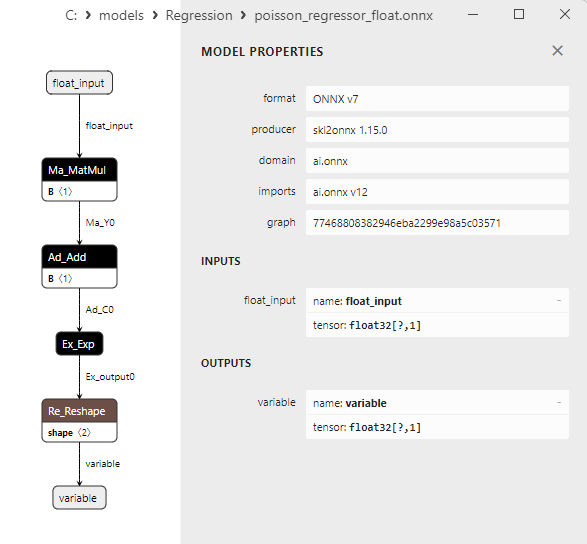

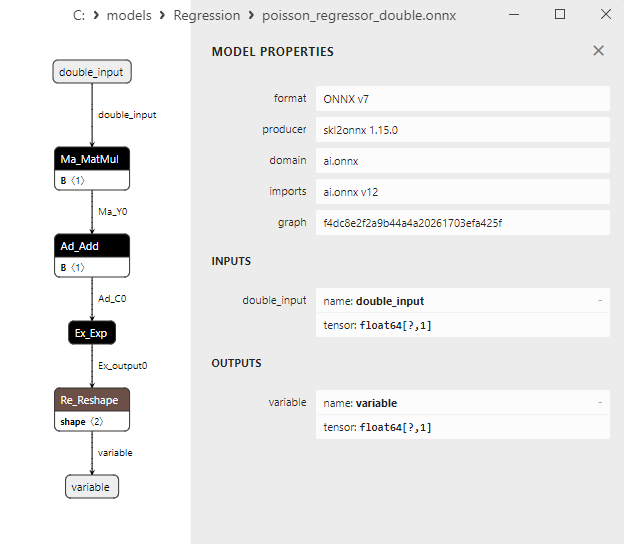

2.1.25.1. Code for creating the PoissonRegressor model and exporting it to ONNX for float and double

2.1.25.2. MQL5 code for executing ONNX Models

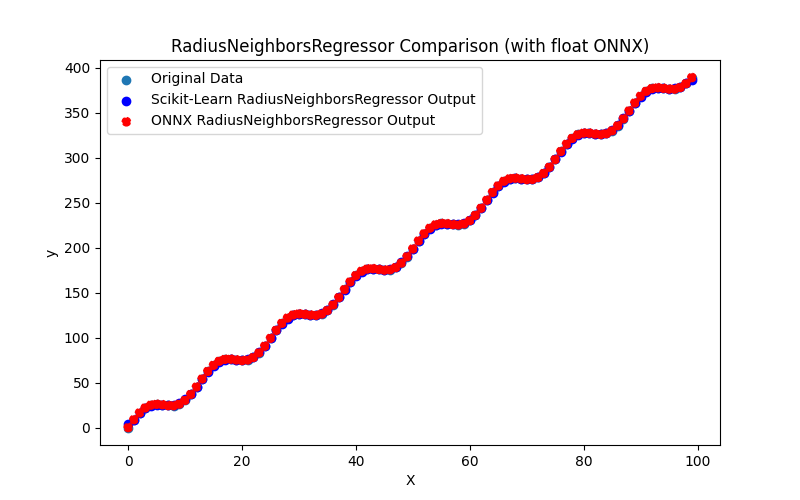

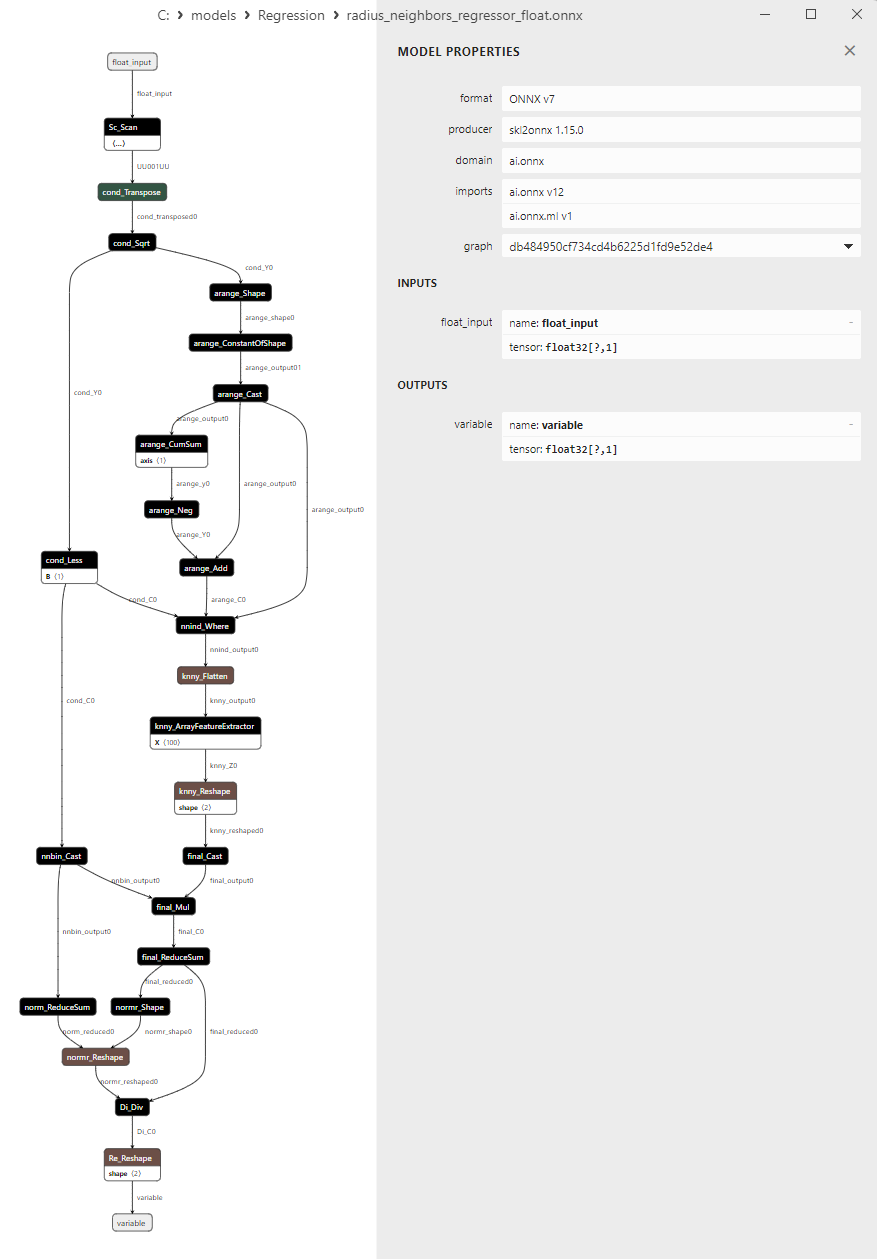

2.1.25.3. ONNX representation of the poisson_regressor_float.onnx and poisson_regressor_double.onnx

- 2.1.26. sklearn.neighbors.RadiusNeighborsRegressor

2.1.26.1. Code for creating the RadiusNeighborsRegressor model and exporting it to ONNX for float and double

2.1.26.2. MQL5 code for executing ONNX Models

2.1.26.3. ONNX representation of the radius_neighbors_regressor_float.onnx and radius_neighbors_regressor_double.onnx

- 2.1.27. sklearn.neighbors.KNeighborsRegressor

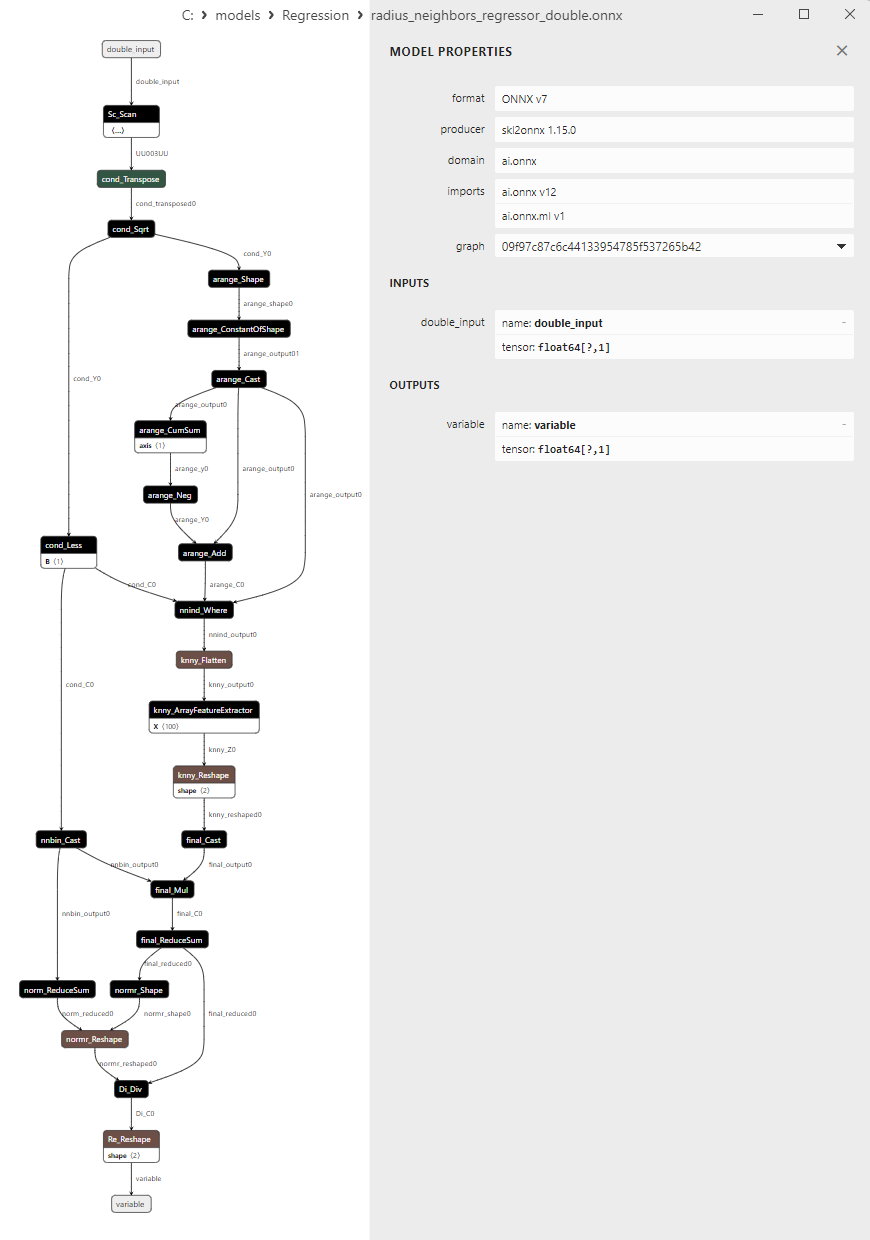

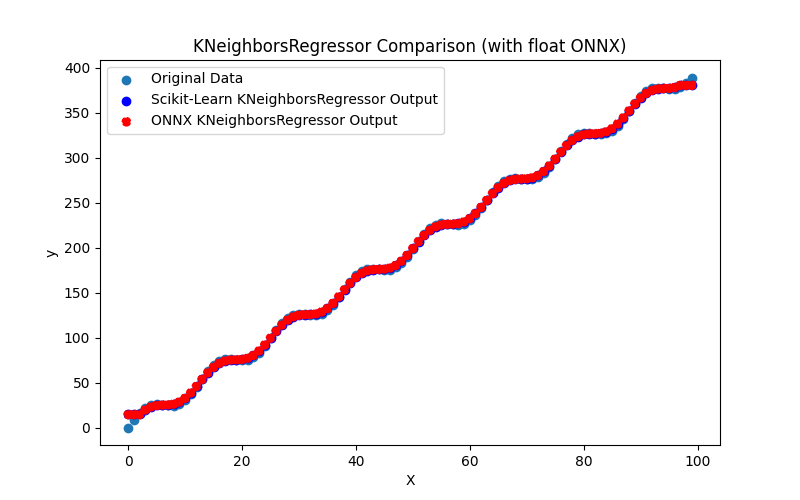

2.1.27.1. Code for creating the KNeighborsRegressor model and exporting it to ONNX for float and double

2.1.27.2. MQL5 code for executing ONNX Models

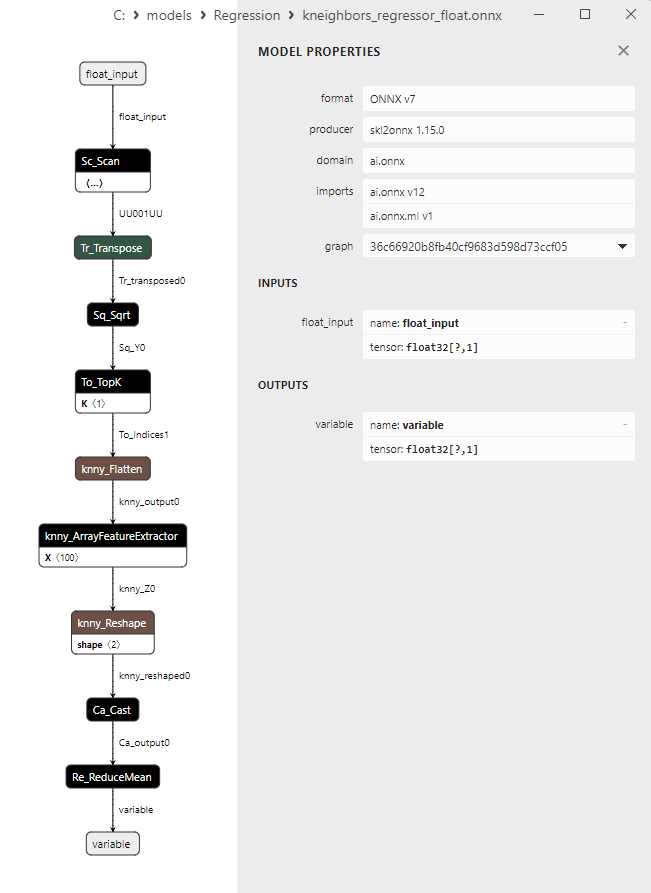

2.1.27.3. ONNX representation of the kneighbors_regressor_float.onnx and kneighbors_regressor_double.onnx

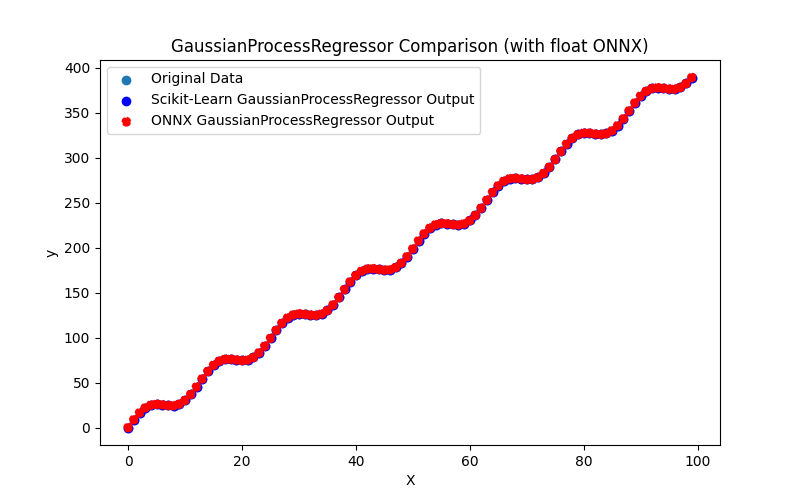

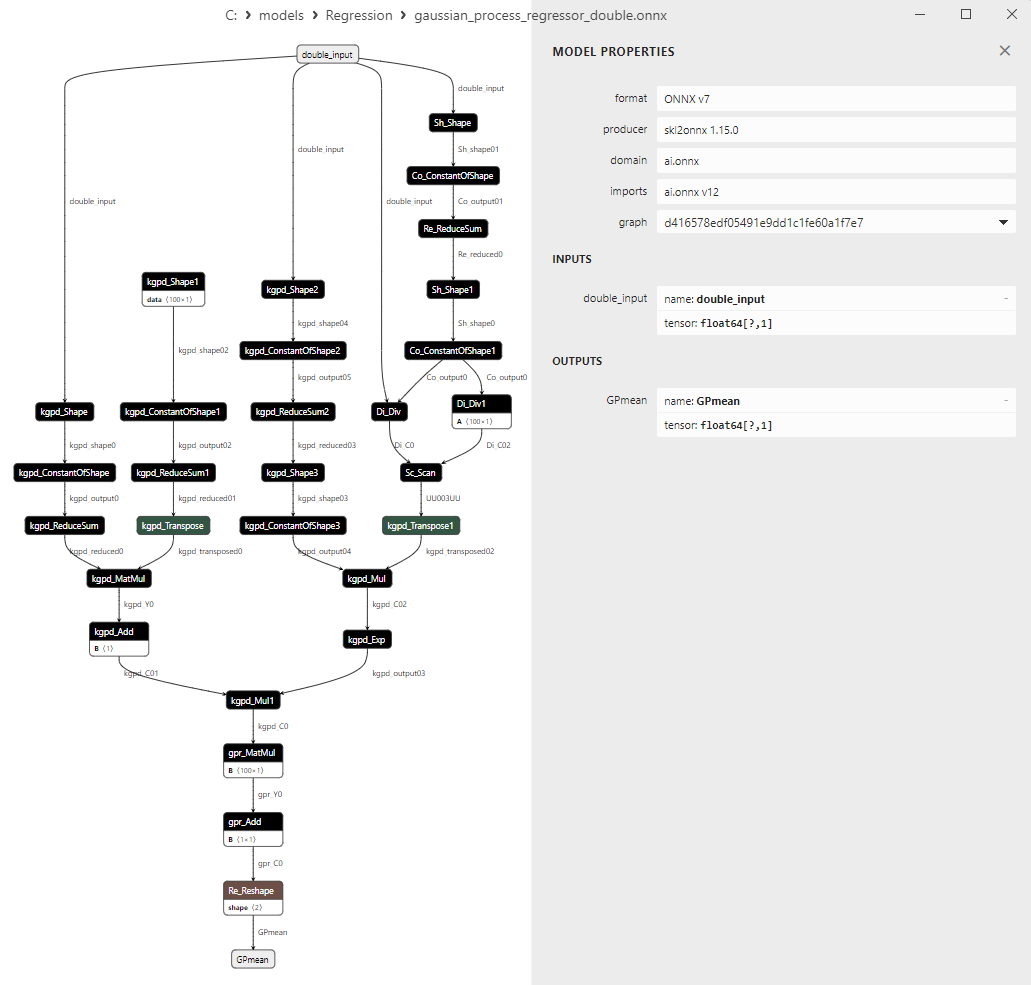

- 2.1.28. sklearn.gaussian_process.GaussianProcessRegressor

2.1.28.1. Code for creating the GaussianProcessRegressor model and exporting it to ONNX for float and double

2.1.28.2. MQL5 code for executing ONNX Models

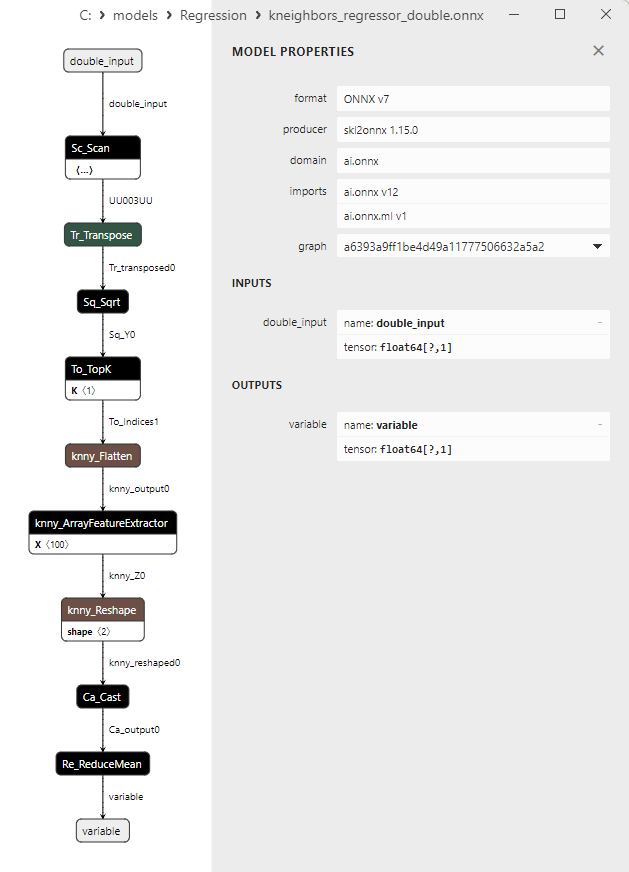

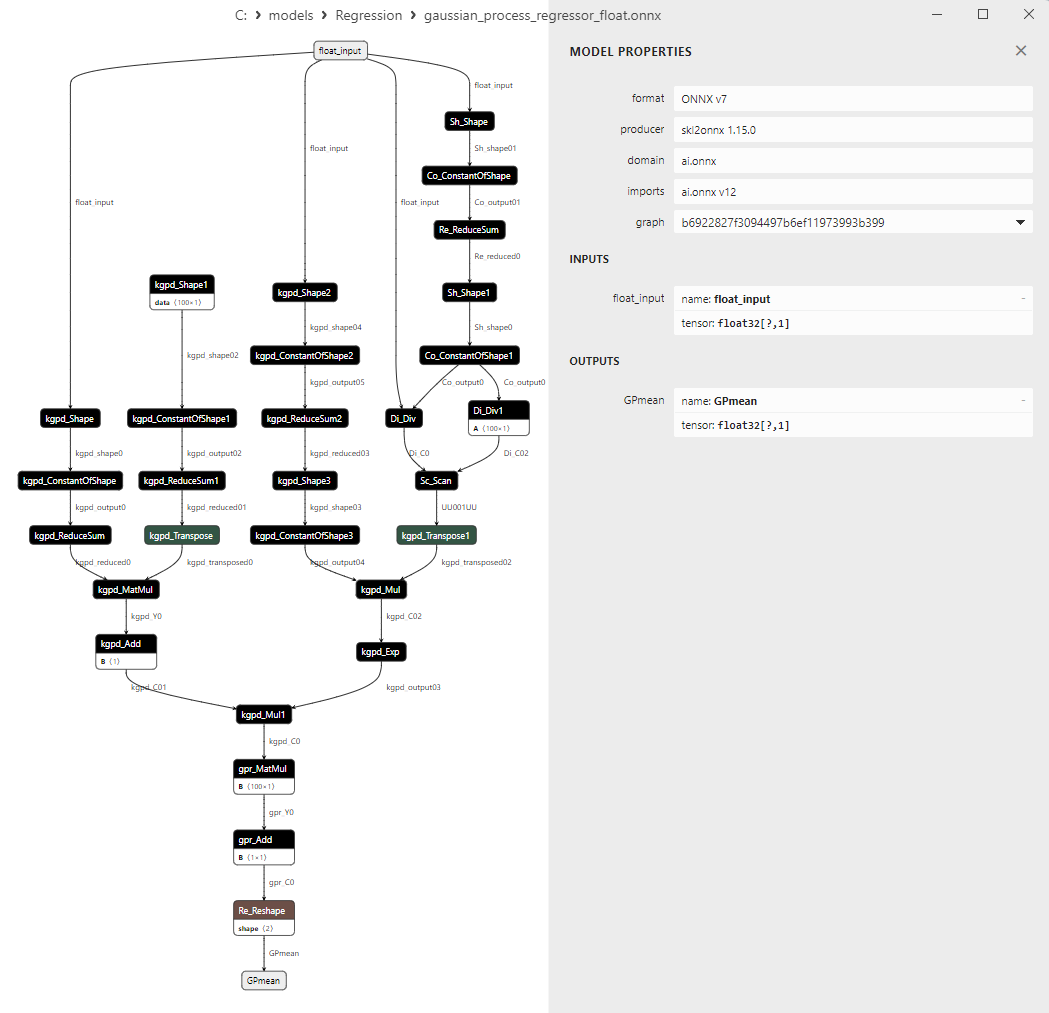

2.1.28.3. ONNX representation of the gaussian_process_regressor_float.onnx and gaussian_process_regressor_double.onnx

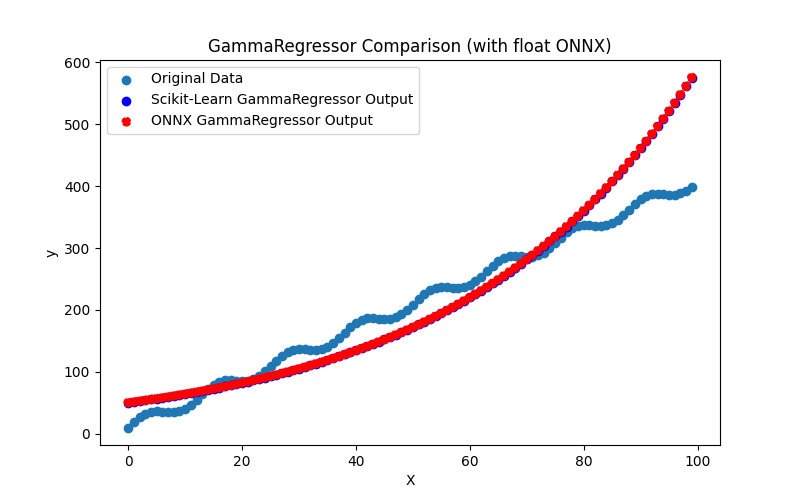

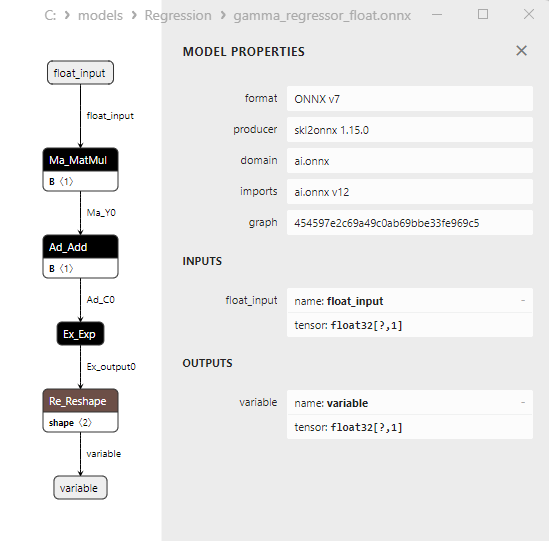

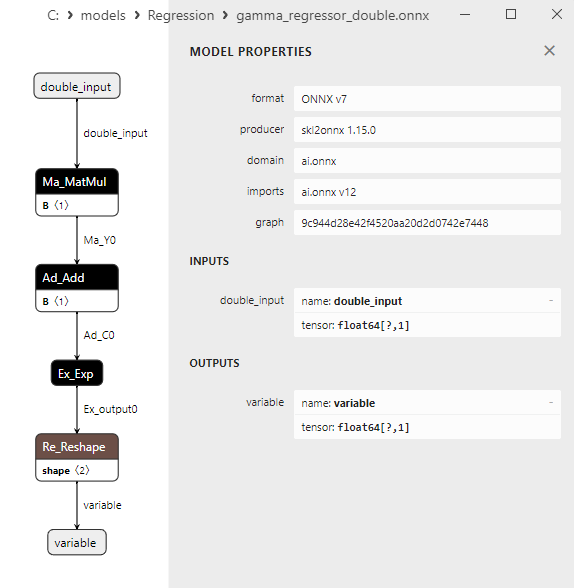

- 2.1.29. sklearn.linear_model.GammaRegressor

2.1.29.1. Code for creating the GammaRegressor model and exporting it to ONNX for float and double

2.1.29.2. MQL5 code for executing ONNX Models

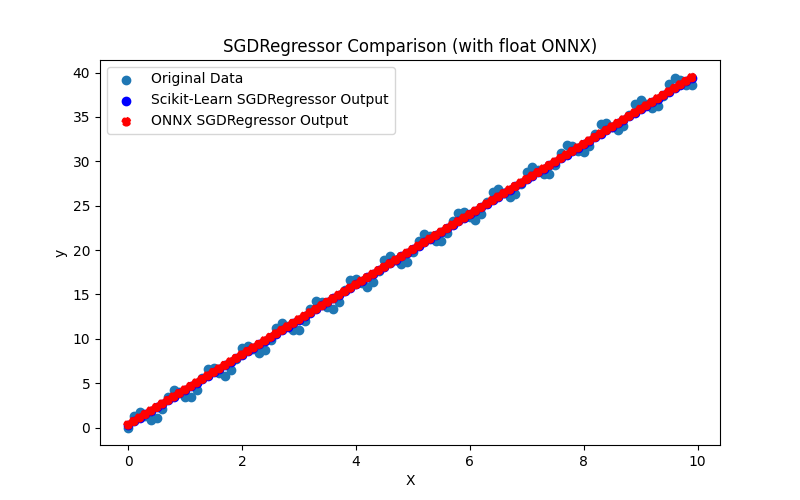

2.1.29.3. ONNX representation of the gamma_regressor_float.onnx and gamma_regressor_double.onnx

- 2.1.30. sklearn.linear_model.SGDRegressor

2.1.30.1. Code for creating the SGDRegressor model and exporting it to ONNX for float and double

2.1.30.2. MQL5 code for executing ONNX Models

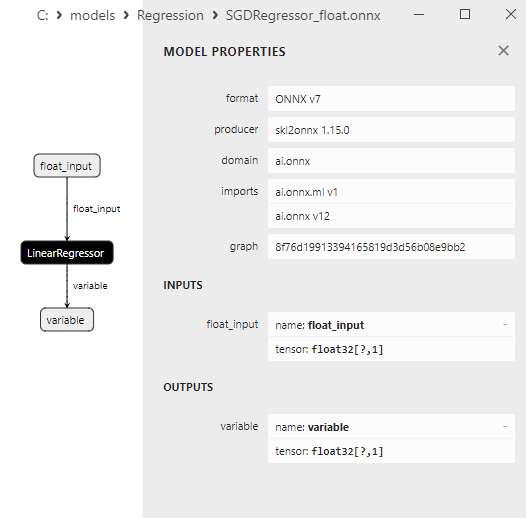

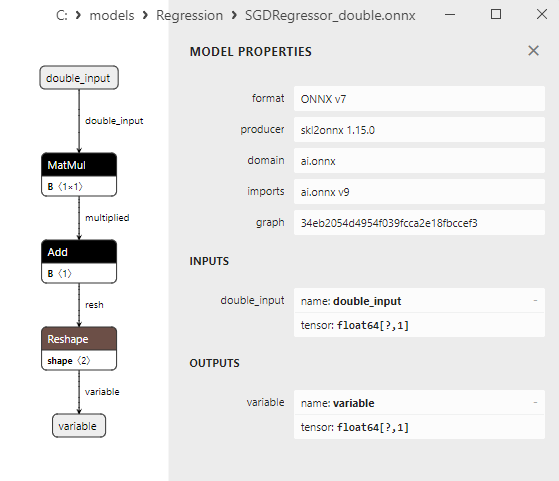

2.1.30.3. ONNX representation of the sgd_regressor_float.onnx and sgd_regressor_double.onnx

- 2.2. Regression models from the Scikit-learn library that are converted only into float precision ONNX models

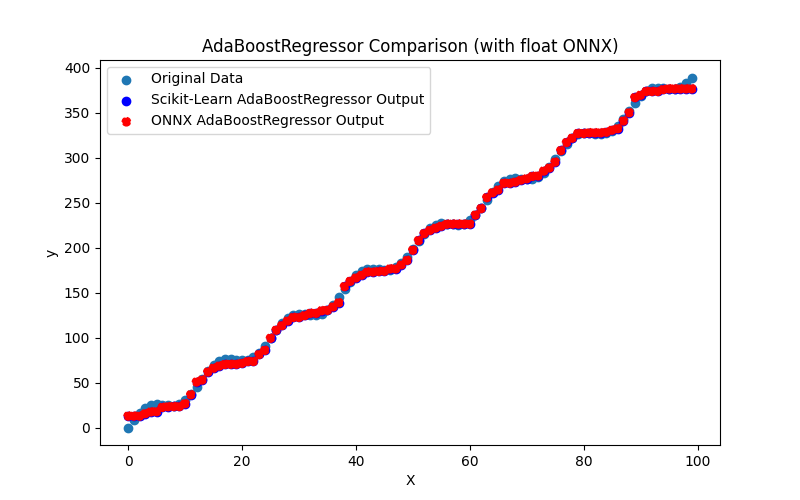

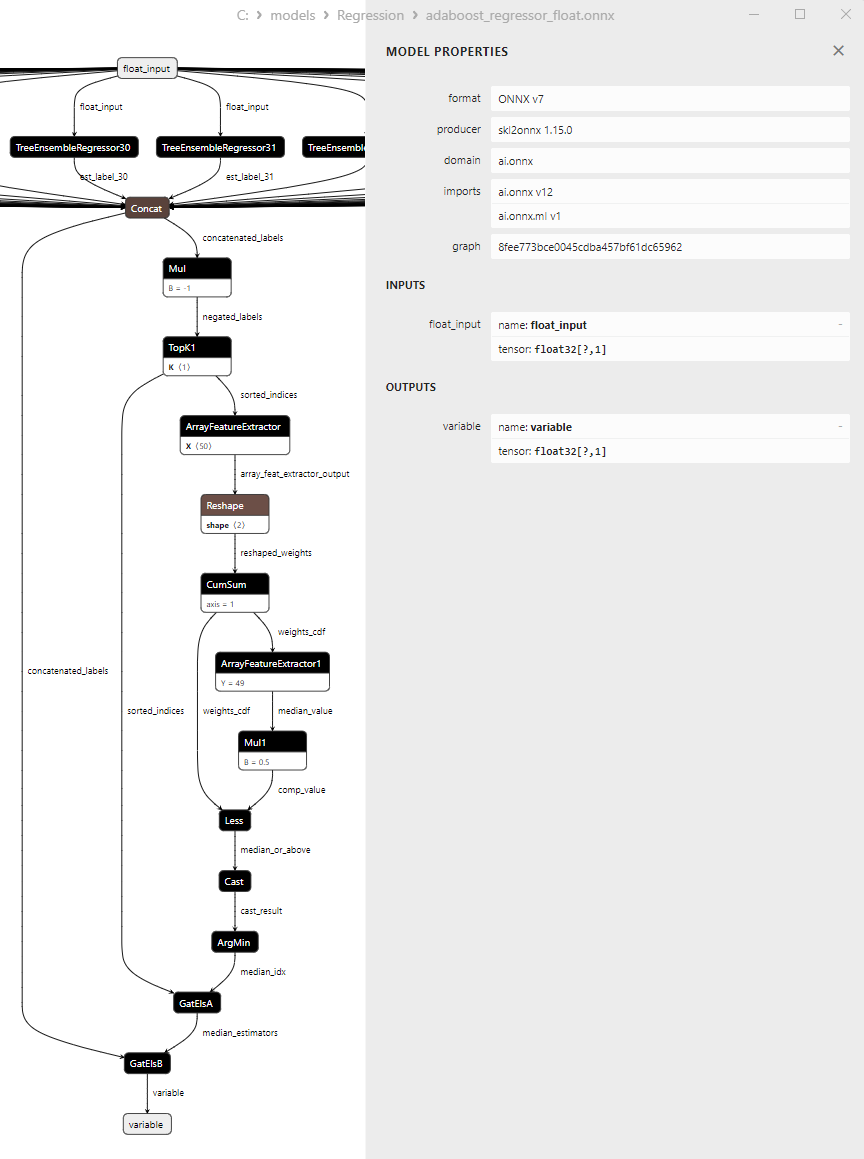

- 2.2.1. sklearn.ensemble.AdaBoostRegressor

2.2.1.1. Code for creating the AdaBoostRegressor model and exporting it to ONNX for float and double

2.2.1.2. MQL5 code for executing ONNX Models

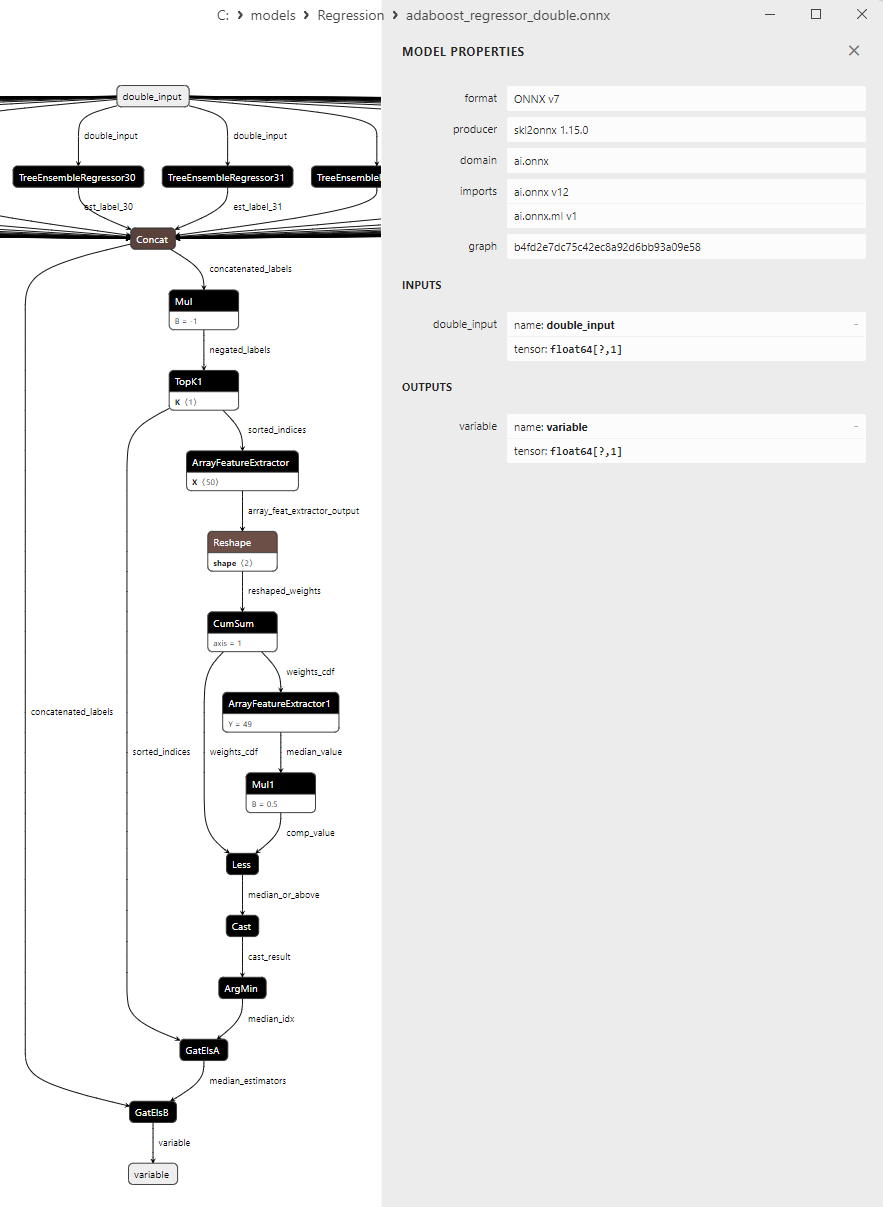

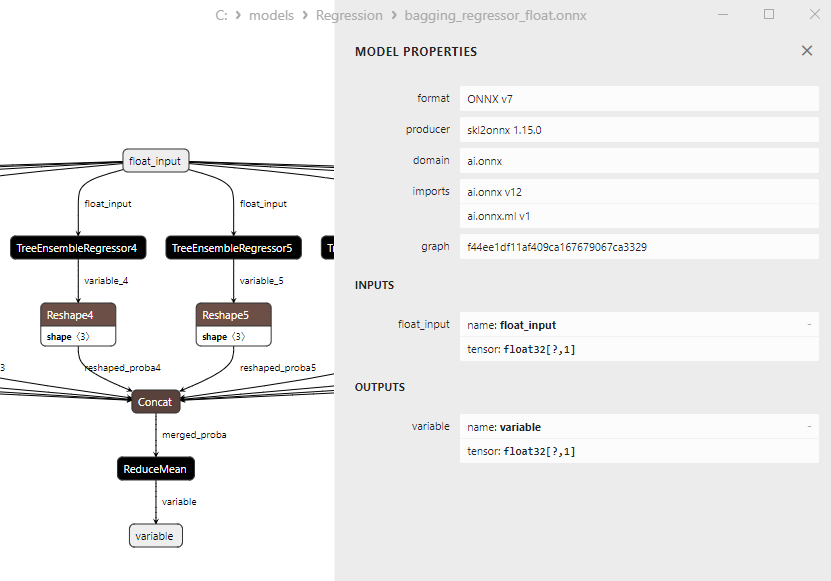

2.2.1.3. ONNX representation of the adaboost_regressor_float.onnx and adaboost_regressor_double.onnx

- 2.2.2. sklearn.ensemble.BaggingRegressor

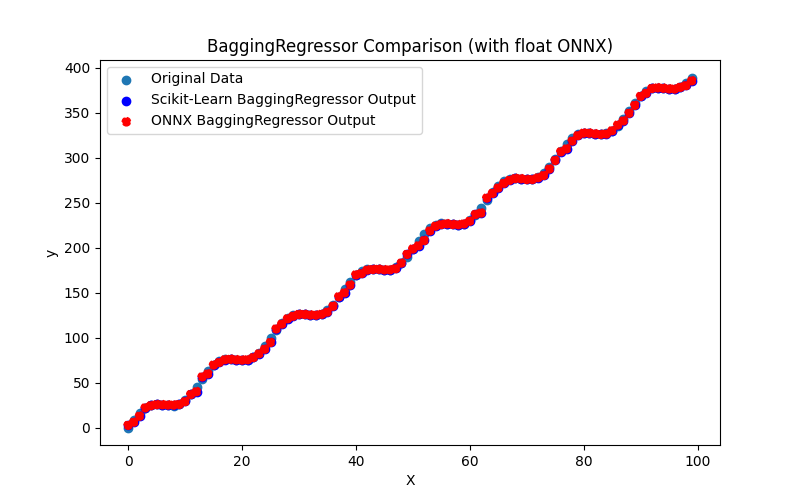

2.2.2.1. Code for creating the BaggingRegressor model and exporting it to ONNX for float and double

2.2.2.2. MQL5 code for executing ONNX Models

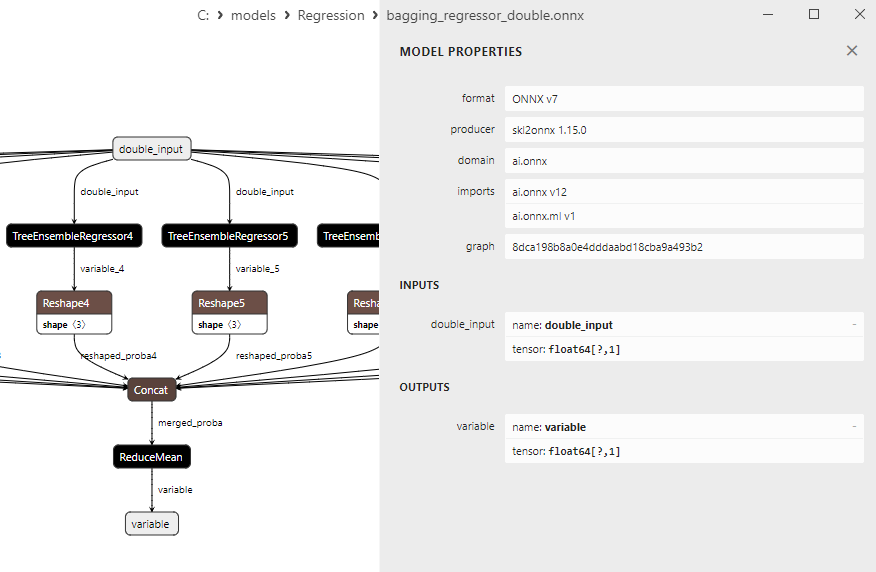

2.2.2.3. ONNX representation of the bagging_regressor_float.onnx and bagging_regressor_double.onnx

- 2.2.3. sklearn.tree.DecisionTreeRegressor

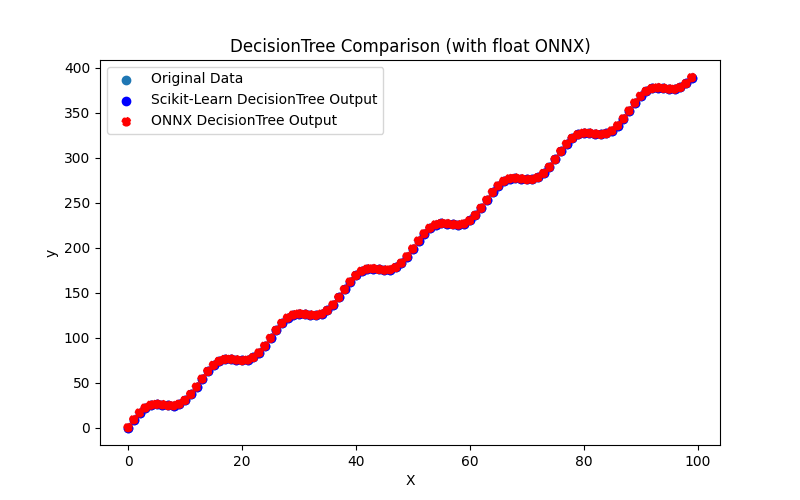

2.2.3.1. Code for creating the DecisionTreeRegressor model and exporting it to ONNX for float and double

2.2.3.2. MQL5 code for executing ONNX Models

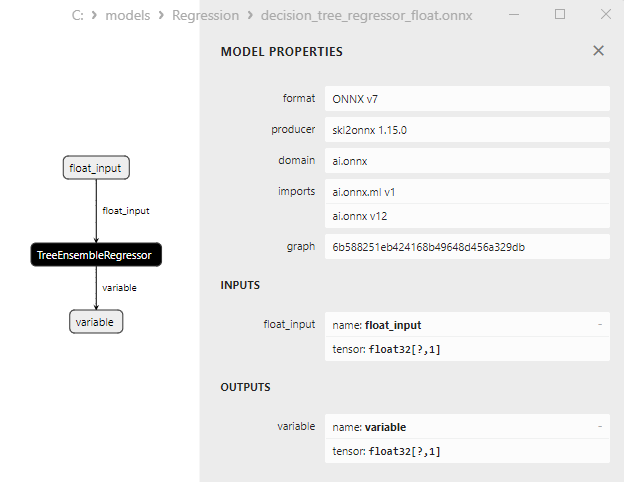

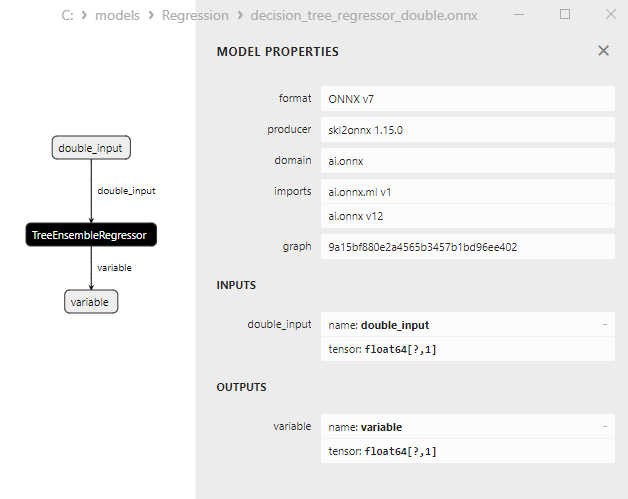

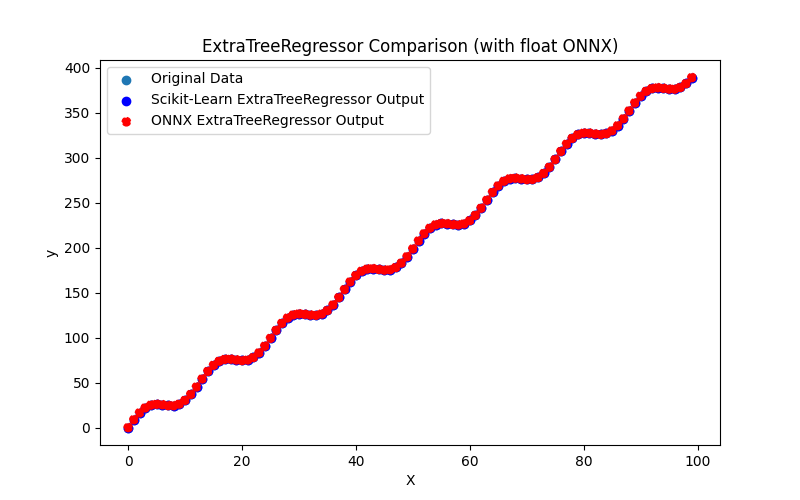

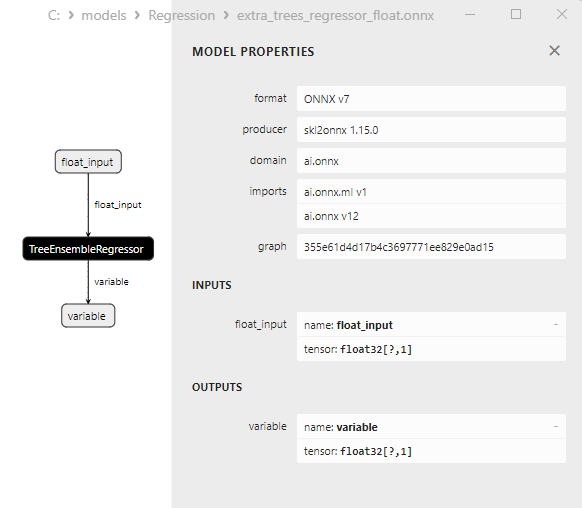

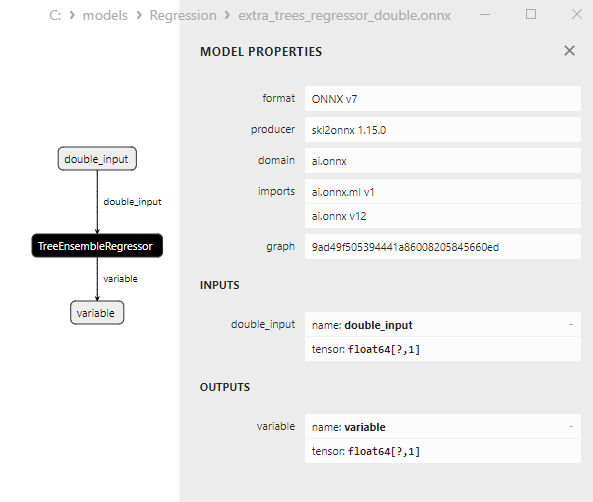

2.2.3.3. ONNX representation of the decision_tree_regressor_float.onnx and decision_tree_regressor_double.onnx

- 2.2.4. sklearn.tree.ExtraTreeRegressor

2.2.4.1. Code for creating the ExtraTreeRegressor model and exporting it to ONNX for float and double

2.2.4.2. MQL5 code for executing ONNX Models

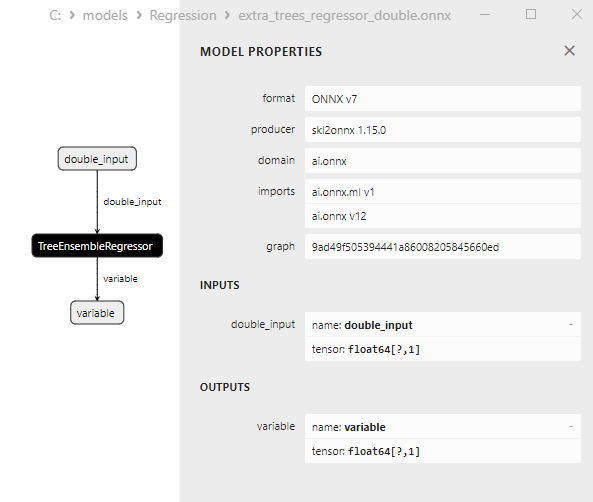

2.2.4.3. ONNX representation of the extra_tree_regressor_float.onnx and extra_tree_regressor_double.onnx

- 2.2.5. sklearn.ensemble.ExtraTreesRegressor

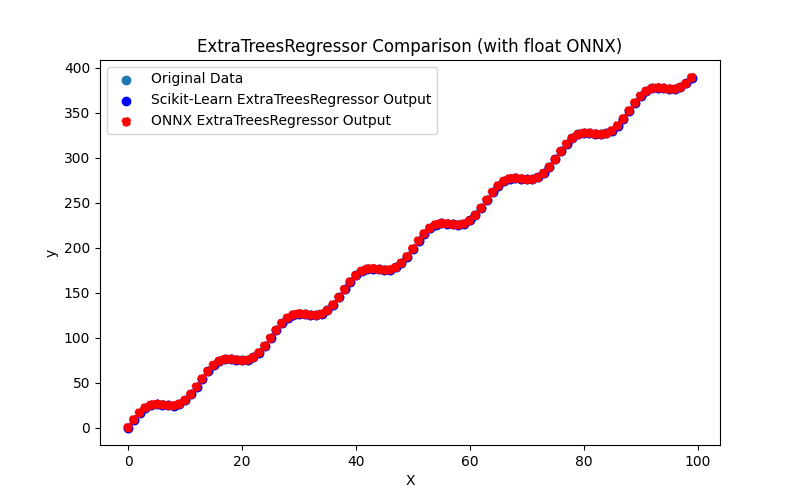

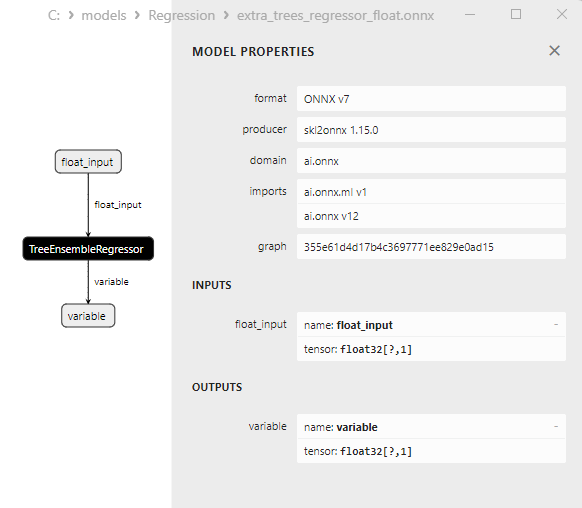

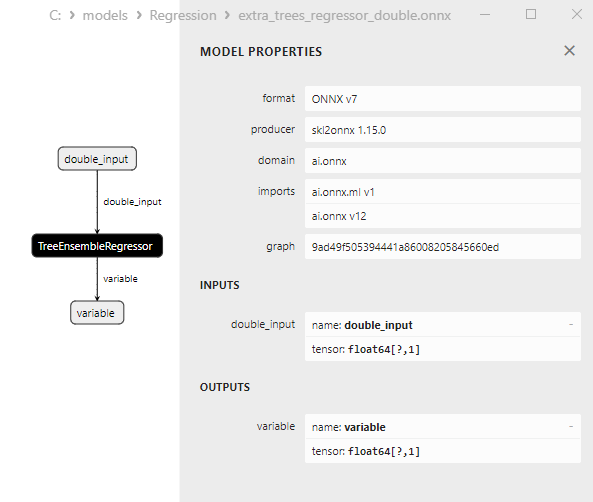

2.2.5.1. Code for creating the ExtraTreesRegressor model and exporting it to ONNX for float and double

2.2.5.2. MQL5 code for executing ONNX Models

2.2.5.3. ONNX representation of the extra_trees_regressor_float.onnx and extra_trees_regressor_double.onnx

- 2.2.6. sklearn.svm.NuSVR

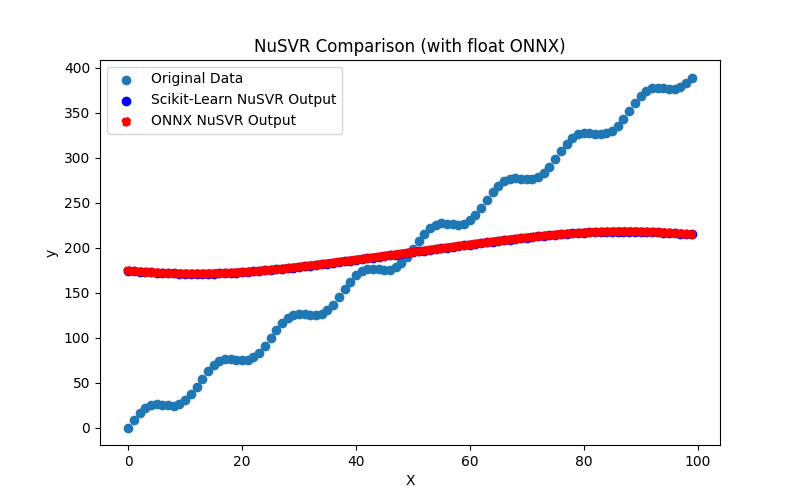

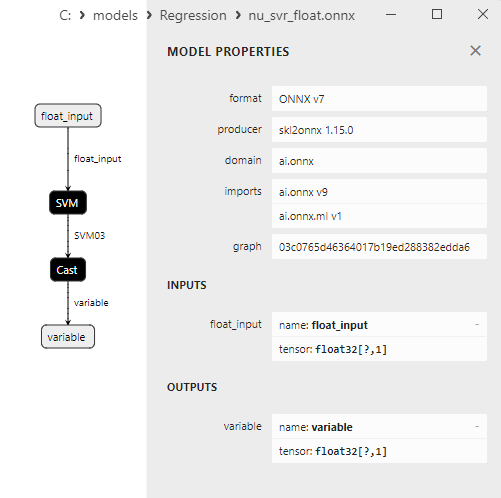

2.2.6.1. Code for creating the NuSVR model and exporting it to ONNX for float and double

2.2.6.2. MQL5 code for executing ONNX Models

2.2.6.3. ONNX representation of the nu_svr_float.onnx and nu_svr_double.onnx

- 2.2.7. sklearn.ensemble.RandomForestRegressor

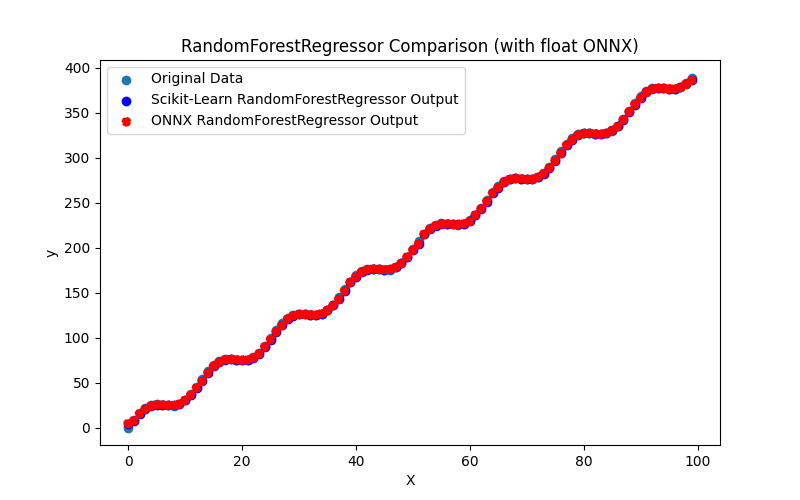

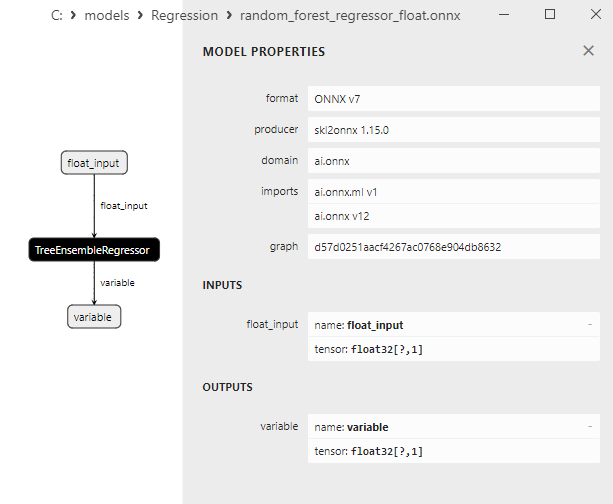

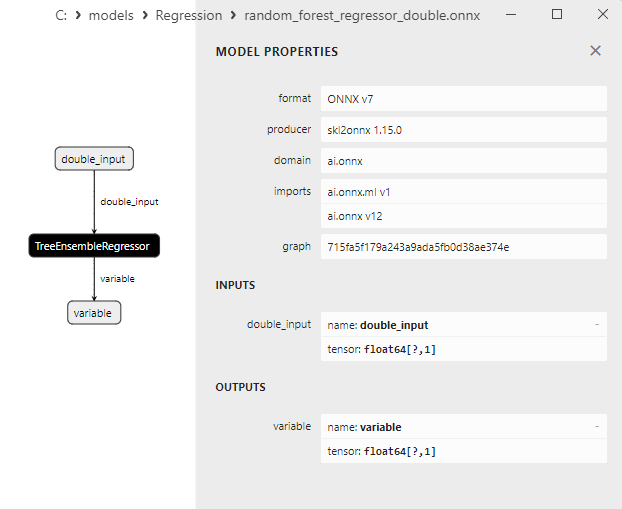

2.2.7.1. Code for creating the RandomForestRegressor model and exporting it to ONNX for float and double

2.2.7.2. MQL5 code for executing ONNX Models

2.2.7.3. ONNX representation of the random_forest_regressor_float.onnx and random_forest_regressor_double.onnx

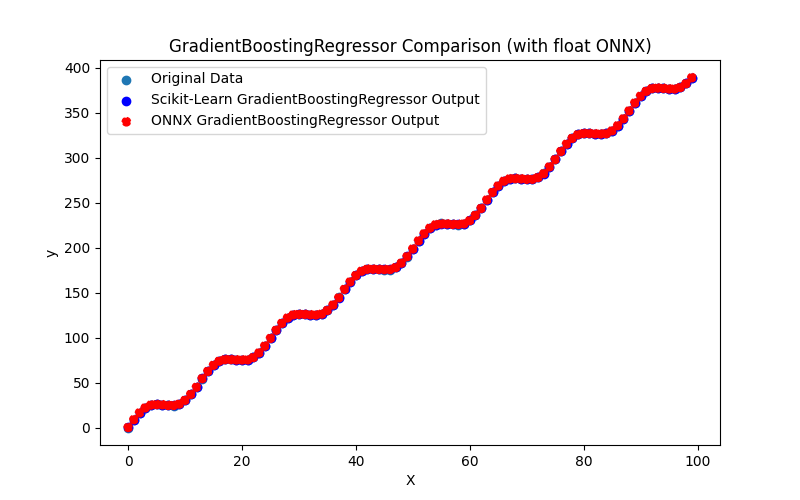

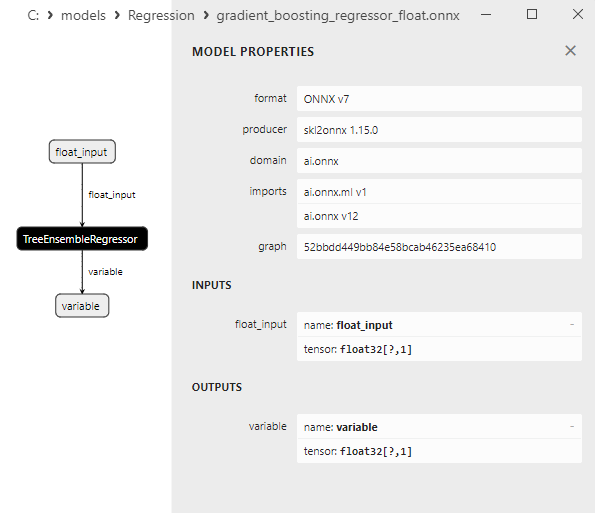

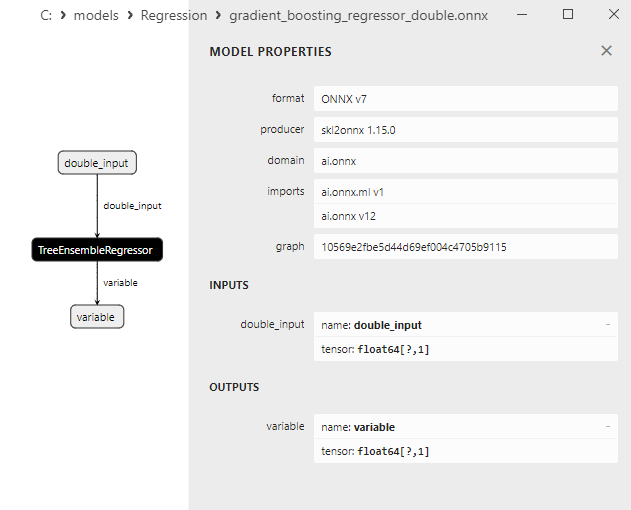

- 2.2.8. sklearn.ensemble.GradientBoostingRegressor

2.2.8.1. Code for creating the GradientBoostingRegressor model and exporting it to ONNX for float and double

2.2.8.2. MQL5 code for executing ONNX Models

2.2.8.3. ONNX representation of the gradient_boosting_regressor_float.onnx and gradient_boosting_regressor_double.onnx

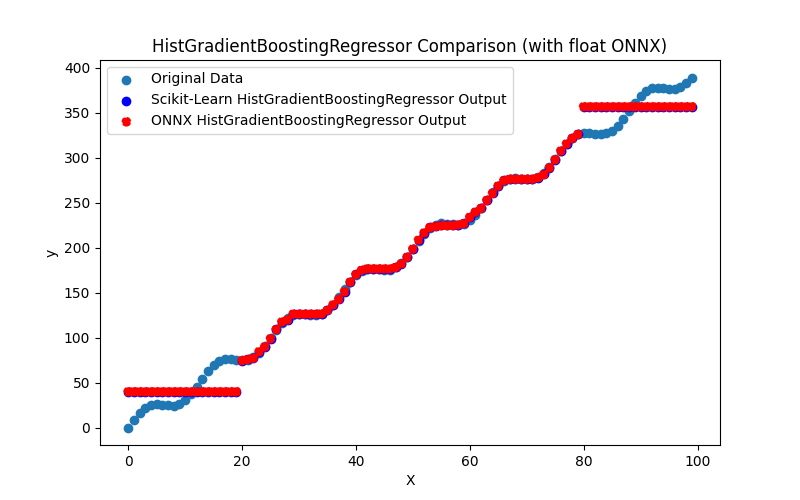

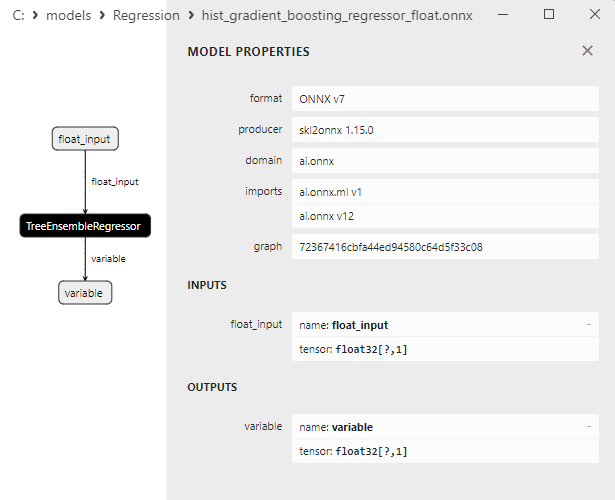

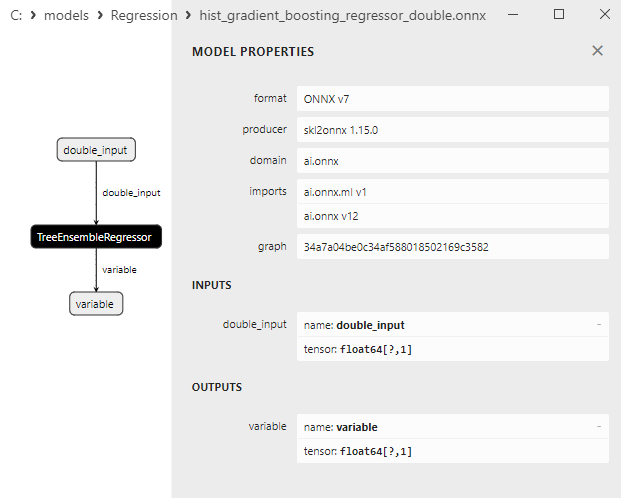

- 2.2.9. sklearn.ensemble.HistGradientBoostingRegressor

2.2.9.1. Code for creating the HistGradientBoostingRegressor model and exporting it to ONNX for float and double

2.2.9.2. MQL5 code for executing ONNX Models

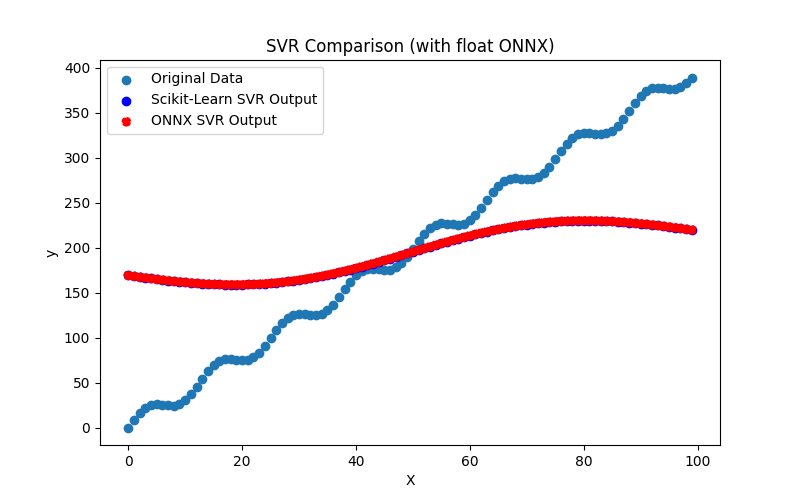

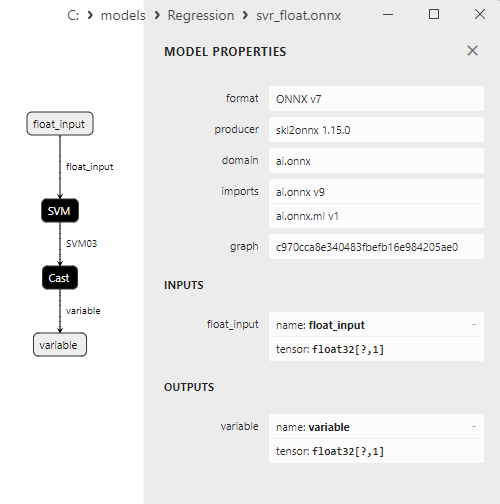

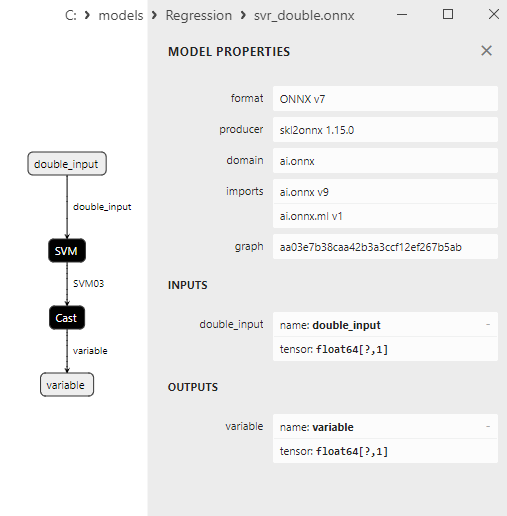

2.2.9.3. ONNX representation of the hist_gradient_boosting_regressor_float.onnx and hist_gradient_boosting_regressor_double.onnx

- 2.2.10. sklearn.svm.SVR

2.2.10.1. Code for creating the SVR model and exporting it to ONNX for float and double

2.2.10.2. MQL5 code for executing ONNX Models

2.2.10.3. ONNX representation of the svr_float.onnx and svr_double.onnx

- 2.3. Regression Models that encountered problems when converting to ONNX

- 2.3.1. sklearn.dummy.DummyRegressor

Code for creating the DummyRegressor

- 2.3.2. sklearn.kernel_ridge.KernelRidge

Code for creating the KernelRidge

- 2.3.3. sklearn.isotonic.IsotonicRegression

Code for creating the IsotonicRegression

- 2.3.4. sklearn.cross_decomposition.PLSCanonical

Code for creating the PLSCanonical

- 2.3.5. sklearn.cross_decomposition.CCA

Code for creating the CCA

- Conclusion

- Summary

If it bothers you, welcome to contribute

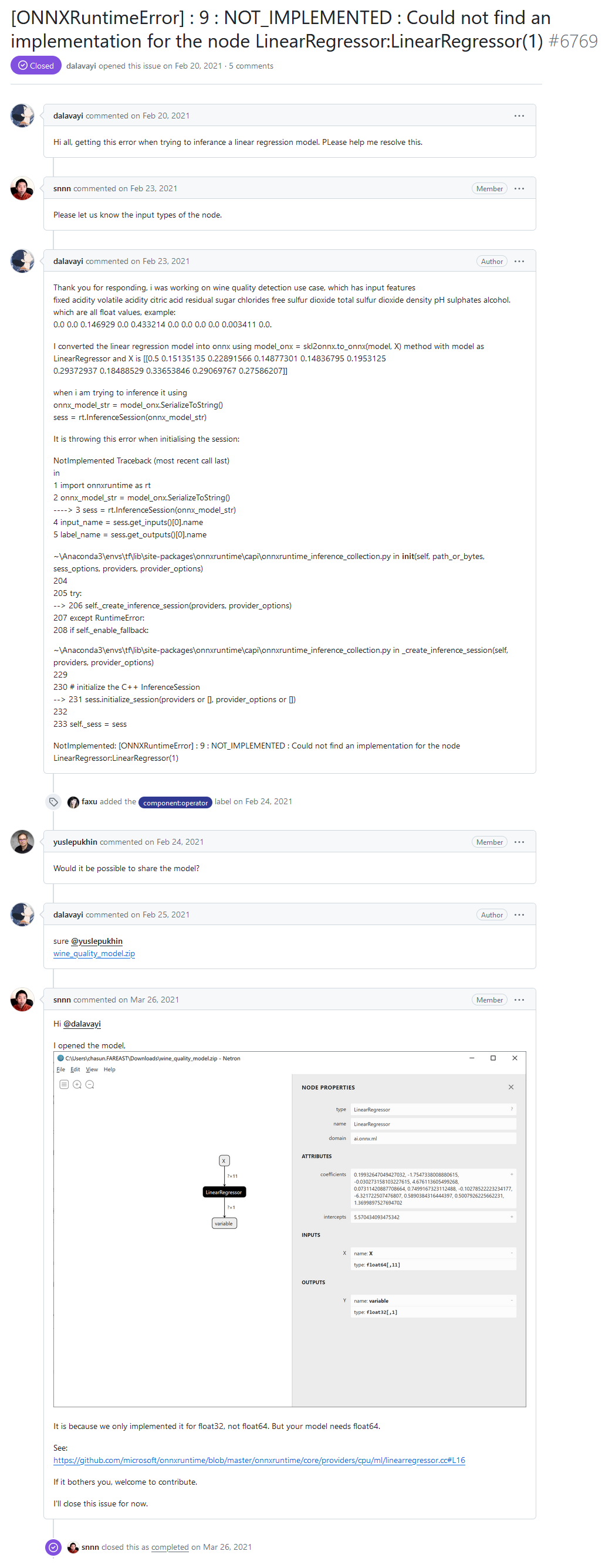

On the ONNX Runtime developer forum, one of the users reported an error "[ONNXRuntimeError] : 9 : NOT_IMPLEMENTED : Could not find an implementation for the node LinearRegressor:LinearRegressor(1)" when executing a model through ONNX Runtime.

Hi all, getting this error when trying to inferance a linear regression model. PLease help me resolve this.

"NOT_IMPLEMENTED : Could not find an implementation for the node LinearRegressor:LinearRegressor(1)" error from ONNX Runtime developer forum

Developer's response:

It is because we only implemented it for float32, not float64. But your model needs float64.

See:

https://github.com/microsoft/onnxruntime/blob/master/onnxruntime/core/providers/cpu/ml/linearregressor.cc#L16

If it bothers you, welcome to contribute.

In the user's ONNX model, the ai.onnx.ml.LinearRegressor operator is called with the double (float64) data type, and the error arises because ONNX Runtime does not support the LinearRegressor() operator in double precision.

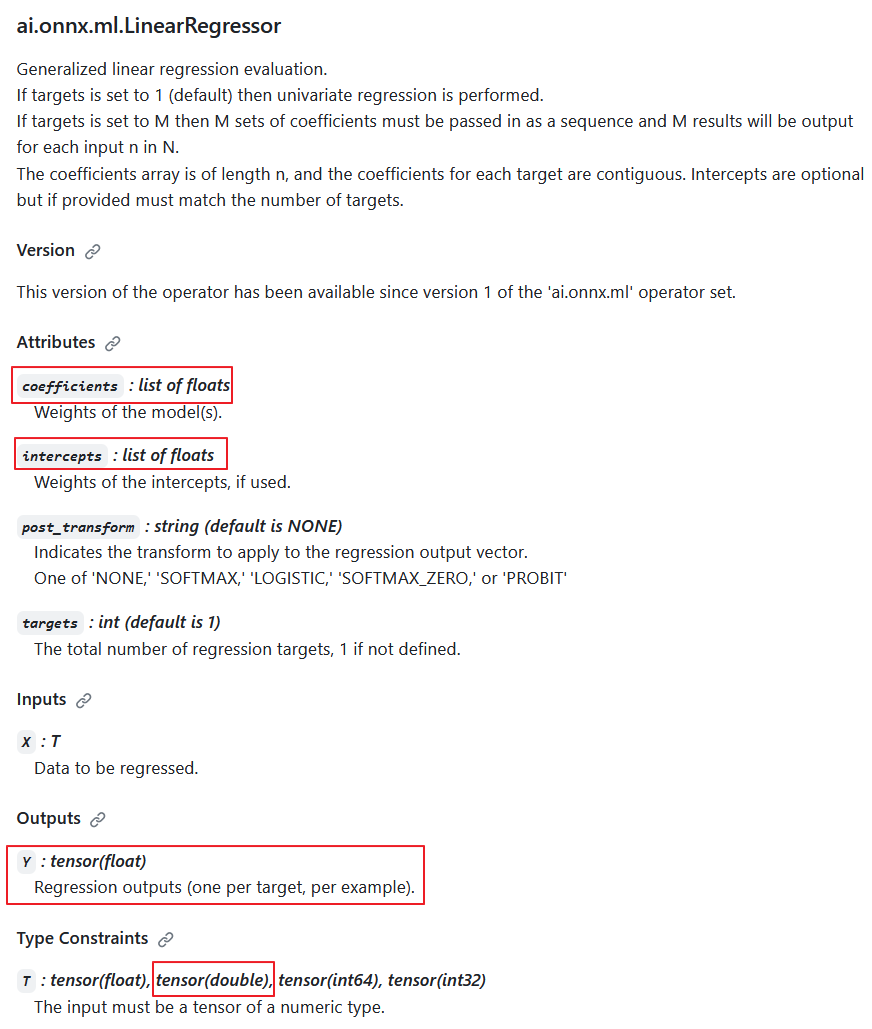

According to the specification of the ai.onnx.ml.LinearRegressor operator, the double input data type is possible (T: tensor(float), tensor(double), tensor(int64), tensor(int32)); however, the developers intentionally chose not to implement it.

The reason is that the output Y is always of type tensor(float). Furthermore, the computational parameters are float numbers (coefficients: list of floats, intercepts: list of floats).

Consequently, even when the input is double, this operator reduces the precision to float, so implementing it for double-precision calculations would be of questionable value.

ai.onnx.ml.LinearRegressor operator description

Thus, because its parameters and output value are reduced to float, ai.onnx.ml.LinearRegressor cannot fully operate with double (float64) numbers. Presumably, this is why the ONNX Runtime developers decided not to implement it for the double type.
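To make the effect concrete, here is a minimal NumPy sketch that emulates the numeric behaviour implied by the specification (coefficients and intercepts stored as float, output Y returned as float) and compares it with a computation kept entirely in double. It does not call ONNX Runtime; the helper names `linear_regressor_spec` and `linear_regressor_double` and the parameter values are hypothetical, chosen only to carry more significant digits than float32 can hold.

```python
import numpy as np

def linear_regressor_spec(X, coefficients, intercepts):
    """Hypothetical emulation of ai.onnx.ml.LinearRegressor numerics:
    parameters are stored as float32 and the output Y is tensor(float)."""
    coef32 = np.asarray(coefficients, dtype=np.float32)
    icpt32 = np.asarray(intercepts, dtype=np.float32)
    # Even if X arrives as double, the result is returned as float32
    return (X @ coef32.T + icpt32).astype(np.float32)

def linear_regressor_double(X, coefficients, intercepts):
    """Reference computation kept entirely in float64."""
    coef64 = np.asarray(coefficients, dtype=np.float64)
    icpt64 = np.asarray(intercepts, dtype=np.float64)
    return X @ coef64.T + icpt64

# Hypothetical double-precision parameters (1 target, 1 feature)
coefficients = np.array([[4.012345678901234]])
intercepts = np.array([-0.987654321098765])

# Same X values as the test dataset used later in the article
X = np.arange(0, 100, 1, dtype=np.float64).reshape(-1, 1)
y_double = linear_regressor_double(X, coefficients, intercepts)
y_float = linear_regressor_spec(X, coefficients, intercepts)

max_err = np.max(np.abs(y_double - y_float.astype(np.float64)))
print("max |double - float| =", max_err)
# Gap between adjacent representable numbers near y = 400
print("float32 spacing at 400:", np.spacing(np.float32(400.0)))
print("float64 spacing at 400:", np.spacing(np.float64(400.0)))
```

Near y = 400, adjacent float32 values are 2^-15 (about 3e-5) apart, versus 2^-44 for float64, so once the output is forced to float, no double-precision input can restore the lost digits.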

The developers indicated how "double support" could be added in the code comments (highlighted in yellow).

In ONNX Runtime, the operator is computed by the LinearRegressor class (https://github.com/microsoft/onnxruntime/blob/main/onnxruntime/core/providers/cpu/ml/linearregressor.h).

The operator's parameters, coefficients_ and intercepts_, are stored as std::vector<float>:

```cpp
#pragma once

#include "core/common/common.h"
#include "core/framework/op_kernel.h"
#include "core/util/math_cpuonly.h"
#include "ml_common.h"

namespace onnxruntime {
namespace ml {

class LinearRegressor final : public OpKernel {
 public:
  LinearRegressor(const OpKernelInfo& info);
  Status Compute(OpKernelContext* context) const override;

 private:
  int64_t num_targets_;
  std::vector<float> coefficients_;
  std::vector<float> intercepts_;
  bool use_intercepts_;
  POST_EVAL_TRANSFORM post_transform_;
};

}  // namespace ml
}  // namespace onnxruntime
```

The implementation of the LinearRegressor operator (https://github.com/microsoft/onnxruntime/blob/main/onnxruntime/core/providers/cpu/ml/linearregressor.cc):

```cpp
// Copyright (c) Microsoft Corporation. All rights reserved.
// Licensed under the MIT License.

#include "core/providers/cpu/ml/linearregressor.h"
#include "core/common/narrow.h"
#include "core/providers/cpu/math/gemm.h"

namespace onnxruntime {
namespace ml {

ONNX_CPU_OPERATOR_ML_KERNEL(
    LinearRegressor,
    1,
    // KernelDefBuilder().TypeConstraint("T", std::vector<MLDataType>{
    //                                            DataTypeImpl::GetTensorType<float>(),
    //                                            DataTypeImpl::GetTensorType<double>()}),
    KernelDefBuilder().TypeConstraint("T", DataTypeImpl::GetTensorType<float>()),
    LinearRegressor);

LinearRegressor::LinearRegressor(const OpKernelInfo& info)
    : OpKernel(info),
      intercepts_(info.GetAttrsOrDefault<float>("intercepts")),
      post_transform_(MakeTransform(info.GetAttrOrDefault<std::string>("post_transform", "NONE"))) {
  ORT_ENFORCE(info.GetAttr<int64_t>("targets", &num_targets_).IsOK());
  ORT_ENFORCE(info.GetAttrs<float>("coefficients", coefficients_).IsOK());

  // use the intercepts_ if they're valid
  use_intercepts_ = intercepts_.size() == static_cast<size_t>(num_targets_);
}

// Use GEMM for the calculations, with broadcasting of intercepts
// https://github.com/onnx/onnx/blob/main/docs/Operators.md#Gemm
//
// X: [num_batches, num_features]
// coefficients_: [num_targets, num_features]
// intercepts_: optional [num_targets].
// Output: X * coefficients_^T + intercepts_: [num_batches, num_targets]
template <typename T>
static Status ComputeImpl(const Tensor& input, ptrdiff_t num_batches, ptrdiff_t num_features, ptrdiff_t num_targets,
                          const std::vector<float>& coefficients,
                          const std::vector<float>* intercepts, Tensor& output,
                          POST_EVAL_TRANSFORM post_transform,
                          concurrency::ThreadPool* threadpool) {
  const T* input_data = input.Data<T>();
  T* output_data = output.MutableData<T>();

  if (intercepts != nullptr) {
    TensorShape intercepts_shape({num_targets});
    onnxruntime::Gemm<T>::ComputeGemm(CBLAS_TRANSPOSE::CblasNoTrans, CBLAS_TRANSPOSE::CblasTrans,
                                      num_batches, num_targets, num_features,
                                      1.f, input_data, coefficients.data(), 1.f,
                                      intercepts->data(), &intercepts_shape,
                                      output_data,
                                      threadpool);
  } else {
    onnxruntime::Gemm<T>::ComputeGemm(CBLAS_TRANSPOSE::CblasNoTrans, CBLAS_TRANSPOSE::CblasTrans,
                                      num_batches, num_targets, num_features,
                                      1.f, input_data, coefficients.data(), 1.f,
                                      nullptr, nullptr,
                                      output_data,
                                      threadpool);
  }

  if (post_transform != POST_EVAL_TRANSFORM::NONE) {
    ml::batched_update_scores_inplace(gsl::make_span(output_data, SafeInt<size_t>(num_batches) * num_targets),
                                      num_batches, num_targets, post_transform, -1, false, threadpool);
  }
  return Status::OK();
}

Status LinearRegressor::Compute(OpKernelContext* ctx) const {
  Status status = Status::OK();

  const auto& X = *ctx->Input<Tensor>(0);
  const auto& input_shape = X.Shape();

  if (input_shape.NumDimensions() > 2) {
    return ORT_MAKE_STATUS(ONNXRUNTIME, INVALID_ARGUMENT, "Input shape had more than 2 dimension. Dims=",
                           input_shape.NumDimensions());
  }

  ptrdiff_t num_batches = input_shape.NumDimensions() <= 1 ? 1 : narrow<ptrdiff_t>(input_shape[0]);
  ptrdiff_t num_features = input_shape.NumDimensions() <= 1 ? narrow<ptrdiff_t>(input_shape.Size())
                                                            : narrow<ptrdiff_t>(input_shape[1]);
  Tensor& Y = *ctx->Output(0, {num_batches, num_targets_});
  concurrency::ThreadPool* tp = ctx->GetOperatorThreadPool();

  auto element_type = X.GetElementType();

  switch (element_type) {
    case ONNX_NAMESPACE::TensorProto_DataType_FLOAT: {
      status = ComputeImpl<float>(X, num_batches, num_features, narrow<ptrdiff_t>(num_targets_), coefficients_,
                                  use_intercepts_ ? &intercepts_ : nullptr,
                                  Y, post_transform_, tp);
      break;
    }
    case ONNX_NAMESPACE::TensorProto_DataType_DOUBLE: {
      // TODO: Add support for 'double' to the scoring functions in ml_common.h
      // once that is done we can just call ComputeImpl<double>...
      // Alternatively we could cast the input to float.
    }
    default:
      status = ORT_MAKE_STATUS(ONNXRUNTIME, FAIL, "Unsupported data type of ", element_type);
  }

  return status;
}

}  // namespace ml
}  // namespace onnxruntime
```

It turns out that one option is to use double numbers as input values and perform the operator's computation with float parameters. Another possibility is to reduce the precision of the input data to float. However, neither of these options can be considered a proper solution.
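The two alternatives can be compared in a short NumPy sketch, again with hypothetical parameter values and a float64 computation as the reference. Option 1 keeps the double input but uses float-rounded parameters (arithmetic in double); option 2 casts the input to float and computes everything in float. This is only an illustration of the arithmetic, not ONNX Runtime code.

```python
import numpy as np

# Hypothetical double-precision parameters (1 target, 1 feature)
coef64 = np.array([4.012345678901234])
icpt64 = -0.987654321098765
X = np.arange(0, 100, 1, dtype=np.float64)

# Reference: everything in double
y_ref = X * coef64[0] + icpt64

# Parameters as they would be stored in the operator attributes (float)
coef32, icpt32 = np.float32(coef64[0]), np.float32(icpt64)

# Option 1: double input, float parameters, arithmetic in double
y_opt1 = X * np.float64(coef32) + np.float64(icpt32)

# Option 2: cast the input to float and compute entirely in float
y_opt2 = X.astype(np.float32) * coef32 + icpt32

err1 = np.max(np.abs(y_opt1 - y_ref))
err2 = np.max(np.abs(y_opt2.astype(np.float64) - y_ref))
print(f"option 1 (double input, float params): max error {err1:.3e}")
print(f"option 2 (input cast to float):        max error {err2:.3e}")
```

Both errors stay non-zero because the parameters have already been rounded to float; option 2 additionally rounds every intermediate result.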

The specification of the ai.onnx.ml.LinearRegressor operator prevents full operation with double numbers, since the parameters and the output value are limited to the float type.

A similar situation occurs with other ONNX ML operators, such as ai.onnx.ml.SVMRegressor and ai.onnx.ml.TreeEnsembleRegressor.

As a result, all developers running ONNX models in double precision face this limitation of the specification. A solution might involve extending the ONNX specification (or adding similar operators like LinearRegressor64, SVMRegressor64, and TreeEnsembleRegressor64 with parameters and output values in double). However, at present, this issue remains unresolved.

Much depends on the ONNX converter. For models calculated in double, it might be preferable to avoid using these operators (though this may not always be possible). In this particular case, the converter to ONNX did not work optimally with the user's model.

As we will see later, the sklearn-onnx converter manages to bypass the limitation of LinearRegressor: for ONNX double models, it uses ONNX operators MatMul() and Add() instead. Thanks to this method, numerous regression models of the Scikit-learn library are successfully converted into ONNX models calculated in double, preserving the accuracy of the original double models.
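The arithmetic of such a double path can be sketched in NumPy: the coefficients, kept in the [num_targets, num_features] layout used by the LinearRegressor kernel above, are transposed once into a float64 MatMul weight of shape [num_features, num_targets], and the intercepts become a float64 Add operand. Nothing is rounded to float, so the result agrees with a term-by-term double computation of X * coefficients^T + intercepts. This mirrors only the arithmetic of a MatMul/Add graph; it does not call skl2onnx or ONNX Runtime, and the random parameters are placeholders.

```python
import numpy as np

rng = np.random.default_rng(0)

# Placeholder double-precision parameters in the LinearRegressor layout:
# coefficients [num_targets, num_features], intercepts [num_targets]
num_features, num_targets = 3, 2
coefficients = rng.normal(size=(num_targets, num_features))
intercepts = rng.normal(size=num_targets)

X = rng.normal(size=(5, num_features))  # float64 input batch

# Double path: MatMul expects [num_features, num_targets], so the
# coefficients are transposed once when the initializer is built
matmul_weight = coefficients.T.astype(np.float64)
add_bias = intercepts.astype(np.float64)
Y = np.add(np.matmul(X, matmul_weight), add_bias)

# Reference: X * coefficients^T + intercepts, summed term by term in double
Y_ref = np.array([[sum(X[b, f] * coefficients[t, f] for f in range(num_features)) + intercepts[t]
                   for t in range(num_targets)]
                  for b in range(X.shape[0])])

print(Y.dtype, Y.shape)  # float64 (5, 2)
print("max |Y - Y_ref|:", np.max(np.abs(Y - Y_ref)))
```

Any remaining difference comes only from the order of floating-point summation in double, not from a conversion to float.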

1. Test Dataset

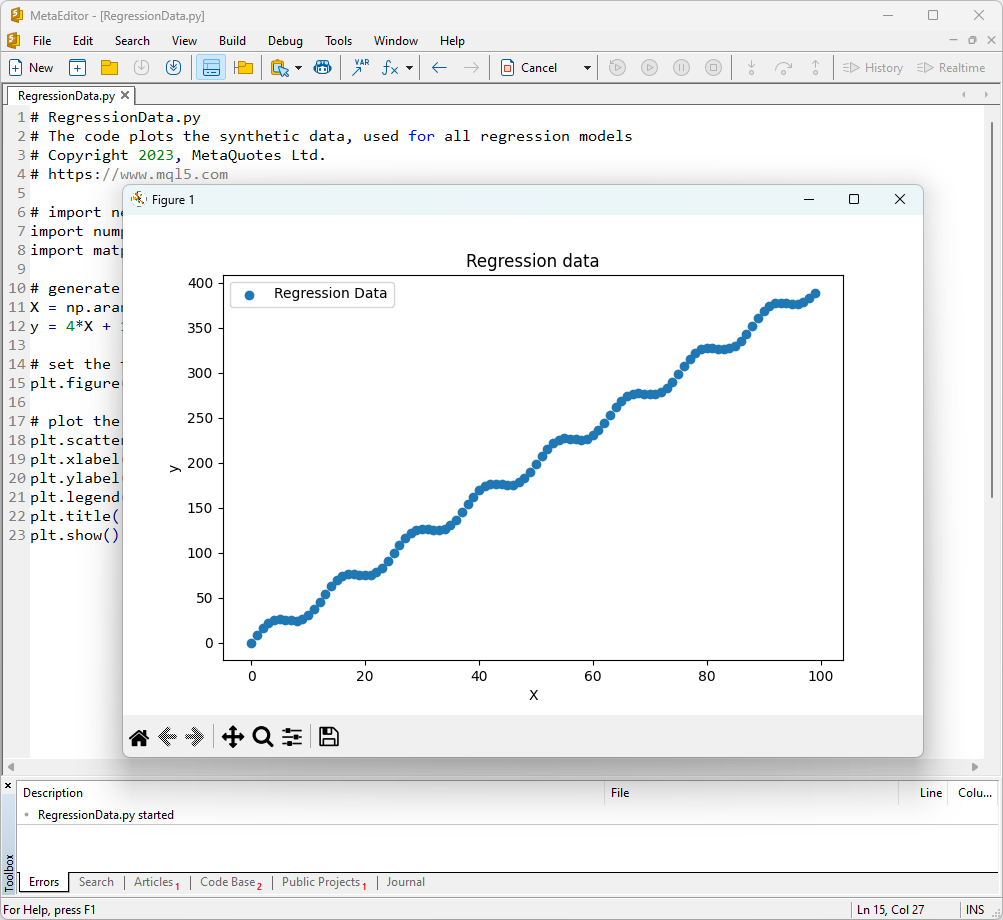

To run the examples, you will need to install Python (we used version 3.10.8), additional libraries (pip install -U scikit-learn numpy matplotlib onnx onnxruntime skl2onnx), and specify the path to Python in the MetaEditor (in the menu Tools->Options->Compilers->Python).

As a test dataset, we will use generated values of the function y = 4X + 10sin(X*0.5).

To display a graph of such a function, open MetaEditor, create a file named RegressionData.py, copy the script text, and run it by clicking the "Compile" button.

The script for displaying the test dataset

```python
# RegressionData.py
# The code plots the synthetic data used for all regression models
# Copyright 2023, MetaQuotes Ltd.
# https://mql5.com

# import necessary libraries
import numpy as np
import matplotlib.pyplot as plt

# generate synthetic data for regression
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4*X + 10*np.sin(X*0.5)

# set the figure size
plt.figure(figsize=(8, 5))

# plot the initial data for regression
plt.scatter(X, y, label='Regression Data', marker='o')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.title('Regression data')
plt.show()
```

As a result, a graph of the function will be displayed, which we will use to test regression methods.

Fig.1. Function for testing regression models
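As a quick baseline on this dataset, a straight line can be fitted by ordinary least squares with NumPy alone. This is only a sketch, not one of the scikit-learn models discussed below: the slope comes out close to 4, and the residuals are dominated by the 10·sin(0.5X) term, which no linear model in X can capture.

```python
import numpy as np

# Same synthetic data as RegressionData.py
X = np.arange(0, 100, 1).reshape(-1, 1)
y = (4 * X + 10 * np.sin(X * 0.5)).ravel()

# Design matrix [X, 1]: the column of ones provides the intercept
A = np.hstack([X, np.ones_like(X)]).astype(np.float64)
(slope, intercept), *_ = np.linalg.lstsq(A, y, rcond=None)

residuals = y - A @ np.array([slope, intercept])
r2 = 1 - np.sum(residuals ** 2) / np.sum((y - y.mean()) ** 2)
print(f"slope={slope:.4f} intercept={intercept:.4f} R2={r2:.6f}")
print("max |residual|:", np.max(np.abs(residuals)))
```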

2. Regression Models

The goal of a regression task is to find a mathematical function or model that best describes the relationship between features and the target variable to predict numerical values for new data. This allows making forecasts, optimizing solutions, and making informed decisions based on data.

Let's consider the main regression models in the scikit-learn package.

2.0. List of Scikit-learn Regression Models

To display a list of available scikit-learn regression models, you can use the script:

```python
# ScikitLearnRegressors.py
# The script lists all the regression algorithms available in scikit-learn
# Copyright 2023, MetaQuotes Ltd.
# https://mql5.com

# print Python version
from platform import python_version
print("The Python version is ", python_version())

# print scikit-learn version
import sklearn
print('The scikit-learn version is {}.'.format(sklearn.__version__))

# print scikit-learn regression models
from sklearn.utils import all_estimators

regressors = all_estimators(type_filter='regressor')
for index, (name, RegressorClass) in enumerate(regressors, start=1):
    print(f"Regressor {index}: {name}")
```

Output:

The scikit-learn version is 1.3.2.

Regressor 1: ARDRegression

Regressor 2: AdaBoostRegressor

Regressor 3: BaggingRegressor

Regressor 4: BayesianRidge

Regressor 5: CCA

Regressor 6: DecisionTreeRegressor

Regressor 7: DummyRegressor

Regressor 8: ElasticNet

Regressor 9: ElasticNetCV

Regressor 10: ExtraTreeRegressor

Regressor 11: ExtraTreesRegressor

Regressor 12: GammaRegressor

Regressor 13: GaussianProcessRegressor

Regressor 14: GradientBoostingRegressor

Regressor 15: HistGradientBoostingRegressor

Regressor 16: HuberRegressor

Regressor 17: IsotonicRegression

Regressor 18: KNeighborsRegressor

Regressor 19: KernelRidge

Regressor 20: Lars

Regressor 21: LarsCV

Regressor 22: Lasso

Regressor 23: LassoCV

Regressor 24: LassoLars

Regressor 25: LassoLarsCV

Regressor 26: LassoLarsIC

Regressor 27: LinearRegression

Regressor 28: LinearSVR

Regressor 29: MLPRegressor

Regressor 30: MultiOutputRegressor

Regressor 31: MultiTaskElasticNet

Regressor 32: MultiTaskElasticNetCV

Regressor 33: MultiTaskLasso

Regressor 34: MultiTaskLassoCV

Regressor 35: NuSVR

Regressor 36: OrthogonalMatchingPursuit

Regressor 37: OrthogonalMatchingPursuitCV

Regressor 38: PLSCanonical

Regressor 39: PLSRegression

Regressor 40: PassiveAggressiveRegressor

Regressor 41: PoissonRegressor

Regressor 42: QuantileRegressor

Regressor 43: RANSACRegressor

Regressor 44: RadiusNeighborsRegressor

Regressor 45: RandomForestRegressor

Regressor 46: RegressorChain

Regressor 47: Ridge

Regressor 48: RidgeCV

Regressor 49: SGDRegressor

Regressor 50: SVR

Regressor 51: StackingRegressor

Regressor 52: TheilSenRegressor

Regressor 53: TransformedTargetRegressor

Regressor 54: TweedieRegressor

Regressor 55: VotingRegressor

For convenience, the regressors in this list are highlighted in different colors. Models that require a base regression model are highlighted in gray, while the other models can be used independently. Models successfully exported to the ONNX format are marked in green, and models that encounter errors during conversion in the current version of scikit-learn 1.2.2 are marked in red. Methods unsuitable for the test task considered here are highlighted in blue.

Regression quality analysis uses regression metrics, which are functions of the true and predicted values. Several different metrics are available in the MQL5 language; they are detailed in the article "Evaluating ONNX models using regression metrics".

In this article, three metrics will be used to compare the quality of different models:

- Coefficient of determination R-squared (R2);

- Mean Absolute Error (MAE);

- Mean Squared Error (MSE).
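For reference, the three metrics can be written in a few lines of NumPy. These are plain re-implementations of the textbook formulas (R2 = 1 - SS_res/SS_tot, MAE = mean |y - ŷ|, MSE = mean (y - ŷ)²) for a single output with uniform weights; the function names mirror sklearn.metrics but the code below is not the library's implementation.

```python
import numpy as np

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_true = np.asarray(y_true, dtype=np.float64)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    ss_res = np.sum((y_true - y_pred) ** 2)          # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    return float(1.0 - ss_res / ss_tot)

def mean_absolute_error(y_true, y_pred):
    """Average absolute deviation between true and predicted values."""
    return float(np.mean(np.abs(np.asarray(y_true) - np.asarray(y_pred))))

def mean_squared_error(y_true, y_pred):
    """Average squared deviation between true and predicted values."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

y_true = [3.0, -0.5, 2.0, 7.0]
y_pred = [2.5, 0.0, 2.0, 8.0]
print(r2_score(y_true, y_pred))             # ≈ 0.9486
print(mean_absolute_error(y_true, y_pred))  # 0.5
print(mean_squared_error(y_true, y_pred))   # 0.375
```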

2.1. Scikit-learn Regression Models that convert to ONNX models float and double

This section presents regression models that are successfully converted to the ONNX format in both float and double precision.

All the regression models discussed below are presented in the following format:

- Model description, working principle, advantages, and limitations

- Python script for creating the model, exporting it to ONNX files in float and double formats, and executing the obtained models using ONNX Runtime in Python. Metrics like R^2, MAE, MSE, calculated using sklearn.metrics, are used to evaluate the quality of the original and ONNX models.

- MQL5 script for executing ONNX models (float and double) via ONNX Runtime, with metrics calculated using RegressionMetric().

- ONNX model representation in Netron for float and double precision.

2.1.1. sklearn.linear_model.ARDRegression

ARDRegression (Automatic Relevance Determination Regression) is a regression method that automatically determines the importance (relevance) of the features and sets their weights during model training.

ARDRegression enables the detection and use of only the most important features to build a regression model, which can be beneficial when dealing with a large number of features.

Working Principle of ARDRegression:

- Linear Regression: ARDRegression is based on linear regression, assuming a linear relationship between the independent variables (features) and the target variable.

- Automatic Feature Importance Determination: The main distinction of ARDRegression is that it automatically determines which features are most important for predicting the target variable. This is achieved by placing a separate zero-mean Gaussian prior (a form of regularization) on each weight, which allows the model to drive the weights of less significant features to zero.

- Estimation of Weight Precisions: Instead of a single regularization strength, ARDRegression estimates from the data a separate precision (inverse variance) for each weight. Features whose weights keep a moderate precision are considered relevant and receive non-zero weights, while weights whose precision becomes very large are pruned to zero.

- Dimensionality Reduction: Thus, ARDRegression can lead to data dimensionality reduction by removing insignificant features.

Advantages of ARDRegression:

- Automatic Determination of Important Features: The method automatically identifies and uses only the most important features, potentially enhancing model performance and reducing the risk of overfitting.

- Resilience to Multicollinearity: ARDRegression handles multicollinearity well, even when features are highly correlated.

Limitations of ARDRegression:

- Requires Selection of Prior Distributions: Choosing suitable prior distributions might require experimentation.

- Computational Complexity: Training ARDRegression can be computationally expensive, particularly for large datasets.

ARDRegression is a regression method that automatically determines feature importance and sets the feature weights accordingly. It is useful when a regression model should rely only on the significant features and when the dimensionality of the data needs to be reduced.
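To see relevance determination in practice, the following sketch fits ARDRegression to synthetic data in which only the first two of five features influence the target (the data, coefficients and noise level are illustrative assumptions, not taken from the article). scikit-learn exposes the estimated precision of each weight as the lambda_ attribute; weights whose precision exceeds threshold_lambda (10000 by default) are pruned to zero, so the irrelevant features should end up with large lambda_ values and weights at or near zero.

```python
import numpy as np
from sklearn.linear_model import ARDRegression

# synthetic data: 5 features, only the first two are relevant (illustrative)
rng = np.random.default_rng(0)
n_samples, n_features = 200, 5
X = rng.normal(size=(n_samples, n_features))
true_coef = np.array([3.0, -2.0, 0.0, 0.0, 0.0])
y = X @ true_coef + rng.normal(scale=0.1, size=n_samples)

model = ARDRegression()
model.fit(X, y)

# estimated weights and the per-weight precisions (inverse variances)
print("coefficients:", np.round(model.coef_, 3))
print("weight precisions lambda_:", model.lambda_)
```

A small precision means the prior allows a large weight, so the relevant features are the ones with the smallest lambda_ values.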

2.1.1.1. Code for creating the ARDRegression model and exporting it to ONNX for float and double

This code creates the sklearn.linear_model.ARDRegression model, trains it on synthetic data, saves the model in the ONNX format, and performs predictions using both float and double input data. It also evaluates the accuracy of both the original model and the models exported to ONNX.

```python
# The code demonstrates the process of training ARDRegression model, exporting it to ONNX format (both float and double), and making predictions using the ONNX models.
# Copyright 2023, MetaQuotes Ltd.
# https://www.mql5.com

# function to compare matching decimal places
def compare_decimal_places(value1, value2):
    # convert both values to strings
    str_value1 = str(value1)
    str_value2 = str(value2)
    # find the positions of the decimal points in the strings
    dot_position1 = str_value1.find(".")
    dot_position2 = str_value2.find(".")
    # if one of the values doesn't have a decimal point, return 0
    if dot_position1 == -1 or dot_position2 == -1:
        return 0
    # calculate the number of decimal places
    decimal_places1 = len(str_value1) - dot_position1 - 1
    decimal_places2 = len(str_value2) - dot_position2 - 1
    # find the minimum of the two decimal places counts
    min_decimal_places = min(decimal_places1, decimal_places2)
    # initialize a count for matching decimal places
    matching_count = 0
    # compare characters after the decimal point
    for i in range(1, min_decimal_places + 1):
        if str_value1[dot_position1 + i] == str_value2[dot_position2 + i]:
            matching_count += 1
        else:
            break
    return matching_count

# import necessary libraries
import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import ARDRegression
from sklearn.metrics import r2_score, mean_absolute_error, mean_squared_error
import onnx
import onnxruntime as ort
from skl2onnx import convert_sklearn
from skl2onnx.common.data_types import FloatTensorType
from skl2onnx.common.data_types import DoubleTensorType
from sys import argv

# define the path for saving the model
data_path = argv[0]
last_index = data_path.rfind("\\") + 1
data_path = data_path[0:last_index]

# generate synthetic data for regression
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4*X + 10*np.sin(X*0.5)

model_name = "ARDRegression"
onnx_model_filename = data_path + "ard_regression"

# create an ARDRegression model
regression_model = ARDRegression()
# fit the model to the data
regression_model.fit(X, y.ravel())
# predict values for the entire dataset
y_pred = regression_model.predict(X)

# evaluate the model's performance
r2 = r2_score(y, y_pred)
mse = mean_squared_error(y, y_pred)
mae = mean_absolute_error(y, y_pred)
print("\n"+model_name+" Original model (double)")
print("R-squared (Coefficient of determination):", r2)
print("Mean Absolute Error:", mae)
print("Mean Squared Error:", mse)

# convert to ONNX-model (float)
# define the input data type as FloatTensorType
initial_type_float = [('float_input', FloatTensorType([None, X.shape[1]]))]
# export the model to ONNX format
onnx_model_float = convert_sklearn(regression_model, initial_types=initial_type_float, target_opset=12)
# save the model to a file
onnx_filename = onnx_model_filename+"_float.onnx"
onnx.save_model(onnx_model_float, onnx_filename)
print("\n"+model_name+" ONNX model (float)")
# print model path
print(f"ONNX model saved to {onnx_filename}")

# load the ONNX model and make predictions
onnx_session = ort.InferenceSession(onnx_filename)
input_name = onnx_session.get_inputs()[0].name
output_name = onnx_session.get_outputs()[0].name

# display information about input tensors in ONNX
print("Information about input tensors in ONNX:")
for i, input_tensor in enumerate(onnx_session.get_inputs()):
    print(f"{i + 1}. Name: {input_tensor.name}, Data Type: {input_tensor.type}, Shape: {input_tensor.shape}")

# display information about output tensors in ONNX
print("Information about output tensors in ONNX:")
for i, output_tensor in enumerate(onnx_session.get_outputs()):
    print(f"{i + 1}. Name: {output_tensor.name}, Data Type: {output_tensor.type}, Shape: {output_tensor.shape}")

# convert the input data to float32 to match the model's input type
initial_type_float = X.astype(np.float32)
# predict values for the entire dataset using ONNX
y_pred_onnx_float = onnx_session.run([output_name], {input_name: initial_type_float})[0]

# calculate and display the errors for the original and ONNX models
r2_onnx_float = r2_score(y, y_pred_onnx_float)
mse_onnx_float = mean_squared_error(y, y_pred_onnx_float)
mae_onnx_float = mean_absolute_error(y, y_pred_onnx_float)
print("R-squared (Coefficient of determination)", r2_onnx_float)
print("Mean Absolute Error:", mae_onnx_float)
print("Mean Squared Error:", mse_onnx_float)
print("R^2 matching decimal places: ", compare_decimal_places(r2, r2_onnx_float))
print("MAE matching decimal places: ", compare_decimal_places(mae, mae_onnx_float))
print("MSE matching decimal places: ", compare_decimal_places(mse, mse_onnx_float))
print("float ONNX model precision: ", compare_decimal_places(mae, mae_onnx_float))

# set the figure size
plt.figure(figsize=(8, 5))
# plot the original data and the regression data
plt.scatter(X, y, label='Original Data', marker='o')
plt.scatter(X, y_pred, color='blue', label='Scikit-Learn '+model_name+' Output', marker='o')
plt.scatter(X, y_pred_onnx_float, color='red', label='ONNX '+model_name+' Output', marker='o', linestyle='--')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.title(model_name+' Comparison (with float ONNX)')
#plt.show()
plt.savefig(data_path + model_name+'_plot_float.png')

# convert to ONNX-model (double)
# define the input data type as DoubleTensorType
initial_type_double = [('double_input', DoubleTensorType([None, X.shape[1]]))]
# export the model to ONNX format
onnx_model_double = convert_sklearn(regression_model, initial_types=initial_type_double, target_opset=12)
# save the model to a file
onnx_filename = onnx_model_filename+"_double.onnx"
onnx.save_model(onnx_model_double, onnx_filename)
print("\n"+model_name+" ONNX model (double)")
# print model path
print(f"ONNX model saved to {onnx_filename}")

# load the ONNX model and make predictions
onnx_session = ort.InferenceSession(onnx_filename)
input_name = onnx_session.get_inputs()[0].name
output_name = onnx_session.get_outputs()[0].name

# display information about input tensors in ONNX
print("Information about input tensors in ONNX:")
for i, input_tensor in enumerate(onnx_session.get_inputs()):
    print(f"{i + 1}. Name: {input_tensor.name}, Data Type: {input_tensor.type}, Shape: {input_tensor.shape}")

# display information about output tensors in ONNX
print("Information about output tensors in ONNX:")
for i, output_tensor in enumerate(onnx_session.get_outputs()):
    print(f"{i + 1}. Name: {output_tensor.name}, Data Type: {output_tensor.type}, Shape: {output_tensor.shape}")

# convert the input data to float64 to match the model's input type
initial_type_double = X.astype(np.float64)
# predict values for the entire dataset using ONNX
y_pred_onnx_double = onnx_session.run([output_name], {input_name: initial_type_double})[0]

# calculate and display the errors for the original and ONNX models
r2_onnx_double = r2_score(y, y_pred_onnx_double)
mse_onnx_double = mean_squared_error(y, y_pred_onnx_double)
mae_onnx_double = mean_absolute_error(y, y_pred_onnx_double)
print("R-squared (Coefficient of determination)", r2_onnx_double)
print("Mean Absolute Error:", mae_onnx_double)
print("Mean Squared Error:", mse_onnx_double)
print("R^2 matching decimal places: ", compare_decimal_places(r2, r2_onnx_double))
print("MAE matching decimal places: ", compare_decimal_places(mae, mae_onnx_double))
print("MSE matching decimal places: ", compare_decimal_places(mse, mse_onnx_double))
print("double ONNX model precision: ", compare_decimal_places(mae, mae_onnx_double))

# set the figure size
plt.figure(figsize=(8, 5))
# plot the original data and the regression line (double ONNX predictions)
plt.scatter(X, y, label='Original Data', marker='o')
plt.scatter(X, y_pred, color='blue', label='Scikit-Learn '+model_name+' Output', marker='o')
plt.scatter(X, y_pred_onnx_double, color='red', label='ONNX '+model_name+' Output', marker='o', linestyle='--')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.title(model_name+' Comparison (with double ONNX)')
#plt.show()
plt.savefig(data_path + model_name+'_plot_double.png')
```

The script creates and trains the sklearn.linear_model.ARDRegression model (the original model works in double precision), then exports it to ONNX for float and double (ard_regression_float.onnx and ard_regression_double.onnx) and compares their accuracy.

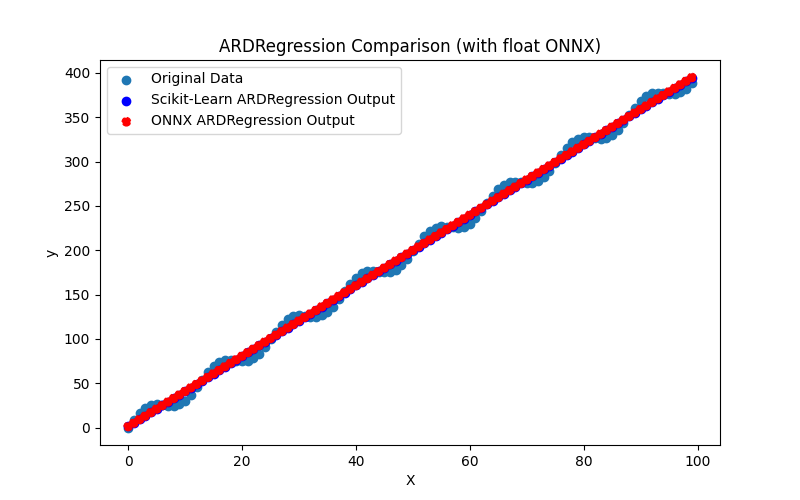

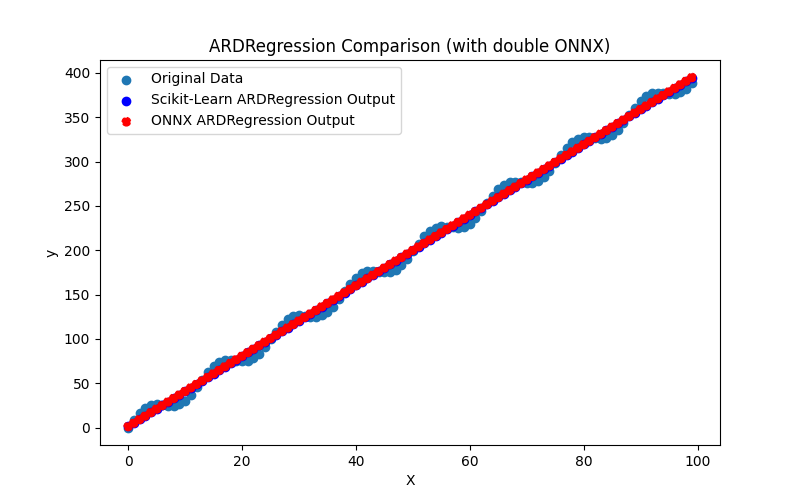

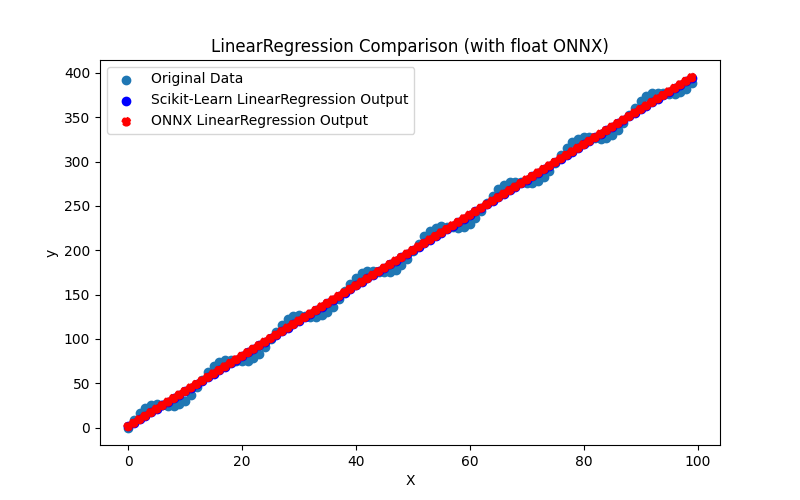

It also generates files ARDRegression_plot_float.png and ARDRegression_plot_double.png, allowing a visual assessment of the results of ONNX models for float and double (Fig. 2-3).

Fig.2. Results of the ARDRegression.py (float)

Fig.3. Results of the ARDRegression.py (double)

Visually, the ONNX models for float and double produce the same results (Fig. 2-3); detailed information can be found in the Journal tab:

```
Python  ARDRegression Original model (double)
Python  R-squared (Coefficient of determination): 0.9962382628120845
Python  Mean Absolute Error: 6.347568012853758
Python  Mean Squared Error: 49.77815934891289
Python  
Python  ARDRegression ONNX model (float)
Python  ONNX model saved to C:\Users\user\AppData\Roaming\MetaQuotes\Terminal\D0E8209F77C8CF37AD8BF550E51FF075\MQL5\Scripts\Regression\ard_regression_float.onnx
Python  Information about input tensors in ONNX:
Python  1. Name: float_input, Data Type: tensor(float), Shape: [None, 1]
Python  Information about output tensors in ONNX:
Python  1. Name: variable, Data Type: tensor(float), Shape: [None, 1]
Python  R-squared (Coefficient of determination) 0.9962382627587808
Python  Mean Absolute Error: 6.347568283744705
Python  Mean Squared Error: 49.778160054267204
Python  R^2 matching decimal places:  9
Python  MAE matching decimal places:  6
Python  ONNX: MSE matching decimal places:  4
Python  float ONNX model precision:  6
Python  
Python  ARDRegression ONNX model (double)
Python  ONNX model saved to C:\Users\user\AppData\Roaming\MetaQuotes\Terminal\D0E8209F77C8CF37AD8BF550E51FF075\MQL5\Scripts\Regression\ard_regression_double.onnx
Python  Information about input tensors in ONNX:
Python  1. Name: double_input, Data Type: tensor(double), Shape: [None, 1]
Python  Information about output tensors in ONNX:
Python  1. Name: variable, Data Type: tensor(double), Shape: [None, 1]
Python  R-squared (Coefficient of determination) 0.9962382628120845
Python  Mean Absolute Error: 6.347568012853758
Python  Mean Squared Error: 49.77815934891289
Python  R^2 matching decimal places:  16
Python  MAE matching decimal places:  15
Python  MSE matching decimal places:  14
Python  double ONNX model precision:  15
```

In this example, the original model works in double precision; it was then exported to the ONNX models ard_regression_float.onnx and ard_regression_double.onnx for float and double, respectively.

If model accuracy is evaluated by the Mean Absolute Error (MAE), the ONNX model for float matches the original model to 6 decimal places, while the ONNX model for double matches it to 15 decimal places, in line with the precision of the original model.
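The gap between roughly 6 and 15 matching digits is about what the storage formats themselves allow: float32 carries about 7 significant decimal digits and float64 about 15-16. The quick NumPy check below illustrates this by round-tripping the original model's MAE (copied from the log above) through both types; it is only an illustration, since the float ONNX model's error also accumulates from float32 arithmetic on the inputs and coefficients, not just from rounding the final value.

```python
import numpy as np

# approximate number of reliable decimal digits for each floating-point type
print("float32 precision:", np.finfo(np.float32).precision)
print("float64 precision:", np.finfo(np.float64).precision)

# round-trip the original model's MAE through float32 and float64
mae = 6.347568012853758
mae32 = float(np.float32(mae))
mae64 = float(np.float64(mae))
print("float32:", mae32, "abs error:", abs(mae32 - mae))
print("float64:", mae64, "abs error:", abs(mae64 - mae))
```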

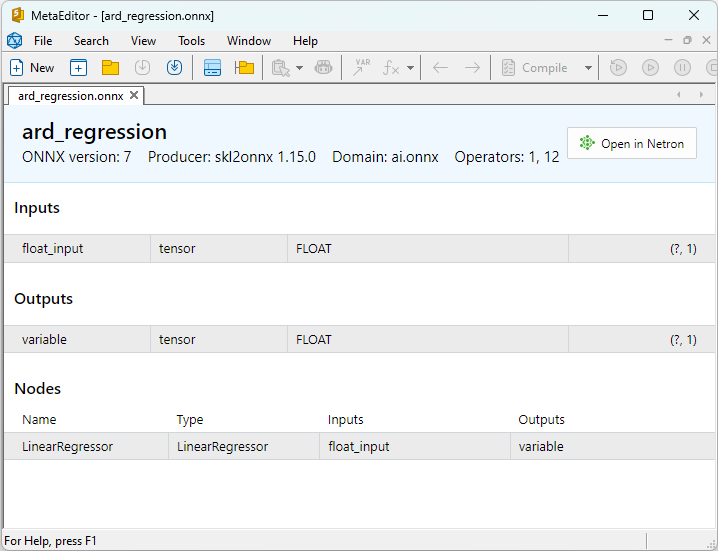

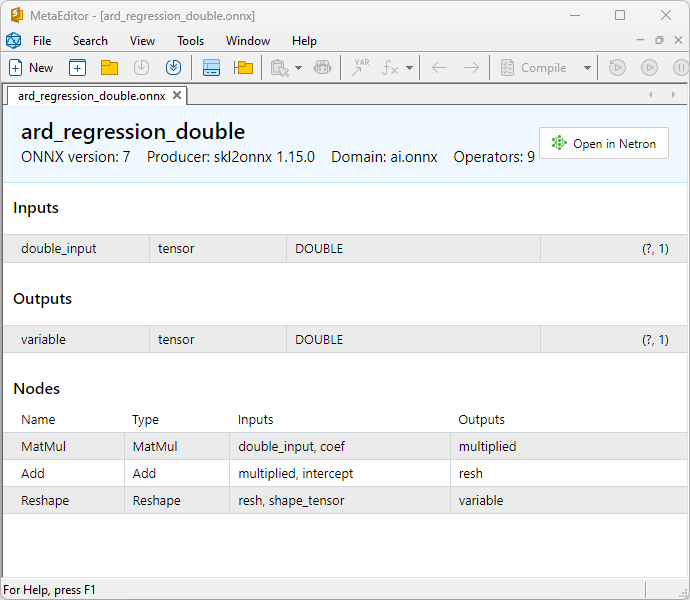

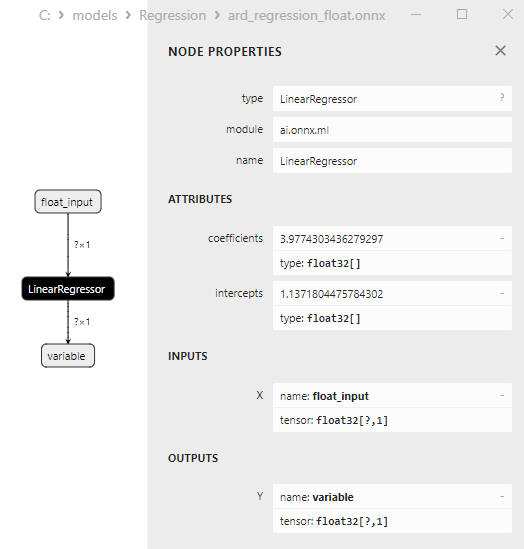

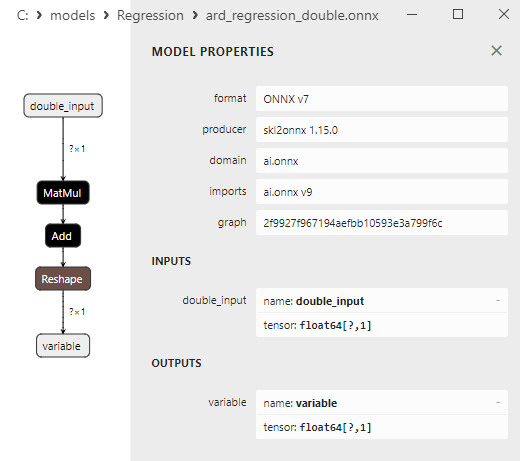

Properties of the ONNX models can be viewed in MetaEditor (Fig. 4-5).

Fig.4. ard_regression_float.onnx ONNX-model in MetaEditor

Fig.5. ard_regression_double.onnx ONNX model in MetaEditor

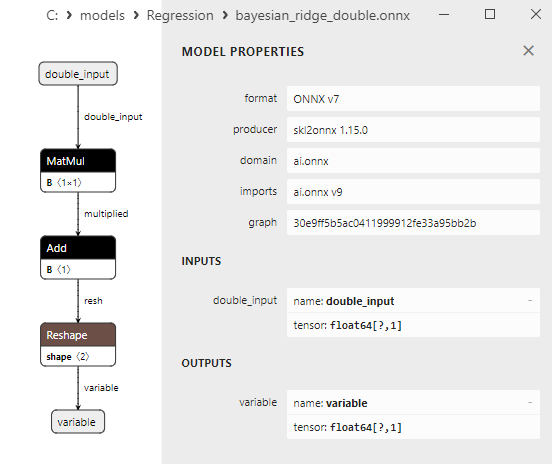

A comparison of the float and double ONNX models shows that in this case the ARDRegression computation is implemented differently: the float model uses the LinearRegressor() operator from ONNX-ML, whereas the double model uses the standard ONNX operators MatMul(), Add(), and Reshape().
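The effect of the two graph variants on precision can be mimicked in NumPy. The sketch below is only an emulation under stated assumptions: the coefficient and intercept are hypothetical values, not the ones fitted by ARDRegression. The float path casts both the parameters and the inputs to float32, as happens with the LinearRegressor-based model, while the double path chains MatMul, Add and Reshape entirely in float64.

```python
import numpy as np

# hypothetical fitted parameters of a one-feature linear model (double precision)
coef = np.array([[4.012345678901234]])   # shape (n_features, n_targets)
intercept = np.array([0.123456789012345])
X = np.arange(0, 100, 1, dtype=np.float64).reshape(-1, 1)

# "float" path: parameters and inputs stored as float32, float32 result
y_float = (X.astype(np.float32) @ coef.astype(np.float32)
           + intercept.astype(np.float32))

# "double" path: MatMul -> Add -> Reshape(-1, 1), everything in float64
y_double = np.reshape(np.matmul(X, coef) + intercept, (-1, 1))

# reference computed in float64
y_ref = X @ coef + intercept
print("max |float - ref| :", np.max(np.abs(y_float - y_ref)))
print("max |double - ref|:", np.max(np.abs(y_double - y_ref)))
```

The double path reproduces the float64 reference exactly, while the float path deviates at roughly the fifth decimal place for outputs of this magnitude.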

The ONNX implementation of a model depends on the converter; the examples in this article use the skl2onnx.convert_sklearn() function from the skl2onnx library to export models to ONNX.

2.1.1.2. MQL5 code for executing ONNX Models

This code executes the saved ard_regression_float.onnx and ard_regression_double.onnx ONNX models and demonstrates the use of regression metrics in MQL5.

```mql5
//+------------------------------------------------------------------+
//|                                                ARDRegression.mq5 |
//|                                  Copyright 2023, MetaQuotes Ltd. |
//|                                             https://www.mql5.com |
//+------------------------------------------------------------------+
#property copyright "Copyright 2023, MetaQuotes Ltd."
#property link      "https://www.mql5.com"
#property version   "1.00"

#define ModelName          "ARDRegression"
#define ONNXFilenameFloat  "ard_regression_float.onnx"
#define ONNXFilenameDouble "ard_regression_double.onnx"

#resource ONNXFilenameFloat as const uchar ExtModelFloat[];
#resource ONNXFilenameDouble as const uchar ExtModelDouble[];

#define TestFloatModel  1
#define TestDoubleModel 2
//+------------------------------------------------------------------+
//| Calculate regression using float values                          |
//+------------------------------------------------------------------+
bool RunModelFloat(long model,vector &input_vector, vector &output_vector)
  {
//--- check number of input samples
   ulong batch_size=input_vector.Size();
   if(batch_size==0)
      return(false);
//--- prepare output array
   output_vector.Resize((int)batch_size);
//--- prepare input tensor
   float input_data[];
   ArrayResize(input_data,(int)batch_size);
//--- set input shape
   ulong input_shape[]= {batch_size, 1};
   OnnxSetInputShape(model,0,input_shape);
//--- copy data to the input tensor
   for(int k=0; k<(int)batch_size; k++)
      input_data[k]=(float)input_vector[k];
//--- prepare output tensor
   float output_data[];
   ArrayResize(output_data,(int)batch_size);
//--- set output shape
   ulong output_shape[]= {batch_size,1};
   OnnxSetOutputShape(model,0,output_shape);
//--- run the model
   bool res=OnnxRun(model,ONNX_DEBUG_LOGS,input_data,output_data);
//--- copy output to vector
   if(res)
     {
      for(int k=0; k<(int)batch_size; k++)
         output_vector[k]=output_data[k];
     }
//---
   return(res);
  }
//+------------------------------------------------------------------+
//| Calculate regression using double values                         |
//+------------------------------------------------------------------+
bool RunModelDouble(long model,vector &input_vector, vector &output_vector)
  {
//--- check number of input samples
   ulong batch_size=input_vector.Size();
   if(batch_size==0)
      return(false);
//--- prepare output array
   output_vector.Resize((int)batch_size);
//--- prepare input tensor
   double input_data[];
   ArrayResize(input_data,(int)batch_size);
//--- set input shape
   ulong input_shape[]= {batch_size, 1};
   OnnxSetInputShape(model,0,input_shape);
//--- copy data to the input tensor
   for(int k=0; k<(int)batch_size; k++)
      input_data[k]=input_vector[k];
//--- prepare output tensor
   double output_data[];
   ArrayResize(output_data,(int)batch_size);
//--- set output shape
   ulong output_shape[]= {batch_size,1};
   OnnxSetOutputShape(model,0,output_shape);
//--- run the model
   bool res=OnnxRun(model,ONNX_DEBUG_LOGS,input_data,output_data);
//--- copy output to vector
   if(res)
     {
      for(int k=0; k<(int)batch_size; k++)
         output_vector[k]=output_data[k];
     }
//---
   return(res);
  }
//+------------------------------------------------------------------+
//| Generate synthetic data                                          |
//+------------------------------------------------------------------+
bool GenerateData(const int n,vector &x,vector &y)
  {
   if(n<=0)
      return(false);
//--- prepare arrays
   x.Resize(n);
   y.Resize(n);
//---
   for(int i=0; i<n; i++)
     {
      x[i]=(double)1.0*i;
      y[i]=(double)(4*x[i] + 10*sin(x[i]*0.5));
     }
//---
   return(true);
  }
//+------------------------------------------------------------------+
//| TestRegressionModel                                              |
//+------------------------------------------------------------------+
bool TestRegressionModel(const string model_name,const int model_type)
  {
//---
   long  model=INVALID_HANDLE;
   ulong flags=ONNX_DEFAULT;

   if(model_type==TestFloatModel)
     {
      PrintFormat("\nTesting ONNX float: %s (%s)",model_name,ONNXFilenameFloat);
      model=OnnxCreateFromBuffer(ExtModelFloat,flags);
     }
   else if(model_type==TestDoubleModel)
     {
      PrintFormat("\nTesting ONNX double: %s (%s)",model_name,ONNXFilenameDouble);
      model=OnnxCreateFromBuffer(ExtModelDouble,flags);
     }
   else
     {
      PrintFormat("Model type is incorrect.");
      return(false);
     }
//--- check
   if(model==INVALID_HANDLE)
     {
      PrintFormat("model_name=%s OnnxCreate error %d",model_name,GetLastError());
      return(false);
     }
//---
   vector x_values= {};
   vector y_true= {};
   vector y_predicted= {};
//---
   int n=100;
   GenerateData(n,x_values,y_true);
//---
   bool run_result=false;
   if(model_type==TestFloatModel)
      run_result=RunModelFloat(model,x_values,y_predicted);
   else if(model_type==TestDoubleModel)
      run_result=RunModelDouble(model,x_values,y_predicted);
//---
   if(run_result)
     {
      PrintFormat("MQL5: R-Squared (Coefficient of determination): %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_R2));
      PrintFormat("MQL5: Mean Absolute Error: %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_MAE));
      PrintFormat("MQL5: Mean Squared Error: %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_MSE));
     }
   else
      PrintFormat("Error %d",GetLastError());
//--- release model
   OnnxRelease(model);
//---
   return(true);
  }
//+------------------------------------------------------------------+
//| Script program start function                                    |
//+------------------------------------------------------------------+
int OnStart(void)
  {
//--- test ONNX regression model for float
   TestRegressionModel(ModelName,TestFloatModel);
//--- test ONNX regression model for double
   TestRegressionModel(ModelName,TestDoubleModel);
//---
   return(0);
  }
//+------------------------------------------------------------------+
```

Output:

```
ARDRegression (EURUSD,H1)       Testing ONNX float: ARDRegression (ard_regression_float.onnx)
ARDRegression (EURUSD,H1)       MQL5: R-Squared (Coefficient of determination): 0.9962382627587808
ARDRegression (EURUSD,H1)       MQL5: Mean Absolute Error: 6.3475682837447049
ARDRegression (EURUSD,H1)       MQL5: Mean Squared Error: 49.7781600542671896
ARDRegression (EURUSD,H1)       
ARDRegression (EURUSD,H1)       Testing ONNX double: ARDRegression (ard_regression_double.onnx)
ARDRegression (EURUSD,H1)       MQL5: R-Squared (Coefficient of determination): 0.9962382628120845
ARDRegression (EURUSD,H1)       MQL5: Mean Absolute Error: 6.3475680128537597
ARDRegression (EURUSD,H1)       MQL5: Mean Squared Error: 49.7781593489128795
```

Comparison with the original double model in Python:

```
Testing ONNX float: ARDRegression (ard_regression_float.onnx)
Python  Mean Absolute Error: 6.347568012853758
MQL5:   Mean Absolute Error: 6.3475682837447049

Testing ONNX double: ARDRegression (ard_regression_double.onnx)
Python  Mean Absolute Error: 6.347568012853758
MQL5:   Mean Absolute Error: 6.3475680128537597
```

Accuracy of ONNX float MAE: 6 decimal places; accuracy of ONNX double MAE: 14 decimal places.

2.1.1.3. The ONNX representations of models ard_regression_float.onnx and ard_regression_double.onnx

Netron (web version) is a tool for visualizing models and analyzing computation graphs, which can be used for models in the ONNX (Open Neural Network Exchange) format.

Netron presents model graphs and their architecture in a clear and interactive form, allowing the exploration of the structure and parameters of deep learning models, including those created using ONNX.

Key features of Netron include:

- Graph Visualization: Netron displays the model's architecture as a graph, enabling you to see the layers, operations, and connections between them. You can easily comprehend the structure and data flow within the model.

- Interactive Exploration: You can select nodes in the graph to obtain additional information about each operator and its parameters.

- Support for Various Formats: Netron supports a variety of deep learning model formats, including ONNX, TensorFlow, PyTorch, CoreML, and others.

- Parameter Analysis Capability: You can view the model's parameters and weights, which is useful for understanding the values used in different parts of the model.

Netron is convenient for developers and researchers in the field of machine learning and deep learning, as it simplifies the visualization and analysis of models, aiding in the understanding and debugging of complex neural networks.

This tool allows for quick model inspection, exploring their structure and parameters, easing the work with deep neural networks.

For more details about Netron, refer to the articles: Visualizing your Neural Network with Netron and Visualize Keras Neural Networks with Netron.

Video about Netron:

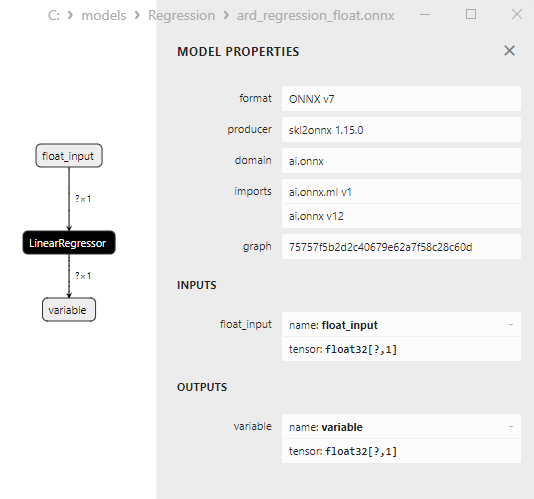

The ard_regression_float.onnx model is shown in Fig. 6:

Fig.6. ONNX representation of the ard_regression_float.onnx model in Netron

The LinearRegressor() operator from the ai.onnx.ml domain is part of the ONNX standard and describes a linear model for regression tasks, that is, for predicting numerical (continuous) values from input features.

The model parameters (weights and bias) are stored in the operator's attributes, while the input features are passed to it as input. At inference time the operator does not estimate anything: it computes a linear combination of each sample's features with the stored weights to produce a prediction.

This operator performs the following steps:

- Uses the model's weights and bias, stored in its attributes, together with the input features.

- For each example of input data, performs a linear combination of weights with the corresponding features.

- Adds the bias to the resulting value.

The result is the prediction of the target variable in the regression task.
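These steps can be emulated in NumPy. The sketch illustrates the documented semantics with post_transform left at its default NONE; it is not the onnxruntime implementation. The helper name linear_regressor is hypothetical, and the row-major [targets, n_features] layout assumed for the flattened coefficients attribute should be checked against the ONNX operator documentation. The result is cast to float32 because the operator always returns tensor(float).

```python
import numpy as np

def linear_regressor(X, coefficients, intercepts, targets=1):
    """Hypothetical NumPy emulation of ai.onnx.ml LinearRegressor (post_transform NONE).

    coefficients: flat list of length targets * n_features, assumed to hold
    one row of weights per target; intercepts: one bias value per target.
    """
    X = np.asarray(X, dtype=np.float32)
    n_features = X.shape[1]
    W = np.asarray(coefficients, dtype=np.float32).reshape(targets, n_features)
    b = np.asarray(intercepts, dtype=np.float32)
    # weighted sum of the features for every sample, plus the bias
    return X @ W.T + b          # shape (N, targets), float32

X = np.array([[1.0, 2.0], [3.0, 4.0]])
Y = linear_regressor(X, coefficients=[0.5, -1.0], intercepts=[2.0])
print(Y)

# two targets: each row of weights produces one output column
Y2 = linear_regressor(X, coefficients=[1.0, 0.0, 0.0, 1.0],
                      intercepts=[0.0, 10.0], targets=2)
print(Y2)
```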

The LinearRegressor() parameters are shown in Fig.7.

Fig.7. The LinearRegressor() operator properties of the ard_regression_float.onnx model in Netron

Fig.8. ONNX representation of the ard_regression_double.onnx model in Netron

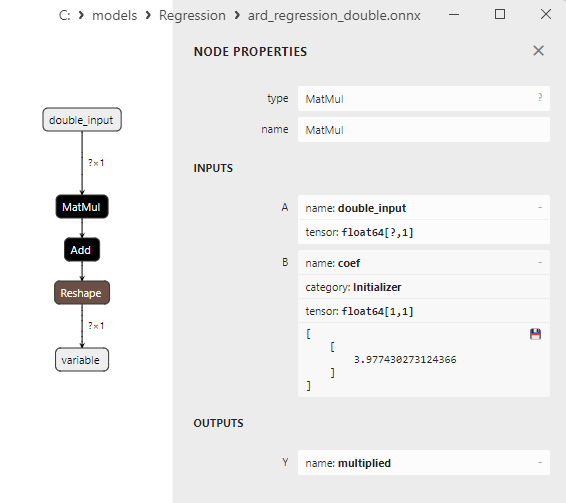

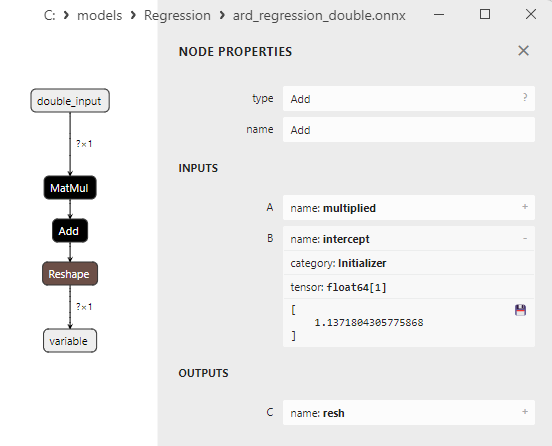

The parameters of the MatMul(), Add() and Reshape() ONNX operators are shown in Fig. 9-11.

Fig.9. Properties of the MatMul operator in the ard_regression_double.onnx model in Netron

The MatMul (matrix multiplication) ONNX operator performs the multiplication of two matrices.

It takes two matrices as inputs and returns their matrix product.

For two matrices A and B, the result of MatMul(A, B) is a matrix C in which each element C[i][j] is the sum of the products of the elements of row i of matrix A and the corresponding elements of column j of matrix B.
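The definition of C[i][j] translates directly into nested loops. The sketch below (the function name matmul_naive and the matrices are hypothetical) checks it against numpy.matmul, whose semantics the ONNX MatMul operator follows. In a one-feature model such as this one, A would presumably be the [N, 1] input and B a [1, 1] weight matrix; that shape is an assumption about the exported graph.

```python
import numpy as np

def matmul_naive(A, B):
    """C[i][j] = sum over k of A[i][k] * B[k][j]."""
    n, inner, m = len(A), len(B), len(B[0])
    if len(A[0]) != inner:
        raise ValueError("the number of columns of A must equal the number of rows of B")
    C = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            # row i of A times column j of B
            C[i][j] = sum(A[i][k] * B[k][j] for k in range(inner))
    return C

A = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # 3x2
B = [[7.0], [8.0]]                         # 2x1
C = matmul_naive(A, B)
print(C)
print(np.matmul(np.array(A), np.array(B)))
```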

Fig.10. Properties of the Add operator in the ard_regression_double.onnx model in Netron

The Add() ONNX operator performs element-wise addition of two tensors. Their shapes must either match or be compatible under NumPy-style (multidirectional) broadcasting, which allows, for example, a bias to be added to every row.

It takes two inputs and returns a tensor in which each element equals the sum of the corresponding elements of the inputs.

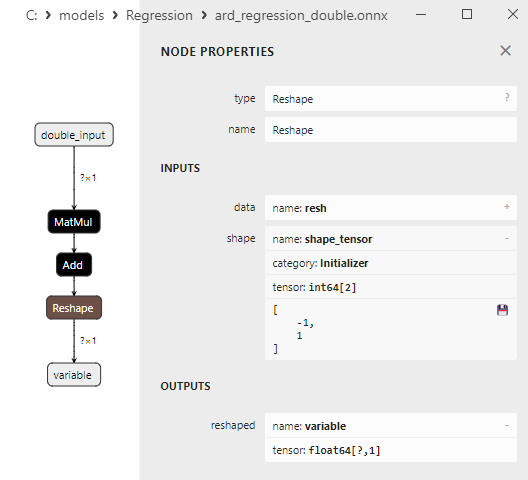

Fig.11. Properties of the Reshape operator in the ard_regression_double.onnx model in Netron

The Reshape(-1,1) ONNX operator changes the shape (dimensions) of the input data without changing its values. The value -1 for a dimension means that its size is computed automatically from the total number of elements and the remaining dimensions.

The value 1 in the second dimension means that the result has exactly one column, so a tensor with N elements becomes a tensor of shape [N, 1].
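NumPy's reshape uses the same -1 convention as the ONNX Reshape operator, so the last two steps of the double model can be sketched as below. The values and the bias of shape (1,) are illustrative assumptions rather than the parameters shown in Netron; the example also shows how Add broadcasts such a bias across all rows.

```python
import numpy as np

# MatMul output for N = 4 samples and one target (illustrative values)
matmul_out = np.array([[0.0], [4.0], [8.0], [12.0]])
bias = np.array([1.5])                 # shape (1,), broadcast by Add

added = matmul_out + bias              # element-wise Add with broadcasting
result = added.reshape(-1, 1)          # -1: the number of rows is inferred

print(added.shape, result.shape)
print(result.ravel())

# -1 is resolved from the total number of elements
print(np.arange(6).reshape(-1, 1).shape)
print(np.arange(6).reshape(-1, 2).shape)
```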

2.1.2. sklearn.linear_model.BayesianRidge

BayesianRidge is a regression method that utilizes a Bayesian approach to estimate model parameters. This method enables modeling the prior distribution of parameters and updating it considering the data to obtain the posterior distribution of parameters.

BayesianRidge is a Bayesian regression method designed to predict the dependent variable based on one or several independent variables.

Working Principle of BayesianRidge:

- Prior distribution of parameters: It begins with defining the prior distribution of model parameters. This distribution represents prior knowledge or assumptions about model parameters before considering the data. In the case of BayesianRidge, Gaussian-shaped prior distributions are used.

- Updating the parameter distribution: Once the prior parameter distribution is set, it is updated based on the data. This is done using Bayes' theorem, which combines the prior with the likelihood of the data to compute the posterior distribution of the parameters. An essential aspect is the estimation of the hyperparameters (the noise and weight precisions), which influence the form of the posterior distribution.

- Prediction: After estimating the posterior distribution of parameters, predictions can be made for new observations. This yields a predictive distribution rather than only a single point value, so the uncertainty of predictions can be taken into account.
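In scikit-learn the predictive uncertainty is available through predict(..., return_std=True), which returns the mean and the standard deviation of the predictive distribution. The sketch below reuses the synthetic series from this article's scripts; the new points and the 1.96 * std interval (a Gaussian approximation) are illustrative choices, not part of the original code. It also prints alpha_ and lambda_, the estimated noise and weight precisions; lambda_ is a single value shared by all weights.

```python
import numpy as np
from sklearn.linear_model import BayesianRidge

# same synthetic series as in the article's scripts
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4 * X + 10 * np.sin(X * 0.5)

model = BayesianRidge()
model.fit(X, y.ravel())

# predictive mean and standard deviation for a few new points
X_new = np.array([[50.0], [100.0], [200.0]])
y_mean, y_std = model.predict(X_new, return_std=True)
for x, m, s in zip(X_new.ravel(), y_mean, y_std):
    print(f"x={x:6.1f}  mean={m:9.3f}  ~95% interval=({m - 1.96 * s:9.3f}, {m + 1.96 * s:9.3f})")

print("noise precision alpha_:", model.alpha_)
print("weight precision lambda_:", model.lambda_)
```

The standard deviation grows for points farther from the training data, which is the kind of uncertainty information a plain point estimate does not provide.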

Advantages of BayesianRidge:

- Uncertainty consideration: BayesianRidge accounts for uncertainty in model parameters and predictions. In addition to point predictions, it can provide the standard deviation of the predictive distribution, from which confidence intervals can be derived.

- Regularization: The Bayesian regression method can be useful for model regularization, aiding in preventing overfitting.

- Automatic shrinkage: BayesianRidge estimates the amount of regularization from the data and shrinks the weights toward zero. Unlike ARDRegression, it uses a single precision shared by all weights, so it does not assign a separate relevance to each feature.

Limitations of BayesianRidge:

- Computational complexity: The method requires computational resources to estimate parameters and compute the posterior distribution.

- High abstraction level: A deeper understanding of Bayesian statistics may be required to comprehend and use BayesianRidge.

- Not always the best choice: BayesianRidge may not be the most suitable method in certain regression tasks, particularly when dealing with limited data.

BayesianRidge is useful in regression tasks where the uncertainty of parameters and predictions is important and in cases where model regularization is needed.

2.1.2.1. Code for creating the BayesianRidge model and exporting it to ONNX for float and double

This code creates the sklearn.linear_model.BayesianRidge model, trains it on synthetic data, saves the model in the ONNX format, and performs predictions using both float and double input data. It also evaluates the accuracy of both the original model and the models exported to ONNX.

# The code demonstrates the process of training BayesianRidge model, exporting it to ONNX format (both float and double), and making predictions using the ONNX models.

# Copyright 2023, MetaQuotes Ltd.

# https://www.mql5.com

# function to compare matching decimal places

def compare_decimal_places(value1, value2):
    # convert both values to strings
    str_value1 = str(value1)
    str_value2 = str(value2)
    # find the positions of the decimal points in the strings
    dot_position1 = str_value1.find(".")
    dot_position2 = str_value2.find(".")
    # if one of the values doesn't have a decimal point, return 0
    if dot_position1 == -1 or dot_position2 == -1:
        return 0
    # calculate the number of decimal places
    decimal_places1 = len(str_value1) - dot_position1 - 1
    decimal_places2 = len(str_value2) - dot_position2 - 1
    # find the minimum of the two decimal places counts
    min_decimal_places = min(decimal_places1, decimal_places2)
    # initialize a count for matching decimal places
    matching_count = 0
    # compare characters after the decimal point
    for i in range(1, min_decimal_places + 1):
        if str_value1[dot_position1 + i] == str_value2[dot_position2 + i]:
            matching_count += 1
        else:
            break
    return matching_count

```python
# import necessary libraries
import numpy as np
import matplotlib.pyplot as plt
from sklearn.linear_model import BayesianRidge
from sklearn.metrics import r2_score, mean_absolute_error, mean_squared_error
import onnx
import onnxruntime as ort
from skl2onnx import convert_sklearn
from skl2onnx.common.data_types import FloatTensorType
from skl2onnx.common.data_types import DoubleTensorType
from sys import argv

# define the path for saving the model (directory of this script, Windows-style "\" separators)
data_path = argv[0]
last_index = data_path.rfind("\\") + 1
data_path = data_path[0:last_index]

# generate synthetic data for regression
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4 * X + 10 * np.sin(X * 0.5)

model_name = "BayesianRidge"
onnx_model_filename = data_path + "bayesian_ridge"

# create a Bayesian Ridge regression model
regression_model = BayesianRidge()

# fit the model to the data
regression_model.fit(X, y.ravel())

# predict values for the entire dataset
y_pred = regression_model.predict(X)

# evaluate the model's performance
r2 = r2_score(y, y_pred)
mse = mean_squared_error(y, y_pred)
mae = mean_absolute_error(y, y_pred)

print("\n" + model_name + " Original model (double)")
print("R-squared (Coefficient of determination):", r2)
print("Mean Absolute Error:", mae)
print("Mean Squared Error:", mse)

# convert to ONNX-model (float)
# define the input data type as FloatTensorType
initial_type_float = [('float_input', FloatTensorType([None, X.shape[1]]))]

# export the model to ONNX format
onnx_model_float = convert_sklearn(regression_model, initial_types=initial_type_float, target_opset=12)

# save the model to a file
onnx_filename = onnx_model_filename + "_float.onnx"
onnx.save_model(onnx_model_float, onnx_filename)

print("\n" + model_name + " ONNX model (float)")
# print model path
print(f"ONNX model saved to {onnx_filename}")

# load the ONNX model and make predictions
onnx_session = ort.InferenceSession(onnx_filename, providers=["CPUExecutionProvider"])
input_name = onnx_session.get_inputs()[0].name
output_name = onnx_session.get_outputs()[0].name

# display information about input tensors in ONNX
print("Information about input tensors in ONNX:")
for i, input_tensor in enumerate(onnx_session.get_inputs()):
    print(f"{i + 1}. Name: {input_tensor.name}, Data Type: {input_tensor.type}, Shape: {input_tensor.shape}")

# display information about output tensors in ONNX
print("Information about output tensors in ONNX:")
for i, output_tensor in enumerate(onnx_session.get_outputs()):
    print(f"{i + 1}. Name: {output_tensor.name}, Data Type: {output_tensor.type}, Shape: {output_tensor.shape}")

# convert the input data to float32 to match the model's input tensor type
X_float = X.astype(np.float32)

# predict values for the entire dataset using ONNX
y_pred_onnx_float = onnx_session.run([output_name], {input_name: X_float})[0]

# calculate and display the errors for the original and ONNX models
r2_onnx_float = r2_score(y, y_pred_onnx_float)
mse_onnx_float = mean_squared_error(y, y_pred_onnx_float)
mae_onnx_float = mean_absolute_error(y, y_pred_onnx_float)

print("R-squared (Coefficient of determination)", r2_onnx_float)
print("Mean Absolute Error:", mae_onnx_float)
print("Mean Squared Error:", mse_onnx_float)
print("R^2 matching decimal places: ", compare_decimal_places(r2, r2_onnx_float))
print("MAE matching decimal places: ", compare_decimal_places(mae, mae_onnx_float))
print("MSE matching decimal places: ", compare_decimal_places(mse, mse_onnx_float))
print("float ONNX model precision: ", compare_decimal_places(mae, mae_onnx_float))

# set the figure size
plt.figure(figsize=(8, 5))

# plot the original data and the regression data
plt.scatter(X, y, label='Original Data', marker='o')
plt.scatter(X, y_pred, color='blue', label='Scikit-Learn ' + model_name + ' Output', marker='o')
plt.scatter(X, y_pred_onnx_float, color='red', label='ONNX ' + model_name + ' Output', marker='o', linestyle='--')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.title(model_name + ' Comparison (with float ONNX)')
# plt.show()
plt.savefig(data_path + model_name + '_plot_float.png')

# convert to ONNX-model (double)
# define the input data type as DoubleTensorType
initial_type_double = [('double_input', DoubleTensorType([None, X.shape[1]]))]

# export the model to ONNX format
onnx_model_double = convert_sklearn(regression_model, initial_types=initial_type_double, target_opset=12)

# save the model to a file
onnx_filename = onnx_model_filename + "_double.onnx"
onnx.save_model(onnx_model_double, onnx_filename)

print("\n" + model_name + " ONNX model (double)")
# print model path
print(f"ONNX model saved to {onnx_filename}")

# load the ONNX model and make predictions
onnx_session = ort.InferenceSession(onnx_filename, providers=["CPUExecutionProvider"])
input_name = onnx_session.get_inputs()[0].name
output_name = onnx_session.get_outputs()[0].name

# display information about input tensors in ONNX
print("Information about input tensors in ONNX:")
for i, input_tensor in enumerate(onnx_session.get_inputs()):
    print(f"{i + 1}. Name: {input_tensor.name}, Data Type: {input_tensor.type}, Shape: {input_tensor.shape}")

# display information about output tensors in ONNX
print("Information about output tensors in ONNX:")
for i, output_tensor in enumerate(onnx_session.get_outputs()):
    print(f"{i + 1}. Name: {output_tensor.name}, Data Type: {output_tensor.type}, Shape: {output_tensor.shape}")

# convert the input data to float64 to match the model's input tensor type
X_double = X.astype(np.float64)

# predict values for the entire dataset using ONNX
y_pred_onnx_double = onnx_session.run([output_name], {input_name: X_double})[0]

# calculate and display the errors for the original and ONNX models
r2_onnx_double = r2_score(y, y_pred_onnx_double)
mse_onnx_double = mean_squared_error(y, y_pred_onnx_double)
mae_onnx_double = mean_absolute_error(y, y_pred_onnx_double)

print("R-squared (Coefficient of determination)", r2_onnx_double)
print("Mean Absolute Error:", mae_onnx_double)
print("Mean Squared Error:", mse_onnx_double)
print("R^2 matching decimal places: ", compare_decimal_places(r2, r2_onnx_double))
print("MAE matching decimal places: ", compare_decimal_places(mae, mae_onnx_double))
print("MSE matching decimal places: ", compare_decimal_places(mse, mse_onnx_double))
print("double ONNX model precision: ", compare_decimal_places(mae, mae_onnx_double))

# set the figure size
plt.figure(figsize=(8, 5))

# plot the original data and the regression line (double ONNX predictions)
plt.scatter(X, y, label='Original Data', marker='o')
plt.scatter(X, y_pred, color='blue', label='Scikit-Learn ' + model_name + ' Output', marker='o')
plt.scatter(X, y_pred_onnx_double, color='red', label='ONNX ' + model_name + ' Output', marker='o', linestyle='--')
plt.xlabel('X')
plt.ylabel('y')
plt.legend()
plt.title(model_name + ' Comparison (with double ONNX)')
# plt.show()
plt.savefig(data_path + model_name + '_plot_double.png')
```

Output:

```
Python BayesianRidge Original model (double)
Python R-squared (Coefficient of determination): 0.9962382628120845
Python Mean Absolute Error: 6.347568012853758
Python Mean Squared Error: 49.77815934891288
Python
Python BayesianRidge ONNX model (float)
Python ONNX model saved to C:\Users\user\AppData\Roaming\MetaQuotes\Terminal\D0E8209F77C8CF37AD8BF550E51FF075\MQL5\Scripts\Regression\bayesian_ridge_float.onnx
Python Information about input tensors in ONNX:
Python 1. Name: float_input, Data Type: tensor(float), Shape: [None, 1]
Python Information about output tensors in ONNX:
Python 1. Name: variable, Data Type: tensor(float), Shape: [None, 1]
Python R-squared (Coefficient of determination) 0.9962382627587808
Python Mean Absolute Error: 6.347568283744705
Python Mean Squared Error: 49.778160054267204
Python R^2 matching decimal places: 9
Python MAE matching decimal places: 6
Python MSE matching decimal places: 4
Python float ONNX model precision: 6
Python
Python BayesianRidge ONNX model (double)
Python ONNX model saved to C:\Users\user\AppData\Roaming\MetaQuotes\Terminal\D0E8209F77C8CF37AD8BF550E51FF075\MQL5\Scripts\Regression\bayesian_ridge_double.onnx
Python Information about input tensors in ONNX:
Python 1. Name: double_input, Data Type: tensor(double), Shape: [None, 1]
Python Information about output tensors in ONNX:
Python 1. Name: variable, Data Type: tensor(double), Shape: [None, 1]
Python R-squared (Coefficient of determination) 0.9962382628120845
Python Mean Absolute Error: 6.347568012853758
Python Mean Squared Error: 49.77815934891288
Python R^2 matching decimal places: 16
Python MAE matching decimal places: 15
Python MSE matching decimal places: 14
Python double ONNX model precision: 15
```
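The float model agrees with the original to about six decimal places in MAE, which is roughly what single precision allows: a float32 value has a 24-bit significand (about 7 significant decimal digits), and for a number between 4 and 8 the spacing between neighbouring float32 values is 2^-21, so rounding alone can move it by up to 2^-22 ≈ 2.4e-7. That is the same order of magnitude as the 2.7e-7 gap between the original MAE and the float ONNX MAE in the output above. The stdlib sketch below illustrates this, assuming IEEE 754 binary32 for float32; `to_float32` is a hypothetical helper that mimics `np.float32(value)`. It also shows that the integer inputs 0..99 survive the cast to float32 exactly, so the loss comes from the float parameters and float arithmetic rather than from the inputs.

```python
import struct


def to_float32(value: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value and back.

    Hypothetical helper for illustration; it behaves like np.float32(value).
    """
    return struct.unpack("f", struct.pack("f", value))[0]


# The synthetic inputs X = 0, 1, ..., 99 are small integers, exactly representable in float32
inputs_exact = all(to_float32(float(x)) == float(x) for x in range(100))

# MAE of the original double model, as printed in the Python output
mae_double = 6.347568012853758
mae_as_float32 = to_float32(mae_double)

# For 4 <= |x| < 8 the float32 spacing is 2**-21, so rounding moves x by at most 2**-22
rounding_error = abs(mae_as_float32 - mae_double)

print("integer inputs exact in float32:", inputs_exact)
print("MAE rounded to float32:", repr(mae_as_float32))
print("rounding error:", rounding_error, "<= 2**-22:", rounding_error <= 2**-22)
```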

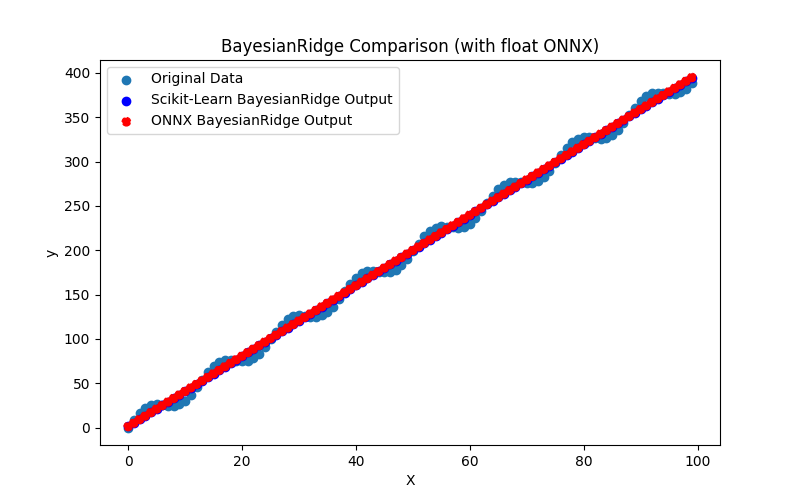

Fig.12. Results of the BayesianRidge.py (float ONNX)
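BayesianRidge is a linear model: once fitted, its point prediction is simply `X @ coef_ + intercept_`, which is why it can be exported as a small linear ONNX graph. The sketch below checks this on the same synthetic data, assuming scikit-learn and NumPy are installed; `coef_`, `intercept_` and `predict(..., return_std=True)` are documented members of `sklearn.linear_model.BayesianRidge`. The predictive standard deviation is shown only for contrast: the ONNX models exported above expose a single output (`variable`), i.e. the point prediction.

```python
import numpy as np
from sklearn.linear_model import BayesianRidge

# Same synthetic data as in BayesianRidge.py
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4 * X + 10 * np.sin(X * 0.5)

model = BayesianRidge()
model.fit(X, y.ravel())

# The point prediction is an affine function of the input
manual_pred = X @ model.coef_ + model.intercept_
sklearn_pred = model.predict(X)
print("max |difference|:", np.max(np.abs(manual_pred - sklearn_pred)))

# BayesianRidge can also return the predictive standard deviation,
# which the single-output ONNX models above do not include
mean, std = model.predict(X, return_std=True)
print("first predictive std:", std[0])
```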

2.1.2.2. MQL5 code for executing ONNX Models

This code runs the saved bayesian_ridge_float.onnx and bayesian_ridge_double.onnx ONNX models and demonstrates the use of regression metrics in MQL5.

```mql5
//+------------------------------------------------------------------+
//|                                                BayesianRidge.mq5 |
//|                                  Copyright 2023, MetaQuotes Ltd. |
//|                                             https://www.mql5.com |
//+------------------------------------------------------------------+
#property copyright "Copyright 2023, MetaQuotes Ltd."
#property link      "https://www.mql5.com"
#property version   "1.00"

#define   ModelName          "BayesianRidge"
#define   ONNXFilenameFloat  "bayesian_ridge_float.onnx"
#define   ONNXFilenameDouble "bayesian_ridge_double.onnx"

#resource ONNXFilenameFloat as const uchar ExtModelFloat[];
#resource ONNXFilenameDouble as const uchar ExtModelDouble[];

#define   TestFloatModel  1
#define   TestDoubleModel 2

//+------------------------------------------------------------------+
//| Calculate regression using float values                          |
//+------------------------------------------------------------------+
bool RunModelFloat(long model,vector &input_vector, vector &output_vector)
  {
//--- check number of input samples
   ulong batch_size=input_vector.Size();
   if(batch_size==0)
      return(false);
//--- prepare output array
   output_vector.Resize((int)batch_size);
//--- prepare input tensor
   float input_data[];
   ArrayResize(input_data,(int)batch_size);
//--- set input shape
   ulong input_shape[]= {batch_size, 1};
   OnnxSetInputShape(model,0,input_shape);
//--- copy data to the input tensor
   for(int k=0; k<(int)batch_size; k++)
      input_data[k]=(float)input_vector[k];
//--- prepare output tensor
   float output_data[];
   ArrayResize(output_data,(int)batch_size);
//--- set output shape
   ulong output_shape[]= {batch_size,1};
   OnnxSetOutputShape(model,0,output_shape);
//--- run the model
   bool res=OnnxRun(model,ONNX_DEBUG_LOGS,input_data,output_data);
//--- copy output to vector
   if(res)
     {
      for(int k=0; k<(int)batch_size; k++)
         output_vector[k]=output_data[k];
     }
//---
   return(res);
  }
//+------------------------------------------------------------------+
//| Calculate regression using double values                         |
//+------------------------------------------------------------------+
bool RunModelDouble(long model,vector &input_vector, vector &output_vector)
  {
//--- check number of input samples
   ulong batch_size=input_vector.Size();
   if(batch_size==0)
      return(false);
//--- prepare output array
   output_vector.Resize((int)batch_size);
//--- prepare input tensor
   double input_data[];
   ArrayResize(input_data,(int)batch_size);
//--- set input shape
   ulong input_shape[]= {batch_size, 1};
   OnnxSetInputShape(model,0,input_shape);
//--- copy data to the input tensor
   for(int k=0; k<(int)batch_size; k++)
      input_data[k]=input_vector[k];
//--- prepare output tensor
   double output_data[];
   ArrayResize(output_data,(int)batch_size);
//--- set output shape
   ulong output_shape[]= {batch_size,1};
   OnnxSetOutputShape(model,0,output_shape);
//--- run the model
   bool res=OnnxRun(model,ONNX_DEBUG_LOGS,input_data,output_data);
//--- copy output to vector
   if(res)
     {
      for(int k=0; k<(int)batch_size; k++)
         output_vector[k]=output_data[k];
     }
//---
   return(res);
  }
//+------------------------------------------------------------------+
//| Generate synthetic data                                          |
//+------------------------------------------------------------------+
bool GenerateData(const int n,vector &x,vector &y)
  {
   if(n<=0)
      return(false);
//--- prepare arrays
   x.Resize(n);
   y.Resize(n);
//---
   for(int i=0; i<n; i++)
     {
      x[i]=(double)1.0*i;
      y[i]=(double)(4*x[i] + 10*sin(x[i]*0.5));
     }
//---
   return(true);
  }
//+------------------------------------------------------------------+
//| TestRegressionModel                                              |
//+------------------------------------------------------------------+
bool TestRegressionModel(const string model_name,const int model_type)
  {
//---
   long model=INVALID_HANDLE;
   ulong flags=ONNX_DEFAULT;

   if(model_type==TestFloatModel)
     {
      PrintFormat("\nTesting ONNX float: %s (%s)",model_name,ONNXFilenameFloat);
      model=OnnxCreateFromBuffer(ExtModelFloat,flags);
     }
   else if(model_type==TestDoubleModel)
     {
      PrintFormat("\nTesting ONNX double: %s (%s)",model_name,ONNXFilenameDouble);
      model=OnnxCreateFromBuffer(ExtModelDouble,flags);
     }
   else
     {
      PrintFormat("Model type is incorrect.");
      return(false);
     }
//--- check
   if(model==INVALID_HANDLE)
     {
      PrintFormat("model_name=%s OnnxCreate error %d",model_name,GetLastError());
      return(false);
     }
//---
   vector x_values= {};
   vector y_true= {};
   vector y_predicted= {};
//---
   int n=100;
   GenerateData(n,x_values,y_true);
//---
   bool run_result=false;
   if(model_type==TestFloatModel)
     {
      run_result=RunModelFloat(model,x_values,y_predicted);
     }
   else if(model_type==TestDoubleModel)
     {
      run_result=RunModelDouble(model,x_values,y_predicted);
     }
//---
   if(run_result)
     {
      PrintFormat("MQL5: R-Squared (Coefficient of determination): %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_R2));
      PrintFormat("MQL5: Mean Absolute Error: %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_MAE));
      PrintFormat("MQL5: Mean Squared Error: %.16f",y_predicted.RegressionMetric(y_true,REGRESSION_MSE));
     }
   else
      PrintFormat("Error %d",GetLastError());
//--- release model
   OnnxRelease(model);
//---
   return(true);
  }
//+------------------------------------------------------------------+
//| Script program start function                                    |
//+------------------------------------------------------------------+
int OnStart(void)
  {
//--- test ONNX regression model for float
   TestRegressionModel(ModelName,TestFloatModel);
//--- test ONNX regression model for double
   TestRegressionModel(ModelName,TestDoubleModel);
//---
   return(0);
  }
//+------------------------------------------------------------------+
```
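The MQL5 script scores the predictions with `vector::RegressionMetric` using `REGRESSION_R2`, `REGRESSION_MAE` and `REGRESSION_MSE`. Assuming these follow the standard definitions (the same ones behind scikit-learn's `r2_score`, `mean_absolute_error` and `mean_squared_error`, which is consistent with the matching values in the two logs), the three metrics fit in a few lines of plain Python. The function name `regression_metrics` is hypothetical and used only for illustration.

```python
def regression_metrics(y_true, y_pred):
    """Return (R^2, MAE, MSE) using the standard textbook definitions."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(r) for r in residuals) / n           # mean absolute error
    mse = sum(r * r for r in residuals) / n            # mean squared error
    mean_true = sum(y_true) / n
    ss_res = sum(r * r for r in residuals)             # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true) # total sum of squares
    if ss_tot == 0:
        raise ValueError("R^2 is undefined for constant y_true")
    r2 = 1.0 - ss_res / ss_tot
    return r2, mae, mse


# Small worked example: one prediction is off by 1
print(regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0]))
```

For this input the residuals are 0, 0 and -1, so MAE = MSE = 1/3, while SS_res = 1 and SS_tot = 2 give R^2 = 0.5.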

Output:

```
BayesianRidge (EURUSD,H1) Testing ONNX float: BayesianRidge (bayesian_ridge_float.onnx)
BayesianRidge (EURUSD,H1) MQL5: R-Squared (Coefficient of determination): 0.9962382627587808
BayesianRidge (EURUSD,H1) MQL5: Mean Absolute Error: 6.3475682837447049
BayesianRidge (EURUSD,H1) MQL5: Mean Squared Error: 49.7781600542671896
BayesianRidge (EURUSD,H1)
BayesianRidge (EURUSD,H1) Testing ONNX double: BayesianRidge (bayesian_ridge_double.onnx)
BayesianRidge (EURUSD,H1) MQL5: R-Squared (Coefficient of determination): 0.9962382628120845
BayesianRidge (EURUSD,H1) MQL5: Mean Absolute Error: 6.3475680128537624
BayesianRidge (EURUSD,H1) MQL5: Mean Squared Error: 49.7781593489128866
```

Comparison with the original double model in Python:

```
Testing ONNX float: BayesianRidge (bayesian_ridge_float.onnx)
Python Mean Absolute Error: 6.347568012853758
MQL5:  Mean Absolute Error: 6.3475682837447049

Testing ONNX double: BayesianRidge (bayesian_ridge_double.onnx)
Python Mean Absolute Error: 6.347568012853758
MQL5:  Mean Absolute Error: 6.3475680128537624
```

Accuracy of ONNX float MAE: 6 decimal places; accuracy of ONNX double MAE: 13 decimal places.
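These decimal-place counts come from comparing printed digits as strings, which is easy to read but can understate agreement when two close values straddle a digit boundary: `compare_decimal_places(0.3, 0.29999995)` returns 0 even though the values differ by only 5e-8. A minimal numeric complement is sketched below; the helper names `agreeing_decimals` and `ulp_distance` are hypothetical. The first measures agreement as floor(-log10|a - b|), the second expresses the difference in units in the last place using `math.ulp` (available since Python 3.9).

```python
import math


def agreeing_decimals(a: float, b: float, max_digits: int = 17) -> int:
    """Decimal places to which a and b agree, based on |a - b|.

    Hypothetical helper: returns floor(-log10(|a - b|)) clamped to [0, max_digits],
    and max_digits when the two values are identical.
    """
    diff = abs(a - b)
    if diff == 0.0:
        return max_digits
    return max(0, min(max_digits, math.floor(-math.log10(diff))))


def ulp_distance(a: float, b: float) -> float:
    """Difference between a and b expressed in units in the last place of a."""
    return abs(a - b) / math.ulp(a)


mae_python = 6.347568012853758        # original double model (Python)
mae_onnx_float = 6.3475682837447049   # float ONNX model (MQL5 log)
mae_onnx_double = 6.3475680128537624  # double ONNX model (MQL5 log)

print(agreeing_decimals(0.3, 0.29999995))
print(agreeing_decimals(mae_python, mae_onnx_float))
print(agreeing_decimals(mae_python, mae_onnx_double))
print(ulp_distance(mae_python, mae_onnx_double))
```

For the logged MAE values this gives 6 decimal places for the float model and 14 for the double model, and the double results differ by only a few ulps. A gap that small is plausibly due to minor differences in how MQL5 and Python compute the data and the metrics rather than to any precision loss in the double ONNX model.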

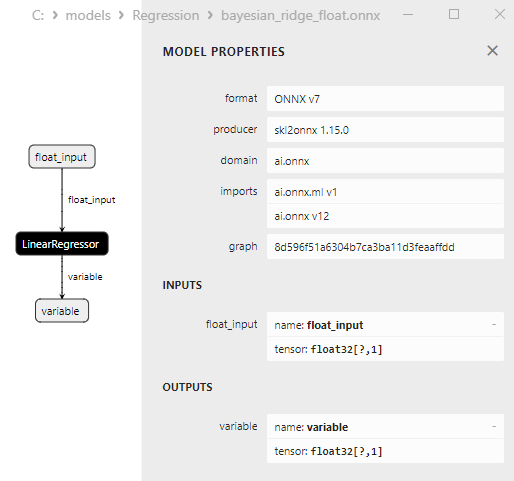

2.1.2.3. ONNX representation of bayesian_ridge_float.onnx and bayesian_ridge_double.onnx

Fig.13. ONNX representation of the bayesian_ridge_float.onnx in Netron

Fig.14. ONNX representation of the bayesian_ridge_double.onnx in Netron

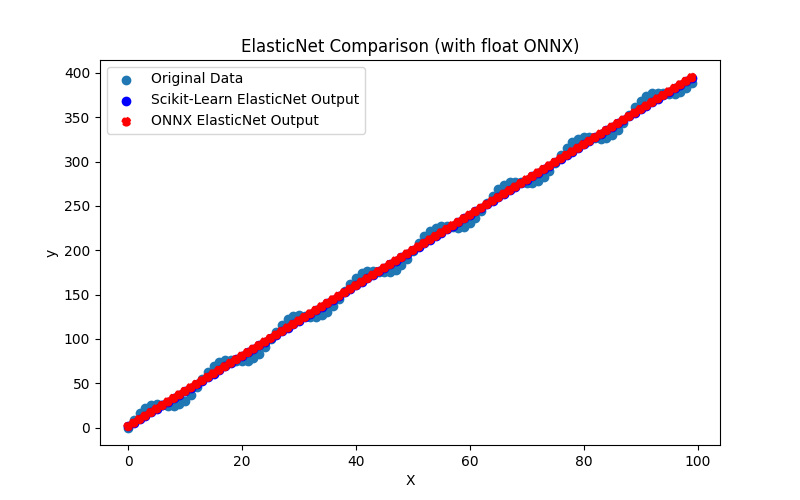

Note on ElasticNet and ElasticNetCV Methods

ElasticNet and ElasticNetCV are two related scikit-learn estimators for regularized linear regression. They fit the same kind of model and differ in how the regularization hyperparameters are chosen: ElasticNet uses the values passed to it, while ElasticNetCV selects them by cross-validation.

ElasticNet (Elastic Net Regression):

- Working Principle: ElasticNet is a regression method that combines Lasso (L1 regularization) and Ridge (L2 regularization). It adds two penalty terms to the least-squares loss: one penalizes large absolute values of the coefficients (as in Lasso), and the other penalizes large squared coefficients (as in Ridge).

- Application: ElasticNet is commonly used when the data exhibit multicollinearity (highly correlated features), when feature selection is useful (the L1 term can shrink some coefficients to exactly zero), and when the magnitude of the coefficients needs to be kept under control.
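In scikit-learn's parameterization (as given in the `ElasticNet` documentation), the two penalties are combined through `alpha`, the overall regularization strength, and `l1_ratio`, written here as $\rho$, the share of the L1 term. The estimator minimizes:

$$
\min_{w}\; \frac{1}{2\,n_{\text{samples}}}\,\lVert y - Xw \rVert_2^2 \;+\; \alpha\,\rho\,\lVert w \rVert_1 \;+\; \frac{\alpha\,(1-\rho)}{2}\,\lVert w \rVert_2^2
$$

With $\rho = 1$ only the L1 (Lasso) penalty remains, and with $\rho = 0$ only the L2 (ridge-type) penalty remains; values in between mix the two.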

ElasticNetCV (Elastic Net Cross-Validation):

- Working Principle: ElasticNetCV is an extension of ElasticNet that selects the hyperparameters automatically by cross-validation. In scikit-learn these are alpha (the overall regularization strength) and l1_ratio (the mixing ratio between the L1 and L2 penalties). ElasticNetCV evaluates a path of alpha values and, when l1_ratio is given as a list, each of the listed ratios, and keeps the combination with the lowest mean cross-validated error.

- Advantages: ElasticNetCV tunes the hyperparameters from the data, so no manual search is needed. This makes it more convenient to use and helps reduce the risk of overfitting.
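A minimal usage sketch, assuming scikit-learn and NumPy are installed and reusing the synthetic data from the regression scripts. ElasticNetCV generates the grid of alpha values itself; passing a list to `l1_ratio` makes it also try each mixing ratio, and the selected values are exposed as the fitted attributes `alpha_` and `l1_ratio_`. The particular list of ratios and `cv=5` are arbitrary choices for illustration.

```python
import numpy as np
from sklearn.linear_model import ElasticNetCV

# Same synthetic data as in the regression scripts of this article
X = np.arange(0, 100, 1).reshape(-1, 1)
y = 4 * X + 10 * np.sin(X * 0.5)

# Candidate mixing ratios; the alpha path is generated automatically
model = ElasticNetCV(l1_ratio=[0.1, 0.5, 0.9], cv=5)
model.fit(X, y.ravel())

print("selected alpha:", model.alpha_)
print("selected l1_ratio:", model.l1_ratio_)
print("R^2 on training data:", model.score(X, y.ravel()))
```

Because the selected estimator is still an ordinary linear model, it can be passed to `convert_sklearn` in the same way as the other linear regressors in this article.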