Batch normalization

The practical application of neural networks involves various approaches to data normalization. All of them aim to keep the training data and the outputs of the hidden layers within a specified range and with certain statistical characteristics, such as mean and variance. Why is this so important? Recall that the neurons of the network apply linear transformations to their inputs, and during training the parameters of these transformations are shifted in the direction of the anti-gradient.

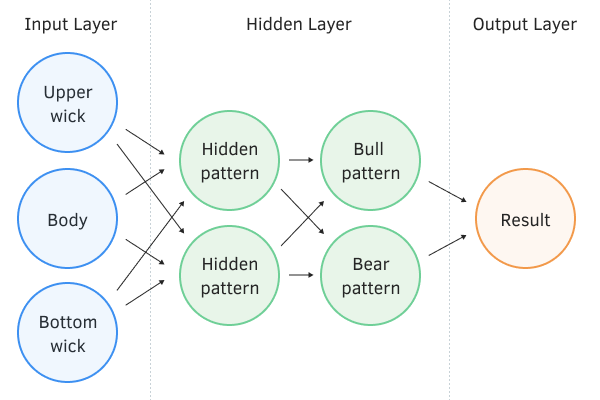

Consider a fully connected perceptron with two hidden layers. In the forward pass, each layer generates a set of data that serves as a training sample for the next layer. The output layer's results are compared with the reference data, and during the backward pass, the error gradient is propagated from the output layer through hidden layers to the input data.

Perceptron with two hidden layers

Having obtained the error gradient for each neuron, we update the weights, tuning our neural network to the training samples from the last forward pass. Here arises a conflict: we are adjusting the second hidden layer (labeled as 'Bull pattern' and 'Bear pattern' in the above diagram) to the output data of the first hidden layer (labeled as 'Hidden pattern' in the diagram). However, by changing the parameters of the first hidden layer, we have already altered the data array. In other words, we are adjusting the second hidden layer to a data sample that no longer exists.

The situation is similar for the output layer, which adjusts to the already modified output of the second hidden layer. If we also take into account the distortion between the first and second hidden layers, the error is amplified even further. The deeper the neural network, the stronger this effect becomes. This phenomenon is referred to as internal covariate shift.
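The effect described above can be illustrated with a small sketch. The single linear neuron, its input values, and the parameter values before and after the update are all hypothetical and chosen only for illustration; the point is that the statistics of the data seen by the next layer change once the previous layer's parameters change.

```python
from statistics import mean, pvariance

def layer_output(inputs, weight, bias):
    """Linear neuron: these values become the input of the next layer."""
    return [weight * x + bias for x in inputs]

# Hypothetical inputs of the first hidden layer (illustrative values only).
inputs = [0.5, -1.0, 2.0, 1.5]

# Output of the first hidden layer during the forward pass.
before = layer_output(inputs, weight=1.0, bias=0.0)

# Output for the same inputs after an assumed weight update.
after = layer_output(inputs, weight=1.5, bias=0.2)

stats_before = (mean(before), pvariance(before))
stats_after = (mean(after), pvariance(after))
print("before update: mean=%.4f variance=%.4f" % stats_before)
print("after update:  mean=%.4f variance=%.4f" % stats_after)
```

The second hidden layer was tuned to the distribution described by `stats_before`, but on the next forward pass it receives data distributed according to `stats_after`.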

In classical neural networks, this problem was partially solved by reducing the learning rate. Small changes in the weights do not significantly alter the distribution of the output of the neural layer. However, this approach does not scale as the number of layers in the neural network grows, and it slows down training. Another issue with a low learning rate is the risk of getting stuck in local minima (we already discussed this issue in the section about neural network optimization methods).

Here, it is also worth mentioning the need to normalize the input data. Quite often, the input layer of a neural network receives diverse data drawn from samples with different distributions. Some inputs can have values that significantly exceed the magnitudes of the others, and such values will have a greater impact on the final result of the neural network. At the same time, the actual significance of such an input may be much lower: its large absolute values merely reflect the nature of the metric.

The chart below shows a single price movement reflected by two oscillators (MACD and RSI). Looking at the indicator charts, you can notice that the curves are correlated. At the same time, the numerical values of the indicators differ by hundreds of thousands of times. This is because RSI values are normalized to a scale from 0 to 100, while MACD values depend on the price scale of the instrument, as MACD shows the distance between two moving averages.

When building a trading strategy, we can utilize either of these indicators individually or consider the values of both indicators and execute trading operations only when the signals from the indicators align. In practice, this approach enables the exclusion of some false signals, which eventually can reduce the drawdown of the trading strategy. However, before we input such diverse signals into the neural network, it's advisable to normalize them to a comparable form. This is what the normalization of the initial data will help us to achieve.

Of course, we can perform the normalization of the input data while preparing the training and testing datasets outside the neural network. But this approach increases the preparatory work. Moreover, during practical usage of such a neural network, we will need to consistently prepare the input data using a similar algorithm. It is much more convenient to assign this work to the neural network itself.
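Normalization performed outside the network, as described above, could look like the following minimal sketch. The helper names `fit_scaler` and `apply_scaler` and the indicator values are hypothetical and used only for illustration. Note that the statistics computed on the training data have to be stored and reused every time new data is fed to the network.

```python
from statistics import mean, pstdev

def fit_scaler(values):
    """Compute the mean and standard deviation on the training data."""
    return mean(values), pstdev(values)

def apply_scaler(values, mu, sigma, eps=1e-8):
    """Standardize values with previously stored statistics."""
    return [(v - mu) / (sigma + eps) for v in values]

# Hypothetical indicator readings for the same five bars (not real quotes).
macd_train = [0.00012, 0.00035, -0.00008, -0.00041, 0.00020]
rsi_train = [55.0, 68.0, 47.0, 31.0, 60.0]

macd_mu, macd_sigma = fit_scaler(macd_train)
rsi_mu, rsi_sigma = fit_scaler(rsi_train)

macd_norm = apply_scaler(macd_train, macd_mu, macd_sigma)
rsi_norm = apply_scaler(rsi_train, rsi_mu, rsi_sigma)

# In production, new values must be scaled with the SAME stored statistics.
new_rsi_norm = apply_scaler([50.0], rsi_mu, rsi_sigma)

print([round(v, 3) for v in macd_norm])
print([round(v, 3) for v in rsi_norm])
```

After scaling, both series have a comparable range even though the raw values differ by several orders of magnitude.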

In February 2015, Sergey Ioffe and Christian Szegedy proposed the Batch Normalization method in their work "Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift". The method was proposed to address the issue of internal covariate shift. This algorithm can also be applied for normalizing input data.

Chart: EURUSD H1 with the MACD and RSI indicators

The essence of the method is to normalize the output of each individual neuron over a batch of samples by shifting the sample mean to zero and scaling the sample variance to one.

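The normalization algorithm is as follows. First, the mean value is calculated over the data sample.

$$\mu_B = \frac{1}{m} \sum_{i=1}^{m} x_i$$

Where:

- $\mu_B$ = the arithmetic mean of the feature over the sample

- $m$ = the sample size (batch)

- $x_i$ = the value of the feature for the i-th element of the sample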

Then we calculate the variance of the original sample.

$$\sigma_B^2 = \frac{1}{m} \sum_{i=1}^{m} \left(x_i - \mu_B\right)^2$$

We normalize the sample data by reducing the sample to zero mean and unit variance.

$$\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \varepsilon}}$$

Note that a small positive constant ε is added to the sample variance in the denominator to prevent division by zero.
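The three steps above (mean, variance, normalization) can be sketched for a single feature as follows. The function name `normalize_batch`, the value of `eps`, and the sample values are assumptions made for illustration.

```python
import math

def normalize_batch(batch, eps=1e-5):
    """Normalize one feature over a batch to zero mean and unit variance."""
    m = len(batch)
    # Step 1: arithmetic mean of the feature over the batch.
    mu = sum(batch) / m
    # Step 2: variance of the feature over the batch.
    var = sum((x - mu) ** 2 for x in batch) / m
    # Step 3: normalization; eps protects against division by zero.
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in batch]
    return x_hat, mu, var

# Hypothetical outputs of one neuron for a batch of four samples.
x_hat, mu, var = normalize_batch([2.0, 4.0, 6.0, 8.0])
print(mu, var)
print([round(v, 4) for v in x_hat])
```

Because of `eps`, the variance of the normalized values is slightly below one; the difference is negligible when the batch variance is much larger than `eps`.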

As it turned out, such normalization can distort the influence of the input data. This is why the authors of the method added one more step: scaling and shifting. They introduced the parameters γ and β, which are trained together with the neural network using gradient descent.

$$y_i = \gamma \hat{x}_i + \beta$$

Here $\hat{x}_i$ is the normalized value and $y_i$ is the output of the normalization layer.
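A minimal sketch of the complete forward pass with the scale and shift step is given below. The function name `batch_norm_forward` and all numeric values are hypothetical. In a real network γ and β would be updated by gradient descent; here they are passed in as fixed numbers.

```python
import math

def batch_norm_forward(batch, gamma, beta, eps=1e-5):
    """Normalize one feature over a batch, then scale by gamma and shift by beta."""
    m = len(batch)
    mu = sum(batch) / m
    var = sum((x - mu) ** 2 for x in batch) / m
    x_hat = [(x - mu) / math.sqrt(var + eps) for x in batch]
    return [gamma * v + beta for v in x_hat]

batch = [2.0, 4.0, 6.0, 8.0]  # batch mean is 5.0, batch variance is 5.0

# gamma=1, beta=0 leaves the normalized values unchanged.
plain = batch_norm_forward(batch, gamma=1.0, beta=0.0)

# Other values move the output to a different mean and spread.
scaled = batch_norm_forward(batch, gamma=2.0, beta=3.0)

# With gamma = sqrt(var + eps) and beta = mu the original values are restored.
restored = batch_norm_forward(batch, gamma=math.sqrt(5.0 + 1e-5), beta=5.0)

print([round(v, 4) for v in scaled])
print([round(v, 4) for v in restored])
```

The last call shows why the extra step matters: if the normalization turns out to be harmful for a particular neuron, suitable values of γ and β let the layer reproduce the original signal.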

Applying this method yields a dataset with a consistent distribution at each training step, which in practice makes the training of the neural network more stable and allows a higher learning rate. Overall, this improves the training quality while reducing the time required to train the neural network.

However, this comes at the cost of storing additional coefficients. Furthermore, calculating the mean and variance over a batch requires keeping in memory the historical values of each neuron for the entire batch. An alternative here could be the use of the Exponential Moving Average (EMA): calculating the EMA requires only its previous value and the current element of the sequence.
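The EMA idea can be sketched as follows: the running mean and variance are updated from each new value without storing the history. The function names, the smoothing factor `alpha`, and the initial values are assumptions chosen for illustration, and the result only approximates the exact batch statistics.

```python
def ema_update(previous, current, alpha):
    """One EMA step: needs only the previous estimate and the new element."""
    return (1.0 - alpha) * previous + alpha * current

def streaming_stats(stream, alpha, mean=0.0, var=1.0):
    """Track approximate mean and variance of a neuron output without history."""
    for x in stream:
        mean = ema_update(mean, x, alpha)
        # The squared deviation from the current mean estimate feeds the variance EMA.
        var = ema_update(var, (x - mean) ** 2, alpha)
    return mean, var

# Hypothetical sequence of outputs of one neuron.
running_mean, running_var = streaming_stats([2.0, 4.0, 6.0, 8.0], alpha=0.5)
print(running_mean, running_var)
```

Only two numbers per neuron are kept between updates, regardless of the batch size. A smaller `alpha` gives smoother estimates that react more slowly to changes in the data.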

Experiments conducted by the authors of the method demonstrate that the application of Batch Normalization also serves as a form of regularization. This allows for the elimination of other regularization methods, including the previously discussed Dropout. Moreover, there are more recent works showing that the combined use of Dropout and Batch Normalization has a negative effect on the training results of a neural network.

In modern neural network architectures, the proposed normalization algorithm can be found in various forms. The authors suggest applying Batch Normalization directly before the non-linearity (activation function). One of the variations of this algorithm is Layer Normalization, introduced by Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E. Hinton in July 2016 in their work "Layer Normalization".
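The difference between the two variants can be shown with a small sketch: Batch Normalization computes the statistics for each feature across the samples of a batch, while Layer Normalization computes them across the features of each individual sample. The function names and data are hypothetical, and the trainable scale and shift parameters are omitted for brevity.

```python
import math

def normalize(values, eps=1e-5):
    """Reduce a list of values to zero mean and (almost) unit variance."""
    n = len(values)
    mu = sum(values) / n
    var = sum((v - mu) ** 2 for v in values) / n
    return [(v - mu) / math.sqrt(var + eps) for v in values]

def batch_norm(batch):
    """Statistics per feature (column), computed across the samples."""
    columns = [normalize(list(col)) for col in zip(*batch)]
    return [list(row) for row in zip(*columns)]

def layer_norm(batch):
    """Statistics per sample (row), computed across its features."""
    return [normalize(row) for row in batch]

# Two hypothetical samples with three features each.
batch = [[1.0, 2.0, 3.0],
         [10.0, 20.0, 30.0]]

bn = batch_norm(batch)
ln = layer_norm(batch)
print([[round(v, 3) for v in row] for row in bn])
print([[round(v, 3) for v in row] for row in ln])
```

Since Layer Normalization uses only the values of the current sample, its result does not depend on the batch size or on the other samples in the batch.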