4.Backpropagation methods for LSTM block

The feed-forward pass is the standard operating mode of a neural network. However, before the model can be used in real-life operations, it has to be trained. Recurrent neural networks are trained with the familiar backpropagation method, with one addition. Unlike the neural layer types we've discussed before, recurrent layers use their own output as part of their input on subsequent iterations, and the weights applied to that recurrent input have to be learned as well. During training, we therefore unfold the recurrent layer chronologically into something resembling a multilayer perceptron. The only difference is that all unfolded layers share the same weight matrix. It was precisely for this purpose that, during the feed-forward pass, we kept a record of the state history of all objects. Now it's time to put it to good use.
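To make the idea of unfolding with a shared weight matrix more tangible, here is a minimal sketch in Python. It is not the LSTM block from this chapter: it assumes a toy recurrent cell with a single scalar state, and the names `forward`, `backward`, `w`, and `u` are invented for the illustration. The point is only that every unrolled time step adds its contribution to the same two weight gradients.

```python
import math

def forward(xs, w, u):
    """Run the toy recurrent cell over the sequence and keep every hidden state."""
    hs = [0.0]                                   # h_0: initial hidden state
    for x in xs:
        hs.append(math.tanh(w * x + u * hs[-1]))
    return hs

def backward(xs, hs, u, grad_out):
    """Unroll in reverse time order; every step adds to the same two gradients."""
    grad_w = grad_u = 0.0
    grad_h = grad_out                            # gradient arriving at the last state
    for t in range(len(xs), 0, -1):
        grad_pre = grad_h * (1.0 - hs[t] ** 2)   # tanh derivative taken from its output
        grad_w += grad_pre * xs[t - 1]           # shared weight: accumulate, do not overwrite
        grad_u += grad_pre * hs[t - 1]
        grad_h = grad_pre * u                    # pass the gradient one step back in time
    return grad_w, grad_u

xs = [0.5, -0.3, 0.8]                            # made-up input sequence
w, u = 0.7, -0.4                                 # made-up shared weights
hs = forward(xs, w, u)
grad_w, grad_u = backward(xs, hs, u, grad_out=1.0)
```

The stored list `hs` plays the same role as the state history kept during the feed-forward pass: without it, the derivative at each older time step could not be recomputed.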

We have three methods responsible for the backward pass in the base class of the neural layer:

- CalcHiddenGradient — a gradient distribution through a hidden layer.

- CalcDeltaWeights — a distribution of the gradient to the weighting matrix.

- UpdateWeights — the method of updating the weights.

class CNeuronLSTM : public CNeuronBase


We have to redefine them.

First, we will override the CalcHiddenGradient method, which distributes the gradient through the hidden layer. Here we need to unroll the entire historical chain and run the error gradient through all of its states. In addition, besides distributing gradients within the LSTM block, this method must also perform its second function: propagating the error gradient back to the previous layer.

The method receives a pointer to the object of the previous layer and returns a boolean result indicating the success of the operations.

At the beginning of the method, we check all the objects used: both the pointer to the previous layer object received in the parameters and the pointers to the internal objects.

bool CNeuronLSTM::CalcHiddenGradient(CNeuronBase *prevLayer)


Let's not forget that a backpropagation pass is only possible after a feed-forward pass, because the source data for the backpropagation pass is prepared during the feed-forward pass. Therefore, the next step is to check that the memory and hidden state stacks contain data. In addition, the number of elements in the stack determines how deep into the history the gradient can be propagated.

//--- check the presence of forward pass data


Continuing the preparatory work, let's create pointers to the result and gradient buffers of the internal layers. The need for pointers to gradient buffers is obvious: we will write error gradients to them as we propagate the gradients through the LSTM block. The need for result buffers is less obvious. As you know, every neuron has an activation function. Our inner layers are activated by the logistic function and by the hyperbolic tangent. The error gradient obtained at the output of a neural layer must be adjusted by the derivative of its activation function, and the derivative of both of these functions can be easily calculated from the result of the function itself. Thus, we need the appropriate result values to perform a correct backpropagation pass. For the previously considered neural layers, this issue did not arise because the correct data was written to the result buffer during the forward pass. In the case of a recurrent block, only the result of the last forward pass is stored in the result buffer. To work through the full depth of the history, we will have to overwrite the values of the result buffer with the values of the corresponding time step.

//--- make pointers to buffers of gradients and results of internal layers


CBufferType *ig_grad = m_cInputGate.GetGradients();


CBufferType *og_grad = m_cOutputGate.GetGradients();


CBufferType *nc_grad = m_cNewContent.GetGradients();

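The statement that both derivatives can be recalculated from the function result can be checked with a small Python sketch. The helper names and the `history` list are assumptions made for this illustration and stand in for one stacked buffer of gate results; they are not part of the library code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad_from_output(y):
    """Derivative of the logistic function expressed through its own result y."""
    return y * (1.0 - y)

def tanh_grad_from_output(y):
    """Derivative of the hyperbolic tangent expressed through its own result y."""
    return 1.0 - y * y

# Stand-in for a stack of gate results saved at three time steps.
history = [sigmoid(x) for x in (-1.0, 0.2, 1.5)]

# The same incoming gradient is scaled differently at every step,
# which is why the result buffer must hold the values of that step.
grad_at_output = 0.1
adjusted = [grad_at_output * sigmoid_grad_from_output(y) for y in history]
```

Because the derivative depends only on the stored result, restoring the historical result into the layer's buffer is enough to make the standard backward step work for an older time step.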

At the end of the preparatory process, we will store the size of the internal data flow buffers in a local variable.

uint out_total = m_cOutputs.Total();

Next, we create a loop through historical data. The main operations of our method will be performed in the body of this loop. At the beginning of the loop, we will load information from the corresponding historical step in our stacks. Note that all buffers are loaded for the analyzed chronological step, while the memory buffer is taken from the preceding step. I will explain the reasons for this below.

//--- loop through the accumulated history


Next, we have to distribute the error gradient received at the input of the LSTM block between the internal neural layers. This is where we build a new process. Following our class construction concept, we create a branching of the algorithm based on the execution device for mathematical operations.

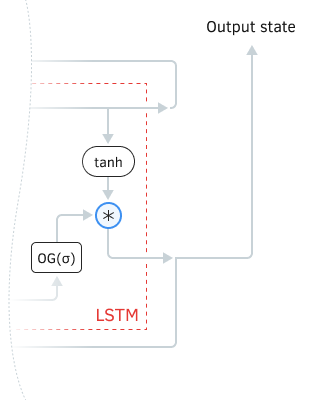

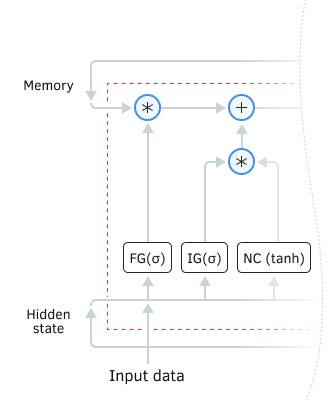

The error gradient distribution is performed in reverse order of the forward flow of information. Hence, we will construct its propagation algorithm from output to input. Let's look at the result node of our LSTM block. During the feed-forward pass, the updated memory state is activated by the hyperbolic tangent and multiplied by the output gate state. Thus, we have two components affecting the result of the block: the memory value and the gate.

LSTM block result node

In order to reduce the error at the block output, we need to adjust the values of both components. To do this, we distribute the overall error gradient through the multiplication function that combines the two information flows. That is, we multiply the known error gradient by the derivative of the function along each direction. We know from our high school math course that the derivative of the product of a constant and a variable, taken with respect to that variable, is the constant. We apply the following approach: when determining the influence of one of the factors, we assume that all other components have constant values. Hence, the derivative of the block result with respect to the output gate is the activated memory state, and the derivative with respect to the activated memory state is the output gate value.

Then we can easily distribute the error gradient in both directions: the gradient for the output gate is the error gradient of the block multiplied by the activated memory state, and the gradient for the memory flow is the error gradient of the block multiplied by the output gate value.
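In symbols, the result node and its derivatives can be written as shown below. The notation is assumed here for illustration and is not taken from the original figures: $h_t$ is the block result, $o_t$ the output gate value, $c_t$ the updated memory state, $\nabla$ an error gradient, and $\odot$ the element-wise product.

$$h_t = o_t \odot \tanh(c_t)$$

$$\frac{\partial h_t}{\partial o_t} = \tanh(c_t), \qquad \frac{\partial h_t}{\partial \tanh(c_t)} = o_t$$

$$\nabla_{o_t} = \nabla_{h_t} \odot \tanh(c_t), \qquad \nabla_{\tanh(c_t)} = \nabla_{h_t} \odot o_t$$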

We haven't created a separate buffer for the activated memory state. However, we can easily compute it, either by re-activating the corresponding memory state or by dividing the hidden state by the output gate value. I chose the second path, and the entire algorithm for distributing the error gradient at this node is expressed in the following code.

//--- branching of the algorithm across the computing device

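For readers who want to trace the arithmetic of this step, below is a rough Python sketch of the same idea: the activated memory is recovered by dividing the hidden state by the output gate, and the gradient is then split in two directions. The function name and the small constant `EPS`, which guards against division by a gate value of zero, are assumptions of this sketch and are not taken from the library code.

```python
import math

EPS = 1e-8  # assumed guard against dividing by a gate value of zero

def split_output_gradient(grad_h, hidden, out_gate):
    """Split the block's error gradient between the output gate and the memory flow."""
    grad_gate, grad_mem = [], []
    for g, h, o in zip(grad_h, hidden, out_gate):
        act_mem = h / (o + EPS)        # recover tanh(c) from h = o * tanh(c)
        grad_gate.append(g * act_mem)  # derivative of h with respect to o is tanh(c)
        grad_mem.append(g * o)         # derivative of h with respect to tanh(c) is o
    return grad_gate, grad_mem

# Made-up values for a block with two elements.
memory = [0.5, -1.2]
out_gate = [0.8, 0.3]
hidden = [o * math.tanh(c) for o, c in zip(out_gate, memory)]
grad_h = [1.0, -2.0]

grad_gate, grad_mem = split_output_gradient(grad_h, hidden, out_gate)
```

Dividing instead of re-activating saves a call to the hyperbolic tangent, at the cost of losing precision when the gate value is very close to zero.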

Before distributing the memory gradient to the rest of the internal layers, we must correct the resulting gradient by the derivative of the activation function.

//--- adjust the gradient to the derivative


We continue to distribute the error gradient between the internal layers. We need to distribute the error gradient from the memory flow to three more internal layers. Moving along the information flow inside the LSTM block in reverse, the first function we encounter is summation. The derivative of a sum with respect to each of its terms is 1. Therefore, we pass the error gradient in both directions unchanged.

Error gradient distribution inside the LSTM block

Next, in both directions, we encounter a product. The principles of propagating the gradient through the multiplication of two numbers have been explained in detail above, so there is no need to repeat them. I just want to remind you that, unlike all the other buffers loaded from the stacks, the memory buffer was taken from one step further back in history. I promised to clarify this point, and now is the most suitable time to do so. Take a look at the LSTM block diagram. To update the memory, we multiply the output of the Forget gate by the memory state transferred from the previous iteration. Hence, to determine the error gradient at the output of the Forget gate, we need to multiply the error gradient in the memory flow by the memory state of the previous iteration. It is the buffer of this state that we loaded at the start of the loop.

The MQL5 code of the described operations is presented below.

//--- InputGate gradient

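The three products described above can be sketched in Python as follows. The sketch assumes the usual memory update, in which the new memory is the Forget gate output times the previous memory plus the Input gate output times the new content. The function and variable names are illustrative, and the gradients it returns are gradients at the outputs of the internal layers, before any adjustment by the derivative of their activation functions.

```python
def distribute_memory_gradient(grad_mem, prev_memory, in_gate, new_content):
    """Pass the memory-flow gradient through the sum and the two products."""
    # Forget gate was multiplied by the memory of the previous step.
    grad_forget = [g * c for g, c in zip(grad_mem, prev_memory)]
    # Input gate was multiplied by the new content, and vice versa.
    grad_input = [g * n for g, n in zip(grad_mem, new_content)]
    grad_content = [g * i for g, i in zip(grad_mem, in_gate)]
    return grad_forget, grad_input, grad_content

# Made-up values for a block with two elements.
grad_mem = [0.2, -0.5]
prev_memory = [1.0, 0.4]      # memory state from one step further back
in_gate = [0.6, 0.9]
new_content = [-0.3, 0.7]

grad_forget, grad_input, grad_content = distribute_memory_gradient(
    grad_mem, prev_memory, in_gate, new_content)
```

Note that the summation node adds nothing to the code: the same `grad_mem` values enter both products unchanged.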

This completes the part of the algorithm that branches by computing device, and we merge the branches back together. We set a stub for the OpenCL branch and move on.

else


We have already discussed the need to use the historical states of the inner layer result buffers. Now we need to put this into practice and fill the result buffers with relevant historical data.

//--- copy the corresponding historical data to the buffers of the internal layers


Next, we need to propagate the gradient from the output to the input of the internal neural layers. This functionality is easily implemented by the base class method. However, please note the following. All four internal neural layers use the same input data, so their error gradients must also be collected in the same buffer. The neural layer base class methods we developed earlier overwrite the values in that buffer. Therefore, we need to organize the summation of the error gradients from each internal neural layer ourselves.

First, we'll run a gradient through the Forget gate. Recall that in order to transfer the source data to the internal neural layers, we created a base layer of source data, and after performing the forward pass operations, we stored a pointer to it in the source data stack. This type of object already contains buffers for both the data and the error gradients. So, now we just take this pointer and pass it in the parameters of the CNeuronBase::CalcHiddenGradient method. The base class method will then fill the error gradient buffer at the source data level for the Forget gate. But it's only one gate, and we need to gather information from all of them. To avoid losing the computed error gradient when calling the same method for the other internal layers, we copy the data into the m_cInputGradient buffer, which we created in advance for accumulating error gradients.

//--- propagate a gradient through the inner layers


We repeat the operations for the remaining internal layers. However, now we add the new values of the error gradient to the previously obtained values.

if(!m_cInputGate.CalcHiddenGradient(inputs))


Please note the following. While processing the first three internal layers, we move the values into the temporary buffer m_cInputGradient. While processing the last layer, however, we transfer the previously accumulated error gradient into the gradient buffer of the source data layer. Thus, the total error gradient is kept in the same source data layer object as the source data itself, and it is automatically saved in our stack, since the stack stores a pointer to that object. Recall what I wrote about objects and pointers to them.
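The accumulation pattern can be illustrated with a short Python sketch. It assumes plain fully connected layers, for which the gradient with respect to the input is the transposed weight matrix multiplied by the gradient at the layer output. The names `input_gradient` and `accumulate` are invented for the example, and only two layers are shown to keep the numbers easy to follow.

```python
def input_gradient(weights, grad_out):
    """Gradient with respect to the shared input for one layer (W transposed times grad)."""
    n_in = len(weights[0])
    return [sum(weights[j][k] * grad_out[j] for j in range(len(weights)))
            for k in range(n_in)]

def accumulate(layers):
    """Sum the input gradients of all layers that read the same input."""
    total = None
    for weights, grad_out in layers:
        part = input_gradient(weights, grad_out)   # each call yields a fresh result
        total = part if total is None else [a + b for a, b in zip(total, part)]
    return total

# Two made-up layers sharing a two-element input.
layers = [
    ([[1, 2], [3, 4]], [1, 0]),
    ([[0, 1], [1, 0]], [2, 3]),
]
total_grad = accumulate(layers)
```

The variable `total` plays the part of the temporary accumulation buffer: the result of the first layer is copied into it, and every later result is added to it.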

Here comes an interesting moment. Remember why we did all this? Propagating the error gradient across all elements of the neural network gives us a reference for determining the direction and extent of the adjustments to the weight matrix elements that reduce the overall error of our neural network. Consequently, as a result of the operations of this method, we must:

- Bring the error gradient to the previous layer, and

- Bring the error gradient to the weight matrices of the internal neural layers.

If we run the next iteration of the loop in this state, with new data for recalculating the error gradients of the internal layers, we will simply overwrite the values we have just calculated. However, we need to propagate the error gradients all the way to the weight matrices of the internal neural layers. Therefore, without waiting for a call from an external program, we call the CNeuronBase::CalcDeltaWeights method for all internal layers, which recalculates the gradient at the weight matrix level.

//--- project the gradient onto the weight matrices of the internal layers


We pass the error gradient to the previous neural layer only from the current state. Historical data remains only for the internal use of the LSTM block. Therefore, we check the iteration index and only then pass the error gradient to the buffer of the previous layer. Do not forget that our error gradient buffer at the source data level contains more data than the buffer of the previous layer, because it also contains the error gradient of the hidden state. Hence, we transfer only the necessary part of the data to the previous layer.

We transfer the remainder to the error gradient buffer of our LSTM block. Remember, at the beginning of the loop, it was from this buffer that we took the error gradient to propagate throughout the LSTM block? It's time to prepare the initial data for the next iteration of our loop through the chronological iterations of the feed-forward pass and error gradient propagation.

//--- if the gradient of the current state is calculated, then transfer it to the previous layer

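The splitting of the gradient buffer can be sketched in Python as follows. The sketch assumes that the source data buffer stores the previous layer's results first and the hidden state after them; this ordering, the function name, and the numbers are assumptions of the example. In the real loop, the gradient of each older step would be computed from `block_grad`, whereas here the per-step values are simply made up.

```python
def split_input_gradient(input_grad, prev_layer_size):
    """Split the gradient of the concatenated input into its two parts."""
    to_prev_layer = input_grad[:prev_layer_size]     # belongs to the previous layer
    to_hidden_state = input_grad[prev_layer_size:]   # belongs to the hidden state
    return to_prev_layer, to_hidden_state

# Hypothetical gradients of the concatenated input, newest step first.
step_gradients = [
    [0.1, 0.2, 0.3, -0.4, 0.5],
    [0.0, 0.1, -0.1, 0.2, -0.3],
]
prev_layer_size = 3

prev_layer_grad = None
block_grad = None
for step, input_grad in enumerate(step_gradients):
    head, tail = split_input_gradient(input_grad, prev_layer_size)
    if step == 0:
        prev_layer_grad = head   # only the current state reaches the previous layer
    block_grad = tail            # seeds the next, older iteration of the loop
```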

After the successful execution of all iterations, we exit the method with a positive result.

We have gone through two of the most complex and intricate methods of the recurrent LSTM block algorithm. The rest will be much easier. Take, for example, the CalcDeltaWeights method. Its function is to pass the error gradient to the level of the weight matrix. The LSTM block does not have a separate weight matrix of its own. All parameters are located within the nested neural layers, and we have already brought the error gradient to the level of their weight matrices in the previous method. Therefore, we override the method with an empty stub that returns a positive result.

virtual bool CalcDeltaWeights(CNeuronBase *prevLayer) { return true; }

Another backward pass method, UpdateWeights, updates the weight matrix. It is also inherited from the neural layer base class and overridden as needed. Unlike the previously discussed types of neural layers, the LSTM block does not have a single weight matrix. Instead, it uses internal neural layers with their own weight matrices. So we can't just use the method of the parent class and have to override it.

The CNeuronLSTM::UpdateWeights method receives from an external program the parameters required to execute the weight update algorithm and returns a boolean value indicating the result of the method operations.

Even though the method parameters do not include any object pointers, we still set up control structures at the beginning of the method. Here, we check the validity of pointers to internal neural layers and the value of the parameter indicating the depth of history analysis, which should be greater than 0.

bool CNeuronLSTM::UpdateWeights(int batch_size, TYPE learningRate, VECTOR &Beta,


Please note the batch_size parameter. This parameter indicates the number of backpropagation iterations between weight updates. It is tracked by an external program and passed to the method in the parameters. For an external program and for the neural network types considered earlier, the number of feed-forward and backpropagation passes is equal: each feed-forward pass is followed by a backpropagation pass, in which the deviation of the neural network result from the expected result is determined and the error gradient is propagated throughout the neural network. In the case of a recurrent block, the situation is slightly different: for each feed-forward pass, a recurrent block performs multiple backward pass iterations, their number determined by the depth of the analyzed history. Consequently, we must adjust the batch size received from the external program by the depth of the historical data.

int batch = batch_size * m_iDepth;
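A quick numeric illustration of this adjustment, written in Python: with a batch of 10 passes and a history depth of 5, the internal layers accumulate 50 gradient contributions between two weight updates. The sketch assumes that the accumulated gradient is averaged over the batch during the update; the function name and the numbers are made up for the example.

```python
def effective_batch(batch_size, depth):
    """Number of gradient contributions accumulated between two weight updates."""
    return batch_size * depth

batch = effective_batch(batch_size=10, depth=5)

# Assumed use of the batch: averaging an accumulated weight gradient.
accumulated_delta = 25.0
mean_delta = accumulated_delta / batch
```

Without the adjustment, the same accumulated value would be divided by 10 instead of 50, which would make the effective step five times larger than intended.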

We can then use the methods to update the weight matrix by passing them the correct data in the parameters.

//--- update the weight matrices of the internal layers


After successfully updating the weight matrices of all internal neural layers, we exit the method with a positive result.

This concludes our review of LSTM block backpropagation methods. We can move forward in building our system.