Forum on trading, automated trading systems and testing trading strategies

fxsaber, 2024.01.26 08:52

Thanks for the article! Added the algorithm to the general list.34: OPTIMIZATION_METHOD_AO_HS = 1 e-323 33: OPTIMIZATION_METHOD_AO_GSA = 0.11926002964619356 32: OPTIMIZATION_METHOD_AO_ABC = 0.4042085745674859 31: OPTIMIZATION_METHOD_AO_MA = 0.612338549995716 30: OPTIMIZATION_METHOD_AO_BFO = 0.6679763921514072 29: OPTIMIZATION_METHOD_AO_BFO_GA = 0.71308437578657 28: OPTIMIZATION_METHOD_AO_CSS = 0.7316626048819873 27: OPTIMIZATION_METHOD_AO_FSS = 0.7355579934372427 26: OPTIMIZATION_METHOD_AO_GWO = 0.7390576242470235 25: OPTIMIZATION_METHOD_AO_RND = 0.8155449913938271 24: OPTIMIZATION_METHOD_AO_PSO = 0.8222303819859975 23: OPTIMIZATION_METHOD_AO_SC = 0.8340939685401099 22: OPTIMIZATION_METHOD_AO_BA = 0.845763320077643 21: OPTIMIZATION_METHOD_AO_Micro_AIS = 0.8528065344750899 20: OPTIMIZATION_METHOD_AO_SA = 0.8589854552563216 19: OPTIMIZATION_METHOD_AO_SFL = 0.8597046576708655 18: OPTIMIZATION_METHOD_AO_IWDm = 0.862259472275573 17: OPTIMIZATION_METHOD_AO_EM = 0.8779833807818905 16: OPTIMIZATION_METHOD_AO_SDS = 0.9027267066161594 15: OPTIMIZATION_METHOD_AO_NMm = 0.9076903894257041 14: OPTIMIZATION_METHOD_AO_FAm = 0.9111364322206529 13: OPTIMIZATION_METHOD_AO_ESG = 0.9128694208149278 12: OPTIMIZATION_METHOD_AO_SDOm = 0.9182612507465655 11: OPTIMIZATION_METHOD_AO_COAm = 0.9198698363722636 10: OPTIMIZATION_METHOD_AO_IWO = 0.923914294524697 09: OPTIMIZATION_METHOD_AO_SSG = 0.9304990658351672 08: OPTIMIZATION_METHOD_AO_BGA = 0.9389284935189659 07: OPTIMIZATION_METHOD_AO_ACOm = 0.944545536542497 06: OPTIMIZATION_METHOD_AO_DE = 0.9482478998933197 05: OPTIMIZATION_METHOD_AO_POES = 0.9528516011673952 04: OPTIMIZATION_METHOD_AO_SIA = 0.9540996483364099 03: OPTIMIZATION_METHOD_AO_MEC = 0.9574730447145243 02: OPTIMIZATION_METHOD_AO_SDSm = 0.9648638811101882 01: OPTIMIZATION_METHOD_PSO = 0.9653160312454183 00: OPTIMIZATION_METHOD_AO_P_O_ES = 0.9654152361899765

Forum on trading, automated trading systems and testing trading strategies

fxsaber, 2024.01.21 01:38 AM

According to this methodology, testing with the Hilly function.

34: OPTIMIZATION_METHOD_AO_ABC = 0.42453133581346014 33: OPTIMIZATION_METHOD_AO_IWDm = 0.48360991828383987 32: OPTIMIZATION_METHOD_AO_SIA = 0.49114081735004017 31: OPTIMIZATION_METHOD_AO_EM = 0.49479704987697276 30: OPTIMIZATION_METHOD_AO_RND = 0.5085913649249508 29: OPTIMIZATION_METHOD_AO_MA = 0.5129110822292692 28: OPTIMIZATION_METHOD_AO_HS = 0.5129110822292692 27: OPTIMIZATION_METHOD_AO_CSS = 0.5138134842496185 26: OPTIMIZATION_METHOD_AO_SSG = 0.518468314742929 25: OPTIMIZATION_METHOD_AO_SC = 0.5243709181146918 24: OPTIMIZATION_METHOD_AO_SA = 0.532630712905892 23: OPTIMIZATION_METHOD_AO_FSS = 0.5405996550405998 22: OPTIMIZATION_METHOD_AO_PSO = 0.5430691869913079 21: OPTIMIZATION_METHOD_AO_FAm = 0.5629971666466362 20: OPTIMIZATION_METHOD_AO_BA = 0.5653828707497576 19: OPTIMIZATION_METHOD_AO_BFO = 0.5708620661833331 18: OPTIMIZATION_METHOD_AO_IWO = 0.5736967768562664 17: OPTIMIZATION_METHOD_AO_NMm = 0.5818790406200212 16: OPTIMIZATION_METHOD_AO_ESG = 0.5945790493029925 15: OPTIMIZATION_METHOD_AO_GWO = 0.6234871646160723 14: OPTIMIZATION_METHOD_PSO = 0.6256882439878475 13: OPTIMIZATION_METHOD_AO_GSA = 0.6735183680285166 12: OPTIMIZATION_METHOD_AO_SFL = 0.6885524892005978 11: OPTIMIZATION_METHOD_AO_DE = 0.7092034045816206 10: OPTIMIZATION_METHOD_AO_COAm = 0.7263185318109061 09: OPTIMIZATION_METHOD_AO_SDS = 0.7686064552778226 08: OPTIMIZATION_METHOD_AO_Micro_AIS = 0.7722431882203732 07: OPTIMIZATION_METHOD_AO_SDOm = 0.7808430850753312 06: OPTIMIZATION_METHOD_AO_MEC = 0.7816647439743983 05: OPTIMIZATION_METHOD_AO_ACOm = 0.7830252357918316 04: OPTIMIZATION_METHOD_AO_POES = 0.8453008986622238 03: OPTIMIZATION_METHOD_AO_P_O_ES = 0.8523920887258357 02: OPTIMIZATION_METHOD_AO_SDSm = 0.9046058644799349 01: OPTIMIZATION_METHOD_AO_BGA = 0.9856063511804057 00: OPTIMIZATION_METHOD_AO_BFO_GA = 0.9880292622094636

Because the largest result is not guaranteed. I will tell you more, no result is guaranteed in stochastic search.

You can only estimate the average result for some number of tests, in the articles we take 10 tests, it is enough for a good evaluation of comparison of algos among themselves. It is possible to increase the number of tests even more, of course, for example 100 or more, but it ceases to make sense because of the rapid decrease of measurement accuracy. In short, 10 is the best, earlier I used 5 tests - it was not enough.

And, some algorithms show a very large scatter in convergence, this is a very important property of optimisation algorithms - repeatability of results, which characterises the stability of the probabilistic model of the algorithm (unless, of course, we are talking about deterministic search strategies, the results of which will be invariably the same, but, as a rule, such algorithms have low convergence).

In general, it is necessary to do at least 10 tests to be able to adequately compare algorithms that have stochastic nature of search strategy in their basis.

Very roughly we can give an analogy: which of the strategies of poking a spear into a haystack is more effective to find an apple among a pile of straw? The comparison can only be made in several tests, otherwise there is a non-zero probability of hitting the apple with the spear the first time if you poke strictly from the top of the stack in the centre, and it is wrong to think that this is a natural result of such a strategy.

Besides, the strategies are given in absolutely pure form, it can be said to be an elixir of strategies, unclouded by the application of duplicates screening and other techniques of search acceleration for a limited number of FF runs. Each new duplicate set wastes a run, and algorithms in this pure form are very easy to compare.

When the search space is small, duplicate exclusion can make a very big difference, and even very weak algos can show good results. But as the search space increases, duplicate exclusion becomes meaningless faster than the search space increases, and the more the differences in the abilities of the search strategies become apparent.

I may have conveyed my point poorly with this post, some things are very hard to explain, unfortunately.

I see it logical to have an input parameter "number of repetitions" to search for the global maximum.

Forum on trading, automated trading systems and testing trading strategies

fxsaber, 2024.01.21 01:38 AM

According to this methodology, testing by the Hilly function.

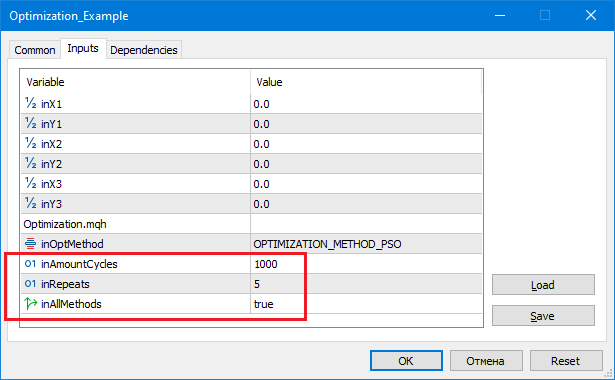

34: OPTIMIZATION_METHOD_AO_HS = -1.7976931348623157 e+308 33: OPTIMIZATION_METHOD_AO_CSS = 0.49720358822818334 32: OPTIMIZATION_METHOD_AO_FSS = 0.5126268634117646 31: OPTIMIZATION_METHOD_AO_MA = 0.5456638212207142 30: OPTIMIZATION_METHOD_AO_SC = 0.5493573778417595 29: OPTIMIZATION_METHOD_AO_SFL = 0.5586288936731915 28: OPTIMIZATION_METHOD_AO_EM = 0.5622069376759021 27: OPTIMIZATION_METHOD_AO_BFO = 0.572272929264936 26: OPTIMIZATION_METHOD_AO_GWO = 0.576823172558009 25: OPTIMIZATION_METHOD_AO_BA = 0.5780620208551676 24: OPTIMIZATION_METHOD_AO_COAm = 0.6122501636921197 23: OPTIMIZATION_METHOD_AO_FAm = 0.6140389813209256 22: OPTIMIZATION_METHOD_AO_IWO = 0.668152749061326 21: OPTIMIZATION_METHOD_AO_RND = 0.6708834560036839 20: OPTIMIZATION_METHOD_AO_SDSm = 0.6949312990837662 19: OPTIMIZATION_METHOD_AO_ESG = 0.6998001260367949 18: OPTIMIZATION_METHOD_AO_DE = 0.7011196053256343 17: OPTIMIZATION_METHOD_AO_IWDm = 0.7021399692041209 16: OPTIMIZATION_METHOD_AO_GSA = 0.7220579685405103 15: OPTIMIZATION_METHOD_AO_PSO = 0.749441828773945 14: OPTIMIZATION_METHOD_AO_SA = 0.7682447940796119 13: OPTIMIZATION_METHOD_AO_MEC = 0.7861990306904714 12: OPTIMIZATION_METHOD_AO_ABC = 0.7907454297118246 11: OPTIMIZATION_METHOD_AO_SDS = 0.8196051951016118 10: OPTIMIZATION_METHOD_AO_SIA = 0.8221393904152611 09: OPTIMIZATION_METHOD_AO_SDOm = 0.8473736964247897 08: OPTIMIZATION_METHOD_PSO = 0.8554905139189528 07: OPTIMIZATION_METHOD_AO_BFO_GA = 0.9302287492114422 06: OPTIMIZATION_METHOD_AO_SSG = 0.9508929176886642 05: OPTIMIZATION_METHOD_AO_Micro_AIS = 0.9744230924005981 04: OPTIMIZATION_METHOD_AO_BGA = 0.9758026556865227 03: OPTIMIZATION_METHOD_AO_ACOm = 0.9842635986445009 02: OPTIMIZATION_METHOD_AO_P_O_ES = 0.9862393247408759 01: OPTIMIZATION_METHOD_AO_POES = 0.9939584765415376 00: OPTIMIZATION_METHOD_AO_NMm = 0.9992316949848764 inAmountCycles = 1000, inRepeats = 5

Repeats - the number of independent calls to the algorithm.

AmountCycles - the number of FF calls in each attempt (see Repeats).

For each algorithm, the best result is output (see Repeats).

MathSrand ((int)GetMicrosecondCount ()); // reset of the generator

I'd do it this way.

Sleep(1); // random GetMicrosecondCount (). MathSrand ((int)TimeLocal() + (int)GetMicrosecondCount ()); // reset of the generator

Otherwise it repeats every time the script is run.

I think it is logical to have an input parameter "number of repetitions" to search for the global maximum. For example, we optimise a TS in the standard Tester. Sometimes it is logical to run the optimiser several times in a row to reduce the probability of getting stuck in local extrema. Yes, the speed of calculations will drop by the corresponding number of times, but the probability of hitting a global peak will be higher.

Repeats - number of independent calls of the algorithm.

AmountCycles - number of FF calls in each attempt (see Repeats).

For each algorithm the best result is displayed (see Repeats).

Yes, to increase the chance of finding a global, all other things being equal - you just need to increase the number of tests and use the best solution found. Even the RND algorithm has a non-zero chance to find what you need with this approach.

But, as I described above, only the average result can allow comparing algorithms with each other.

- Free trading apps

- Over 8,000 signals for copying

- Economic news for exploring financial markets

You agree to website policy and terms of use

Check out the new article: Population optimization algorithms: Evolution of Social Groups (ESG).

We will consider the principle of constructing multi-population algorithms. As an example of this type of algorithm, we will have a look at the new custom algorithm - Evolution of Social Groups (ESG). We will analyze the basic concepts, population interaction mechanisms and advantages of this algorithm, as well as examine its performance in optimization problems.

In the field of optimization, there is a wide range of population algorithms designed to find optimal solutions in various problems. However, despite their importance, multi-population and multi-swarm algorithms have not previously been sufficiently covered in my articles. In this regard, I feel the need for a more detailed consideration of this fascinating and promising topic.

Multi-population algorithms are based on the idea of using multiple independent populations to solve optimization problems. Populations work logically in parallel and can exchange information about optimal solutions, which makes it possible to simultaneously explore different regions of the parameter space and find different optima. On the other hand, multi-swarm algorithms use social groups (swarms) of many interacting particles that can also cooperate with each other and exchange information to achieve optimal solutions.

In this article, we will consider the multi-population ESG algorithm that I created specifically for this article. We will look at the basic principles of such algorithms. In addition, we will consider the results of comparative studies that will allow us to evaluate the effectiveness of these algorithms in comparison with mono-population optimization methods.

Author: Andrey Dik