The article Neural networks are simple (Part 36): Relational Reinforcement Learning:

Author: Dmitriy Gizlyk

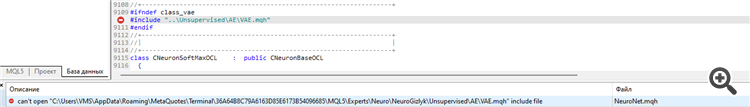

CS 0 15:22:10.739 Core 01 2023.01.01 00:00:00 EURUSD_PERIOD_H1_RRL-learning.nnw
CS 0 15:22:10.739 Core 01 2023.01.01 00:00:00 OpenCL not found. Error code=5103
CS 2 15:22:10.739 Core 01 2023.01.01 00:00:00 invalid pointer access in 'NeuroNet.mqh' (2876,11)
CS 2 15:22:10.739 Core 01 OnInit critical error
CS 2 15:22:10.739 Core 01 tester stopped because OnInit failed
CS 2 15:22:10.740 Core 01 disconnected
CS 0 15:22:10.740 Core 01 connection closed

I keep getting the same error when I try to train. I also tried creating the neural network with the NetCreator, but the same error occurred.

What could be causing the problem?

| Constant | Code | Description |
|---|---|---|
| ERR_OPENCL_CONTEXT_CREATE | 5103 | Error creating the OpenCL context |

Before using this library, you must install OpenCL on your PC.

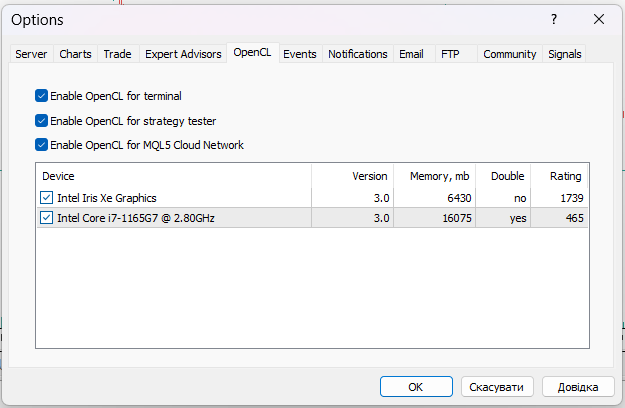

Yes, it's enabled, but I was able to identify the problem. My strategy tester uses the CPU instead of the GPU, and I think my processor doesn't support OpenCL. How do I make the tester use the GPU instead of the CPU?

CS 0 20:01:11.215 Core 01 AMD EPYC 7V13 64-Core, 225278 MB

Hey Dmitry!

Wonderful work.

In this part, training the neural network takes a hell of a lot more time than in the previous parts. Do you have the same experience?

Regards,

Tomasz

New article Neural networks made easy (Part 36): Relational Reinforcement Learning has been published:

In the reinforcement learning models discussed in the previous articles, we used various convolutional network variants that can identify objects in the original data. The main advantage of convolutional networks is their ability to identify objects regardless of their location. At the same time, convolutional networks do not always perform well when objects are deformed or the data is noisy. These are the issues that the relational model can address.
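The two properties mentioned above can be illustrated with a minimal sketch. It is written in Python purely for illustration (the article's own library is MQL5) and assumes a single hand-set kernel `[-1, 2, -1]` acting as a hypothetical "one-bar peak" detector. Shifting the peak shifts the response by the same amount, so the maximum over positions is unchanged; stretching the peak over two bars halves the best response.

```python
def conv1d(signal, kernel):
    """'Valid' 1-D convolution (cross-correlation, as used in most NN layers)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

# Hypothetical hand-set kernel that responds to a sharp one-bar peak.
peak_kernel = [-1.0, 2.0, -1.0]

peak_early = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
peak_late = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
# The same "peak" object, but deformed: the top lasts two bars instead of one.
peak_stretched = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]

resp_early = conv1d(peak_early, peak_kernel)
resp_late = conv1d(peak_late, peak_kernel)
resp_stretched = conv1d(peak_stretched, peak_kernel)

# Location: the response moves with the object, and its maximum is unchanged.
print(max(resp_early), resp_early.index(max(resp_early)))  # 2.0 1
print(max(resp_late), resp_late.index(max(resp_late)))     # 2.0 5
# Deformation: the stretched peak reaches only half of that response.
print(max(resp_stretched))                                 # 1.0
```

In a real network the kernels are learned rather than set by hand; the sketch only isolates the two effects the paragraph describes.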

The main advantage of relational models is their ability to build dependencies between objects, which makes it possible to structure the source data. A relational model can be represented as a graph in which objects and events are nodes, while edges show the dependencies between them.

Graphs let us visually build the structure of dependencies between objects. For example, to describe a channel breakout pattern, we can draw a graph with the channel formation at the top. The channel formation description itself can also be represented as a graph. Next, we create two channel breakout nodes, one for the upper border and one for the lower border. Both nodes have the same link to the preceding channel formation node, but they are not connected to each other. To avoid entering a position on a false breakout, we can wait for the price to roll back to the channel border. This adds two more nodes, for the rollback to the upper and to the lower border of the channel. Each is connected to the node of the corresponding border breakout, but again, they are not connected to each other.
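The channel breakout example can be written down as a small directed graph. The Python sketch below is only an illustration: the node names are hypothetical labels for the five events described above, each edge points from an event to the events that depend on it, and the nested channel formation subgraph is collapsed into a single node for brevity.

```python
# Hypothetical node labels for the channel breakout pattern.
# Each key lists the nodes that directly depend on it (directed edges).
pattern_graph = {
    "channel_formation": ["breakout_upper", "breakout_lower"],
    "breakout_upper": ["rollback_upper"],
    "breakout_lower": ["rollback_lower"],
    "rollback_upper": [],
    "rollback_lower": [],
}

def parents(graph, node):
    """Nodes that have an edge pointing to `node`."""
    return [n for n, children in graph.items() if node in children]

def linked(graph, a, b):
    """True if a and b share a direct edge in either direction."""
    return b in graph[a] or a in graph[b]

print(parents(pattern_graph, "breakout_upper"))                   # ['channel_formation']
print(parents(pattern_graph, "rollback_upper"))                   # ['breakout_upper']
print(linked(pattern_graph, "breakout_upper", "breakout_lower"))  # False
print(linked(pattern_graph, "rollback_upper", "rollback_lower"))  # False
```

Under this encoding, a rollback node only becomes relevant once its parent breakout node has occurred, which mirrors the false-breakout filter described in the text.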

The described structure fits neatly into a graph, which gives a clear structure to both the data and the sequence of events. We considered something similar when constructing association rules. However, this can hardly be achieved with the convolutional networks we used earlier.

Convolutional networks are used to identify objects in data. We can train such a model to detect movement reversal points or small trends. In practice, however, channel formation can stretch over time, with trends of varying intensity inside the channel, and convolutional models may not cope well with such distortions. In addition, neither convolutional nor fully connected neural layers can distinguish two different patterns that consist of the same objects arranged in a different sequence.

It should also be noted that convolutional neural networks can only detect objects; they cannot build dependencies between them. Therefore, we need an algorithm that can learn such dependencies. Now, let us get back to attention models. Attention models make it possible to focus on individual objects, singling them out from the general data array.
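For readers new to attention, the following is a minimal pure-Python sketch of standard scaled dot-product self-attention, softmax(Q·K^T / sqrt(d))·V. It is not the author's MQL5/OpenCL implementation. The three "objects" and the identity projection matrices are assumptions made for illustration; in a trained model W_Q, W_K and W_V are learned. The returned weight matrix is the point of interest: entry [i][j] shows how strongly object i attends to object j, i.e. a pairwise dependency between objects.

```python
import math

def matmul(a, b):
    """Plain nested-list matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over the rows (objects) of x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return weights, matmul(weights, v)

# Three hypothetical objects with two features each; objects 0 and 2 are alike.
objects = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]

weights, output = self_attention(objects, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Here object 0 splits most of its attention equally between itself and the similar object 2, while object 1 attends mostly to itself. Unlike a convolution kernel, these weights are computed from the data, so they describe relations between objects rather than a fixed local template.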
