MQL's OOP notes: Optimystic library for On The Fly Optimization of EA meets fast particle swarm algorithm

The next step is to embed the particle swarm into the library. Let's go back to Optimystic.mqh and add an enumeration of the available optimization methods.

enum OPTIMIZATION_METHOD

{

METHOD_BRUTE_FORCE, // brute force

METHOD_PARTICLE_SWARM // particle swarm

};

We also need a method in the interface to select one of them.

class Optimystic

{

public:

virtual void setMethod(OPTIMIZATION_METHOD method) = 0;

That's it! We're done with the header file. Let's move to the implementation part in Optimystic.mq4 and add new declarations to the class.

class OptimysticImplementation: public Optimystic

{

private:

OPTIMIZATION_METHOD method;

Swarm *swarm;

public:

virtual void setMethod(OPTIMIZATION_METHOD m)

{

method = m;

}

The class should choose the optimization approach according to the selected method, so onTick must be updated.

{

static datetime lastBar;

if(enabled)

{

if(lastBar != Time[0] && TimeCurrent() > lastOptimization + PeriodSeconds() * resetInBars)

{

callback.setContext(context); // virtual trading

if(method == METHOD_PARTICLE_SWARM)

{

optimizeByPSO();

}

else

{

optimize();

}

}

}

callback.setContext(NULL); // real trading

callback.trade(0, Ask, Bid);

lastBar = Time[0];

}

Here we added a new procedure, optimizeByPSO. Let's define it using the existing optimize method as a draft.

bool optimizeByPSO()

{

if(!callback.onBeforeOptimization()) return false;

Print("Starting on-the-fly optimization at ", TimeToString(Time[rangeInBars]));

uint trap = GetTickCount();

We record the start time so that we can compare the performance of the new and old algorithms later.

if(spread == 0) spread = (int)MarketInfo(Symbol(), MODE_SPREAD);

best = 0;

int count = resetParameters();

We modified the function resetParameters to return the number of active parameters (as you remember, we can disable specific parameters by calling setEnabled). Normally all parameters are enabled, so count will be equal to the size of the parameters array.

We need this number because, on the next lines, we pass the working ranges of the parameter values to the swarm, and only enabled parameters take part in the optimization.

ArrayResize(max, count);

ArrayResize(min, count);

ArrayResize(step, count);

for(int i = 0, j = 0; i < n; i++)

{

if(parameters[i].getEnabled())

{

max[j] = parameters[i].getMax();

min[j] = parameters[i].getMin();

step[j] = parameters[i].getStep();

j++;

}

}

swarm = new Swarm(count, max, min, step);
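The packing of enabled parameters into dense arrays, and the reverse step of writing a solution back, can be tried outside the terminal. The following is a minimal C++ sketch rather than the library's code: `Param`, `Ranges`, `packRanges` and `unpackValues` are hypothetical names introduced only for illustration, and the MQL version uses `ArrayResize` with a second index `j` instead of `push_back`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the library's parameter objects;
// only the fields needed for this illustration are modelled.
struct Param
{
  double min, max, step;
  double value;
  bool enabled;
};

// Dense arrays handed to the optimizer: one slot per enabled parameter.
struct Ranges
{
  std::vector<double> min, max, step;
};

// Copy the ranges of enabled parameters into dense arrays,
// skipping disabled parameters entirely.
Ranges packRanges(const std::vector<Param> &params)
{
  Ranges r;
  for(std::size_t i = 0; i < params.size(); i++)
  {
    if(params[i].enabled)
    {
      r.min.push_back(params[i].min);
      r.max.push_back(params[i].max);
      r.step.push_back(params[i].step);
    }
  }
  return r;
}

// Reverse mapping: write a dense solution vector back into the
// enabled parameters, leaving disabled ones untouched.
// Index i walks all parameters, index j only the enabled ones.
void unpackValues(std::vector<Param> &params, const std::vector<double> &solution)
{
  std::size_t j = 0;
  for(std::size_t i = 0; i < params.size(); i++)
  {
    if(params[i].enabled)
    {
      assert(j < solution.size()); // solution must cover every enabled parameter
      params[i].value = solution[j++];
    }
  }
}
```

With three parameters of which the middle one is disabled, `packRanges` yields arrays of size two, and `unpackValues` with a two-element solution updates only the first and third parameter.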

And here the problems begin. The next thing we should write is a call to swarm.optimize, but that call needs a functor as its first argument, and we do not have one yet.

The first thought you may have is to derive the class OptimysticImplementation from the swarm's Functor interface, but this is not possible: the class is already derived from Optimystic, and MQL does not allow multiple inheritance. This is a classic dilemma in many OOP languages, because multiple inheritance is a double-edged sword.

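When multiple inheritance is not supported, one can apply composition instead. This is a widely used technique: a small helper class implements the required interface and forwards the calls to the main class.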

class SwarmFunctor: public Functor

{

private:

OptimysticImplementation *parent;

public:

SwarmFunctor(OptimysticImplementation *_parent)

{

parent = _parent;

}

virtual double calculate(const double &vector[])

{

return parent.calculatePS(vector);

}

};

This class does a minimal job. Its only purpose is to accept calls from the swarm and redirect them to OptimysticImplementation, where we'll need to add a new function, calculatePS. It is done this way because only OptimysticImplementation holds all the information required to test the EA. We'll consider the function calculatePS in a few minutes, but first let's continue the composition.

class OptimysticImplementation: public Optimystic

{

private:

SwarmFunctor *functor;
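Since MQL's class model closely follows C++, the same composition can be sketched as a standalone C++ program. This is only an illustration under stated assumptions: `LibraryBase`, `Engine`, `EngineFunctor` and `bestOf` are hypothetical stand-ins for Optimystic, OptimysticImplementation, SwarmFunctor and the swarm, and the toy optimizer simply tries a list of candidates instead of running a particle swarm.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Interface the optimizer calls; modelled on the swarm's Functor.
class Functor
{
  public:
    virtual ~Functor() {}
    virtual double calculate(const std::vector<double> &vec) = 0;
};

// Hypothetical stand-in for the base the main class already derives from.
class LibraryBase
{
  public:
    virtual ~LibraryBase() {}
};

class Engine; // forward declaration: the adapter only stores a pointer

// Adapter: implements Functor and forwards every call to its owner.
class EngineFunctor : public Functor
{
  private:
    Engine *parent;
  public:
    explicit EngineFunctor(Engine *p) : parent(p) { assert(p != nullptr); }
    double calculate(const std::vector<double> &vec) override;
};

// Main class: already has a base, so it owns a functor instead of being one.
class Engine : public LibraryBase
{
  public:
    int calls = 0; // how many times the optimizer asked for an evaluation

    // Toy objective with its maximum at x = 3.
    double evaluate(const std::vector<double> &vec)
    {
      assert(!vec.empty());
      calls++;
      return -(vec[0] - 3.0) * (vec[0] - 3.0);
    }

    double optimize(const std::vector<double> &candidates);
};

double EngineFunctor::calculate(const std::vector<double> &vec)
{
  return parent->evaluate(vec);
}

// Toy optimizer: knows nothing about Engine, only about Functor.
// Returns the candidate with the highest score.
double bestOf(Functor &f, const std::vector<double> &candidates)
{
  assert(!candidates.empty());
  double bestX = candidates[0];
  double bestScore = f.calculate(std::vector<double>(1, candidates[0]));
  for(std::size_t i = 1; i < candidates.size(); i++)
  {
    const double score = f.calculate(std::vector<double>(1, candidates[i]));
    if(score > bestScore) { bestScore = score; bestX = candidates[i]; }
  }
  return bestX;
}

double Engine::optimize(const std::vector<double> &candidates)
{
  EngineFunctor functor(this); // back reference to the owner
  return bestOf(functor, candidates);
}
```

The optimizer depends only on `Functor`, the main class keeps its single base, and the adapter is the one place where the two meet. In MQL the member call is written `parent.evaluate(vec)` rather than `parent->evaluate(vec)`, and the functor is created with `new` and released with `delete`.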

Now we can return to the method optimizeByPSO, which we left halfway. Let's create an instance of SwarmFunctor and pass it to the swarm.

functor = new SwarmFunctor(GetPointer(this));

swarm.optimize(functor, 100); // TODO: 100 cycles are hardcoded - this should be adjusted

double result[];

bool ok = swarm.getSolution(result);

if(ok)

{

for(int i = 0, j = 0; i < n; i++)

{

if(parameters[i].getEnabled())

{

parameters[i].setValue(result[j]);

parameters[i].markAsBest();

j++;

}

}

}

delete functor;

delete swarm;

isOptimizationOn = false;

callback.onAfterOptimization();

Print("Done in ", DoubleToString((GetTickCount() - trap) * 1.0 / 1000, 3), " seconds");

lastOptimization = TimeCurrent();

return ok;

}

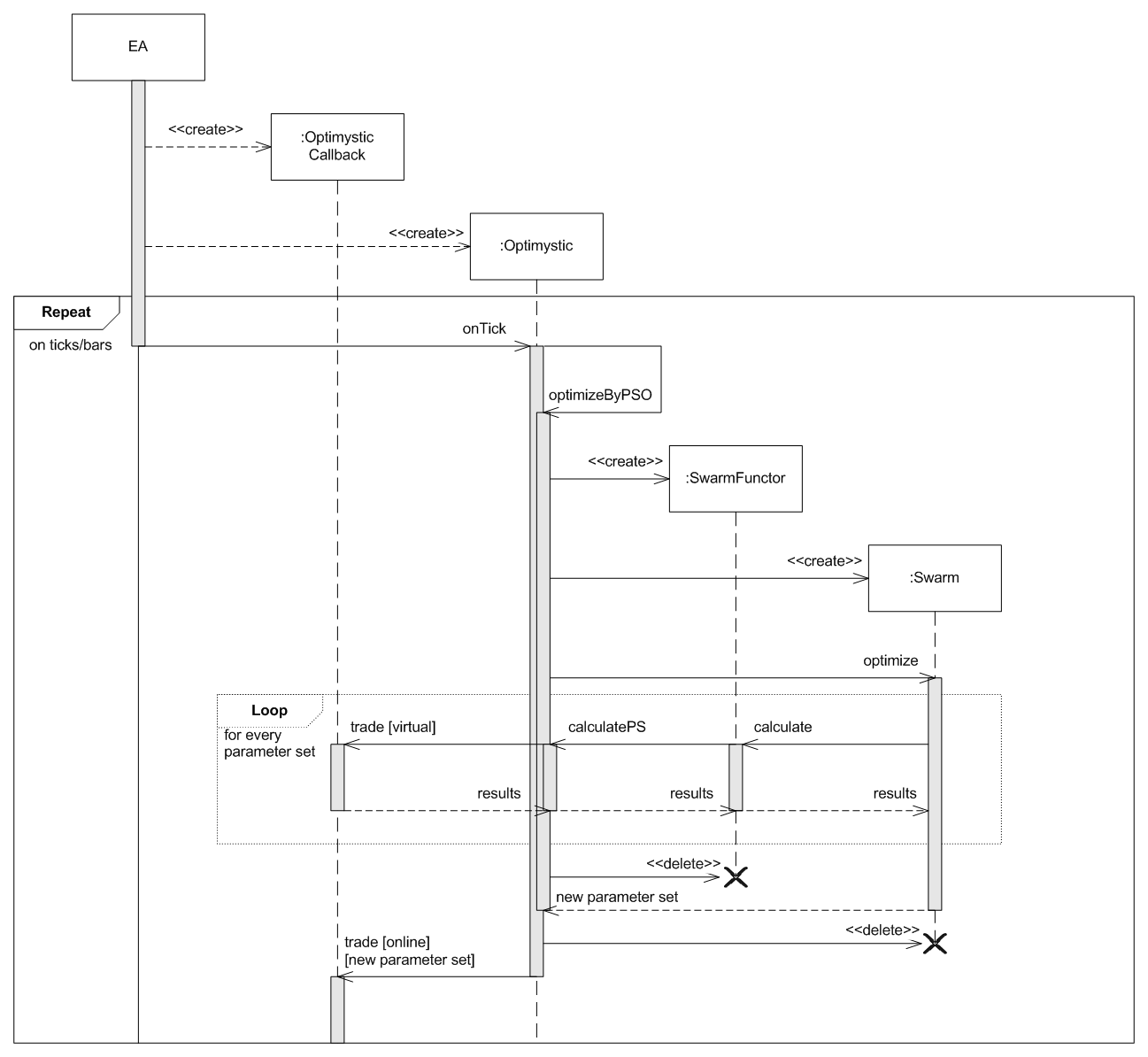

When the library invokes optimizeByPSO from onTick, we create the Swarm object and SwarmFunctor object taking a back reference to its owner (OptimysticImplementation object) and execute Swarm's optimize passing SwarmFunctor as a parameter. The swarm will call SwarmFunctor's calculate with current parameters, and the latter will in turn call our OptimysticImplementation object to test EA on given parameters. One pictrure is worth a thousand words.

So we need to add calculatePS to the implementation. Most of its lines are taken from the method optimize, which performs the straightforward brute-force optimization.

double calculatePS(const double &vector[])

{

int n = parameters.size();

for(int i = 0, j = 0; i < n; i++)

{

if(parameters[i].getEnabled())

{

parameters[i].setValue(vector[j++]);

}

}

if(!callback.onApplyParameters()) return 0;

context.initialize(deposit, spread, commission);

// build balance and equity curves on the fly

for(int i = rangeInBars; i >= forwardBars; i--)

{

context.setBar(i);

callback.trade(i, iOpen(Symbol(), Period(), i) + spread * Point, iOpen(Symbol(), Period(), i));

context.activatePendingOrdersAndStops();

context.calculateEquity();

}

context.closeAll();

// calculate estimators

context.calculateStats();

double gain;

if(estimator == ESTIMATOR_CUSTOM)

{

gain = callback.onCustomEstimate();

if(gain == EMPTY_VALUE)

{

return 0;

}

}

else

{

gain = context.getPerformance(estimator);

}

// if estimator is better than previous, then save current parameters

if(gain > best && context.getPerformance(ESTIMATOR_TRADES_COUNT) > 0)

{

best = gain;

bestStats = context.getStatistics();

}

callback.onReadyParameters();

return gain;

}

Now we're finally done with embedding the particle swarm into Optimystic. The complete source code of the updated library is provided below.

You may see that instead of the full set of 1000 passes, the particle swarm invoked the EA only 139 times. The resulting parameter sets of the two methods may differ, of course, but this is also the case when you use the built-in genetic optimization.
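To put the brute-force figure in perspective: the number of passes of a full grid search is the product of the number of values each parameter can take. The C++ sketch below shows this arithmetic. The article does not list the ranges behind the 1000 passes, so three parameters with ten values each is merely one hypothetical combination that gives that total; `Range`, `valuesIn` and `gridSize` are illustrative names, not part of the library.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical description of one optimized parameter.
struct Range
{
  double min, max, step;
};

// Number of distinct values in [min, max] visited with the given step,
// both ends included. A small epsilon absorbs floating-point error
// in the division.
long valuesIn(const Range &r)
{
  assert(r.step > 0 && r.max >= r.min);
  return static_cast<long>(std::floor((r.max - r.min) / r.step + 1e-9)) + 1;
}

// Total number of passes of a full grid search: the product of the
// per-parameter value counts.
long gridSize(const std::vector<Range> &ranges)
{
  long total = 1;
  for(std::size_t i = 0; i < ranges.size(); i++)
  {
    total *= valuesIn(ranges[i]);
  }
  return total;
}
```

For three ranges from 1 to 10 with step 1, `gridSize` returns 1000. The grid grows multiplicatively with every added parameter, which is why a method that samples the space, such as the particle swarm, needs far fewer EA runs.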