Please add another image of the same size on the right: the original image enlarged so that every pixel is replaced by a block of pixels of the same colour (four pixels, 2x2, instead of one).

To display it, you can replace the ShowImage call with ShowImage4:

```mql5
//ShowImage(canvas_original,"original_image",new_image_width,0,image_width,image_height,image_data);
ShowImage4(canvas_original,"original_image",new_image_width,0,image_width,image_height,image_data);
```

```mql5
//+------------------------------------------------------------------+
//| ShowImage4                                                       |
//| Draws the image enlarged 4 times in each dimension: every source |
//| pixel becomes a 4x4 block of the same colour                     |
//+------------------------------------------------------------------+
bool ShowImage4(CCanvas &canvas,const string name,const int x0,const int y0,
                const int image_width,const int image_height,const uint &image_data[])
  {
   if(ArraySize(image_data)==0 || name=="")
      return(false);
//--- prepare canvas (4 times wider and 4 times taller than the source)
   if(!canvas.CreateBitmapLabel(name,x0,y0,4*image_width,4*image_height,COLOR_FORMAT_XRGB_NOALPHA))
      return(false);
//--- copy image to canvas: output pixel (x,y) takes the colour of source pixel (x/4,y/4)
//--- (the loops cover the last row and column too)
   for(int y=0; y<4*image_height; y++)
      for(int x=0; x<4*image_width; x++)
        {
         uint clr=image_data[(y/4)*image_width+(x/4)];
         canvas.PixelSet(x,y,clr);
        }
//--- ready to draw
   canvas.Update(true);
   return(true);
  }
```

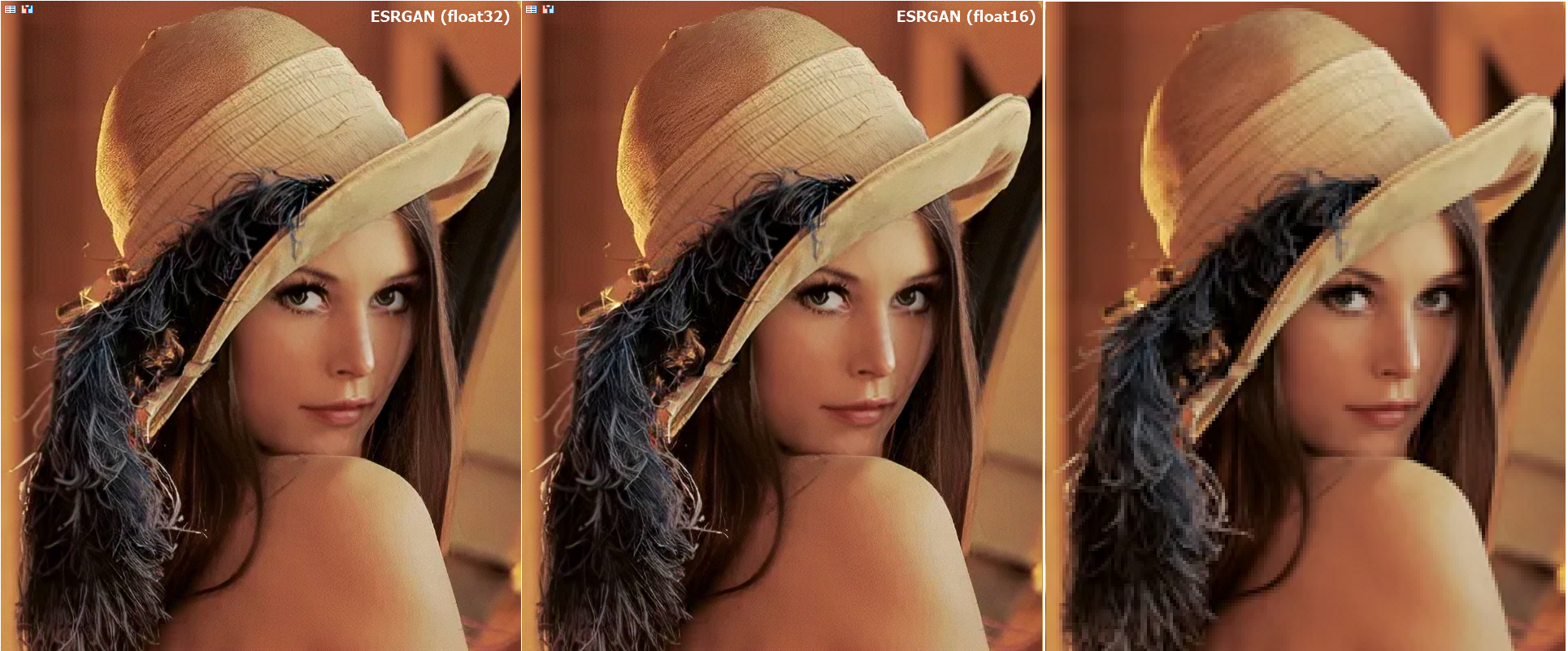

Thanks! I scaled the images down by a factor of two along each axis, so that the image on the right matches the original.

I expected the float16/float32 results to come out close to the original after this transformation, but they are noticeably better! In other words, UpScale + DownScale >> Original.
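The thread does not say which method was used to shrink the images. As a minimal sketch, assuming a simple box filter (a rounded per-channel average of each k x k block), the C++ code below shows why the pixel-duplicated image is a neutral baseline for this comparison: duplicating every pixel into a k x k block and then averaging the blocks back returns the original image exactly. The names `upscale_nearest` and `downscale_box` are hypothetical, and pixels are assumed to be packed as 0xXXRRGGBB, as with COLOR_FORMAT_XRGB_NOALPHA.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using Pixel = std::uint32_t;  // assumed packing: 0xXXRRGGBB

// Nearest-neighbour upscaling by an integer factor k: each source pixel
// becomes a k x k block of the same colour (ShowImage4 above uses k = 4).
std::vector<Pixel> upscale_nearest(const std::vector<Pixel>& src, int w, int h, int k) {
    const int ow = w * k, oh = h * k;
    std::vector<Pixel> dst(static_cast<std::size_t>(ow) * oh);
    for (int y = 0; y < oh; ++y)
        for (int x = 0; x < ow; ++x)
            dst[static_cast<std::size_t>(y) * ow + x] =
                src[static_cast<std::size_t>(y / k) * w + x / k];
    return dst;
}

// Box-filter downscaling by k (an assumed method): each output pixel is the
// rounded per-channel average of a k x k block. Assumes w and h are
// multiples of k; the unused top byte is set to 0 in the output.
std::vector<Pixel> downscale_box(const std::vector<Pixel>& src, int w, int h, int k) {
    const int ow = w / k, oh = h / k;
    const Pixel n = static_cast<Pixel>(k * k);
    std::vector<Pixel> dst(static_cast<std::size_t>(ow) * oh);
    for (int oy = 0; oy < oh; ++oy)
        for (int ox = 0; ox < ow; ++ox) {
            Pixel r = 0, g = 0, b = 0;  // per-channel sums over the block
            for (int dy = 0; dy < k; ++dy)
                for (int dx = 0; dx < k; ++dx) {
                    const Pixel c = src[static_cast<std::size_t>(oy * k + dy) * w + (ox * k + dx)];
                    r += (c >> 16) & 0xFF;
                    g += (c >> 8) & 0xFF;
                    b += c & 0xFF;
                }
            // Divide with rounding to the nearest integer.
            r = (r + n / 2) / n;
            g = (g + n / 2) / n;
            b = (b + n / 2) / n;
            dst[static_cast<std::size_t>(oy) * ow + ox] = (r << 16) | (g << 8) | b;
        }
    return dst;
}
```

Because every pixel in a duplicated block has the same colour, the average of the block is that colour, so `downscale_box(upscale_nearest(img, w, h, k), w * k, h * k, k)` equals `img` (for pixels whose top byte is 0). Any difference between the ESRGAN output and the original after downscaling therefore comes from the model rather than from this round trip.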

P.S. I was surprised. It seems reasonable to run all old images and videos through such an ONNX model.

If the ONNX model is given the same input data, will the output always be the same?

Is there an element of randomness inside the ONNX model?

In general, it depends on which operators are used inside the ONNX model.

For this model the result should be the same: all of its operations (1195 in total) are deterministic.

Examples of half-precision numbers

In these examples, floating-point numbers are written in binary as three fields: the sign bit (1 bit), the exponent (5 bits) and the mantissa (10 bits).

0 01111 0000000000 = $+1 \times 2^{15-15} = 1$

0 01111 0000000001 = $+1.0000000001_2 \times 2^{15-15} = 1 + 2^{-10} = 1.0009765625$ (the next representable number after 1)

That is, for prices with 5 decimal places (most currency pairs), the next float16 value after 1.00000 is 1.00098; nothing in between can be represented.
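To check the arithmetic above, here is a minimal C++ sketch that decodes a float16 bit pattern into a double, following the IEEE 754 binary16 layout (1 sign bit, 5 exponent bits with bias 15, 10 mantissa bits). The function name `half_to_double` is hypothetical; it is not the conversion function from the article.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Decodes an IEEE 754 binary16 (float16) bit pattern:
// 1 sign bit, 5 exponent bits (bias 15), 10 mantissa bits.
double half_to_double(std::uint16_t bits) {
    const int sign = (bits >> 15) & 0x1;
    const int exponent = (bits >> 10) & 0x1F;
    const int mantissa = bits & 0x3FF;
    double value;
    if (exponent == 0)           // zero or subnormal: 2^-14 * (m / 1024)
        value = std::ldexp(static_cast<double>(mantissa), -24);
    else if (exponent == 0x1F)   // all-ones exponent: infinity or NaN
        value = mantissa == 0 ? std::numeric_limits<double>::infinity()
                              : std::numeric_limits<double>::quiet_NaN();
    else                         // normal: 2^(e-15) * (1 + m / 1024)
        value = std::ldexp(static_cast<double>(1024 + mantissa), exponent - 25);
    return sign ? -value : value;
}
```

For example, `half_to_double(0x3C00)` gives 1.0 and `half_to_double(0x3C01)` gives 1.0009765625. Near 1.0 the spacing between neighbouring float16 values is $2^{-10} \approx 0.00098$, roughly 98 times larger than the 0.00001 tick of a 5-digit quote.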

Cool! But not for trading or for working with quotes.

Check out the new article: Working with ONNX models in float16 and float8 formats.

Data formats used to represent machine learning models play a crucial role in their effectiveness. In recent years, several new data types have emerged that are designed specifically for deep learning models.

In this article, we will focus on two such new data formats, float16 and float8, which are beginning to be actively used in modern ONNX models. These formats are alternatives to more precise but more resource-intensive floating-point formats such as float32. They trade some accuracy for smaller models and faster computation, which makes them attractive for many machine learning tasks. We will explore the key characteristics and advantages of float16 and float8, and introduce functions for converting them to the standard float and double types.

This will help developers and researchers better understand how to effectively use these formats in their projects and models. As an example, we will examine the operation of the ESRGAN ONNX model, which is used for image quality enhancement.
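As an illustration of the kind of conversion the article describes, here is a minimal C++ sketch for one float8 variant, E4M3FN (ONNX type FLOAT8E4M3FN): 1 sign bit, 4 exponent bits with bias 7 and 3 mantissa bits, with no infinities and only the S.1111.111 pattern reserved for NaN. The function name is hypothetical and this is not the article's own implementation; other float8 variants (for example E5M2) use different layouts and need their own decoders.

```cpp
#include <cmath>
#include <cstdint>
#include <limits>

// Decodes a float8 E4M3FN bit pattern:
// 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits.
// "FN" = finite only: no infinities, S.1111.111 is the only NaN encoding.
double float8_e4m3fn_to_double(std::uint8_t bits) {
    const int sign = (bits >> 7) & 0x1;
    const int exponent = (bits >> 3) & 0xF;
    const int mantissa = bits & 0x7;
    if (exponent == 0xF && mantissa == 0x7)
        return std::numeric_limits<double>::quiet_NaN();
    double value;
    if (exponent == 0)   // zero or subnormal: 2^-6 * (m / 8)
        value = std::ldexp(static_cast<double>(mantissa), -9);
    else                 // normal: 2^(e-7) * (1 + m / 8)
        value = std::ldexp(static_cast<double>(8 + mantissa), exponent - 10);
    return sign ? -value : value;
}
```

With only 3 mantissa bits, the step after 1.0 is already 0.125 (`0x38` decodes to 1.0, `0x39` to 1.125), and the largest finite value is 448 (`0x7E`).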

Author: MetaQuotes