cool

thanks for the article!

Handy script, thanks.

I baked some too:

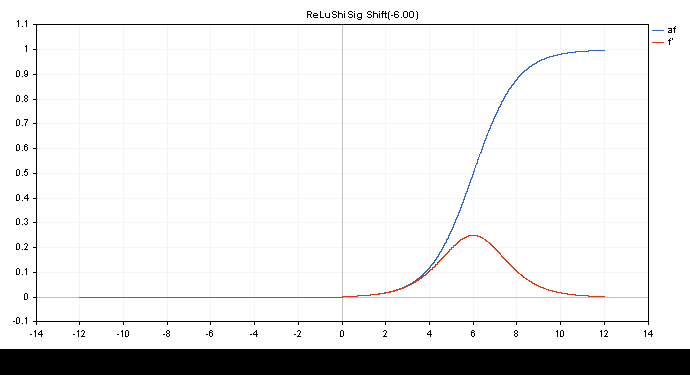

ReLU + Sigmoid + Shift, to capture output from 0.0 to 1.0 (input -12 to 12)

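```mql5
// standard logistic sigmoid
double activationSigmoid(double x){ return(1/(1+MathExp(-1.0*x))); }
// sigmoid derivative, computed from the activation value (the output)
double derivativeSigmoid(double activation_value){ return(activation_value*(1-activation_value)); }
// ReLU gate in front of a shifted sigmoid: 0 for x <= 0, sigmoid(x + shift) otherwise
double activationReLuShiSig(double x,double shift=-6.0)
  {
   if(x>0.0)
      return(activationSigmoid(x+shift));
   return(0.0);
  }
// also takes the activation value (the output), not the raw input
double derivativeReLuShiSig(double output)
  {
   if(output>0.0)
      return(derivativeSigmoid(output));
   return(0.0);
  }
```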
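Written out, the combined function and its derivative look roughly like this, with $s$ standing for the `shift` parameter and $\sigma$ for `activationSigmoid` (a sketch of the math behind the code, not something stated in the post). Since the derivative on $x > 0$ depends only on the output $f(x)$, `derivativeReLuShiSig` can be fed the activation value, just like `derivativeSigmoid`. With the default $s = -6$, the function jumps from 0 to $\sigma(-6) \approx 0.0025$ at $x = 0$ and reaches $\sigma(6) \approx 0.9975$ at $x = 12$.

$$
\sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
f(x) = \begin{cases} \sigma(x + s), & x > 0 \\ 0, & x \le 0 \end{cases}
$$

$$
f'(x) = \begin{cases} \sigma(x + s)\,\bigl(1 - \sigma(x + s)\bigr) = f(x)\,\bigl(1 - f(x)\bigr), & x > 0 \\ 0, & x < 0 \end{cases}
$$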

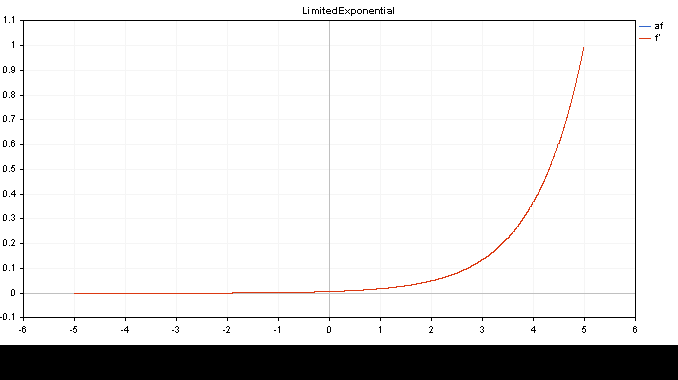

Limited exponential, af = f' (the activation function is its own derivative), input -5.0 to 5.0

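```mql5
// e^x scaled down by 149 (close to e^5 ≈ 148.4), so the output is about 1.0 at x = 5
double activationLimitedExponential(double x){ return(MathExp(x)/149.00); }
// and the derivative is itself, so it is computed from the activation value (the output)
double derivativeLimitedExponential(double activation_value){ return(activation_value); }
```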
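The scale factor appears to be chosen so the output tops out near 1.0 at the upper end of the input range, since $149 \approx e^{5} \approx 148.41$ (an interpretation, not stated in the post). Differentiating confirms that the function is its own derivative, which is why `derivativeLimitedExponential` simply returns the activation value it is given:

$$
f(x) = \frac{e^{x}}{149}, \qquad f'(x) = \frac{e^{x}}{149} = f(x)
$$

$$
f(-5) = \frac{e^{-5}}{149} \approx 4.5 \times 10^{-5}, \qquad f(5) = \frac{e^{5}}{149} \approx 0.996
$$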

👨‍🔧


New article "Matrices and vectors in MQL5: Activation functions" has been published:

Here we will describe only one aspect of machine learning: activation functions. In artificial neural networks, a neuron's activation function calculates the output signal value from the value of an input signal or a set of input signals. We will delve into the inner workings of the process.
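In the usual formulation of an artificial neuron (the weights $w_i$, bias $b$ and activation function $\varphi$ are standard notation, not taken from the article), the activation function is applied to the weighted sum of the inputs:

$$
y = \varphi\!\left(\sum_{i=1}^{n} w_i x_i + b\right)
$$

For example, with the sigmoid $\varphi(z) = 1/(1 + e^{-z})$ and a weighted sum of $z = 0$, the neuron outputs $y = 0.5$.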

As illustrations, graphs of the activation functions and their derivatives have been plotted over a monotonically increasing sequence of inputs from -5 to 5. A script that displays a function graph on the price chart has also been developed. Pressing the Page Down key opens a file dialog for specifying the name of the saved image.

The ESC key terminates the script. The script itself is attached below.

Author: MetaQuotes