Population optimization algorithms: Mind Evolutionary Computation (MEC) algorithm

Contents

1. Introduction

2. Algorithm

3. Test results

1. Introduction

Evolutionary computation is a subfield of computational intelligence, machine learning and artificial intelligence. It is widely used in optimization problems, robot design, creating decision trees, tuning data analysis algorithms, training neural networks and tuning hyperparameters. Instead of using classical numerical methods, evolutionary computing uses inspiration from biological evolution to develop good solutions. They are especially useful when there is no known derivative of the fitness function or when the fitness function has many local extrema that can hamper sequential methods.

Population algorithms used in evolutionary calculations have a number of advantages over classical algorithms when solving complex high-dimensional problems. They can more efficiently find suboptimal solutions that are close enough to the optimal one, which is often acceptable in practical optimization problems.

One interesting approach in evolutionary computing is the Mind Evolutionary Computation (MEC) algorithm proposed in 1998 by Chengai and his co-authors. Unlike the expected modeling of the human brain, the MEC algorithm models some aspects of human behavior in society. In this algorithm, each individual is considered as an intelligent agent functioning in a group of people. When making decisions, an individual feels influenced both by members of his group and by members of other groups. To achieve a high position in society, an individual has to learn from the most successful individuals in his group. At the same time, in order for his group to become more successful than other groups, all individuals must be guided by the same principle in intergroup competition. An important aspect of the MEC algorithm is the exchange of information between individuals within a group and between groups. This reflects the need for continuous and free exchange of information for the successful development of a society of intelligent individuals.

MEC algorithms implement the presented concept using local competition and dissimilation operations responsible for local and global search, respectively. Message boards are used by the algorithm to store information about the evolutionary history of the population. The optimization process is controlled based on this information.

2. Algorithm

The MEC algorithm can be interpreted as a multi-population algorithm consisting of leading and lagging groups. The number of agents in each group is assumed to be the same. Each group has its own local message board, and there is also a common global message board for the entire multipopulation.

The original version of the MEC algorithm, known as Simple MEC (SMEC), uses group initialization, local competition and dissimilation operations.

The initialization operation creates groups and places them in the search area. For each group, a random vector is generated, which is identified with the individual of the group. Then the initial coordinates of the remaining agents of the group are determined, placing them randomly around the individual of the group using the normal distribution law.

The local competition operation implements a local search for the maximum fitness function of each group. For each group, information about the current winning agent is read from its message board. Then new coordinates of the remaining agents in the group are determined and placed randomly around the winner using the normal distribution law. New accounts of the group agents are calculated, and a new group winner having the maximum current account is determined. Information about the new winner is posted on the group message board.

The dissimilation operation controls the global search. First, the accounts of all groups from the message bulletin board are read. These accounts are then compared with each other. If the score of the leading group is less than the score of one of the lagging groups, then the latter takes the place of the leader, and the leading group becomes lagging. If a group's score is lower than the scores of all lagging groups, then the group is removed from the population. Instead of deleted groups, new groups are initialized using the initialization operation.

Thus, the MEC algorithm is a multi-population algorithm that includes initialization, local competition and dissimilation operations.

Above was a general description of the simple MEC algorithm from the creators of the algorithm. Such a description, in my opinion, makes it difficult to understand the principles underlying the search strategy in a multidimensional space. As a result, many questions arise, such as “what is a message board and how does it differ from the characteristics of specific individuals in a group?” and other questions, so let’s take a closer look at these subtleties.

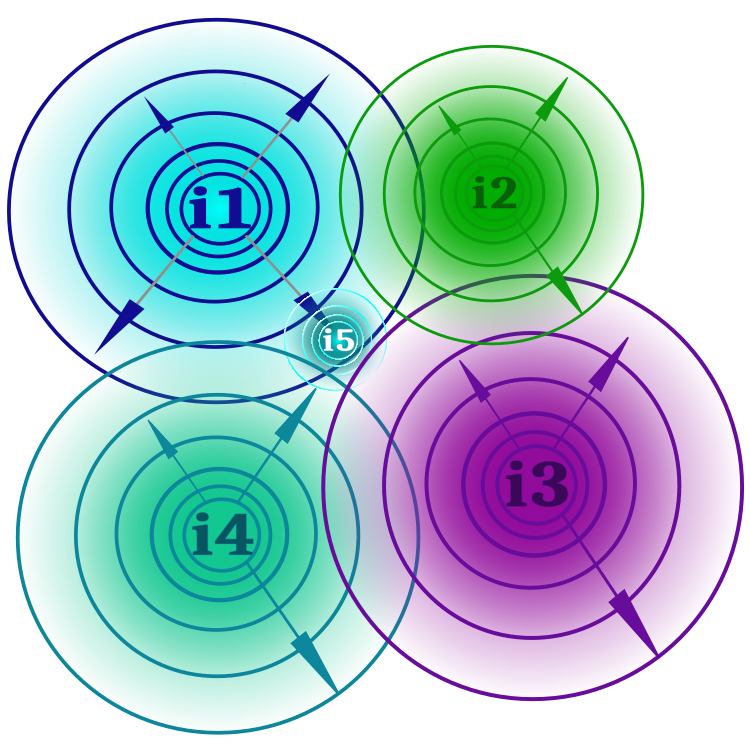

It was said above that the algorithm models human society and the interaction between its members. The most simple and clear way to imagine the concept of "idea" is that it can be a scientific idea, artistic, literary or any other. In society there are always dominant ideas and alternative ones. Unlike the authors, I will not divide ideas into "leading and lagging" in relation to groups. As we will see below, this will give some advantage to the algorithm compared to its basic version. Since each idea includes at least one thesis (a thesis is an optimized parameter of the fitness function), an idea can have ideological branches, just as in science there are various scientific theories and movements. Figure 1 schematically shows the ideas labeled i1, i2, etc. Each idea can give branches within the limits determined by the power of thought (an external parameter of the algorithm). The further the branch is from the idea, the less likely it is (the probability and boundaries of ideological branches are shown as expanding concentric circles). If the idea has shown itself to be more consistent (the value of the fitness function is better) than its ideological parent, then this branch replaces the parent idea (the new idea as a result of the ideological branch is shown in the figure as i5). In the figure, we can see that there are no restrictions on crossing ideological boundaries, which means the emergence of ideological branches with the same theses is possible.

Figure 1. Ideas i1, i2, i3, i4, their boundaries, intersections of boundaries and the emergence of a new idea i5 as a result of the branching of idea i1

Idea branching implements local competition within each idea and provides a search in the vicinity of the local extremum. The multiple branches of all ideas represent a multipopulation, while a single idea and its branches represent a single population. Each idea only stores its theses and practical value, and the user program refers to ideological branches, and not to the ideas themselves.

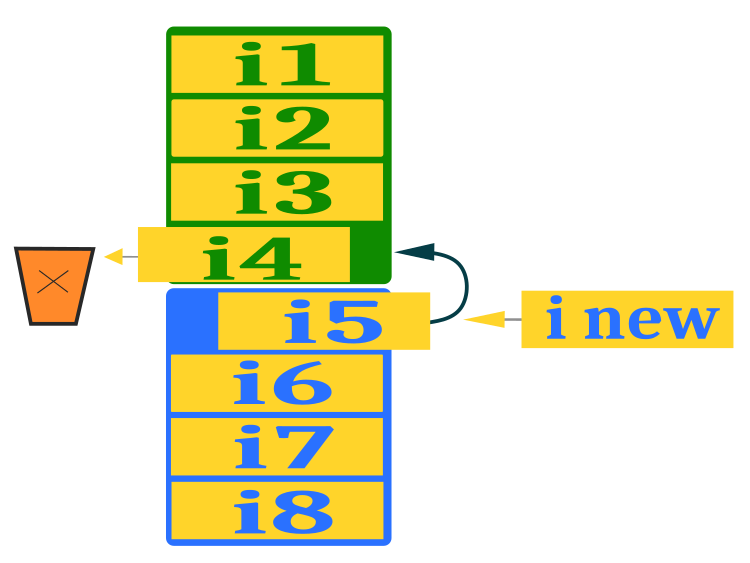

To ensure global search, it is necessary to ensure the emergence of new ideas beyond the current ones in the population, otherwise the ideas will get stuck in local extrema and there will be no emergence of a better value of the fitness function. The process of dissimilation (destruction) is intended for this purpose. We will use a slightly different scheme for the exchange of ideas between the dominant group (green color) of ideas and the alternative group (blue color) than in the original algorithm. After separate sorting of ideas in each group, we remove the worst from the dominant group replacing it with the best from the alternative group, thereby ensuring an ideological exchange. In the vacant place in the alternative group, we place a new idea, which is assembled from the abstracts of randomly selected ideas from the dominant group (excluding the last one). To provide variability, a random component can be added to this action, which I leave for possible further experiments by researchers of optimization algorithms. Selecting abstracts from the ideas of the dominant group increases the combinatorial abilities of the algorithm. Figure 2 below shows a diagram of replacing an idea from an alternative group and creating a new one.

Figure 2. Replacing an idea from an alternative group and creating a new one

Let's write MEC pseudo-code to simplify the further writing of the algorithm code:

1.1 In case of the first run of the algorithm

1.1.1 Create randomly two groups of ideas according to the field of ideas (dominant and alternative)

1.2 In case of the second and subsequent runs of the algorithm

1.2.1 For a dominant idea to create branches according to Levy's law*, one of the branches will be the result of a combination of theses from the dominant ideas.

1.2.2 For an alternative idea, all branches are created according to Levy's law*

2 Calculate the value of ideological branches

3.1 If there is a better ideological branch, replace the corresponding idea

3.2 Sort the dominant and alternative groups separately

3.3 Replace the dominant group's worst idea with a better idea from the alternative group

3.4 Instead of an empty idea in an alternative group, create a new idea based on elements of the dominant group** (all except the last replaced one)

* - instead of a normal distribution proposed by the author

** - instead of creating random theses proposed by the author

We can see from the pseudocode that the original algorithm has undergone changes.

Firstly, instead of the normal distribution law proposed by the authors of the algorithm, we use the Levy flight law, which has increased the research and refinement capabilities of the algorithm. Note that Levy's law of flight cannot always improve the efficiency of each optimization algorithm if we try to use it instead of the normal or uniform distribution. This is primarily due to the peculiarities of the algorithm’s search strategy. For instance, in the previous article, we looked at the shuffled frog-leaping algorithm. I tried to use Levy's law of flights in frog leaps instead of the normal distribution, which did not bring noticeable improvements in search performance.

Secondly, the original algorithm does not provide for any combinatorial mechanisms in the search strategy. Let’s try to figure out why this is extremely important. For example, imagine several baskets containing aluminum (light) balls and one gold (heavy) ball. Our task is to collect balls into an empty basket so that they are all gold, while we are allowed to either smoothly change the chemical composition of each new ball in the basket and it is unknown whether this will increase the mass, or simply randomly take a ball lying in one of the baskets. We know that one of them must be gold, and we can only weigh the entire basket and not the balls individually. Once we have a full basket, we have to weigh it and the challenge is to maximize the weight of the basket. This problem shows that by simple combination we can collect a basket full of golden balls in much fewer steps.

Let's move on to a description of the algorithm code.

The elementary logical unit of the algorithm is the idea, and it will also represent an ideological branch. The S_Idea structure is perfect for describing an idea. This is an object containing information about the coordinates and suitability of an idea. The structure features the Init method for the ease of initializing the fitness with a negative value of DBL_MAX.

//—————————————————————————————————————————————————————————————————————————————— struct S_Idea { void Init (int size) { ArrayResize (c, size); f = -DBL_MAX; } double c []; //coordinates double f; //fitness }; //——————————————————————————————————————————————————————————————————————————————

In the C_AO_MEC class, describe the MEC algorithm. It contains a number of variables and methods for working with these variables.

Class variables:

- cB: array containing the best coordinates

- fB: fitness function value for the best coordinates

- idBr: array containing ideological branches

- rangeMax: array containing the maximum search values

- rangeMin: array containing the minimum search values

- rangeStep: array containing search steps

- leadIdeolGroup: array containing a leading ideological group

- alteIdeolGroup: array containing an alternative ideological group

- tempIdeolGroup: array containing a temporary ideological group

- coordinatesNumber: number of coordinates

- populationSize: population size

- ideasNumber: number of ideas

- thoughtPower: power of thought

- ideasBr: number of ideological branches

- leadIdGroupSize: size of the leading ideological group

- alteIdGroupSize: size of the alternative ideological group

- vect: vector to use coordinate increment

- ind: array of indices

- val: array of values

- revision: revision flag

Class methods:

- Init: initializing a class with passing parameters

- Moving: performing a movement

- Revision

- Sorting: sorting ideological groups

- SeInDiSp: search interval calculation

- RNDfromCI: generating a random number from a given interval

//—————————————————————————————————————————————————————————————————————————————— class C_AO_MEC { //---------------------------------------------------------------------------- public: double cB []; //best coordinates public: double fB; //FF of the best coordinates public: S_Idea idBr []; //ideological branches public: double rangeMax []; //maximum search range public: double rangeMin []; //manimum search range public: double rangeStep []; //step search public: void Init (const int coordinatesNumberP, //coordinates number const int populationSizeP, //population size const int ideasNumberP, //ideas number const double thoughtPowerP); //thought power public: void Moving (); public: void Revision (); //---------------------------------------------------------------------------- private: S_Idea leadIdeolGroup []; //leading ideological group private: S_Idea alteIdeolGroup []; //alternative ideological group private: S_Idea tempIdeolGroup []; //temporal ideological group private: int coordinatesNumber; //coordinates number private: int populationSize; //population size private: int ideasNumber; //ideas number private: double thoughtPower; //thought power private: int ideasBr; //number of ideological branches private: int leadIdGroupSize; //leading ideological group size private: int alteIdGroupSize; //alternative ideological group size private: double vect []; //vector private: int ind []; private: double val []; private: bool revision; private: void Sorting (S_Idea &ideas []); private: double SeInDiSp (double In, double InMin, double InMax, double Step); private: double RNDfromCI (double min, double max); }; //——————————————————————————————————————————————————————————————————————————————

The Init initialization method performs the following actions:

- At the beginning of the code, the initial value of the random number generator is set and the variable fB is set to the minimum value of 'double' type.

- Next, the coordinatesNumber, populationSize, ideasNumber and thoughtPower variables are set to the values passed to the Init function.

- The values of the ideasBr, leadIdGroupSize and alteIdGroupSize are calculated based on the populationSize and ideasNumber values.

- The rangeMax, rangeMin, rangeStep, vect, ind, val, tempIdeolGroup and cB arrays are resized using the ArrayResize function.

- The objects of the Ideology class are then created in the leadIdeolGroup and alteIdeolGroup arrays using 'for' loops and Init function.

- Ideology class objects are created next in the idBr array using 'for' loop and Init function.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_MEC::Init (const int coordinatesNumberP, //coordinates number const int populationSizeP, //population size const int ideasNumberP, //ideas number const double thoughtPowerP) //thought power { MathSrand ((int)GetMicrosecondCount ()); // reset of the generator fB = -DBL_MAX; revision = false; coordinatesNumber = coordinatesNumberP; populationSize = populationSizeP; ideasNumber = ideasNumberP; thoughtPower = thoughtPowerP; ideasBr = populationSize / ideasNumber; leadIdGroupSize = ideasNumber / 2; alteIdGroupSize = ideasNumber - leadIdGroupSize; ArrayResize (rangeMax, coordinatesNumber); ArrayResize (rangeMin, coordinatesNumber); ArrayResize (rangeStep, coordinatesNumber); ArrayResize (vect, coordinatesNumber); ArrayResize (ind, alteIdGroupSize, alteIdGroupSize); ArrayResize (val, alteIdGroupSize, alteIdGroupSize); ArrayResize (tempIdeolGroup, alteIdGroupSize, alteIdGroupSize); ArrayResize (cB, coordinatesNumber); ArrayResize (leadIdeolGroup, leadIdGroupSize); for (int i = 0; i < leadIdGroupSize; i++) leadIdeolGroup [i].Init (coordinatesNumber); ArrayResize (alteIdeolGroup, alteIdGroupSize); for (int i = 0; i < alteIdGroupSize; i++) alteIdeolGroup [i].Init (coordinatesNumber); ArrayResize (idBr, populationSize); for (int i = 0; i < populationSize; i++) idBr [i].Init (coordinatesNumber); } //——————————————————————————————————————————————————————————————————————————————

The method of creating Moving ideological branches performs the core logic of the MEC search strategy. Part of the code is executed only on the first iteration of the algorithm, and the rest of the code is executed on each iteration.

In the first iteration, it is necessary to initialize a vector, which is filled with values calculated based on the range and thought power (this is the increment during branching), and create ideas in two groups - dominant and alternative. To do this, we will scatter the ideas of both groups (they are equivalent) with equal probability throughout the entire search field.

//============================================================================ if (!revision) { fB = -DBL_MAX; int cnt = 0; for (int c = 0; c < coordinatesNumber; c++) vect [c] = (rangeMax [c] - rangeMin [c]) * thoughtPower; //-------------------------------------------------------------------------- for (int i = 0; i < leadIdGroupSize; i++) { for (int c = 0; c < coordinatesNumber; c++) { coord = RNDfromCI (rangeMin [c], rangeMax [c]); leadIdeolGroup [i].c [c] = SeInDiSp (coord, rangeMin [c], rangeMax [c], rangeStep [c]); } leadIdeolGroup [i].f = -DBL_MAX; } //-------------------------------------------------------------------------- for (int i = 0; i < alteIdGroupSize; i++) { for (int c = 0; c < coordinatesNumber; c++) { coord = RNDfromCI (rangeMin [c], rangeMax [c]); alteIdeolGroup [i].c [c] = SeInDiSp (coord, rangeMin [c], rangeMax [c], rangeStep [c]); } alteIdeolGroup [i].f = -DBL_MAX; } revision = true; }

Further, in the first and subsequent iterations, we will generate ideological branches, since we have already created the ideas themselves. We are interested precisely in ideological branches, which we can evaluate using the fitness function.

The main operation for creating a branch is the task of moving to the abstracts of ideas according to Levy's law of flights with normalization by the coefficient of thought power. We will do this for all ideas of the alternative group and for all but the last one for the dominant group according to the equation:

coord = leadIdeolGroup [i].c [c] + r1 * vect [c] * pow (r2, -2.0);

for the last idea of the dominant group, we will randomly select an idea from this group and take the thesis of the corresponding index:

r1 = RNDfromCI (0.0, leadIdGroupSize - 1); idBr [cnt].c [c] = leadIdeolGroup [(int)r1].c [c];

Below is an entire code for creating ideological branches for both groups:

//---------------------------------------------------------------------------- for (int i = 0; i < leadIdGroupSize; i++) { for (int b = 0; b < ideasBr; b++) { if (b < ideasBr -1) { for (int c = 0; c < coordinatesNumber; c++) { r1 = RNDfromCI (0.0, 1.0); r1 = r1 > 0.5 ? 1.0 : -1.0; r2 = RNDfromCI (1.0, 20.0); coord = leadIdeolGroup [i].c [c] + r1 * vect [c] * pow (r2, -2.0); idBr [cnt].c [c] = SeInDiSp (coord, rangeMin [c], rangeMax [c], rangeStep [c]); } } else { for (int c = 0; c < coordinatesNumber; c++) { r1 = RNDfromCI (0.0, leadIdGroupSize - 1); idBr [cnt].c [c] = leadIdeolGroup [(int)r1].c [c]; } } cnt++; } } //---------------------------------------------------------------------------- for (int i = 0; i < alteIdGroupSize; i++) { for (int b = 0; b < ideasBr; b++) { for (int c = 0; c < coordinatesNumber; c++) { r1 = RNDfromCI (0.0, 1.0); r1 = r1 > 0.5 ? 1.0 : -1.0; r2 = RNDfromCI (1.0, 20.0); coord = alteIdeolGroup [i].c [c] + r1 * vect [c] * pow (r2, -2.0); idBr [cnt].c [c] = SeInDiSp (coord, rangeMin [c], rangeMax [c], rangeStep [c]); } cnt++; } }

The Revision method is designed to revise ideas based on the results of assessing their ideological branches, as well as to update the global solution indicators. The code performs the following steps:

1. cnt and pos variables are initialized.

2. Replacing the best ideas: The search for the best ideological branch from the global one (idBr) occurs in the loop for each leader in the leader group (leadIdeolGroup). For each branch, we check if its fitness function value (f) is greater than the value of the current leader, then the position (pos) is set equal to the current index (cnt). If a better idea is found, the functional value and leader coordinates are updated with the values of that idea. If the new idea's feature value is also greater than the current best feature value (fB), then fB and the best idea's coordinates (cB) are also updated.

3. Same goes for the group of alternative solutions (alteIdeolGroup).

4. Sorting idea groups: Groups of leaders and alternative ideas are sorted in descending order of fitness function value.

5. Replacing the worst idea: The worst idea in the leader group (leadIdeolGroup) is replaced with the best idea of the alternative solutions group (alteIdeolGroup).

6. Creating of a new idea: for each coordinate (c) in the group of alternative solutions (alteIdeolGroup), a new idea is created based on the elements of the dominant group (leadIdeolGroup). The coordinate is selected randomly from the dominant group of leaders.

//—————————————————————————————————————————————————————————————————————————————— void C_AO_MEC::Revision () { int cnt = 0; int pos = -1; //---------------------------------------------------------------------------- //4. If there is a better ideological branch, replace the corresponding idea for (int i = 0; i < leadIdGroupSize; i++) { pos = -1; for (int b = 0; b < ideasBr; b++) { if (idBr [cnt].f > leadIdeolGroup [i].f) pos = cnt; cnt++; } if (pos != -1) { leadIdeolGroup [i].f = idBr [pos].f; ArrayCopy (leadIdeolGroup [i].c, idBr [pos].c, 0, 0, WHOLE_ARRAY); if (idBr [pos].f > fB) { fB = idBr [pos].f; ArrayCopy (cB, idBr [pos].c, 0, 0, WHOLE_ARRAY); } } } //---------------------------------------------------------------------------- for (int i = 0; i < alteIdGroupSize; i++) { pos = -1; for (int b = 0; b < ideasBr; b++) { if (idBr [cnt].f > alteIdeolGroup [i].f) pos = cnt; cnt++; } if (pos != -1) { alteIdeolGroup [i].f = idBr [pos].f; ArrayCopy (alteIdeolGroup [i].c, idBr [pos].c, 0, 0, WHOLE_ARRAY); if (idBr [pos].f > fB) { fB = idBr [pos].f; ArrayCopy (cB, idBr [pos].c, 0, 0, WHOLE_ARRAY); } } } //---------------------------------------------------------------------------- //5. Sort two groups of ideas. Sorting (leadIdeolGroup); Sorting (alteIdeolGroup); //---------------------------------------------------------------------------- //6. Replace the worst idea of the first group with the best idea from the second group leadIdeolGroup [leadIdGroupSize - 1] = alteIdeolGroup [0]; //---------------------------------------------------------------------------- //7. Instead of an empty idea, create a new idea based on elements of the dominant group double rnd = 0.0; double coord = 0.0; for (int c = 0; c < coordinatesNumber; c++) { rnd = RNDfromCI (0.0, leadIdGroupSize - 2); alteIdeolGroup [0].c [c] = leadIdeolGroup [(int)rnd].c [c]; } } //——————————————————————————————————————————————————————————————————————————————

3. Test results

Printout of the operation of the mind evolution algorithm on a test bench:

=============================

5 Rastrigin's; Func runs 10000 result: 78.73205273291103

Score: 0.97553

25 Rastrigin's; Func runs 10000 result: 58.554840607439516

Score: 0.72553

500 Rastrigin's; Func runs 10000 result: 42.32395504312925

Score: 0.52442

=============================

5 Forest's; Func runs 10000 result: 1.2024830541165685

Score: 0.68018

25 Forest's; Func runs 10000 result: 0.4588423143844061

Score: 0.25954

500 Forest's; Func runs 10000 result: 0.08756810826330522

Score: 0.04953

=============================

5 Megacity's; Func runs 10000 result: 5.279999999999999

Score: 0.44000

25 Megacity's; Func runs 10000 result: 2.048

Score: 0.17067

500 Megacity's; Func runs 10000 result: 0.4364

Score: 0.03637

=============================

All score: 3.86178

MEC on the Rastrigin test function.

MEC on the Forest test function.

MEC on the Megacity test function.

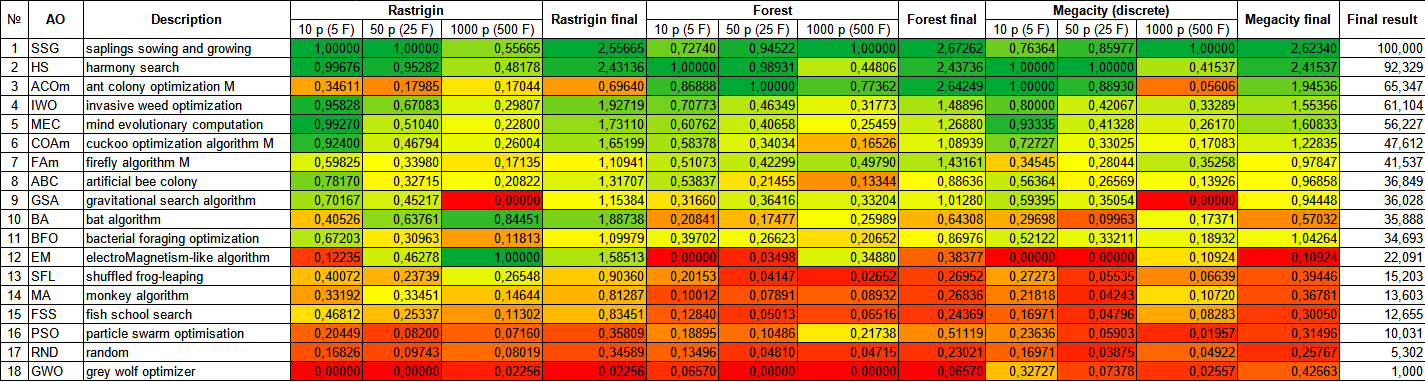

When analyzing the ranking table of optimization algorithm testing results, it can be noted that the MEC algorithm achieves very high performance and takes an honorable fifth place. MEC demonstrates excellent results on the Rastrigin, Forest and Megacity functions, despite the completely different surface of these test functions. Particularly impressive results are achieved with the Megacity function with 10 parameters. However, it is worth noting that MEC shows even higher efficiency on functions with fewer optimized parameters, compared to other participants in the comparative analysis. This is more of a disadvantage.

| # | AO | Description | Rastrigin | Rastrigin final | Forest | Forest final | Megacity (discrete) | Megacity final | Final result | ||||||

| 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | 10 p (5 F) | 50 p (25 F) | 1000 p (500 F) | |||||||

| 1 | SSG | saplings sowing and growing | 1.00000 | 1.00000 | 0.55665 | 2.55665 | 0.72740 | 0.94522 | 1.00000 | 2.67262 | 0.76364 | 0.85977 | 1.00000 | 2.62340 | 100.000 |

| 2 | HS | harmony search | 0.99676 | 0.95282 | 0.48178 | 2.43136 | 1.00000 | 0.98931 | 0.44806 | 2.43736 | 1.00000 | 1.00000 | 0.41537 | 2.41537 | 92.329 |

| 3 | ACOm | ant colony optimization M | 0.34611 | 0.17985 | 0.17044 | 0.69640 | 0.86888 | 1.00000 | 0.77362 | 2.64249 | 1.00000 | 0.88930 | 0.05606 | 1.94536 | 65.347 |

| 4 | IWO | invasive weed optimization | 0.95828 | 0.67083 | 0.29807 | 1.92719 | 0.70773 | 0.46349 | 0.31773 | 1.48896 | 0.80000 | 0.42067 | 0.33289 | 1.55356 | 61.104 |

| 5 | MEC | mind evolutionary computation | 0.99270 | 0.51040 | 0.22800 | 1.73110 | 0.60762 | 0.40658 | 0.25459 | 1.26880 | 0.93335 | 0.41328 | 0.26170 | 1.60833 | 56.227 |

| 6 | COAm | cuckoo optimization algorithm M | 0.92400 | 0.46794 | 0.26004 | 1.65199 | 0.58378 | 0.34034 | 0.16526 | 1.08939 | 0.72727 | 0.33025 | 0.17083 | 1.22835 | 47.612 |

| 7 | FAm | firefly algorithm M | 0.59825 | 0.33980 | 0.17135 | 1.10941 | 0.51073 | 0.42299 | 0.49790 | 1.43161 | 0.34545 | 0.28044 | 0.35258 | 0.97847 | 41.537 |

| 8 | ABC | artificial bee colony | 0.78170 | 0.32715 | 0.20822 | 1.31707 | 0.53837 | 0.21455 | 0.13344 | 0.88636 | 0.56364 | 0.26569 | 0.13926 | 0.96858 | 36.849 |

| 9 | GSA | gravitational search algorithm | 0.70167 | 0.45217 | 0.00000 | 1.15384 | 0.31660 | 0.36416 | 0.33204 | 1.01280 | 0.59395 | 0.35054 | 0.00000 | 0.94448 | 36.028 |

| 10 | BA | bat algorithm | 0.40526 | 0.63761 | 0.84451 | 1.88738 | 0.20841 | 0.17477 | 0.25989 | 0.64308 | 0.29698 | 0.09963 | 0.17371 | 0.57032 | 35.888 |

| 11 | BFO | bacterial foraging optimization | 0.67203 | 0.30963 | 0.11813 | 1.09979 | 0.39702 | 0.26623 | 0.20652 | 0.86976 | 0.52122 | 0.33211 | 0.18932 | 1.04264 | 34.693 |

| 12 | EM | electroMagnetism-like algorithm | 0.12235 | 0.46278 | 1.00000 | 1.58513 | 0.00000 | 0.03498 | 0.34880 | 0.38377 | 0.00000 | 0.00000 | 0.10924 | 0.10924 | 22.091 |

| 13 | SFL | shuffled frog-leaping | 0.40072 | 0.23739 | 0.26548 | 0.90360 | 0.20153 | 0.04147 | 0.02652 | 0.26952 | 0.27273 | 0.05535 | 0.06639 | 0.39446 | 15.203 |

| 14 | MA | monkey algorithm | 0.33192 | 0.33451 | 0.14644 | 0.81287 | 0.10012 | 0.07891 | 0.08932 | 0.26836 | 0.21818 | 0.04243 | 0.10720 | 0.36781 | 13.603 |

| 15 | FSS | fish school search | 0.46812 | 0.25337 | 0.11302 | 0.83451 | 0.12840 | 0.05013 | 0.06516 | 0.24369 | 0.16971 | 0.04796 | 0.08283 | 0.30050 | 12.655 |

| 16 | PSO | particle swarm optimisation | 0.20449 | 0.08200 | 0.07160 | 0.35809 | 0.18895 | 0.10486 | 0.21738 | 0.51119 | 0.23636 | 0.05903 | 0.01957 | 0.31496 | 10.031 |

| 17 | RND | random | 0.16826 | 0.09743 | 0.08019 | 0.34589 | 0.13496 | 0.04810 | 0.04715 | 0.23021 | 0.16971 | 0.03875 | 0.04922 | 0.25767 | 5.302 |

| 18 | GWO | grey wolf optimizer | 0.00000 | 0.00000 | 0.02256 | 0.02256 | 0.06570 | 0.00000 | 0.00000 | 0.06570 | 0.32727 | 0.07378 | 0.02557 | 0.42663 | 1.000 |

Summary

The Mind Evolutionary Computation (MEC) algorithm is an efficient and powerful optimization method that is based on the principles of biological evolution. It has a number of significant advantages that make it attractive for solving complex optimization problems.

The evolution of the mind algorithm can be applied to a wide range of problems, including functional optimization, searching for optimal parameter values, machine learning problems, and others. It does not require knowledge of the analytical form of the objective function and can work with continuous, discrete or combinatorial solution spaces.

MEC is easily parallelized, allowing for the use of multiple computing resources to speed up the optimization process. This is especially useful when working with problems that require a lot of calculations or iterations.

MEC has a certain tendency to get stuck in local extrema, but this drawback manifests itself mainly on discrete and saw-like functions. When designing a fitness function, this drawback can be considered insignificant.

MEC performs relatively well on problems where the solution space is high in dimension. The algorithm has not been widely studied and has potential for improvement.

In general, the MEC algorithm is a powerful optimization tool that has flexibility, versatility and good elaboration of local extrema. These advantages make it an attractive choice for solving complex optimization problems in various fields. The simplicity of the algorithm logic can be especially useful in custom non-standard software solutions.

For a more visual representation of the advantages and disadvantages of individual algorithms, the table above can be represented using the color scale in Figure 3.

Figure 3. Color gradation of algorithms according to relevant tests

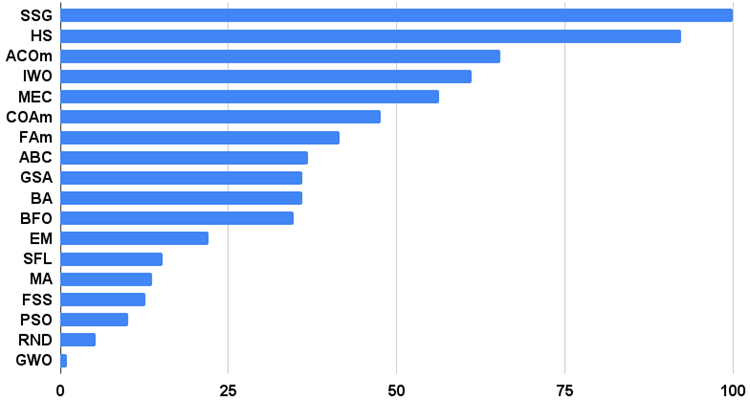

The histogram of the algorithm test results is provided below (on a scale from 0 to 100, the more the better, in the archive there is a script for calculating the rating table using the method described in this article):

Figure 4. Histogram of the final results of testing algorithms

MEC pros and cons:

1. Minimum number of external parameters.

2. High efficiency in solving a variety of problems.

3. Low load on computing resources.

4. Ease of implementation.

Cons:

1. Getting stuck on functions with flat horizontal "pads".

Each article features an archive that contains updated current versions of the algorithm codes for all previous articles. However, it should be noted that I am not responsible for absolute accuracy in the description of canonical algorithms. I often add my own ideas and improvements based on my experience and personal opinions. The conclusions and judgments presented in the articles are based on the results of the experiments.

Translated from Russian by MetaQuotes Ltd.

Original article: https://www.mql5.com/ru/articles/13432

Implementing the Generalized Hurst Exponent and the Variance Ratio test in MQL5

Implementing the Generalized Hurst Exponent and the Variance Ratio test in MQL5

Building and testing Keltner Channel trading systems

Building and testing Keltner Channel trading systems

MQL5 Wizard Techniques you should know (Part 11): Number Walls

MQL5 Wizard Techniques you should know (Part 11): Number Walls

- Free trading apps

- Over 8,000 signals for copying

- Economic news for exploring financial markets

You agree to website policy and terms of use