Developing a Replay System — Market simulation (Part 06): First improvements (I)

Introduction

This series presents our market replay system as it is being built, to show readers how things really begin before they take a more defined form. Because the system is developed at the same time as the articles are published, we can move slowly and carefully, creating, deleting, adding, and changing many things along the way. Before each article is published, some testing is performed to ensure that the system is stable and functional while it is still in the modeling and adjustment stage.

Now let's get down to the point. In the previous article, Developing a Replay System — Market simulation (Part 05): Adding Previews, we created a system to load previous bars. Although this system works, we still have some problems. The most pressing one is that, to obtain a more usable database, the previous bars must be supplied as a single pre-built bar file, which often spans several days and does not allow further data to be inserted.

When we create a file of previous bars containing, for example, data for a week, from Monday to Friday, we cannot use the same database to replay, say, Thursday. That would require creating a new database, which is terribly inconvenient if you think about it.

In addition to this inconvenience, we have other problems, such as a complete lack of checks to ensure that we are using the right database. Because of this, we may accidentally use bar files as if they were files of traded ticks, or vice versa. This seriously disturbs the system and prevents it from functioning properly. We will also make other small changes as part of this article.

Remember that we will go through each of the points to understand what is happening.

Implementing the improvements

The first change we need to implement is to add two new lines to the service file. They are shown below:

#define def_Dependence "\\Indicators\\Market Replay.ex5"
#resource def_Dependence

Why do you need to do this? For the simple fact that the system consists of modules, and we must somehow ensure their presence when we use the replay system. The most important module is precisely this indicator, since it is responsible for certain control over what will be done.

I admit, this is not the best way. Perhaps in the future those who develop the MetaTrader 5 platform and the MQL5 language will make some additions, such as compilation directives, so that we can actually ensure that the file is compiled or should exist. But in the absence of any other solution, we will do it this way.

Okay, now we're setting up a directive indicating that the service depends on something else. We then add the same as a resource to the service. One important point: turning this element into a resource will not necessarily allow us to use it as a resource. This case is special.

These two simple lines ensure that the indicator present in the template for use by replay will actually be compiled when the replay service is compiled. You may forget to do this, and when the template loads the indicator and it is not found, there will be a failure which you will notice only when you realize that the indicator used to control the service is not found on the chart.

To avoid this, we make sure that the indicator is compiled together with the service. However, if you use a custom template and add the control indicator manually, you can remove these two lines from the service code. Their absence will not affect the code or the operation of the service.

NOTE: Even if we force the compilation, it will only actually happen if the indicator executable doesn't exist. If the indicator is modified, compiling just the service will not cause the indicator to be built.

Some might say that this would be solved using MetaEditor's project mode. However, this mode does not allow us to work in the same way as in languages like C/C++, where we use a MAKE file to control compilation. You could even do this via a BATCH file, but this would force us to leave MetaEditor just to compile the code.

Continuing, we now have two new lines:

input string            user00 = "Config.txt";  //Replay configuration file
input ENUM_TIMEFRAMES   user01 = PERIOD_M5;     //Initial timeframe

Here's something really useful for our replay service. The first line holds the name of the file that contains the settings for the replay symbol. For now, these settings state which files are used to generate the previous bars and which files contain the traded ticks.

Now you can use several files at the same time. Also, I know that many traders like to use a specific timeframe when trading the market and at the same time like to use the chart in full screen mode. So the second line allows you to set the timeframe that should be used from the very beginning. This is very simple and also much more convenient, because you can save all these settings for further use. We can add more things here, but for now this is enough.

Now we need to understand a few more points, which can be seen in the code below:

void OnStart()
{
    ulong t1;
    int delay = 3;
    long id = 0;
    u_Interprocess Info;
    bool bTest = false;
    Replay.InitSymbolReplay();
    if (!Replay.SetSymbolReplay(user00))
    {
        Finish();
        return;
    }
    Print("Wait for permission from [Market Replay] indicator to start replay...");
    id = Replay.ViewReplay(user01);
    while ((!GlobalVariableCheck(def_GlobalVariableReplay)) && (!_StopFlag) && (ChartSymbol(id) != "")) Sleep(750);
    if ((_StopFlag) || (ChartSymbol(id) == ""))
    {
        Finish();
        return;
    }
    Print("Permission received. Now you can use the replay service...");
    t1 = GetTickCount64();
    while ((ChartSymbol(id) != "") && (GlobalVariableGet(def_GlobalVariableReplay, Info.Value)) && (!_StopFlag))
    {
        if (!Info.s_Infos.isPlay)
        {
            if (!bTest) bTest = true;
        }else
        {
            if (bTest)
            {
                delay = ((delay = Replay.AdjustPositionReplay()) == 0 ? 3 : delay);
                bTest = false;
                t1 = GetTickCount64();
            }else if ((GetTickCount64() - t1) >= (uint)(delay))
            {
                if ((delay = Replay.Event_OnTime()) < 0) break;
                t1 = GetTickCount64();
            }
        }
    }
    Finish();
}

Initially we were just waiting for everything to be ready to go. But from now on we're going to make sure that everything actually works, and this is done through checks. First we check whether the files that will be used for the replay are indeed suitable, by checking the return value of the function that reads the data contained in them. If a failure occurs, a message explaining what happened is shown in the MetaTrader 5 toolbox, and the replay service simply closes because we do not have enough data to use it.

If the data has loaded correctly, we will see a corresponding message in the toolbox and we can continue. We then open the replay symbol chart and wait for permission to continue with the next steps. However, it may happen that while waiting, the user closes the service or closes the replay symbol chart. If this happens, we must stop the replay service. If everything is working correctly, we enter the replay loop, but we keep checking that the user has not closed the chart or terminated the service, because if that happens the replay must also be closed.

Note that now we do not just assume that everything works; we actually make sure that it works properly. It may seem strange that this type of check was not carried out in earlier versions of the system, but there were other problems, so every time the replay was closed or terminated for some reason, something remained behind the scenes. But now we will make sure that everything works correctly and that no unnecessary elements are left behind.

Finally, we have the following code, still inside the service file:

void Finish(void)
{
    Replay.CloseReplay();
    Print("Replay service completed...");
}

It's not difficult to understand. Here we simply complete the replay and notify the user via the toolbox. This way we can go to the file that implements the C_Replay class, where we will also perform additional checks to make sure everything is working correctly.

There are no major changes in the C_Replay class. We construct it this way so that the replay service is as stable and reliable as possible. Therefore, we will make changes gradually so as not to spoil all the work done so far.

The first thing that catches your eye is the lines shown below:

#define def_STR_FilesBar    "[BARS]"
#define def_STR_FilesTicks  "[TICKS]"
#define def_Header_Bar      "<DATE><TIME><OPEN><HIGH><LOW><CLOSE><TICKVOL><VOL><SPREAD>"
#define def_Header_Ticks    "<DATE><TIME><BID><ASK><LAST><VOLUME><FLAGS>"

Although they may seem insignificant, these 4 lines are very interesting because they make the required checks possible. The first two definitions are the section markers used in the configuration file, which we will look at shortly. The third contains exactly the content of the first line of a file with the bars that will be used as previous bars. The fourth contains exactly the content of the first line of a file with traded ticks.

But wait a minute. These definitions are not exactly the same as the headers found in the files: there are no tabs. Yes, in fact, the tabs present in the original files are missing here. This is because of a small detail in the way the data is read: the files are opened as CSV, so each FileReadString call returns a single field and the delimiter between fields is discarded.

But before going into detail, let's take a look at what the replay service configuration file looks like at the current stage of development. The file example is shown below:

[Bars]

WIN$N_M1_202108020900_202108021754

WIN$N_M1_202108030900_202108031754

WIN$N_M1_202108040900_202108041754

[Ticks]

WINQ21_202108050900_202108051759

WINQ21_202108060900_202108061759

The line defined as [Bars], which can be typed like this because the system is not case sensitive, indicates that all subsequent lines will be used as previous bars. So we have three different files, loaded in the specified order. Be careful, because if you place them in the wrong order, you will not get the required replay. All bars present in these files will be added one by one to the symbol that will be used for the replay. Regardless of the number of files or bars, all files will be added as previous bars until something instructs the system to change this.

In the case of the [Ticks] line, this will tell the replay service that all subsequent lines will or should contain traded ticks which will be used for the replay. As with bars, the same warning applies here: be careful to place the files in the correct order. Otherwise, the replay will be different from what is expected; all files are always read from the beginning to the end. This way we can combine bars with ticks.

However, there is a slight limitation at the moment. Perhaps this is not really a limitation, since it makes no sense to add traded ticks, replay them, and then watch as more bars appear, which will be used in another replay call. If you put ticks before bars, this will not make any difference for the replay system. The bars will always come first, and only then will there be traded ticks.

Important: The example above does not use it, but files can also be placed in subdirectories. If we want to organize things better, we can use a directory tree to separate the files and arrange them in a more appropriate way. This can be done without extra modifications to the class code. All we need to do is carefully follow a certain logic in the structures present in the class file. To make things clearer, let's look at an example of how to use a directory tree to separate things by symbol, month, or year.

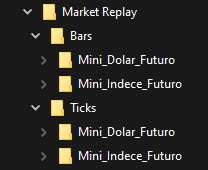

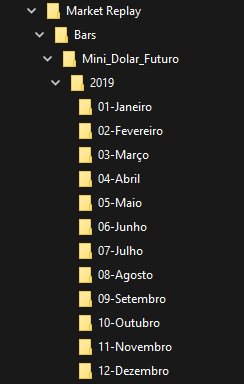

To understand it, let's look at the images below:

Please note that the MARKET REPLAY directory is specified as ROOT: it is the base, and everything we use must be placed inside it. We can organize things by dividing them into symbols, years and months, where each month contains the files corresponding to what happened in that particular month. The way the system is being created allows us to use a structure like the one shown above without making any changes to the code. Specific data is accessed simply by giving its path in the configuration file.

This file can have any name, only its content is structured. So the name is free for you to choose.

Very good. So if you need to call a file, let's say a tick file for the mini dollar, for the year 2020, June the 16th, you use the following line in the configuration file:

[Ticks]
Mini_Dolar_Futuro\2020\06-Junho\WDO_16062020

This will tell the system to read exactly this file. Of course, this is just one example of how work can be organized.
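As a rough illustration, a configuration file that uses the directory tree for both sections might look like the fragment below. The tick entry is the one from the example above; the bar entry, WDO_M1_15062020, is a hypothetical file name used only to show the pattern.

```text
[Bars]
Mini_Dolar_Futuro\2020\06-Junho\WDO_M1_15062020
[Ticks]
Mini_Dolar_Futuro\2020\06-Junho\WDO_16062020
```

Judging by the loading functions shown later in this article, the service prepends Market Replay\Bars\ or Market Replay\Ticks\ to each entry and appends .csv, so the tick entry would be read from Market Replay\Ticks\Mini_Dolar_Futuro\2020\06-Junho\WDO_16062020.csv inside the terminal's file sandbox.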

But to understand why this happens and becomes possible, we must look at the function that is responsible for reading this configuration file. Let's see it.

bool SetSymbolReplay(const string szFileConfig)
{
    int file;
    string szInfo;
    bool isBars = true;
    if ((file = FileOpen("Market Replay\\" + szFileConfig, FILE_CSV | FILE_READ | FILE_ANSI)) == INVALID_HANDLE)
    {
        MessageBox("Failed to read the configuration file.", "Market Replay", MB_OK);
        return false;
    }
    Print("Loading data for replay.\nPlease wait....");
    while ((!FileIsEnding(file)) && (!_StopFlag))
    {
        szInfo = FileReadString(file);
        StringToUpper(szInfo);
        if (szInfo == def_STR_FilesBar) isBars = true; else
        if (szInfo == def_STR_FilesTicks) isBars = false; else
        if (szInfo != "") if (!(isBars ? LoadPrevBars(szInfo) : LoadTicksReplay(szInfo)))
        {
            // The caption and flags belong to MessageBox, not to StringFormat
            if (!_StopFlag)
                MessageBox(StringFormat("File %s of %s\ncould not be loaded.", szInfo, (isBars ? def_STR_FilesBar : def_STR_FilesTicks)), "Market Replay", MB_OK);
            FileClose(file);
            return false;
        }
    }
    FileClose(file);
    return (!_StopFlag);
}

We start by trying to read the configuration file, which must be located in a specific location. This location cannot be changed, at least not after the system has been compiled. If the file cannot be opened, an error message will appear and the function will return. If the file can be opened, then we start reading it. However, please note that we continually check whether the MetaTrader 5 user has asked to stop the replay service.

If this happens, i.e. if the user stops the service, the function returns as if it had failed. We read the file line by line and convert every character to upper case, which makes the analysis easier. We then parse each line and call the appropriate function to read data from the file named on it. If reading any of these files fails for any reason, the user will see an error message and the function will fail. When the entire configuration file has been read, the function simply exits. If the user did not ask to stop it, the function returns true, indicating that everything is fine.
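The line-by-line logic described above can be sketched in isolation. The script below is only an illustration: ClassifyLine and the eLineKind enumeration are hypothetical names that are not part of the C_Replay class, and no files are loaded. It only shows how a single flag, starting as "bars", decides what each non-empty line means.

```mql5
// Hypothetical helper, not part of C_Replay: classifies one configuration line.
enum eLineKind { LINE_SECTION_BARS, LINE_SECTION_TICKS, LINE_FILE_BARS, LINE_FILE_TICKS, LINE_EMPTY };

eLineKind ClassifyLine(string szLine, bool &isBars)
{
    StringToUpper(szLine);                         // Case-insensitive comparison, as in SetSymbolReplay
    if (szLine == "[BARS]")  { isBars = true;  return LINE_SECTION_BARS;  }
    if (szLine == "[TICKS]") { isBars = false; return LINE_SECTION_TICKS; }
    if (szLine == "") return LINE_EMPTY;           // Empty lines are ignored
    return (isBars ? LINE_FILE_BARS : LINE_FILE_TICKS);
}

void OnStart()
{
    // Lines taken from the configuration example in this article
    string lines[] = { "[Bars]", "WIN$N_M1_202108020900_202108021754", "", "[Ticks]", "WINQ21_202108050900_202108051759" };
    bool isBars = true;                            // Same starting value as in SetSymbolReplay
    for (int i = 0; i < ArraySize(lines); i++)
        Print("'", lines[i], "' -> ", EnumToString(ClassifyLine(lines[i], isBars)));
}
```

Because the flag starts as true, any file name that appears before the first section marker is treated as a bar file, which matches the behavior of the original function.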

Now that we have seen how the configuration file is read, let's look at the function that is responsible for reading the data and then we will understand why there is a warning that the requested file is not suitable. That is, if you try to use a file containing bars instead of a file that should contain traded ticks, or vice versa, the system will report an error. Let's see how this happens.

Let's start with the simplest function, which is responsible for reading and loading bars. The code responsible for this is shown below:

bool LoadPrevBars(const string szFileNameCSV)
{
    int file, iAdjust = 0;
    datetime dt = 0;
    MqlRates Rate[1];
    string szInfo = "";
    if ((file = FileOpen("Market Replay\\Bars\\" + szFileNameCSV + ".csv", FILE_CSV | FILE_READ | FILE_ANSI)) != INVALID_HANDLE)
    {
        for (int c0 = 0; c0 < 9; c0++) szInfo += FileReadString(file);
        if (szInfo != def_Header_Bar)
        {
            Print("File ", szFileNameCSV, ".csv is not a file with previous bars.");
            FileClose(file); // Release the handle before leaving
            return false;
        }
        Print("Loading bars for the replay. Please wait....");
        while ((!FileIsEnding(file)) && (!_StopFlag))
        {
            Rate[0].time = StringToTime(FileReadString(file) + " " + FileReadString(file));
            Rate[0].open = StringToDouble(FileReadString(file));
            Rate[0].high = StringToDouble(FileReadString(file));
            Rate[0].low = StringToDouble(FileReadString(file));
            Rate[0].close = StringToDouble(FileReadString(file));
            Rate[0].tick_volume = StringToInteger(FileReadString(file));
            Rate[0].real_volume = StringToInteger(FileReadString(file));
            Rate[0].spread = (int) StringToInteger(FileReadString(file));
            iAdjust = ((dt != 0) && (iAdjust == 0) ? (int)(Rate[0].time - dt) : iAdjust);
            dt = (dt == 0 ? Rate[0].time : dt);
            CustomRatesUpdate(def_SymbolReplay, Rate, 1);
        }
        m_dtPrevLoading = Rate[0].time + iAdjust;
        FileClose(file);
    }else
    {
        Print("Could not access the bars data file.");
        m_dtPrevLoading = 0;
        return false;
    }
    return (!_StopFlag);
}

At first glance, this code does not differ much from the code provided in the previous article "Developing a Replay system (Part 05)". But there are differences, and they are quite significant in general and structural terms.

The first difference is that we now intercept the header data of the file being read. This header is compared with the value that the bar reading function expects. If the header differs from what is expected, an error is reported and the function returns. But if this is the expected header, we enter the reading loop.

Previously, the exit from this loop was controlled only by the end of the file being read. If the user stopped the service for any reason, the loop kept running. We have now fixed this so that if the user exits the replay system while one of the files is being read, the loop ends and the function returns an error indicating that the system has failed. But this is just a formality to bring everything to a smoother and less abrupt conclusion.
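To see the header check on its own, here is a minimal sketch. ReadHeader and the file name Example.csv are hypothetical and exist only for this illustration. It assumes the file is opened with FILE_CSV and the default tab delimiter, in which case each FileReadString call returns one field and the concatenated header contains no tabs.

```mql5
// Hypothetical helper: joins the first nFields fields of an already opened CSV file.
string ReadHeader(const int file, const int nFields)
{
    string szHeader = "";
    for (int c0 = 0; (c0 < nFields) && (!FileIsEnding(file)); c0++)
        szHeader += FileReadString(file);          // The delimiter between fields is not returned
    return szHeader;
}

void OnStart()
{
    // Hypothetical file name; the path is relative to the terminal's MQL5\Files folder
    int file = FileOpen("Market Replay\\Bars\\Example.csv", FILE_CSV | FILE_READ | FILE_ANSI);
    if (file == INVALID_HANDLE)
    {
        Print("Could not open the file, error ", GetLastError());
        return;
    }
    // Nine fields, compared with the header expected for a bar file
    bool isBarFile = (ReadHeader(file, 9) == "<DATE><TIME><OPEN><HIGH><LOW><CLOSE><TICKVOL><VOL><SPREAD>");
    FileClose(file);
    Print("Bar file header found: ", isBarFile);
}
```

The same idea applies to tick files, with seven fields and the tick header instead.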

The rest of the function continues to run the same way, since no changes have been made to the way the data is read.

Now let's look at the function for reading traded ticks, which has been modified and has become even more interesting than the bar reading function. Its code is shown below:

#define macroRemoveSec(A) (A - (A % 60))
bool LoadTicksReplay(const string szFileNameCSV)
{
    int file, old;
    string szInfo = "";
    MqlTick tick;
    if ((file = FileOpen("Market Replay\\Ticks\\" + szFileNameCSV + ".csv", FILE_CSV | FILE_READ | FILE_ANSI)) != INVALID_HANDLE)
    {
        ArrayResize(m_Ticks.Info, def_MaxSizeArray, def_MaxSizeArray);
        old = m_Ticks.nTicks;
        for (int c0 = 0; c0 < 7; c0++) szInfo += FileReadString(file);
        if (szInfo != def_Header_Ticks)
        {
            Print("File ", szFileNameCSV, ".csv is not a file with traded ticks.");
            FileClose(file); // Release the handle before leaving
            return false;
        }
        Print("Loading replay ticks. Please wait...");
        while ((!FileIsEnding(file)) && (m_Ticks.nTicks < (INT_MAX - 2)) && (!_StopFlag))
        {
            ArrayResize(m_Ticks.Info, (m_Ticks.nTicks + 1), def_MaxSizeArray);
            szInfo = FileReadString(file) + " " + FileReadString(file);
            tick.time = macroRemoveSec(StringToTime(StringSubstr(szInfo, 0, 19)));
            tick.time_msc = (int)StringToInteger(StringSubstr(szInfo, 20, 3));
            tick.bid = StringToDouble(FileReadString(file));
            tick.ask = StringToDouble(FileReadString(file));
            tick.last = StringToDouble(FileReadString(file));
            tick.volume_real = StringToDouble(FileReadString(file));
            tick.flags = (uchar)StringToInteger(FileReadString(file));
            if ((m_Ticks.Info[old].last == tick.last) && (m_Ticks.Info[old].time == tick.time) && (m_Ticks.Info[old].time_msc == tick.time_msc))
                m_Ticks.Info[old].volume_real += tick.volume_real;
            else
            {
                m_Ticks.Info[m_Ticks.nTicks] = tick;
                m_Ticks.nTicks += (tick.volume_real > 0.0 ? 1 : 0);
                old = (m_Ticks.nTicks > 0 ? m_Ticks.nTicks - 1 : old);
            }
        }
        if ((!FileIsEnding(file)) && (!_StopFlag))
        {
            Print("Too much data in the tick file.\nCannot continue....");
            FileClose(file); // Release the handle before leaving
            return false;
        }
        FileClose(file); // The handle is no longer needed once reading has finished
    }else
    {
        Print("Tick file ", szFileNameCSV,".csv not found...");
        return false;
    }
    return (!_StopFlag);
};
#undef macroRemoveSec

Up to the previous article, this function for reading traded ticks had a limitation, which was expressed in the following definition:

#define def_MaxSizeArray 134217727 // 128 Mbytes of positions

This line still exists, but we have removed the restriction, at least partially, because it can be useful to have a replay system that can handle more than one traded tick database. This way we can add 2 or more days to the system and run some larger replay tests. Additionally, there are very specific cases where we may have a file with more than 128 Mbytes of positions to work with. Such cases are rare, but can happen. From now on, we can use a smaller value for the definition above to optimize memory usage.

But wait a second. Did I say SMALLER? YES. If you look at the new definition, you'll see the following code:

#define def_MaxSizeArray 16777216 // 16 Mbytes of positions

You might be thinking: You're crazy, this will damage the system... But it won't. If you look at the function that reads traded ticks, you can see two rather interesting lines that were not there before. They are responsible for allowing us to read and store a maximum of 2 to the power of 31 data positions. This is ensured by a check at the beginning of the loop.

In order not to lose the first positions, we subtract 2 so that the test does not fail for some reason. We could add an additional outer loop to increase the memory capacity, but I personally see no reason to do so. If 2 gigabytes of positions is not enough, then I don’t know what should be enough. Let's now understand how reducing the definition value provides better optimization using two lines. Let's take a closer look at the code fragment responsible for this.

// ... Previous code ....
    if ((file = FileOpen("Market Replay\\Ticks\\" + szFileNameCSV + ".csv", FILE_CSV | FILE_READ | FILE_ANSI)) != INVALID_HANDLE)
    {
        ArrayResize(m_Ticks.Info, def_MaxSizeArray, def_MaxSizeArray);
        old = m_Ticks.nTicks;
        for (int c0 = 0; c0 < 7; c0++) szInfo += FileReadString(file);
        if (szInfo != def_Header_Ticks)
        {
            Print("File ", szFileNameCSV, ".csv is not a file with traded ticks.");
            FileClose(file); // Release the handle before leaving
            return false;
        }
        Print("Loading replay ticks. Please wait...");
        while ((!FileIsEnding(file)) && (m_Ticks.nTicks < (INT_MAX - 2)) && (!_StopFlag))
        {
            ArrayResize(m_Ticks.Info, (m_Ticks.nTicks + 1), def_MaxSizeArray);
            szInfo = FileReadString(file) + " " + FileReadString(file);
// ... The rest of the code...

The first time we allocate memory, we allocate the entire specified size plus a reserve value. This reserve value is our safeguard. Then, when we enter the reading loop, we will have a sequence of reallocations, but only when actually necessary.

Now notice that in this second allocation we use the current value of the counter of ticks already read, plus 1. When testing the system without the +1, I noticed that the call was executed with a value of 0, which caused a runtime error. You might think this is crazy, since the memory had previously been allocated with a larger value. But here's the thing: the documentation of the ArrayResize function tells us that the call redefines the size of the array.

When this second call is made with 0, the function RESETS the array size to zero. Zero is the value of the counter the first time the call is made, since nothing has incremented it yet, so the write that follows lands outside the array. I won't go further into the reasons here, but you should be careful when working with dynamic allocation in MQL5, because it may happen that your code looks correct but the system interprets it differently from what you intended.
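The effect can be reproduced with a few lines. The script below is only a sketch under simple assumptions: the array, the counter and the small reserve of 16 are local stand-ins for m_Ticks.Info, m_Ticks.nTicks and def_MaxSizeArray, chosen just for this illustration.

```mql5
void OnStart()
{
    MqlTick ticks[];
    const int reserve = 16;     // Small illustrative reserve, not the value used in the article
    int nTicks = 0;             // Stand-in for m_Ticks.nTicks

    // Resizing to the counter itself leaves the array with 0 elements,
    // so writing to ticks[0] afterwards would be out of range.
    ArrayResize(ticks, nTicks, reserve);
    Print("Size after resizing to nTicks: ", ArraySize(ticks));

    // Resizing to counter + 1 guarantees that index nTicks is valid.
    for (int i = 0; i < 5; i++)
    {
        ArrayResize(ticks, nTicks + 1, reserve);
        ticks[nTicks].last = 100.0 + i;
        nTicks++;
    }
    Print("Size after storing ", nTicks, " ticks: ", ArraySize(ticks));

    ArrayFree(ticks);           // Return the memory explicitly
}
```

The third parameter only asks for spare capacity to be kept, so growing by one element at a time inside the reserve should not force a physical reallocation on every call.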

Here's another little detail to pay attention to: why am I using INT_MAX and not UINT_MAX in the test? In fact, the ideal option would be to use UINT_MAX, which would give us 4 gigabytes of allocated space, but the ArrayResize function works with the INT system, that is, a signed integer.

And even if we want to allocate 4 gigabytes, which would be possible with a 32-bit unsigned integer, we will always lose 1 bit in the data length due to the sign. So we will be using 31 bits, which guarantees us 2 gigabytes of possible positions to allocate using the ArrayResize function. We could try to get around this limitation by using a sharing scheme which would guarantee the allocation of 4 gigabytes or even more, but I see no reason to do so. Two gigabytes of positions is quite enough.
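For reference, the limits discussed here can be printed directly. This is just a small sketch; keep in mind that the "gigabytes" in the text count array positions, not bytes, so the actual memory use also depends on the size of each MqlTick element.

```mql5
void OnStart()
{
    Print("INT_MAX  = ", INT_MAX);                    // 2^31 - 1, the largest value an int size can hold
    Print("UINT_MAX = ", UINT_MAX);                   // 2^32 - 1, not usable as a signed array size
    Print("Loop limit (INT_MAX - 2) = ", INT_MAX - 2);
    Print("Reserve 16777216 equals 2^24: ", ((1 << 24) == 16777216));
    Print("Bytes per MqlTick element = ", sizeof(MqlTick));
}
```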

After the explanation, let's get back to the code. We still have to finish looking at the function that reads traded ticks. To check whether the data in the file really is traded ticks, we read and save the values found in the file header. After this, we can check whether the header matches the one that the system expects to find in a traded tick file. If it does not, the system will generate an error and then shut down.

Just like in the case of reading bars, we check whether the user has requested to shut down the system. This provides a smoother, cleaner exit, because if the user closes or terminates the replay service, we don't want error messages to be displayed and cause confusion.

In addition to all these tests we performed here, we have a few more to execute. In fact, there is no need for these manipulations, but I don't want to leave everything in the hands of the platform. I want to make sure that some things are actually implemented, for this reason there will be a new line in our code:

void CloseReplay(void)
{
    ArrayFree(m_Ticks.Info);
    ChartClose(m_IdReplay);
    SymbolSelect(def_SymbolReplay, false);
    CustomSymbolDelete(def_SymbolReplay);
    GlobalVariableDel(def_GlobalVariableReplay);
}

You might think that this call is not important at all. However, one of the best programming practices is to clean up everything we've created, or to explicitly reclaim all the memory we've allocated. And that's exactly what we've done for now. We guarantee that the memory allocated while loading the ticks will be returned to the operating system. This is usually done when we close the platform or end the program on the chart. But it's good to make sure this has been done, even if the platform already does it for us. We must be sure of this.

If a failure occurs and the resource is not returned to the operating system, it may happen that when we try to use the resource again, it will be unavailable. This is not due to an error in the platform or operating system, but to forgetfulness when programming.

Conclusion

In the video below, you can see how the system works at the current stage of development. Please note that previous bars end on August 4th. The first day of the replay begins with the first tick on August 5th. However, you can advance the replay to August 6th and then return to the beginning of August 5th. This was not possible in the previous version of the replay system, but now we have this opportunity.

If you look closely, you can see an error in the system. We will fix this error in the next article, in which we will further improve our market replay, making it more stable and intuitive to use.

The attached file includes the source code and files used in the video. Use them to better understand and practice the creation of the configuration file. It is important to start studying this stage now, since the configuration file will change positively over time, increasing its capabilities. Therefore, we need to understand this right now, from the very beginning.

Translated from Portuguese by MetaQuotes Ltd.

Original article: https://www.mql5.com/pt/articles/10768
