NeuroExt

- Expert

- Dmytryi Voitukhov

- Version: 1.75

- Updated: 26 April 2022

Current version: https://t.me/mql5_neuroExt

Signal: https://www.mql5.com/ru/signals/1511461

You can use any instrument. The bases are created automatically when Learn mode starts. To restart training from scratch, delete the base files.

Initial deposit: from 200 conventional units.

DO NOT ATTEMPT TO TEST WITHOUT TRAINING THE NEURAL NETWORK FIRST!

Generating a training base is extremely simple.

A ready-made training base for USDCHF is included.

Options:

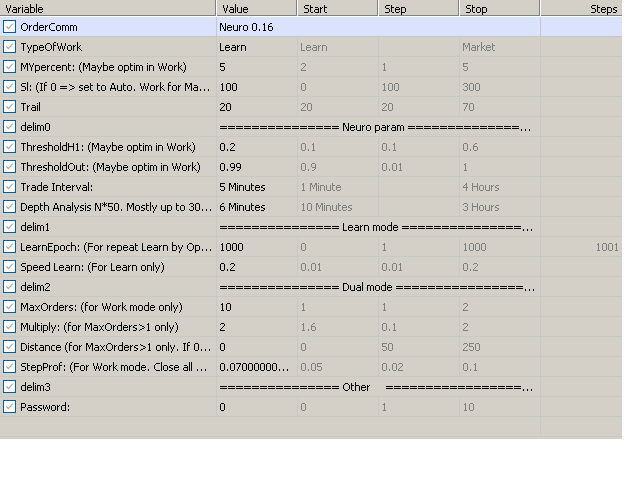

- OrderComm: order comment. The EA appends the operating mode and the analysis depth to it.

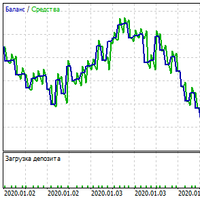

- TypeOfWork: Study \ Work \ Market. "Study": in this mode, aim for a balance graph with at least a small gain and no dips. This mode can also be used for trading, but only one order will be in the market at a time. "Work": the database is reloaded periodically, so when training runs in the tester at the same time, the EA keeps picking up the improved training. "Market": only for passing Market validation; switch it to another mode for actual use.

- MYpercent: ...

- SL: if set to 0 in Learn mode, a value from 40 to 110 is assigned automatically, which produces too many trades, slow learning and distorted images. When MaxOrders > 1 in Work mode, SL is forced to 5000.

- StartTrail: not used in Learn mode. Optimal = 0 (automatic).

- Trail: ...

= Neuro param

- ThresholdH1: threshold for filtering out noise on the first hidden layer.

- ThresholdOut: threshold for rejecting unsuitable images. Forced to 0 during training.

- Trade interval: trade every ... H1 is recommended for training; in Work mode it is forced to 1 minute.

- Depth Analys: the bar timeframe used for analysis, i.e. for taking a snapshot of the price picture. The last 50 bars of the selected TF are loaded. Example: with TF M6 the snapshot covers 50 * 6 / 60 = 5 hours, and a pattern of this length is what gets memorized. Too large a TF produces snapshots that never repeat; too small a TF produces many near-identical images.

The database is built for this parameter. If you choose a different value, a new base is created and full training is required. Bases for each TF are not deleted: once created, you can keep training and using them. To retrain the network, delete these files. Their location is printed in the log when the EA starts, and this is also where the supplied bases must be placed. Example: data_w1_6_USDCHF.csv and data_w2_6_USDCHF.csv contain "_6_" in the name and are used when "Depth Analys" = M6.
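A minimal Python sketch of the two rules above: the snapshot length in hours (50 bars times the TF in minutes, divided by 60) and picking out the base files that belong to a given Depth Analys value. The file-name pattern data_w<N>_<TF>_<symbol>.csv is inferred from the two example names only; reading the middle number as the TF in minutes is an assumption, and `snapshot_hours` and `bases_for` are hypothetical helpers, not part of the EA.

```python
import re
from typing import Iterable, List

BARS_IN_SNAPSHOT = 50  # the description says the last 50 bars of the TF are loaded

# Assumed pattern, inferred from data_w1_6_USDCHF.csv / data_w2_6_USDCHF.csv:
# data_w<N>_<TF in minutes>_<symbol>.csv (the meaning of N is unknown)
BASE_NAME = re.compile(r"^data_w(\d+)_(\d+)_(.+)\.csv$")


def snapshot_hours(tf_minutes: int, bars: int = BARS_IN_SNAPSHOT) -> float:
    """Length of the price snapshot in hours: bars * TF(min) / 60."""
    return bars * tf_minutes / 60


def bases_for(files: Iterable[str], tf_minutes: int, symbol: str) -> List[str]:
    """Hypothetical helper: base files matching a Depth Analys TF and a symbol."""
    selected = []
    for name in files:
        m = BASE_NAME.match(name)
        # compare the TF as a number so that "_16_" is not mistaken for "_6_"
        if m and int(m.group(2)) == tf_minutes and m.group(3) == symbol:
            selected.append(name)
    return sorted(selected)


print(snapshot_hours(6))  # 5.0
print(bases_for(["data_w2_6_USDCHF.csv", "data_w1_6_USDCHF.csv",
                 "data_w1_16_USDCHF.csv", "data_w1_6_EURUSD.csv"], 6, "USDCHF"))
# ['data_w1_6_USDCHF.csv', 'data_w2_6_USDCHF.csv']
```

The files returned for a TF are the ones to delete if you want to retrain the network for that Depth Analys value.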

= Learn mode

- LearnEpoch: number of optimization runs in Learn mode.

- speed: during initial training, run several training cycles (5-10) at a speed of 0.5-0.9, then at 0.2-0.5, and so on. When log entries of the form w1 [XXX] [YYY] N.NNNNNN = ZZ.ZZZZZZZZ appear and learning of a section stops improving, set the speed to 0.1 or less.
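The speed guidance above amounts to a step-down schedule. The sketch below is hypothetical: `suggest_speed`, the per-cycle error list (lower is better, tracked by you, not read from the EA's log) and the plateau test are assumptions, and 0.7 and 0.35 are simply values picked from inside the recommended 0.5-0.9 and 0.2-0.5 ranges.

```python
from typing import Sequence


def suggest_speed(cycle: int, errors: Sequence[float],
                  initial_cycles: int = 10, min_gain: float = 1e-6) -> float:
    """Hypothetical step-down schedule for the `speed` input.

    cycle:  1-based number of the training cycle.
    errors: a per-cycle error you track yourself (lower is better), oldest first.
    """
    # Plateau: the last cycle improved the error by less than min_gain.
    if len(errors) >= 2 and errors[-2] - errors[-1] < min_gain:
        return 0.1          # "set the speed to 0.1 or less"
    if cycle <= initial_cycles:
        return 0.7          # initial training: somewhere in 0.5-0.9
    return 0.35             # afterwards: somewhere in 0.2-0.5


for cycle, errs in [(1, []), (12, [0.50, 0.40]), (15, [0.40, 0.40])]:
    print(cycle, suggest_speed(cycle, errs))
```

In practice `speed` is still changed by hand between LearnEpoch runs; the function only encodes the rule of thumb.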

= Dual mode

- MaxOrders: if the value is > 1, the insurance strategy is launched: a neural network does not guarantee that a similar snapshot will be followed by the trained outcome, it only indicates the most probable one. In Learn mode it is forced to 1, because training uses the data of only one order open at a time.

- Multiply: volume increment factor. Optimal value: 2.

- Distance: distance between orders of the same direction. Increases with each new order of the same direction.

- StepProf: another auxiliary system, but no less important: all orders are closed when the balance gain reaches ... The target trigger value is shown in the chart comment as "Next".
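A rough sketch of how the Dual mode parameters could interact, under loud assumptions: lots grow geometrically by Multiply (base lot times Multiply^n), the gap between same-direction orders grows by a hypothetical `growth` factor (the description only says it increases), and StepProf is read as closing everything once equity reaches the starting balance plus a fixed amount in account currency. None of these formulas are confirmed by the author; `grid_plan` and `step_prof_check` are illustrative names.

```python
from typing import List, Tuple


def grid_plan(base_lot: float, multiply: float, distance: float,
              growth: float, max_orders: int) -> Tuple[List[float], List[float]]:
    """Lots and gaps for up to max_orders same-direction orders.

    Assumptions (not confirmed by the EA author):
      lot of order n (0-based)  = base_lot * multiply ** n
      gap before order n (n>=1) = distance * growth ** (n - 1)
    """
    lots: List[float] = []
    gaps: List[float] = []
    for n in range(max_orders):
        lots.append(round(base_lot * multiply ** n, 2))  # round to 2 decimals (0.01 lot step)
        gaps.append(0.0 if n == 0 else distance * growth ** (n - 1))
    return lots, gaps


def step_prof_check(start_balance: float, equity: float,
                    step_prof: float) -> Tuple[bool, float]:
    """Hypothetical StepProf rule: close all orders once equity reaches the target.

    Returns (close_all, target); target plays the role of "Next" on the chart.
    """
    target = start_balance + step_prof
    return equity >= target, target


lots, gaps = grid_plan(base_lot=0.01, multiply=2, distance=300, growth=1.5, max_orders=4)
print(lots)  # [0.01, 0.02, 0.04, 0.08]
print(gaps)  # [0.0, 300.0, 450.0, 675.0]
print(step_prof_check(200.0, 205.0, 5.0))  # (True, 205.0)
```

With MaxOrders = 1 the plan collapses to a single order, which matches how the EA behaves in Learn mode.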

= Other

- Pass: key to remove restrictions.

The price is negotiable.

It's already 2024, and I don't know why no one is using this or leaving a review. Everything looks good in testing and on demo. In a few weeks I will put it to work on a live account. I would like to thank the author for this free EA; it works really well, especially for a small-cap player like me. P.S. Proper training of the bot gives a good yield. I hope we get an update from the author soon, but even as is, this is really promising. Thank you!