- Description of the architecture

- Building a GPT model in MQL5

- Organizing parallel computing in the GPT model

- Comparative testing of implementations

Description of the architecture and implementation principles

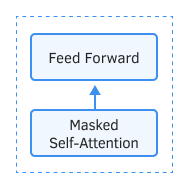

Let's consider the differences between GPT models and the previously considered Transformer. First, GPT models drop the encoder and use only the decoder. As a result, the inner Encoder-Decoder Attention layer disappears from the block. The figure below shows the transformer block in GPT.

GPT Block

As in the classic Transformer, these blocks in GPT models are stacked on top of each other. Each block has its own weight matrices for the attention mechanism and the fully connected Feed Forward layers. The number of such blocks determines the size of the model, and the stack can be quite large: there are 12 blocks in GPT-1 and in the smallest GPT-2 model (GPT-2 Small), 48 in GPT-2 Extra Large, and 96 in GPT-3.

Like traditional language models, GPT finds relationships only with the preceding elements of the sequence and cannot look into the future. However, unlike the Transformer, it doesn't use masking of elements but rather changes the computation process: in GPT, the attention coefficients in the Score matrix for subsequent elements are zeroed.
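The zeroing of coefficients for subsequent elements can be illustrated with a minimal Python sketch. It assumes that the remaining coefficients of each row are normalized with Softmax over the visible elements only. The function name `causal_attention_weights` and the sample scores are hypothetical and serve only as an illustration.

```python
import math

def causal_attention_weights(scores):
    """Row-wise Softmax over raw scores with subsequent positions zeroed.

    scores[i][j] is the raw Query-Key score of element i against element j.
    Element i may only attend to elements j <= i.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]                 # current and previous elements only
        peak = max(visible)                    # subtract the maximum for numerical stability
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        # Normalized coefficients for visible elements, exact zeros for subsequent ones.
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights

# Hypothetical raw scores for a sequence of three elements.
scores = [
    [0.5, 1.2, -0.3],
    [0.1, 0.4, 2.0],
    [1.0, 1.0, 1.0],
]
for row in causal_attention_weights(scores):
    print([round(w, 3) for w in row])
```

Each row of the result sums to one, and everything above the main diagonal is zero, so an element never receives information from the elements that follow it.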

At the same time, GPT is an autoregressive model. It generates one token of the sequence at a time, and each generated token is appended to the input sequence and fed into the model on the next iteration.
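The autoregressive loop itself is simple and can be sketched in a few lines of Python. This is only an illustration: `toy_next_token` is a hypothetical stand-in for a trained model, and the `END_TOKEN` identifier and the `max_new_tokens` limit are assumptions introduced for the example.

```python
END_TOKEN = 0  # hypothetical identifier of the end-of-sequence token

def toy_next_token(tokens):
    """Hypothetical stand-in for a trained model: counts down to END_TOKEN."""
    return max(tokens[-1] - 1, END_TOKEN)

def generate(prompt, next_token, max_new_tokens=16):
    """Generate tokens one at a time, feeding each new token back as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(tokens)   # the model sees only the tokens produced so far
        tokens.append(token)         # the new token becomes part of the next input
        if token == END_TOKEN:       # stop once the end token is produced
            break
    return tokens

print(generate([5, 3], toy_next_token))
```

The `max_new_tokens` limit is a safety measure in case the model never produces the end token.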

As in the classic Transformer, three vectors are generated inside the Self-Attention mechanism for each token: Query, Key, and Value. In an autoregressive model, the input sequence changes by only one token on each new iteration, so there is no need to recalculate the vectors for every token from scratch. That's why in GPT each layer calculates the vectors only for the new elements of the sequence and keeps the stored vectors for all the elements processed earlier. Each transformer block saves its own vectors for later use.
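The idea of storing vectors between iterations can be sketched as follows. This is a minimal single-head illustration in Python, not the implementation built later: the class name `CachedSelfAttention` is hypothetical, the weight matrices are passed in ready-made, and the sketch assumes that only the Key and Value vectors need to be kept, since the Query is used only for the new element.

```python
import math

class CachedSelfAttention:
    """Single-head Self-Attention that stores Key and Value vectors between steps."""

    def __init__(self, w_query, w_key, w_value):
        self.w_query, self.w_key, self.w_value = w_query, w_key, w_value
        self.keys = []     # one stored Key vector per processed element
        self.values = []   # one stored Value vector per processed element

    @staticmethod
    def _project(weights, x):
        """Multiply a weight matrix (list of rows) by the vector x."""
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

    def step(self, x):
        """Process one new element, reusing the vectors of all earlier elements."""
        query = self._project(self.w_query, x)
        # Only the new element is projected; earlier vectors come from the cache.
        self.keys.append(self._project(self.w_key, x))
        self.values.append(self._project(self.w_value, x))
        scale = math.sqrt(len(query))
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in self.keys]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        dim = len(self.values[0])
        # Weighted sum of the stored Value vectors.
        return [sum(e / total * v[d] for e, v in zip(exps, self.values)) for d in range(dim)]

# Usage with hypothetical identity weights and two input elements.
identity = [[1.0, 0.0], [0.0, 1.0]]
layer = CachedSelfAttention(identity, identity, identity)
print(layer.step([1.0, 2.0]))
print(layer.step([0.0, 1.0]))
```

Because the cache contains only the current and previous elements, the new element cannot attend to anything that follows it, and each call performs three projections regardless of the sequence length.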

This approach allows the model to generate text word by word until it reaches the end token.

And of course, GPT models use the Multi-Head Self-Attention mechanism.