Fully connected neural layer

In the previous sections, we established the fundamental logic of organizing and operating a neural network. Now we are moving on to its specific content. We begin the construction of actual working elements — neural layers and their constituent neurons. We will start with constructing a fully connected neural layer.

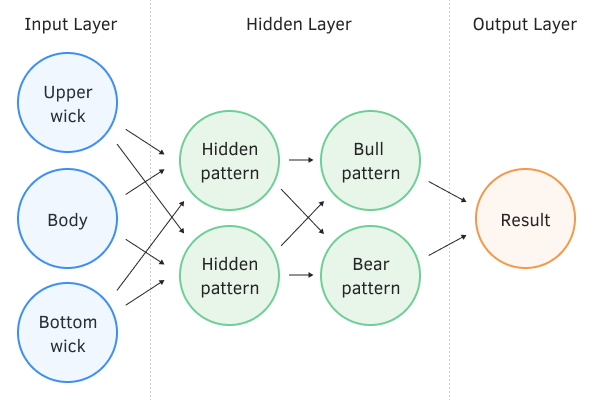

Fully connected neural layers formed the basis of the perceptron, created by Frank Rosenblatt in 1957. In this architecture, each neuron of a layer is connected to every neuron of the previous layer, and each connection has its own weight. The figure shows a perceptron with two hidden fully connected layers. The output neuron can also be represented as a fully connected layer with a single neuron. A neural network will almost always use at least one fully connected layer at its output.
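To make the connectivity concrete, here is a minimal sketch of the weighted sums computed by one fully connected layer. The function name `dense_forward`, the layer sizes, and the numeric values are illustrative assumptions, not taken from the text. Each row of `weights` holds the weights of one neuron, one per element of the previous layer's output.

```python
def dense_forward(inputs, weights):
    """Return the weighted sum for every neuron of a fully connected layer.

    Bias and activation are deliberately left out of this sketch.
    """
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


# Hypothetical example: 3 inputs feeding a layer of 2 neurons.
inputs = [1.0, 2.0, 3.0]
weights = [
    [0.5, -1.0, 0.0],  # weights of neuron 0, one per input
    [1.0, 1.0, 1.0],   # weights of neuron 1, one per input
]

outputs = dense_forward(inputs, weights)

# Every neuron is linked to every input, so the layer has
# (number of neurons) x (number of inputs) weights.
n_links = sum(len(row) for row in weights)
```

With 2 neurons and 3 inputs the layer holds 6 weights, which is why fully connected layers grow quickly in size as the input vector gets longer.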

In effect, each neuron evaluates the input vector as a whole and responds to the presence of a particular pattern in it. As the weights are adjusted, a model emerges in which each neuron responds to its own pattern in the input signal. This property is what makes a fully connected neural layer suitable for the output of classifier models.

Perceptron with two hidden fully connected layers

A fully connected neural layer can also be viewed in terms of vector mathematics. The vector of input values represents a point in N-dimensional space, where N is the number of elements in the input vector, and each neuron builds a projection of this point onto its own weight vector. The activation function then decides whether or not to transmit the signal further.
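The geometric view can be sketched in a few lines. The helper names (`dot`, `scalar_projection`, `step`), the threshold activation, and the numbers are assumptions chosen for illustration. Note that the neuron's weighted sum equals the scalar projection of the input onto the weight vector multiplied by the length of that weight vector, so the two differ only by a scale factor.

```python
import math


def dot(a, b):
    """Dot product of two equally sized vectors."""
    return sum(p * q for p, q in zip(a, b))


def scalar_projection(x, w):
    """Length of the projection of point x onto the direction of w."""
    return dot(x, w) / math.sqrt(dot(w, w))


def step(z):
    """Threshold activation (an assumption): pass the signal only if z > 0."""
    return 1.0 if z > 0 else 0.0


w = [3.0, 4.0]  # hypothetical weight vector of one neuron, length 5
x = [2.0, 1.0]  # hypothetical input point

z = dot(w, x)                    # weighted sum computed by the neuron
proj = scalar_projection(x, w)   # geometric projection of x onto w
out = step(z)                    # decision made by the activation function
```

An input lying on the other side of the origin along `w`, such as `[-2.0, 1.0]`, yields a negative weighted sum, and this threshold activation blocks it.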

Here, it is important to pay attention to the displacement of the resulting projection relative to the origin of coordinates. Because the activation function is designed to make decisions within a strict range of input values, this displacement acts as a systematic error. To compensate for it, an additional constant called the bias is introduced for each neuron. In practice, this constant is tuned during training along with the weights. To do this, one more element with a constant value of 1 is appended to the input vector, and the weight assigned to this element plays the role of the bias.
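The equivalence between an explicit bias and an extra input fixed at 1 can be checked with a small sketch. The function names and values below are hypothetical and serve only to illustrate the idea.

```python
def neuron_with_bias(x, w, b):
    """Weighted sum with the bias kept as a separate constant."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def neuron_augmented(x, w_aug):
    """Weighted sum where the bias is the last weight.

    A constant 1 is appended to the input, so the last weight
    is added to the sum unchanged.
    """
    x_aug = list(x) + [1.0]
    return sum(wi * xi for wi, xi in zip(w_aug, x_aug))


x = [2.0, -1.0]   # hypothetical input vector
w = [0.5, 0.25]   # hypothetical weights
b = -0.75         # hypothetical bias

explicit = neuron_with_bias(x, w, b)
augmented = neuron_augmented(x, w + [b])
```

Both calls compute the same value. Storing the bias as one more weight means the training procedure can update it with exactly the same rule it applies to every other weight.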