AO Core

- Libraries

- Andrey Dik

- Version: 1.8

- Updated: 14 July 2023

- Activations: 20

AO Core is the core of an optimization algorithm: a library built on the author's hybrid metaheuristic algorithm (HMA).

See also the MT5 Optimization Booster product, which makes it easy to manage the standard MT5 optimizer.

An example of using AO Core is described in the following article and blog post:

https://www.mql5.com/ru/articles/14183

https://www.mql5.com/en/blogs/post/756510

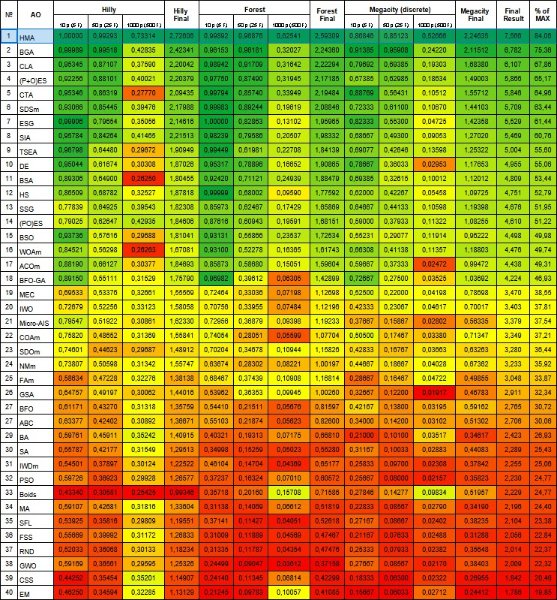

This hybrid algorithm is based on a genetic algorithm and combines the strongest properties of population algorithms.

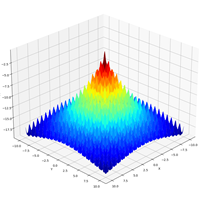

HMA combines fast computation with high accuracy and strong search capability. This reduces total optimization time, because the best solution is found in fewer iterations. According to the author, the algorithm outperforms all known population optimization algorithms.

Projects that can use this library to improve their results:

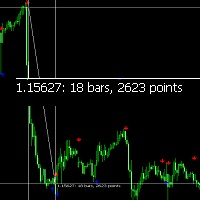

1. Automatic self-optimization in Expert Advisors.

2. Search for the optimal profit/risk ratio for the implementation of flexible money management.

3. Portfolio optimization, including portfolio self-optimization.

4. Reusing previously found solutions as part of the optimizer.

5. Application in machine learning and in conjunction with neural networks.

Technical specifications:

1. The number of optimized parameters: unlimited.

2. The step of optimized parameters: unlimited, starting from 0.0.

3. High scalability and stability of results.

Important!

Please do not buy the library if you do not fully understand what to do with it and how to use it.

The library has no tuning parameters (fewer degrees of freedom make the results more stable). Only the population size needs to be set, which can be very useful when parallelizing historical runs on OpenCL devices.

Exported library functions:

    //------------------------------------------------------------------------------
    #import "MQL5\\Scripts\\Market\\AO Core.ex5"
    bool   Init           (int colonySize, double &range_min [], double &range_max [], double &range_step []);
    //------------------------------------------------------------------------------
    void   Preparation    ();
    void   GetVariantCalc (double &variant [], int pos);
    void   SetFitness     (double value, int pos);
    void   Revision       ();
    //------------------------------------------------------------------------------
    void   GetVariant     (double &variant [], int pos);
    double GetFitness     (int pos);
    #import
    //------------------------------------------------------------------------------
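
The listing above gives only the signatures, so the following MQL5 script is a rough sketch of how the calls might fit together, not the documented usage. Everything in it is an assumption inferred from the function names: the call order (`Preparation`, then `GetVariantCalc`/`SetFitness` for each position, then `Revision`), that `Init` returns `true` on success, that `pos` indexes a population member from 0 to `colonySize - 1`, and that larger fitness values are better. The `Fitness` function, the input values and the `PARAMS` constant are hypothetical and exist only for illustration.

```mql5
// Sketch only: the call order and the meaning of "pos" are assumptions,
// not documented behaviour of AO Core.
#property script_show_inputs

#import "MQL5\\Scripts\\Market\\AO Core.ex5"
bool   Init           (int colonySize, double &range_min [], double &range_max [], double &range_step []);
void   Preparation    ();
void   GetVariantCalc (double &variant [], int pos);
void   SetFitness     (double value, int pos);
void   Revision       ();
void   GetVariant     (double &variant [], int pos);
double GetFitness     (int pos);
#import

input int PopulationSize = 50;   // hypothetical population size
input int Epochs         = 100;  // hypothetical number of iterations

#define PARAMS 2                 // hypothetical number of optimized parameters

// Hypothetical fitness function: its maximum (0.0) is at x = 1, y = -2.
double Fitness (const double &x [])
{
  double dx = x [0] - 1.0;
  double dy = x [1] + 2.0;
  return -(dx * dx + dy * dy);
}

void OnStart ()
{
  // Search range and step for each optimized parameter.
  double rangeMin [], rangeMax [], rangeStep [];
  ArrayResize (rangeMin,  PARAMS); ArrayInitialize (rangeMin,  -10.0);
  ArrayResize (rangeMax,  PARAMS); ArrayInitialize (rangeMax,   10.0);
  ArrayResize (rangeStep, PARAMS); ArrayInitialize (rangeStep,   0.0);

  // Assumption: Init returns true on success.
  if (!Init (PopulationSize, rangeMin, rangeMax, rangeStep))
  {
    Print ("AO Core: Init failed");
    return;
  }

  double variant [], bestVariant [];
  ArrayResize (variant,     PARAMS);
  ArrayResize (bestVariant, PARAMS);
  double bestFitness = -DBL_MAX;

  for (int epoch = 0; epoch < Epochs; epoch++)
  {
    Preparation ();                       // assumed: builds the next set of candidates

    for (int pos = 0; pos < PopulationSize; pos++)
    {
      GetVariantCalc (variant, pos);      // assumed: candidate to evaluate at this position
      double f = Fitness (variant);
      SetFitness (f, pos);                // report the result back to the library

      // Track the best candidate locally, independent of the library's bookkeeping.
      if (f > bestFitness)
      {
        bestFitness = f;
        ArrayCopy (bestVariant, variant);
      }
    }

    Revision ();                          // assumed: updates the population from the fitness values
  }

  PrintFormat ("Best seen: x = %.6f, y = %.6f, fitness = %.6f",
               bestVariant [0], bestVariant [1], bestFitness);

  // GetVariant/GetFitness read back a stored solution; how the library
  // orders positions is not stated in the listing, so position 0 is only an example.
  GetVariant (variant, 0);
  PrintFormat ("Stored at position 0: x = %.6f, y = %.6f, fitness = %.6f",
               variant [0], variant [1], GetFitness (0));
}
```

In an Expert Advisor, the hypothetical `Fitness` function would be replaced by whatever is being optimized, for example the result of a historical run with the candidate parameters. The article linked earlier describes the author's own usage.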

Published articles by the author:

Genetic algorithms are easy!: https://www.mql5.com/ru/articles/55

Population optimization algorithms: https://www.mql5.com/en/articles/8122

Population optimization algorithms: Particle Swarm (PSO): https://www.mql5.com/ru/articles/11386

Population optimization algorithms: Ant Colony Optimization (ACO): https://www.mql5.com/en/articles/11602

Population optimization algorithms: Artificial Bee Colony (ABC): https://www.mql5.com/ru/articles/11736

Population optimization algorithms: Gray Wolf Optimizer (GWO): https://www.mql5.com/en/articles/11785

Population optimization algorithms: Cuckoo Optimization Algorithm (COA): https://www.mql5.com/en/articles/11786

Population optimization algorithms: Fish School Search (FSS): https://www.mql5.com/ru/articles/11841

Population optimization algorithms: Firefly Algorithm (FA): https://www.mql5.com/ru/articles/11873

Population optimization algorithms: Bat Algorithm (BA): https://www.mql5.com/ru/articles/11915
