Dependency statistics in quotes (information theory, correlation and other feature selection methods) - page 15


People who have EViews don't ask such questions, hee-hee

Would you be so kind as to send me EViews, either privately or here, if possible?


Well yes, it's a silly question, I agree. The guarantees are the same as for the model itself.

What version do you have? They say version 5 is outdated.

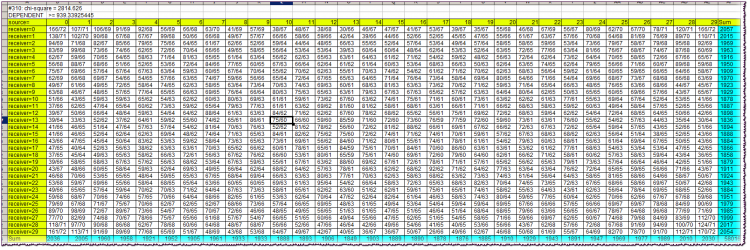

First line of the block: "#310: chi-square = 2814.626". The first number is the value of the Lag variable; then comes the chi-square statistic for the test of independence of the variables.

Second line: the conclusion (dependence or independence), together with the critical (boundary) chi-square value.

The yellow cells in the table are headers. They show which symbols of the alphabet were observed simultaneously at the intersection of the corresponding row and column. Recall that the source and receiver alphabets are identical: the symbols are the quantiles into which the returns of the corresponding bars (source and receiver) fell.

For example, at the intersection of column source=10 and row receiver=13 we find the cell value 75/60. This means that the combination "the return of the source (the bar in the past) fell into the 10th quantile and the return of the receiver (the bar closer to the present) fell into the 13th quantile" was observed 75 times in the history, whereas the theoretical number of such combinations, if they were independent, would be 60.

The turquoise cells are the row and column sums of the observed frequencies; they are needed to calculate the theoretical (expected) frequencies and hence the chi-square.
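The marginal sums give the "theoretical" counts directly. A minimal sketch, assuming rows are receiver quantiles and columns are source quantiles as in the table above: under independence the expected count in a cell is its row sum times its column sum divided by the total N, and Pearson's chi-square adds up (observed - expected)^2 / expected over all cells. The function names and the toy counts are hypothetical, not taken from the post.

```python
from typing import List


def expected_counts(table: List[List[int]]) -> List[List[float]]:
    """Expected cell counts if rows and columns were independent:
    row_sum * col_sum / N (the 'theoretical' number in each cell)."""
    row_sums = [sum(row) for row in table]
    col_sums = [sum(col) for col in zip(*table)]
    n = sum(row_sums)
    return [[r * c / n for c in col_sums] for r in row_sums]


def chi_square(table: List[List[int]]) -> float:
    """Pearson chi-square statistic: sum over cells of (observed - expected)^2 / expected."""
    expected = expected_counts(table)
    return sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
        if e > 0  # skip empty rows/columns, which contribute nothing
    )


# Hypothetical 2x2 table: rows = receiver quantile, columns = source quantile.
toy = [[30, 10],
       [10, 30]]
print(expected_counts(toy))  # [[20.0, 20.0], [20.0, 20.0]]
print(chi_square(toy))       # 20.0
```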

If source and receiver are independent, the chi-square statistic should almost always be smaller than the critical (boundary) value given in the second line of the block. As we can see, here it is much larger, almost 3 times larger. This indicates a very strong dependence between the bars in this case.
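To reproduce the comparison in the second line of the block, one needs the degrees of freedom, (k - 1)(k - 1) for a k x k quantile table, and a critical value at some significance level. The sketch below assumes a 5% level and uses the Wilson-Hilferty normal approximation so that it needs only the standard library (an exact value would come from a chi-square quantile function such as scipy.stats.chi2.ppf). The level actually used in the post is not stated, and the helper names are hypothetical. Under independence the statistic exceeds the 5% critical value in only about 5% of samples, which is what "almost always smaller" means here; the test also assumes independent observations, which overlapping bar pairs may satisfy only approximately.

```python
import math
from statistics import NormalDist
from typing import Tuple


def chi2_critical(df: int, alpha: float = 0.05) -> float:
    """Approximate upper critical value of the chi-square distribution
    (Wilson-Hilferty approximation; good for moderate and large df, rougher for df = 1)."""
    z = NormalDist().inv_cdf(1.0 - alpha)
    h = 2.0 / (9.0 * df)
    return df * (1.0 - h + z * math.sqrt(h)) ** 3


def independence_verdict(stat: float, n_rows: int, n_cols: int,
                         alpha: float = 0.05) -> Tuple[int, float, bool]:
    """Return (degrees of freedom, critical value, True if independence is rejected)."""
    df = (n_rows - 1) * (n_cols - 1)
    crit = chi2_critical(df, alpha)
    return df, crit, stat > crit


# Hypothetical: a statistic of 20.0 obtained from a 2x2 table.
print(independence_verdict(20.0, 2, 2))
```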

The whole file, even archived, takes too much space, so I can't post it here.


There is the Shannon definition of entropy, in which independence is mandatory.

And there is a definition of mutual information, in which the Shannon definition is applied purely formally, since it is still assumed that dependencies exist.

If you want to dig into the philosophical depths and contradictions of the definition of mutual information, go ahead. I'd rather not sweat it and just use the "American" formula with probabilities, without worrying about independence.
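For reference, the "American" formula with probabilities is presumably the standard plug-in mutual information, I(X;Y) = sum over x,y of p(x,y) log2(p(x,y) / (p(x) p(y))). A minimal sketch, assuming probabilities are estimated as relative frequencies from a count table: nothing in the computation requires independence, and the result is zero exactly when the table factorizes into its margins. The function name is hypothetical.

```python
import math
from typing import List


def mutual_information(counts: List[List[int]]) -> float:
    """Plug-in mutual information in bits:
    I = sum over cells of p(x,y) * log2( p(x,y) / (p(x) * p(y)) ),
    with probabilities estimated as relative frequencies."""
    n = sum(sum(row) for row in counts)
    p_row = [sum(row) / n for row in counts]
    p_col = [sum(col) / n for col in zip(*counts)]
    mi = 0.0
    for i, row in enumerate(counts):
        for j, c in enumerate(row):
            if c == 0:
                continue  # convention: 0 * log 0 = 0
            p = c / n
            mi += p * math.log2(p / (p_row[i] * p_col[j]))
    return mi


print(mutual_information([[5, 0], [0, 5]]))      # 1.0: one variable fully determines the other
print(mutual_information([[10, 20], [20, 40]]))  # approximately 0: the table factorizes exactly
```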

Not quite. Translated from jargon into plain meaning, TI goes like this. There is a concept of entropy. One must clearly understand that behind this beautiful and obscure foreign word there is an artificially invented number. The more honest textbooks say so directly: it is an axiomatically introduced measure of uncertainty. What it actually turns out to be is a convolution. Roughly speaking, a large table describing states and their frequencies is collapsed into one number by means of arithmetic formulas. Moreover, only the frequencies are collapsed; the states themselves (which, by the way, can also have their own measures) matter only through their total number.

In probability theory there is a similar operation: roughly speaking, the expected value of a system is computed in a similar way. But there the probabilities of the states are multiplied by their measures and summed, giving a number about which convergence theorems and the like are then proved. Here it is different. Here the frequency itself (called its probability), or rather its logarithm, serves as the measure. The logarithm solves certain arithmetic problems that Shannon formulated as three conditions (it must grow monotonically, etc.).
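The contrast between an expected value and entropy can be made concrete in a few lines. In this sketch (hypothetical names, base-2 logarithms assumed), the expectation depends on the values attached to the states, while entropy uses -log2 of each state's own probability as the measure, so revaluing or relabeling the states leaves it unchanged.

```python
import math
from typing import Sequence


def expectation(probs: Sequence[float], values: Sequence[float]) -> float:
    """Probability theory: each state's probability times the state's own measure."""
    return sum(p * v for p, v in zip(probs, values))


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits: the 'measure' of a state is -log2 of its own
    probability, so the values attached to the states never enter."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


probs = [0.5, 0.25, 0.25]
print(expectation(probs, [1.0, 2.0, 3.0]))                 # 1.75
print(expectation(probs, [10.0, -5.0, 0.0]))               # 3.75, depends on the values
print(entropy(probs))                                      # 1.5, the same whatever the values
print(expectation(probs, [-math.log2(p) for p in probs]))  # 1.5, entropy as E[-log2 p]
```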

After that Shannon says: "Friends, you know, I have come up with reasonable and obvious rules for adding up the numbers I recently discovered, which I call entropy, from two different tables." The friends (mostly military people) ask: "Fine, how do you do it?" "Very simply," Shannon answers, his eyes flashing. "Suppose one table is not connected to the other; they are independent, which happens in life. In that case I have a theorem on the addition of entropies, with a wonderful result: the numbers simply add up!" "Fine, but what if the tables are connected in some incomprehensible way?" "It is just as simple: build one big square table from the two original ones, multiply the probabilities in each cell, add up the numbers in the cells by columns, add up the columns separately, build a new table, divide one by the other, hocus-pocus... Oops, the result is one number, which I call conditional entropy! Interestingly, conditional entropy can be calculated both from the first table to the second and vice versa! And to take this unfortunate duality into account, I have invented the total mutual entropy, and it is again one single number!" "And so," the friends say, munching popcorn, "it is much easier to compare one number with another than to understand the essence of the data in the original tables. And as for understanding the meaning and reliability of the relationships, that is downright boring." Curtain.
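The "one big table" in the story is the joint frequency table. A minimal sketch, assuming plug-in probabilities from counts and the chain rule H(X,Y) = H(X) + H(Y|X) = H(Y) + H(X|Y): it shows that the two conditional entropies generally differ, that the "mutual" quantity (mutual information, H(Y) - H(Y|X) = H(X) - H(X|Y)) comes out the same in both directions, and that for an independent table the entropies simply add. Function names and counts are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _entropy(probs: Sequence[float]) -> float:
    return -sum(p * math.log2(p) for p in probs if p > 0)


def entropy_summary(counts: List[List[int]]) -> Tuple[float, float, float, float, float]:
    """For a joint count table (rows = X, columns = Y) return, in bits:
    H(X), H(Y), H(X,Y), H(Y|X), H(X|Y)."""
    n = sum(sum(row) for row in counts)
    h_x = _entropy([sum(row) / n for row in counts])
    h_y = _entropy([sum(col) / n for col in zip(*counts)])
    h_xy = _entropy([c / n for row in counts for c in row])
    # Chain rule: H(X,Y) = H(X) + H(Y|X) = H(Y) + H(X|Y)
    return h_x, h_y, h_xy, h_xy - h_x, h_xy - h_y


h_x, h_y, h_xy, h_y_given_x, h_x_given_y = entropy_summary([[3, 1], [0, 4]])
print(h_y_given_x, h_x_given_y)               # the two conditional entropies differ
print(h_y - h_y_given_x, h_x - h_x_given_y)   # but mutual information is the same both ways
print(entropy_summary([[1, 1], [1, 1]])[:3])  # (1.0, 1.0, 2.0): independent, entropies add
```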

This is what a brief history of TI looks like, as I tell it. It is compiled from my own research and the statements of an academic dictionary (see the quotes earlier).

Now, what matters. The key point of this whole story is conditional entropy. In fact, if one wants to work with a single number, there is no need to compute mutual information and the like; it is enough to master computing conditional entropy. That is what gives some measure of how one variable relates to the other. At the same time, one must understand that the numbers in the tables have to be "stationary", and the relationships between the tables have to be "stationary" as well. Furthermore, the original numbers in the tables must come from appropriate sources.

It must be said that there are extensions of TI to Markov chains. That seems to be just what the doctor ordered. But no: the transition probabilities for the market are not constant, due to general non-stationarity. Besides, while for a Markov chain it does not matter how the system arrived at its current state, for the market it matters a lot.
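One crude way to see whether transition probabilities stay constant is to estimate a first-order transition matrix on two stretches of history and compare them. The sketch below is hypothetical (made-up sequences, helper names, and an element-wise gap instead of a formal test); if the process were a stationary Markov chain, the two estimates should differ only by sampling noise.

```python
from typing import List, Sequence


def transition_matrix(symbols: Sequence[int], k: int) -> List[List[float]]:
    """Estimate first-order transition probabilities P(next = j | current = i)
    from a sequence of symbols in range(k). Rows with no observations stay at zero."""
    counts = [[0] * k for _ in range(k)]
    for current, nxt in zip(symbols, symbols[1:]):
        counts[current][nxt] += 1
    totals = [sum(row) for row in counts]
    return [[c / t if t else 0.0 for c in row] for row, t in zip(counts, totals)]


def max_abs_diff(p: List[List[float]], q: List[List[float]]) -> float:
    """Largest element-wise gap between two transition matrices."""
    return max(abs(a - b) for row_p, row_q in zip(p, q) for a, b in zip(row_p, row_q))


# Hypothetical regime change: alternation first, then long runs.
first_half = [0, 1] * 50
second_half = [0] * 50 + [1] * 50
p1 = transition_matrix(first_half, 2)
p2 = transition_matrix(second_half, 2)
print(p1)                  # [[0.0, 1.0], [1.0, 0.0]]
print(max_abs_diff(p1, p2))
```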

I don't know what alphabet you have in your problem. I have a system of a pair of bars separated by a distance of Lag. One bar, in the past, is the source, and the other is the receiver. The alphabets of both are identical (as far as bar returns are concerned, of course).
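A sketch of how such a source/receiver table could be built, assuming returns are ordered oldest first and binned into equal-count quantiles by rank (ties broken by position). The post does not give its binning details, so quantile_symbols and lag_table are hypothetical helpers; the receiver is bar t and the source is bar t - Lag.

```python
from typing import List, Sequence


def quantile_symbols(returns: Sequence[float], k: int) -> List[int]:
    """Map each return to a quantile index 0..k-1 using equal-count bins by rank.
    Source and receiver share this alphabet."""
    n = len(returns)
    order = sorted(range(n), key=lambda i: returns[i])
    symbols = [0] * n
    for rank, i in enumerate(order):
        symbols[i] = rank * k // n
    return symbols


def lag_table(symbols: Sequence[int], k: int, lag: int) -> List[List[int]]:
    """k x k contingency table: row = receiver symbol (bar t),
    column = source symbol (bar t - lag, further in the past)."""
    table = [[0] * k for _ in range(k)]
    for t in range(lag, len(symbols)):
        table[symbols[t]][symbols[t - lag]] += 1
    return table


returns = [0.1, -0.2, 0.3, -0.4]
symbols = quantile_symbols(returns, 2)
print(symbols)                       # [1, 0, 1, 0]
print(lag_table(symbols, 2, lag=1))  # [[0, 2], [1, 0]]
```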

Clearly, it is something similar to autocorrelation, but with a different measure. My objections are exactly the same as for the Markov chain case, plus all the others: stationarity, etc.
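To illustrate "similar to autocorrelation, but with a different measure": correlation picks up only linear dependence, while mutual information picks up any dependence between the symbols. In this hypothetical example the receiver depends on the source only through its magnitude, so the Pearson correlation is zero while the mutual information is not. The helper names and data are illustrative assumptions.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Ordinary (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def mutual_information_pairs(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in mutual information (bits) between two paired symbol sequences."""
    n = len(x)
    joint = Counter(zip(x, y))
    px, py = Counter(x), Counter(y)
    return sum((c / n) * math.log2(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())


# Hypothetical symbols: the receiver depends on the source only through its magnitude.
source = [-1, 0, 1] * 100
receiver = [s * s for s in source]
print(pearson(source, receiver))                   # 0.0: correlation sees nothing
print(mutual_information_pairs(source, receiver))  # about 0.918 bits: the dependence is there
```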

P.S. I must say that if you actually trade this (TI), and successfully, then never mind my objections. Let there be a hare's foot on the monitor, if it helps.

I've read it, HideYourRichess.

Yes, interesting. Indeed, the frequency matrix of the conditional states of a system (assuming they are connected), reformulated in terms of conditional entropy, gives us ONE number describing the possibility that one variable to some extent determines another. If the distribution in such an initial matrix is uniform, the system has maximum entropy (conditional entropy, of course, is what I meant). If there are irregularities, the entropy will be lower. As a first approximation one number will do; you are right about that. And the mutual information for a set of variables separated from the receiver by Lag, as Alexey wrote, is computed for quite specific purposes, namely, for selecting informative variables.
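The uniform-versus-irregular point can be checked numerically. A minimal sketch, assuming a count table with rows as receiver symbols and columns as source symbols (hypothetical helper and counts): for a uniform k x k table, H(receiver | source) reaches its maximum log2(k), and concentrating the counts lowers it.

```python
import math
from typing import List


def conditional_entropy(table: List[List[int]]) -> float:
    """H(receiver | source) in bits from a count table with
    rows = receiver symbol and columns = source symbol."""
    n = sum(sum(row) for row in table)
    h = 0.0
    for col in zip(*table):  # one column per source symbol
        col_total = sum(col)
        for c in col:
            if c:
                # accumulate -p(r, s) * log2 p(r | s)
                h -= (c / n) * math.log2(c / col_total)
    return h


k = 4
uniform = [[5] * k for _ in range(k)]
irregular = [[17 if r == s else 1 for s in range(k)] for r in range(k)]
print(conditional_entropy(uniform), math.log2(k))  # 2.0 2.0: the maximum
print(conditional_entropy(irregular))              # lower: the source says something about the receiver
```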

So far, I personally don't trade using TI. This topic was a "first try at it", I think.

HideYourRichess: This is what a brief history of TI looks like, as I tell it. It is compiled from my own research and the statements of an academic dictionary (see the quotes earlier).

Very interesting. Shannon made such a mess that we are still figuring it out...

It should be said that there are extensions of TI to Markov chains. That seems to be just what the doctor ordered. But no: the transition probabilities for the market are not constant, due to general non-stationarity. Besides, while for a Markov chain it does not matter how the system arrived at its current state, for the market it matters a lot.

That's the point: the return series is not a Markov process, since the return of the zero bar depends strongly on the returns of not only the first bar but also the second, third, etc. At least, that is how the study in the main thread can be interpreted directly.
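One way to probe the claim that the zero bar depends on more than the first bar is to compare plug-in conditional entropies given one and given two past symbols. The sketch below uses a hypothetical deterministic sequence and hypothetical names. With real data, keep in mind that plug-in estimates are biased downward and the bias grows with the order, so a drop should be compared against, for example, the same statistic on shuffled data before it is read as real dependence.

```python
import math
from collections import Counter
from typing import Sequence


def conditional_entropy_given_past(symbols: Sequence[int], order: int) -> float:
    """Plug-in estimate of H(X_t | X_{t-1}, ..., X_{t-order}) in bits."""
    joint: Counter = Counter()
    context: Counter = Counter()
    for t in range(order, len(symbols)):
        past = tuple(symbols[t - order:t])
        joint[past + (symbols[t],)] += 1
        context[past] += 1
    n = sum(joint.values())
    h = 0.0
    for key, c in joint.items():
        # -p(past, next) * log2 p(next | past)
        h -= (c / n) * math.log2(c / context[key[:-1]])
    return h


# Hypothetical sequence where the next symbol is fixed by the last TWO symbols.
seq = [0, 0, 1, 1] * 25
print(conditional_entropy_given_past(seq, 1))  # close to 1 bit
print(conditional_entropy_given_past(seq, 2))  # 0.0: the second bar back resolves everything
```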

P.S. I have to say that if you really do trade this (TI), and successfully, then to hell with the objections. Let there be a hare's paw on the monitor, if it helps.

No, I don't. But it would be very interesting to develop it into something commercial. I heard from alsu (Alexey has disappeared somewhere, sorry) that he vaguely remembers a program on the RBC channel featuring some eloquent trader who actually trades something similar. And he bets a lot of money on it. He calls his system "catching market inefficiencies".

I've been thinking about entropy for a long time now. I even posted something about it here. But back then it was closer to the entropy of statistical physics than to TI, and it related to multicurrency. Anyway, karma has been whispering something entropic in my ear for some years now, and I've been listening to it and trying to figure out what it wants from me...


Very interesting.

But could you, Alexey, formulate more clearly (using your table) what hypothesis about the distribution of returns the chi-square estimates correspond to?

Good old Brownian motion, or something fancier?

;)