"New Neural" is an Open Source neural network engine project for the MetaTrader 5 platform. - page 9


It can't be like that: different types go in different layers. I'm telling you, you could even make each neuron a separate layer.

As for the buffer, I'll give you an example once I get home.

A layer is a set of neurons that are independent of each other within one iteration.

Who says it can't be? I want it, and you're clipping my wings :o)

You never know what people will come up with, and you'd go telling them that all neurons in a layer must have the same number of inputs.

Neuron types and connections can differ even within a single layer, and that is what we have to design for; otherwise we'll just end up with a set of algorithms (there are plenty of those on the Internet) that have to be adapted before you can connect anything.

P.S. How complex would the algorithm become if each neuron were a separate layer?
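To make the point about neurons of one layer having different types and input counts concrete, here is a minimal Python sketch. It assumes a layer is simply a list of neurons, each with its own input indices, weights and activation; `Neuron`, `forward_layer` and `relu` are hypothetical names for illustration, not part of any existing library or of the project's design.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Neuron:
    """Hypothetical neuron with its own inputs, weights and activation."""
    inputs: List[int]        # indices into the previous layer's outputs
    weights: List[float]     # one weight per input
    bias: float
    activation: Callable[[float], float]

    def forward(self, prev: Sequence[float]) -> float:
        s = self.bias + sum(w * prev[i] for w, i in zip(self.weights, self.inputs))
        return self.activation(s)


def forward_layer(layer: List[Neuron], prev: Sequence[float]) -> List[float]:
    # Neurons of one layer read only the previous layer's outputs, never each
    # other's, so within one iteration they can be evaluated in any order.
    return [n.forward(prev) for n in layer]


def relu(x: float) -> float:
    return max(0.0, x)


# A "motley" layer: three neurons with different input counts and activations.
layer = [
    Neuron(inputs=[0, 1, 2], weights=[0.5, -0.25, 1.0], bias=0.0, activation=math.tanh),
    Neuron(inputs=[2], weights=[2.0], bias=-1.0, activation=relu),
    Neuron(inputs=[0, 1], weights=[1.0, 1.0], bias=0.0, activation=lambda x: x),  # linear
]

out = forward_layer(layer, [1.0, 2.0, 3.0])
print(out)  # [tanh(3.0), 5.0, 3.0]
```

Because no neuron reads another neuron of the same layer, reversing the list only reverses the outputs, which is exactly the "independent within one iteration" property from the definition of a layer used in this thread.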

Can anyone recommend online software for drawing diagrams and stuff?

Google Docs has a drawing tool, and you can share the drawings.

I can work part-time as a secretary-draftsman, provided it's no more than 1 hour a day.

Okay, four of the networks that have been implemented are of interest to me:

1. Kohonen networks, including SOM. Good for clustering when it is not clear what to look for. The topology is well known, I think: a vector at the input and a vector (or otherwise grouped outputs) at the output. Training can be supervised or unsupervised.

2. MLP in its most general form, i.e. an arbitrary set of layers organized as a graph, including feedback connections. Used very widely.

3. Recirculation network. Frankly speaking, I have never seen a properly working non-linear implementation. It is used for information compression and principal component extraction (PCA). In its simplest, linear form it is a two-layer linear network in which the signal can propagate in both directions (or a three-layer network in unrolled form).

4. Echo network. Similar in purpose to MLP and applied to the same tasks, but organized completely differently: its training time is clearly defined (and, unlike MLP, it always reaches the global minimum).

5. PNN (probabilistic neural network). I haven't used it and don't know how it works, but I think you'll find someone here who does.

6. Models for fuzzy logic (not to be confused with probabilistic networks). I haven't implemented them, but they could be useful. If anyone finds information, please share it. Almost all the models are by Japanese authors, and almost all are built manually; but if topology construction could be automated from a logical expression (if I remember correctly), that would be unbelievably cool.

+ networks whose number of neurons grows or shrinks through evolution.

+ genetic algorithms + learning acceleration methods.
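For item 1, a minimal sketch of the unsupervised variant of a Kohonen map may help. It assumes a 1-D grid of nodes, a Gaussian neighborhood, and a learning rate and radius that both shrink linearly; these are common textbook choices, not necessarily what the implementations mentioned above use, and the function names are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]


def best_matching_unit(weights: List[Vector], x: Vector) -> int:
    """Index of the node whose weight vector is closest to x (squared Euclidean)."""
    return min(range(len(weights)),
               key=lambda j: sum((w - xi) ** 2 for w, xi in zip(weights[j], x)))


def train_som(data: List[Vector], n_nodes: int, epochs: int = 200,
              lr0: float = 0.5, seed: int = 0) -> List[Vector]:
    """Unsupervised training of a 1-D Kohonen map (nodes arranged on a line)."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    radius0 = max(n_nodes / 2, 1.0)
    total = epochs * len(data)
    t = 0
    for _ in range(epochs):
        for x in rng.sample(data, len(data)):            # shuffled pass over the data
            frac = t / total
            lr = lr0 * (1.0 - frac)                      # learning rate decays toward 0
            radius = max(radius0 * (1.0 - frac), 0.1)    # neighborhood shrinks
            bmu = best_matching_unit(weights, x)
            for j in range(n_nodes):
                d = abs(j - bmu)                         # distance on the node grid
                h = math.exp(-(d * d) / (2.0 * radius * radius))  # Gaussian neighborhood
                weights[j] = [w + lr * h * (xi - w) for w, xi in zip(weights[j], x)]
            t += 1
    return weights


# Two well-separated 1-D clusters; each should end up with its own node.
data = [[0.05], [0.10], [0.15], [0.85], [0.90], [0.95]]
som = train_som(data, n_nodes=2)
print(best_matching_unit(som, [0.1]), best_matching_unit(som, [0.9]))
```

No labels are used anywhere in the training loop; a supervised variant would need an extra step (for example, assigning class labels to nodes afterwards), which is left out here.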

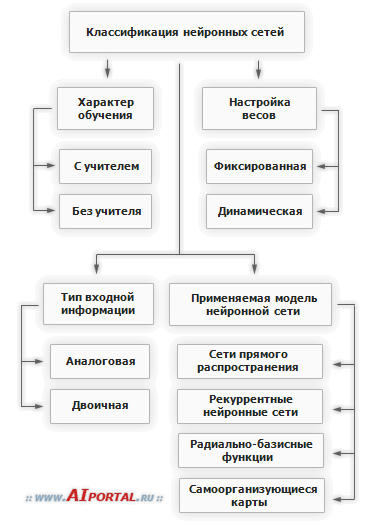

I found this little classification

+ genetic algorithms

Genetic algorithms eat up a lot of extra resources. Gradient algorithms are better.
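A toy illustration of where the extra cost comes from, assuming a one-parameter quadratic loss: gradient descent spends one gradient evaluation per step, while a simple genetic algorithm (truncation selection, averaging crossover and Gaussian mutation, all chosen here only for illustration) evaluates its whole population every generation. This is a sketch, not a benchmark; the counts depend entirely on the chosen parameters.

```python
import random


def loss(w: float) -> float:
    return (w - 3.0) ** 2          # toy objective, minimum at w = 3


def grad(w: float) -> float:
    return 2.0 * (w - 3.0)         # its analytic derivative


def gradient_descent(w0: float = 0.0, lr: float = 0.1, steps: int = 50):
    w, evals = w0, 0
    for _ in range(steps):
        w -= lr * grad(w)
        evals += 1                 # one gradient evaluation per step
    return w, evals


def genetic(pop_size: int = 20, generations: int = 50, sigma: float = 0.5, seed: int = 0):
    rng = random.Random(seed)
    pop = [rng.uniform(-10.0, 10.0) for _ in range(pop_size)]
    evals = 0
    for _ in range(generations):
        ranked = sorted(pop, key=loss)
        evals += pop_size          # the whole population is evaluated every generation
        parents = ranked[: pop_size // 2]                # truncation selection (keeps the best)
        children = []
        for _ in range(pop_size - len(parents)):
            a, b = rng.sample(parents, 2)
            children.append((a + b) / 2.0 + rng.gauss(0.0, sigma))  # crossover + mutation
        pop = parents + children
    return min(pop, key=loss), evals + pop_size          # final selection costs one more pass


w_gd, gd_evals = gradient_descent()
w_ga, ga_evals = genetic()
print(f"gradient: w={w_gd:.4f} after {gd_evals} evaluations")
print(f"genetic:  w={w_ga:.4f} after {ga_evals} evaluations")
```

The flip side is that the genetic version never uses the derivative, so it still works where no gradient is available.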

Why decide for the users what they need? You have to give them a choice. PNN, for example, also eats a lot of resources.

The library should be universal and extensive, opening up ways to find solutions beyond the standard backpropagation kit, which you can find on the web anyway.

A layer is a set of neurons that are independent of each other within one iteration.

What's the point of this?

You never know what people will come up with, and you'd go telling them that all neurons in a layer must have the same number of inputs.

Hmm. Each neuron has one input and one output.

Neuron types and connections can all differ even within a single layer, and that's what we should start from; otherwise we'll just end up with a set of algorithms (there are lots of them on the Internet) that have to be adapted before you can connect anything.

First, I haven't finished yet. Second, see the rules: criticism comes later. You haven't seen the model as a whole and you're already criticizing. That's not good.

P.S. How much more complicated would the algorithm become if each neuron were a separate layer?

The algorithm of what? It would simply slow down both training and operation.

And what, please, is the "Buffer" entity?

Buffer is the entity through which synapses and neurons communicate. Once again, my model is very different from the biological one.

_____________________

Sorry I didn't finish yesterday: I forgot to pay for the Internet and they cut me off :)
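One possible reading of the buffer model described above, as a minimal Python sketch: each neuron has exactly one input buffer and one output buffer, and the synapses do the weighting and summation by writing into the neuron's input buffer. The author says the model isn't finished, so `Buffer`, `Synapse`, `Neuron` and the reset-after-firing rule are all assumptions for illustration only.

```python
import math
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Buffer:
    """Hypothetical shared cell through which synapses and neurons exchange values."""
    value: float = 0.0


@dataclass
class Synapse:
    source: Buffer      # e.g. an input value or another neuron's output buffer
    target: Buffer      # the input buffer of the receiving neuron
    weight: float

    def propagate(self) -> None:
        # The synapse, not the neuron, applies the weight and accumulates the sum.
        self.target.value += self.weight * self.source.value


@dataclass
class Neuron:
    activation: Callable[[float], float]
    inp: Buffer = field(default_factory=Buffer)    # the neuron's single input
    out: Buffer = field(default_factory=Buffer)    # the neuron's single output

    def fire(self) -> None:
        self.out.value = self.activation(self.inp.value)
        self.inp.value = 0.0                       # reset the accumulator for the next pass


x1, x2 = Buffer(1.0), Buffer(2.0)
neuron = Neuron(math.tanh)
synapses = [Synapse(x1, neuron.inp, 0.5), Synapse(x2, neuron.inp, 0.25)]

for s in synapses:
    s.propagate()
neuron.fire()
print(neuron.out.value)  # tanh(0.5 * 1.0 + 0.25 * 2.0) = tanh(1.0)
```

Since neurons only ever see buffers, a synapse can connect any output buffer to any input buffer, which keeps the neuron itself at one input and one output.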

A layer is a set of neurons that are independent of each other within one iteration.

Who says it can't be? I want it, and you're clipping my wings :o)

You never know what people will come up with, and you'd go telling them that all neurons in a layer must have the same number of inputs.

Neuron types and connections can all differ even within a single layer, and that is our starting point; otherwise we'll just end up with a set of algorithms (there are lots of them on the Internet) in which you have to hunt for something to connect.

P.S. How complex would the algorithm become if each neuron were a separate layer?

Theoretically it is probably possible, but in practice I have never come across anything like it. This is the first time I've even heard the idea.

Purely for experimental purposes, I think one could consider implementing it, but within the framework of this project a "motley" layer is probably not the best idea in terms of labor costs and runtime efficiency.

Personally, though, I like the idea; at the very least, such a possibility is worth discussing.

Why decide for users what they need?

? A genetic algorithm is a learning method. IMHO, the right thing to do is to hide the learning algorithms and pre-select the optimal one.

? A genetic algorithm is a learning method. IMHO, the right thing to do is to hide the learning algorithms and pre-select the optimal one.

Is working with a neural network only about choosing its topology? The training method also plays an important role. Topology and learning are closely related.

Every user has their own IMHO, so you can't take half of the decision upon yourself.

We have to create a network constructor that isn't limited by any presets, and that is as versatile as possible.
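A minimal sketch of the "constructor without presets" idea, assuming the training method is a pluggable component chosen by the user rather than hidden inside the network. `Trainer`, `GradientDescent`, `RandomSearch` and `LinearNetwork` are hypothetical names; the topology is kept to a single linear neuron so the example stays short, and the finite-difference gradient is used only to keep the trainer independent of the model.

```python
import random
from typing import Callable, List, Protocol, Sequence

Params = List[float]
LossFn = Callable[[Params], float]


class Trainer(Protocol):
    def train(self, params: Params, loss: LossFn) -> Params: ...


class GradientDescent:
    """Gradient descent with a central finite-difference gradient (illustrative only)."""

    def __init__(self, lr: float = 0.1, steps: int = 200, eps: float = 1e-6):
        self.lr, self.steps, self.eps = lr, steps, eps

    def train(self, params: Params, loss: LossFn) -> Params:
        p = list(params)
        for _ in range(self.steps):
            grad = []
            for i in range(len(p)):
                up, down = p[:], p[:]
                up[i] += self.eps
                down[i] -= self.eps
                grad.append((loss(up) - loss(down)) / (2 * self.eps))
            p = [pi - self.lr * gi for pi, gi in zip(p, grad)]
        return p


class RandomSearch:
    """Derivative-free hill climbing: keep a random perturbation only if it lowers the loss."""

    def __init__(self, sigma: float = 0.1, steps: int = 3000, seed: int = 0):
        self.sigma, self.steps, self.seed = sigma, steps, seed

    def train(self, params: Params, loss: LossFn) -> Params:
        rng = random.Random(self.seed)
        p, best = list(params), loss(params)
        for _ in range(self.steps):
            cand = [pi + rng.gauss(0.0, self.sigma) for pi in p]
            c = loss(cand)
            if c < best:
                p, best = cand, c
        return p


class LinearNetwork:
    """Hypothetical constructor: the user picks both the topology and the trainer."""

    def __init__(self, n_inputs: int, trainer: Trainer):
        self.params: Params = [0.0] * (n_inputs + 1)   # weights followed by the bias
        self.trainer = trainer

    @staticmethod
    def _output(params: Params, x: Sequence[float]) -> float:
        *w, b = params
        return sum(wi * xi for wi, xi in zip(w, x)) + b

    def predict(self, x: Sequence[float]) -> float:
        return self._output(self.params, x)

    def fit(self, xs: List[Sequence[float]], ys: List[float]) -> "LinearNetwork":
        def mse(p: Params) -> float:
            return sum((self._output(p, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        self.params = self.trainer.train(self.params, mse)
        return self


xs = [[0.0], [1.0], [2.0], [3.0]]
ys = [1.0, 3.0, 5.0, 7.0]                          # y = 2x + 1
net_gd = LinearNetwork(1, GradientDescent()).fit(xs, ys)
net_rs = LinearNetwork(1, RandomSearch()).fit(xs, ys)
print("gradient descent:", round(net_gd.predict([4.0]), 3))   # close to 9.0
print("random search:   ", round(net_rs.predict([4.0]), 3))   # roughly 9.0
```

Swapping the trainer does not touch the topology code, and vice versa, which is one way to leave both choices to the user.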

Sergeev:

The library should be universal and extensive, opening up ways to find solutions beyond the standard backpropagation kit, which you can find on the Internet anyway.

Is working with a neural network only about choosing its topology? The training method also plays an important role. Topology and learning are closely related.

Every user has their own IMHO, so you can't take half of the decision upon yourself.

We need to create a network constructor that is not limited to any presets, and that is as universal as possible.