My first description of this issue was not clear at all, I am sorry.

I didn't expect to get the same results in simulation and in reality.

But I do expect the optimization / forward results to be nearly the same as those of a single back-test with the same parameters (and the same period).

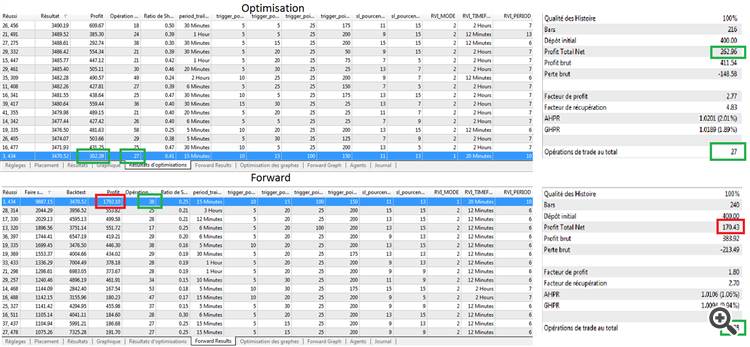

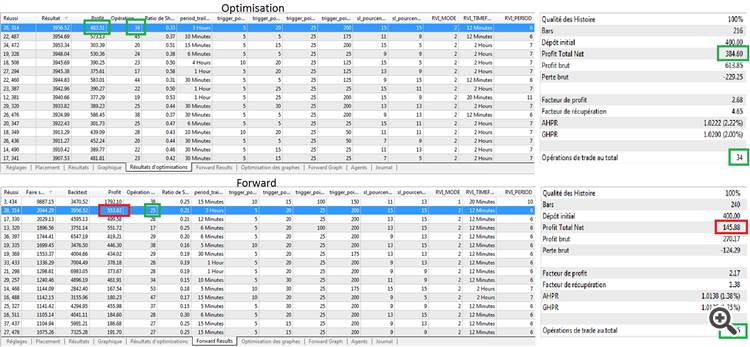

As you can see in my screenshots, the gap between the forward and single-test results is very large.

Ok, so you're talking about the walk-forward feature. What do you make of the difference between the number of bars in back-testing vs walk-forward?

I am not sure I understand your question, but as far as I know there is no difference in the result of the Bars function between back-testing and walk-forward. If there is one, it could be the reason for this gap, because much of my decision engine relies on this piece of code:

    int tmpBars;
    tmpBars = Bars(_symbol, INDICATOR_TIMEFRAME);
    if (EVERY_TICK == false && tmpBars == _bars) // where _bars and _symbol are two attributes of the class
        return true;
    _bars = tmpBars;
    // then the rest of the time-consuming code is executed...
    // so this way the analysis is done only once per INDICATOR_TIMEFRAME
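For comparison, here is a minimal sketch of a common variation on the gate above: it compares the open time of the current bar instead of the bar count, so it does not depend on how much history is loaded. The function name `IsNewBar` and the use of `PERIOD_H1` are assumptions made for illustration, not part of the EA discussed here, and whether the bar count has any bearing on the forward gap is not established in this thread.

```mql5
// Hypothetical helper (not the poster's class): returns true only on the
// first call after a new bar has opened on the given symbol and timeframe.
// It keeps a single static state, so use it for one symbol/timeframe pair only.
bool IsNewBar(const string symbol, const ENUM_TIMEFRAMES timeframe)
  {
   static datetime lastBarTime = 0;

   // Open time of the bar currently forming (shift 0).
   datetime currentBarTime = iTime(symbol, timeframe, 0);

   if(currentBarTime == 0)            // history not available yet
      return false;
   if(currentBarTime == lastBarTime)  // still inside the same bar
      return false;

   lastBarTime = currentBarTime;
   return true;
  }

void OnTick()
  {
   // PERIOD_H1 stands in for INDICATOR_TIMEFRAME here (assumption).
   if(!IsNewBar(_Symbol, PERIOD_H1))
      return;

   // ... the time-consuming analysis would run here, once per bar ...
  }
```

If both gates fire on the same ticks in a single back-test and in the forward pass, the gate itself can probably be ruled out as the source of the gap.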

Are the results of your optimization reproducible? I mean, do you get the same results if you run the optimization at different times?

Same question for the forward results: are they reproducible?

Even if the result of a single test is close to the optimization result, I don't find it normal that the results differ. You have to investigate why you get different results: check your code and data.

Well, they are not "exactly" reproducible: the result is different after each run. I think this is due to the random execution delay, which can affect my strategy (but can't explain the forward gap).

I probably found a bug last night. I launched a new optimization today and will watch how the gap between the results evolves.

Hello,

I have had an issue for 2 weeks: a big gap between the forward results and the simulation results.

My EA uses the Wininet library to execute a web query, because part of the EA's decision is driven by data crawled from the web; this data is centralized in a web service. The web service requires a timestamp. This way I am able to simulate what could have happened a few days ago with trades based on the analysis of this data.

It works perfectly for basic simulation and optimization: at the end of an optimization, I run my EA again with the best parameters, and the simulation results are very close to the optimization results.

But the forward results are totally broken: the best pass is supposed to give me 1792 $ of profit during its forward period, but at the end of the simulation the result was only 168 $.

I have enabled random execution delay, but this is not the cause of this big gap: my robot isn't a scalper, it usually keeps its positions for 4 to 6 hours.

It will be difficult for you to help me without the code attached, but for the moment I just want to know whether this issue is common or not.