Introduction

A recurrent neural network (RNN) is a neural network used to predict time series data. The family evolved from the plain RNN to LSTM (long short-term memory) and then to GRU (gated recurrent unit), each aimed at resolving issues of its predecessor. Here is a page showing the details of the models. In this post, I am not going to repeat the theory; instead I put an RNN model into practice in the MT4 strategy tester and in live trading.

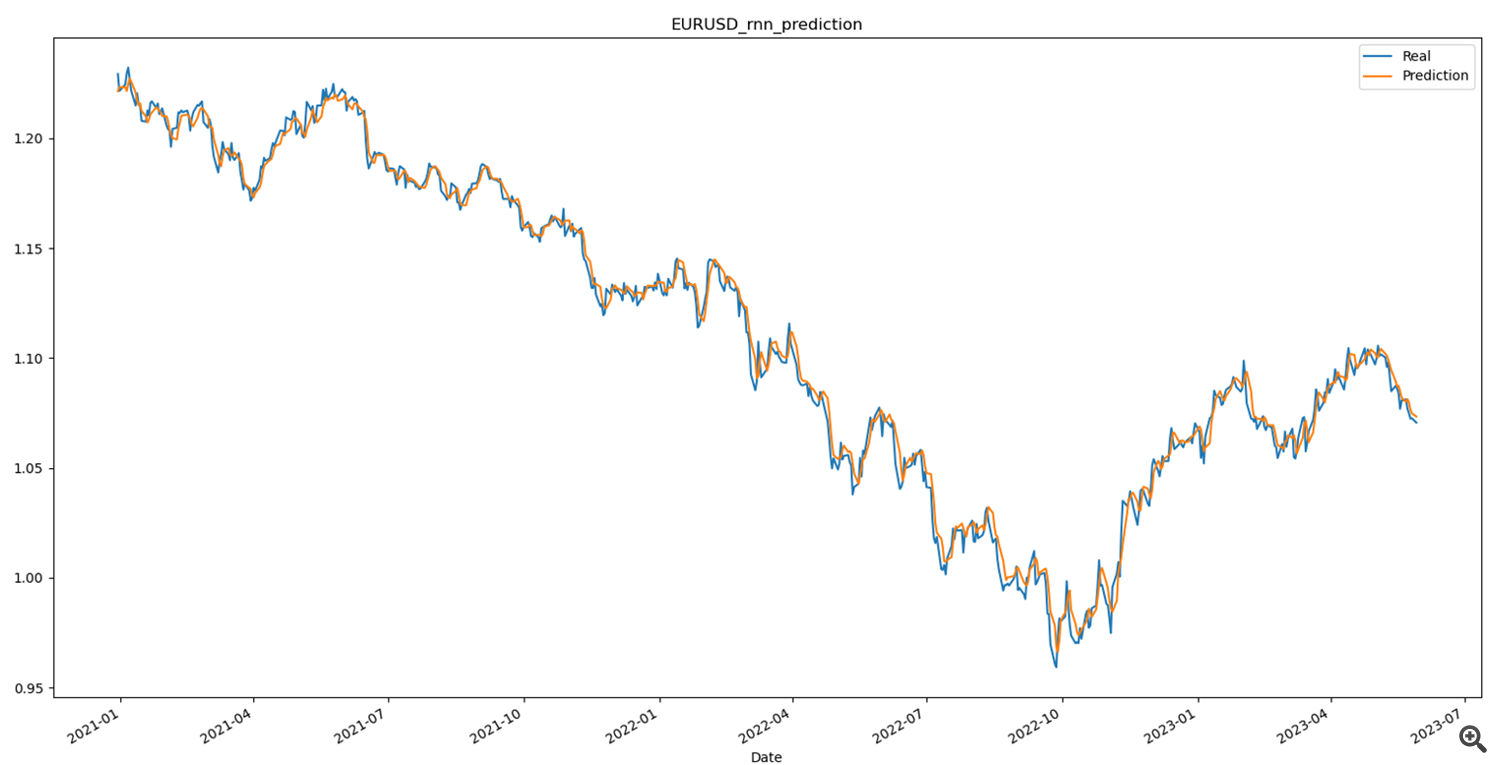

Here is an image showing the simulated prediction for the instrument EURUSD on daily prices.

Simulated prediction

As one can see, the prediction line almost overlaps the real values. Such a close prediction motivated me to put this model into an EA (Expert Advisor).

The bridge between python and MT4

To use the RNN from Python, we need a bridge that lets Python and MQL4 communicate. Python proxy is a library that does this.

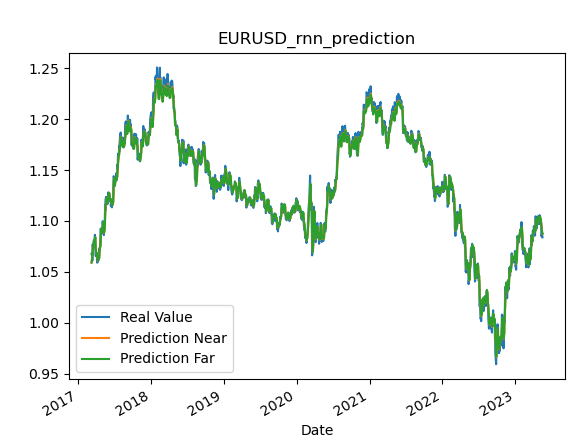

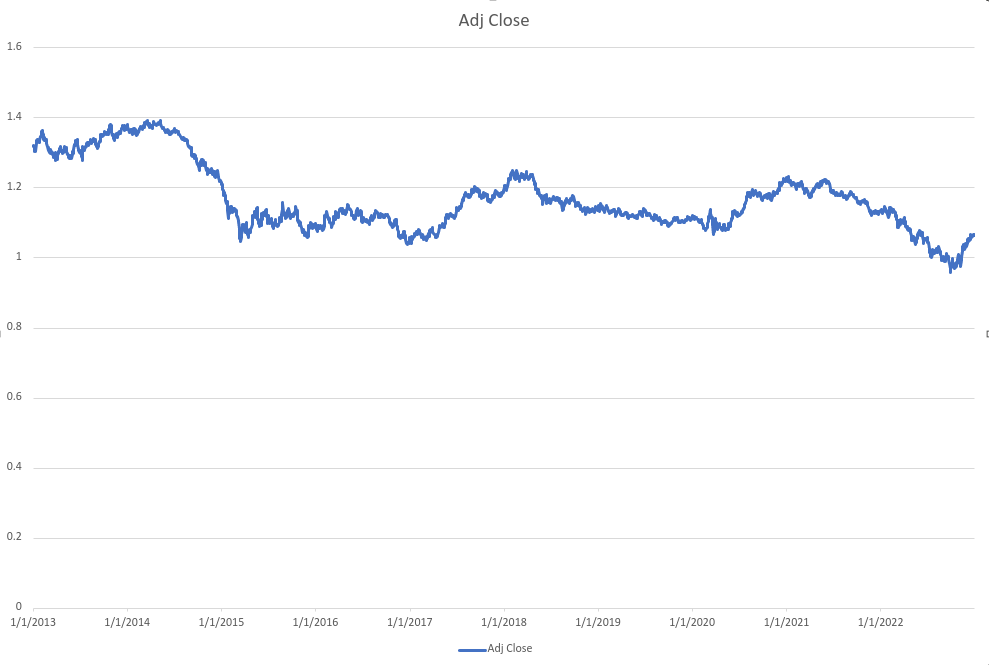

Here is one of the backtest results generated by the tester.

This result covers a one-year period, from 2022-06 to 2023-05. Although the result is positive, it is not a good example. I will explain in more detail later in this post.

Data preparation

I used data from Yahoo Finance to train the RNN model, because the historical data available from MT4 covers only 5 years, which may not be enough for model training.

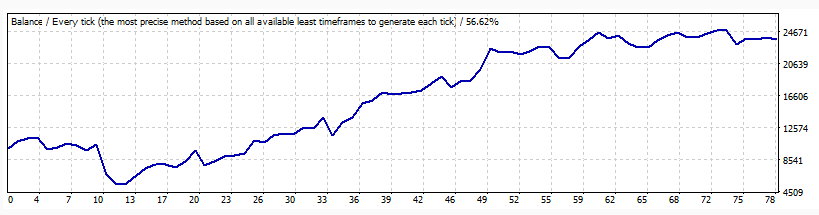

EURUSD from Yahoo Finance

RNN training

According to the article, the GRU model performs best. Here is the code for the model training.

    def GRU_model_regularization(self, X_train, y_train):
        '''
        create GRU model trained on X_train and y_train
        '''
        # create a model
        from tensorflow.keras.models import Sequential
        from tensorflow.keras.layers import Dense, SimpleRNN, GRU
        from tensorflow.keras.optimizers import SGD
        from tensorflow.keras.layers import Dropout

        # The GRU architecture
        my_GRU_model = Sequential()
        # First GRU layer with Dropout regularisation
        my_GRU_model.add(GRU(units=50,
                             return_sequences=True,
                             input_shape=(X_train.shape[1], 1),
                             activation='tanh'))
        my_GRU_model.add(Dropout(0.2))
        # Second GRU layer
        my_GRU_model.add(GRU(units=50, return_sequences=True, activation='tanh'))
        my_GRU_model.add(Dropout(0.2))
        # Third GRU layer
        my_GRU_model.add(GRU(units=50, return_sequences=True, activation='tanh'))
        my_GRU_model.add(Dropout(0.2))
        # Fourth GRU layer
        my_GRU_model.add(GRU(units=50, activation='tanh'))
        my_GRU_model.add(Dropout(0.2))
        # The output layer
        my_GRU_model.add(Dense(units=y_train.shape[1]))  # units= the number of the prediction output
        # Compiling the RNN
        my_GRU_model.compile(optimizer=SGD(learning_rate=0.01,
                                           decay=1e-7,
                                           momentum=0.9,
                                           nesterov=False),
                             loss='mean_squared_error')
        # Fitting to the training set
        my_GRU_model.fit(X_train, y_train, epochs=50, batch_size=150, verbose=0)
        return my_GRU_model

With this RNN model created, we will use it to predict the entire testing period.
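The training function reads the window length from `X_train.shape[1]` and the number of predicted values from `y_train.shape[1]`, so the price series has to be cut into fixed-length windows first. Here is a minimal sketch of that step in plain Python. The function name `make_windows`, the window length, and the horizon are assumptions for illustration and are not taken from the original training script.

```python
def make_windows(prices, window=5, horizon=1):
    """Split a price list into (inputs, targets) pairs for supervised training.

    Each input holds `window` consecutive prices, each wrapped in a
    one-element list so the nested shape is (samples, window, 1).
    Each target holds the `horizon` prices that follow the window.
    """
    X, y = [], []
    last_start = len(prices) - window - horizon
    for start in range(last_start + 1):
        end = start + window
        X.append([[p] for p in prices[start:end]])
        y.append(list(prices[end:end + horizon]))
    return X, y


# Hypothetical closing prices, not real EURUSD data.
closes = [1.10, 1.11, 1.12, 1.13, 1.14, 1.15, 1.16]
X_train, y_train = make_windows(closes, window=3, horizon=1)
```

Converting both lists with `numpy.asarray` gives arrays whose second dimension is the window length and the horizon respectively, which is what the model code above reads from `shape[1]`.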

Execution strategy 001

The idea is simple. We open a long position when the predicted return is above a positive threshold, open a short position when it is below a negative threshold, and hold no position when the predicted return is too small in either direction.
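The rule can be sketched as a small function. This is an illustration only: the names `expected_return` and `position_signal`, the symmetric threshold, and the sample numbers are assumptions, not the code shipped in the strategy files.

```python
def expected_return(last_close, predicted_close):
    """Relative change from the last known close to the predicted close."""
    return predicted_close / last_close - 1.0


def position_signal(predicted_return, threshold):
    """Map a predicted return to a position: 1 long, -1 short, 0 flat."""
    if threshold < 0:
        raise ValueError("threshold must be non-negative")
    if predicted_return > threshold:
        return 1
    if predicted_return < -threshold:
        return -1
    return 0  # predicted move is too small to trade


# Hypothetical prices and threshold.
signal = position_signal(expected_return(1.1000, 1.1055), threshold=0.002)
```

A reversed strategy would simply negate the returned value.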

Execution strategy 002

This strategy was a mistake: I created it accidentally during testing. It is the reverse of strategy 001. Oddly, it is the one that produced the best return, shown in the backtest result image above. Reversing the model's signal is not a justifiable decision, though, so I do not consider the result meaningful and I am not going to use it.

Testing result

The result is not good: no profit was generated. This is strange. Given that the prediction curve covers the real values, how could the result be bad? I plotted the prediction values produced during the backtest instead of looking at the simulated prediction. With that visualization, I realized where the problem is.

Backtest prediction

The backtest prediction is just the real value shifted in time, an EMA-like curve. Now it is obvious why the result is bad. The next question is why the simulated prediction is better than the backtest prediction. What is the difference between them?
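One way to catch a lagging forecast like this early is to compare the model's error with that of a naive forecast that simply repeats the previous value. The sketch below uses made-up numbers and hypothetical helper names (`mse`, `skill_vs_naive`); it is not part of the original files.

```python
def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def skill_vs_naive(actual, predicted):
    """Compare a forecast with the 'repeat the previous value' forecast.

    `predicted[i]` is the forecast for `actual[i + 1]`, so `predicted` is
    one element shorter than `actual`. Returns 1 - MSE(model) / MSE(naive):
    about 0 means no better than the naive forecast, 1 means perfect.
    """
    targets = actual[1:]
    naive = actual[:-1]  # previous value used as the forecast
    naive_error = mse(targets, naive)
    if naive_error == 0:
        raise ValueError("series is constant; the naive forecast is already perfect")
    return 1.0 - mse(targets, predicted) / naive_error


# Made-up prices, not real EURUSD data.
actual = [1.100, 1.104, 1.101, 1.107, 1.103, 1.109]
lagging_prediction = actual[:-1]  # a forecast that only echoes the last price
score = skill_vs_naive(actual, lagging_prediction)
```

A score near zero on out-of-sample data suggests the model adds little beyond the last known price, even if the plotted curve looks close to the real one.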

There is a step that normalizes the price values before prediction. In the simulation, the whole testing set is used in the normalization step, which introduces look-ahead bias. The backtest prediction does not have this bias.
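The effect can be made concrete with a small sketch. It assumes min-max scaling, since the post does not say which normalization was used, and the prices are hypothetical. `fit_min_max` and `scale` are illustrative helpers, not functions from the original scripts.

```python
def fit_min_max(values):
    """Return the (min, max) bounds used for scaling."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("cannot scale a constant series")
    return lo, hi


def scale(values, lo, hi):
    """Scale values so that lo maps to 0 and hi maps to 1."""
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical prices: the test period trades above the training range.
train = [1.00, 1.05, 1.10]
test = [1.15, 1.20]

# Biased: the bounds are computed from data that includes the future.
lo_all, hi_all = fit_min_max(train + test)
biased = scale(test, lo_all, hi_all)

# Unbiased: the bounds come from the training data only.
lo_train, hi_train = fit_min_max(train)
unbiased = scale(test, lo_train, hi_train)
```

With the biased bounds the test inputs stay inside the 0 to 1 range, while with training-only bounds they fall outside it. That the model behaves differently on such inputs is one plausible explanation for the gap between the simulated and the backtest predictions.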

Conclusion

The backtest result is bad because the RNN prediction is just another EMA. I am including all the needed files. If you are interested, you can continue the work.

Files

RNN_EA.py --- the EA connected to MT4

RNN_Strategy_002.py --- execution strategy

RNN_Strategy_003.py --- execution strategy

RNN_Trainer.py --- training script for the RNN model

data_download_from_yahoo.py --- download data from Yahoo Finance

requirements.txt --- python environment