Pause Giant AI Experiments!

We call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.

***

The authors ask the questions:

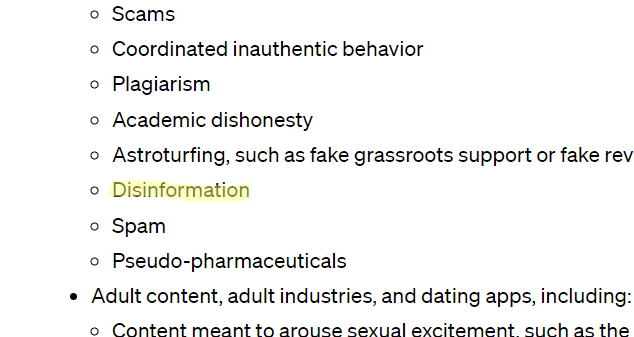

Should we allow computers and robots to clog our social media and websites with adverts and fake news?

Should we replace humans with automated systems wherever possible?

Won't this gradually lead to us becoming redundant and machines taking over all our work?

How far should we go in developing artificial intelligence to avoid losing control of it in the future?

The Russian company Sistemma has introduced a new product: SistemmaGPT, an AI chatbot analogous to ChatGPT.

The chatbot was built using both in-house developments and research results from the renowned Stanford University.

SistemmaGPT provides basic functions such as processing large amounts of data, communicating with customers, managing email or incoming calls and much more.

Short of...

In general, it's easy to guess that the government has its eye on AI developments. Musk is acting under the guise of concern for humanity.

It won't be long before independent developers start experimenting with AI in large-capacity data centres.

Here we are.

You're looking at the wrong thing. The key points are different.

There has been plenty of spam and misinformation for a long time, even without AI. Besides, filters can be built with that same AI.

It seems that technological advances (the assembly line, agricultural techniques) have relieved the meatbags of routine work and made their lives happier. Of course, some people were dissatisfied :)

GPT-5 is coming out in December. Let's say it isn't really AI, but if you can't distinguish it from a human in conversation, how can you tell? Honestly, I can't tell the people in the office apart either (whether they're normal or the kind with bugs)))

:)))

Strange that my question was perceived as a violation of the content policy...

Probably because of the reference to death. Maybe the prompt remotely suggests that the author is schizophrenic and suicidal.

I think the reason is that it couldn't understand the talk about dreams.

I really do often fly in my dreams, and I often have trouble landing from great heights.