
I don't know what MT4 is; it won't even run on Win10, and I think that's a good thing.

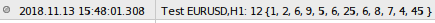

I tweaked the array.

I had to use ArrayCopy because MQL5 complained that the array was static.

If it's such a speed contest, I'll offer my own variant...

Your variant is indeed the fastest, but it contains a bug: if all elements of the array are equal to the filter, your function runs past the end of the array.

I will offer my own variant; it is a little slower than yours:

2018.11.13 17:16:38.618 massiv v1 (EURUSD,M1) test my=1512090

2018.11.13 17:16:40.083 massiv v1 (EURUSD,M1) test alien=1464941
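To make the edge case above concrete, here is a minimal sketch of an in-place removal that stays within bounds even when every element equals the filter. It is written in C++ rather than MQL5 so it can be compiled and checked on its own. The name `remove_value` is hypothetical and the code is not any participant's variant.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper, not a variant from the thread.
// Removes every element equal to `filter` from `a` in place and
// returns the number of elements kept.
std::size_t remove_value(std::vector<int>& a, int filter) {
    std::size_t write = 0;  // next free slot for a kept element
    for (std::size_t read = 0; read < a.size(); ++read) {
        if (a[read] != filter) {
            // A kept element is copied at most once, and only if it has to move.
            if (write != read) a[write] = a[read];
            ++write;
        }
    }
    // If every element matched the filter, write is still 0 here and the
    // array simply becomes empty; no index ever exceeds a.size() - 1.
    a.resize(write);
    return write;
}
```

Both indices are bounded by the loop condition, so the all-equal case needs no special handling.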

You have been asking these kinds of questions for several years now. Have you learned much? Sorry, but it is obvious that you are still at the level of bytes and elementary arrays.

The question itself is formulated incorrectly. The task is not to remove duplicate values (a GCE-level task) but something much bigger: you need to update the list of valid items. If so, the question should be worded completely differently. You confuse and mislead the participants and, first of all, yourself: you impose a fundamentally wrong solution on the participants and then ask them to make it efficient.

I don't do programming for the sake of programming, I have no goal to become a mega-programmer and to be clever on forums.

What don't you understand about the question: clear an array of specified elements?

Yes, thanks. Corrected.

But you have an error somewhere too: the checksum does not match because one element is missing somewhere. I haven't worked out where.

Tweaked it by removing unnecessary passes.

In both cases each element is moved at most once.

Yes, sorry, once indeed. I was hoping someone would take an interest in the DBMS approach and check it out; nobody did, so I had to do it myself.

Inserted ArrayDeleteValue.mq5 into your checker, it is twice as bad as yours. I thought about the reason and fixed two lines in it so that one third of the items would be removed instead of 0.1%.

That is how it turned out:

2018.11.13 19:45:22.148 Del (GBPUSD.m,H1) variant Pastushak: Checksum = 333586; elements - 667421; execution time = 108521 microseconds

2018.11.13 19:45:22.148 Del (GBPUSD.m,H1) variant Korotky: Checksum = 333586; elements - 667421; execution time = 5525 microseconds

2018.11.13 19:45:22.148 Del (GBPUSD.m,H1) variant Fedoseev: Checksum = 333586; elements - 667421; execution time = 4879 microseconds

2018.11.13 19:45:22.164 Del (GBPUSD.m,H1) variant Semko: Checksum = 333586; elements - 667421; execution time = 14479 microseconds

2018.11.13 19:45:22.179 Del (GBPUSD.m,H1) variant Pavlov: Checksum = 998744; elements - 667421; execution time = 0 microseconds

2018.11.13 19:45:22.179 Del (GBPUSD.m,H1) variant Nikitin: Checksum = 333586; elements - 667421; execution time = 5759 microseconds

2018.11.13 19:45:22.179 Del (GBPUSD.m,H1) variant Vladimir: Checksum = 333586; elements - 667421; execution time = 1542 microseconds

Pavlov's variant had an error, I had to comment it out.
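The checker itself is not shown in the thread, so the following is only a sketch of how such a comparison might work, written in C++ so it can be run standalone. It assumes the checksum is a plain sum of the remaining elements; that formula, and the names `Summary`, `summarize`, `reference` and `matches_reference`, are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical summary of a result array.
struct Summary {
    std::int64_t checksum;  // assumption: plain sum of the remaining elements
    std::size_t  count;     // number of remaining elements
};

Summary summarize(const std::vector<int>& a) {
    Summary s{0, a.size()};
    for (int v : a) s.checksum += v;
    return s;
}

// Reference result built with the standard erase-remove idiom.
Summary reference(std::vector<int> a, int filter) {
    a.erase(std::remove(a.begin(), a.end(), filter), a.end());
    return summarize(a);
}

// True when a variant's output agrees with the reference in both
// checksum and element count.
bool matches_reference(const std::vector<int>& original,
                       const std::vector<int>& result, int filter) {
    const Summary want = reference(original, filter);
    const Summary got  = summarize(result);
    return want.checksum == got.checksum && want.count == got.count;
}
```

A sum-based checksum catches a lost or surplus element but not a reordering, so a stricter checker would compare the arrays element by element.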

Conclusion: computing addresses in an array with arbitrary distances between the indices is still worse than processing elements in sequence with a fixed step, especially step 1, which the compiler can optimize.

P.S. Borland's Pascal and Delphi compilers arrange it so that the loop variable has no value in memory at runtime; it is kept somewhere in the CPU registers.

Pavlov's version has been corrected.

Your values are strange. Maybe you ran the script after profiling or debugging without recompiling the code?

That's how it works for me:

And your version generates an incorrect checksum. Creating an additional array is of no benefit at all; on the contrary, it slows down the process and eats up additional resources.

Only in my case it is done in blocks using ArrayCopy, so there is a speed advantage.
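The block-copy idea can be sketched as follows. This is a C++ illustration, not the poster's MQL5 code: `std::memmove` stands in for ArrayCopy, and the function name `remove_value_blocks` is hypothetical. Each run of consecutive kept elements is shifted down with one bulk copy instead of element by element.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical helper illustrating block-wise compaction.
// Removes every element equal to `filter` and returns the number kept.
std::size_t remove_value_blocks(std::vector<int>& a, int filter) {
    const std::size_t n = a.size();
    std::size_t write = 0;  // where the next kept run will be placed
    std::size_t i = 0;
    while (i < n) {
        while (i < n && a[i] == filter) ++i;   // skip a run of elements to delete
        const std::size_t start = i;
        while (i < n && a[i] != filter) ++i;   // measure the run of elements to keep
        const std::size_t len = i - start;
        // Shift the whole run in one call; memmove is safe for overlapping ranges.
        if (len > 0 && start != write)
            std::memmove(&a[write], &a[start], len * sizeof(int));
        write += len;
    }
    a.resize(write);
    return write;
}
```

Whether bulk copies win depends on how long the kept runs are: with few deletions the runs are long and the copies are cheap per element, while with many scattered deletions the runs shrink and the per-call overhead can dominate.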