Yes, it seems slower, although I haven't done any exact measurements.

However, what's far worse is that I have been getting incorrect results from the tester with recent builds! This happens especially when I run a genetic optimization and later re-run a specific pass as a single test to see its balance/equity graph. Sometimes good (or even the best) passes from the optimization produce far worse results when checked individually. The opposite happens as well, but those cases are much easier to spot.

For example, when I optimize two variables (full optimization), you would normally see a gradual shift in color in the 2D graph. Lately, though, I keep getting random 'white' spots in my 2D graph. When I verify them by hand, most of them perform better in a single test and would show the 'expected' color.

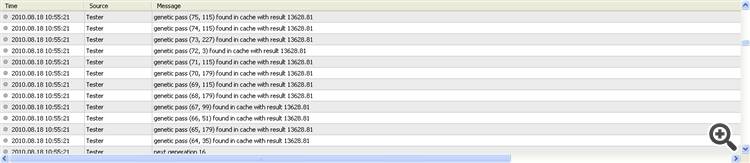

I don't know what is causing all this, but I suspect that at least something is wrong with the caching algorithm that tries to reuse values from previous optimization runs. I have seen numerous cases where an optimization with the same data set/EA but different input parameters returns very quickly, with every optimization result equal to the same single value. This value is taken from the cache (as reported in the journal). The only way out of this situation that I have been able to find is to recompile the EA, because then all values are recalculated.

I still haven't been able to reliably reproduce this behaviour in a small example, though, as I'm busy optimizing my entry for the ATC2010!

- www.mql5.com

I will add some examples of what I mean, because I just ran into another case of this behaviour.

Here are the optimization results. We're going to focus on the best pass (208) and the third one (281).

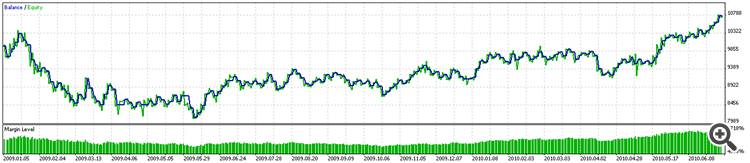

The graph for the best result??? (Pass 208)

The graph for the first correct result, Pass 281. (Pass 473 is incorrect as well.)

Here is what I think:

- Recompiling the expert or changing the optimization parameters will regenerate the cache.

- The genetic algorithm should search randomly, yet it gives almost the same results as the complete (full) optimization.

- Running a single test SHOULD give the same result as the optimization, BUT I found that it doesn't when I use open prices only for the optimization and every tick for the test.

Regarding the slowness, I found that MQL5InfoInteger(MQL5_TESTING) returns true during optimization (shouldn't it be false?). In any case, this is what triggered the slow calculations.

I just noticed that my expert now takes 100-300 seconds per pass, where it used to take 10-25 seconds before the recent terminal update. The same goes for every expert, even the example ones: all of them are much slower. It's the same PC, but MT5 CPU utilization is now very low, and the CPU temperature stays at 55-65 °C during optimization, whereas it used to reach 65-85 °C.

Has anyone else noticed the same, or is it just me?