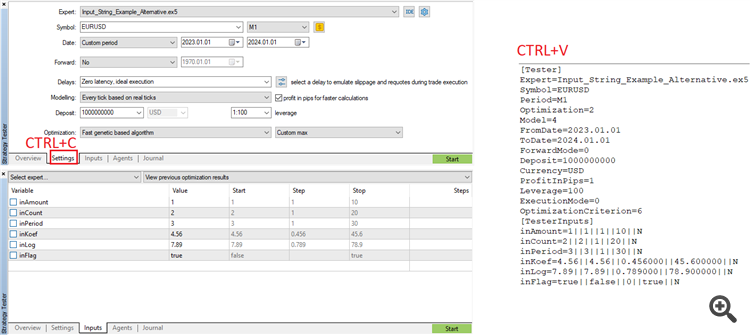

Two keyboard shortcuts work in the Settings/Inputs tabs: Ctrl+C and Ctrl+V.

Hello, I have a practical issue with the MT5 optimizer.

In neural network models, the number of weights grows very quickly with the number of nodes (roughly quadratically with the layer width in a fully connected network). It quickly becomes impossible to set them all manually. I haven't found a way to modify the steps (or bounds) of all parameters at once in the optimizer.

Do you have any idea how to do that?

Thanks.

You mean the ranges of the test?

You will need to save the current set file from the tester's optimization Inputs tab, open it with Notepad, and use the Replace function.

If you have a huge neural net, though, move the data to PyTorch and test there. You can also use AWS or PyTorch Lightning, which will save you time.

The most I managed with the tester was 4 layers and 90 nodes, if I recall (which is not big at all), and it reached 700K passes or something like that.
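For a sense of scale, here is a minimal sketch that counts the parameters of a fully connected network. The layer sizes are hypothetical and chosen only for illustration; they are not the network from this thread. It shows that doubling the hidden layer width roughly triples to quadruples the number of weights.

```python
def count_parameters(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists the node count of every layer, inputs first.
    """
    # Each pair of adjacent layers contributes (nodes_in * nodes_out) weights.
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # Every non-input node has one bias.
    biases = sum(layer_sizes[1:])
    return weights, biases


# Hypothetical layer sizes, for illustration only.
small = count_parameters([4, 8, 8, 1])
wide = count_parameters([4, 16, 16, 1])
print(small, wide)
```

Each weight and bias would be one optimizer input, which is why editing them one by one in the Inputs tab stops being practical very early.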

Yes, the ranges: start/stop.

OK, here is what I did:

Save the current set file as .ini so I can read it > open it with Notepad > replace all -1 (lower bound) with -2, for example, using Notepad's Replace command, and save it > open the new file from the terminal. So it works, thank you guys.
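The replace step above can also be scripted, which avoids accidentally changing a value that merely happens to contain "-1". The sketch below assumes each optimizable line in the saved file has the layout `name=value||start||step||stop||flag` and that comment lines start with `;`. Check this against your own file before relying on it. The function name `rewrite_ranges` and the parameter names `w0`, `w1`, and `Lots` are made up for the example.

```python
def rewrite_ranges(text, new_start=None, new_step=None, new_stop=None):
    """Return the set-file text with start/step/stop replaced on every
    line that matches the assumed 'name=value||start||step||stop||flag'
    layout. Other lines are passed through unchanged."""
    out = []
    for line in text.splitlines():
        # Comments (assumed to start with ';') and lines without '=' are kept.
        if line.startswith(";") or "=" not in line:
            out.append(line)
            continue
        name, _, rest = line.partition("=")
        fields = rest.split("||")
        if len(fields) != 5:
            # Not the assumed layout: leave the line untouched.
            out.append(line)
            continue
        value, start, step, stop, flag = fields
        if new_start is not None:
            start = str(new_start)
        if new_step is not None:
            step = str(new_step)
        if new_stop is not None:
            stop = str(new_stop)
        out.append(name + "=" + "||".join([value, start, step, stop, flag]))
    return "\n".join(out)


# Hypothetical file content, for illustration only.
sample = "\n".join([
    "; saved by the tester",
    "w0=0.5||-1||0.1||1||Y",
    "w1=0.2||-1||0.1||1||Y",
    "Lots=0.1",
])
print(rewrite_ranges(sample, new_start=-2, new_stop=2))
```

To use it on a real file, read the text, pass it through the function, and write it back. Files saved by the terminal may not be plain ASCII (UTF-16 is a likely candidate), so the encoding passed to `open()` is something to verify rather than assume.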

I don't know PyTorch or AWS; I will dive into them.

Does MT5 have a limited number of passes, or is it just slower?

PyTorch is more efficient if you are going the neural net route. It has built-in structures that work in parallel on GPUs (and AWS etc.), so you get more "bang" for your buck.

The advantage of the genetic algorithm is that you don't have to worry about learning rates and batches.

I believe the genetic approach works like this: when a local minimum is found, some weights are switched to random values to move away a bit and look for a new local minimum.

I don't remember how the learning rate is set, whether it is inversely proportional to the slope or something like that.
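The mutation idea described above can be sketched with a toy genetic algorithm. This is a generic textbook-style sketch, not the algorithm the MT5 tester actually uses. In a typical genetic algorithm, mutation is applied at random in every generation rather than being triggered by detecting a local minimum. The objective, target values, population size, and mutation rate below are all made up for illustration.

```python
import random


def loss(weights):
    # Toy objective: squared distance from a hypothetical target vector.
    target = [0.5, -0.25, 0.75]
    return sum((w - t) ** 2 for w, t in zip(weights, target))


def mutate(weights, rate, low, high, rng):
    # Each weight is replaced by a fresh random value with probability `rate`.
    return [rng.uniform(low, high) if rng.random() < rate else w
            for w in weights]


def crossover(a, b, rng):
    # Each weight of the child is taken from one of the two parents.
    return [rng.choice(pair) for pair in zip(a, b)]


def evolve(generations=50, pop_size=30, n_weights=3,
           low=-1.0, high=1.0, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(low, high) for _ in range(n_weights)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=loss)
        history.append(loss(population[0]))
        # Keep the better half unchanged, refill with mutated children.
        parents = population[: pop_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng),
                   0.2, low, high, rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    population.sort(key=loss)
    history.append(loss(population[0]))
    return population[0], history


best, history = evolve()
print(best, history[-1])
```

Note that there is no learning rate anywhere: the search is driven only by selection, crossover, and random mutation within the start/stop bounds.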

Yeah, you don't have a learning rate in the tester; you don't need one.
