Resilient back propagation (Rprop), an algorithm that can be used to train a neural network, is similar to the more common (regular) back propagation. But it has two main advantages over back propagation. First, training with Rprop is often faster than training with back propagation. Second, Rprop doesn't require you to specify any free parameter values, as opposed to back propagation, which needs a value for the learning rate (and usually for an optional momentum term). The main disadvantage of Rprop is that it's a more complex algorithm to implement than back propagation.

Think of a neural network as a complex mathematical function that accepts numeric inputs and generates numeric outputs. The values of the outputs are determined by the input values, the number of so-called hidden processing nodes, the hidden and output layer activation functions, and a set of weights and bias values. A fully connected neural network with m inputs, h hidden nodes, and n outputs has (m * h) + h + (h * n) + n weights and biases. For example, a neural network with 4 inputs, 5 hidden nodes, and 3 outputs has (4 * 5) + 5 + (5 * 3) + 3 = 43 weights and biases.
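The count of weights and biases can be checked with a few lines of code. The following is a minimal sketch in Python (not the demo's C#); the function name `count_weights_and_biases` and its parameter names are made up for illustration and assume a fully connected network with a single hidden layer.

```python
def count_weights_and_biases(num_input, num_hidden, num_output):
    """Count the weights and biases of a fully connected network
    with one hidden layer (hypothetical helper for illustration)."""
    input_to_hidden = num_input * num_hidden    # m * h weights
    hidden_biases = num_hidden                  # h biases
    hidden_to_output = num_hidden * num_output  # h * n weights
    output_biases = num_output                  # n biases
    return input_to_hidden + hidden_biases + hidden_to_output + output_biases


# The 4-5-3 network described in the text.
print(count_weights_and_biases(4, 5, 3))  # prints 43
```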

Training a neural network is the process of finding values for the weights and biases so that, for a set of training data with known input and output values, the computed outputs of the network closely match the known outputs. The most common technique used to train neural networks is the back-propagation algorithm. Back propagation requires a value for a parameter called the learning rate, and its effectiveness is highly sensitive to that value. Rprop was developed by researchers in 1993 in an attempt to improve upon the back-propagation algorithm.

A good way to get a feel for what Rprop is, and to see where this article is headed, is to take a look at the demo program in Figure 1. Instead of using real data, the demo program begins by creating 10,000 synthetic data items. Each data item has four features (predictor variables). The dependent Y value to predict can take on one of three possible categorical values. These Y values are encoded using 1-of-N encoding, so Y can be (1, 0, 0), (0, 1, 0), or (0, 0, 1).
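To make 1-of-N encoding concrete, here is a small sketch in Python (not the demo's C#). The helper name `encode_1_of_n` is hypothetical, and it assumes the three categorical values have already been mapped to the indices 0, 1 and 2.

```python
def encode_1_of_n(class_index, num_classes):
    """Return a 1-of-N (one-hot) vector: all 0.0 except a single 1.0
    at position class_index (hypothetical helper for illustration)."""
    if not 0 <= class_index < num_classes:
        raise ValueError("class_index must be in [0, num_classes)")
    encoded = [0.0] * num_classes
    encoded[class_index] = 1.0
    return encoded


# The three possible Y encodings for a three-class problem.
for index in range(3):
    print(encode_1_of_n(index, 3))
```

Each encoded vector has exactly one 1.0, so the position of that value identifies the class.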

After generating the synthetic data, the demo randomly split the data set into an 8,000-item set to be used to train a neural network using Rprop, and a 2,000-item test set to be used to estimate the accuracy of the resulting model.
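A random 80/20 split like the one just described can be sketched as follows. This is Python rather than the demo's C#, and it is only one reasonable approach (shuffle a copy, then slice); the function name `split_train_test`, the `train_fraction` parameter and the fixed `seed` are assumptions made for illustration.

```python
import random


def split_train_test(data, train_fraction=0.8, seed=0):
    """Shuffle a copy of the data, then slice it into a training set
    and a test set (hypothetical helper for illustration)."""
    rng = random.Random(seed)   # fixed seed so the split is repeatable
    shuffled = list(data)       # copy, so the caller's data is untouched
    rng.shuffle(shuffled)
    num_train = round(len(shuffled) * train_fraction)
    return shuffled[:num_train], shuffled[num_train:]


# Stand-in for the 10,000 synthetic items: just their indices.
all_items = list(range(10000))
train_items, test_items = split_train_test(all_items)
print(len(train_items), len(test_items))  # prints 8000 2000
```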

The first training item is:

-5.16 5.71 -0.82 -3.44 0.00 1.00 0.00

All four feature values are between -10.0 and +10.0.

You can imagine that the data corresponds to the problem of predicting which of three political parties (Republican, Democrat, Independent) a person is affiliated with, based on annual income, age, socio-economic status, and education level, where the feature values have been normalized. So the first line of data could correspond to a person who has lower-than-average income (-5.16), is older than average (5.71), has slightly lower-than-average socio-economic status (-0.82) and a lower-than-average education level (-3.44), and is a Democrat (0, 1, 0).

The Rprop algorithm is an iterative process. The demo set the maximum number of iterations (often called epochs) to 1,000. As training progressed, the demo computed and displayed the current error, based on the best weights and biases found at that point, every 100 epochs.

When finished training, the best values found for the 43 weights and biases were displayed. Using the best weights and bias values, the resulting neural network model had a predictive accuracy of 98.70 percent on the test data set.

This article assumes you have at least intermediate-level developer skills and a basic understanding of neural networks, but does not assume you know anything about the Rprop algorithm.

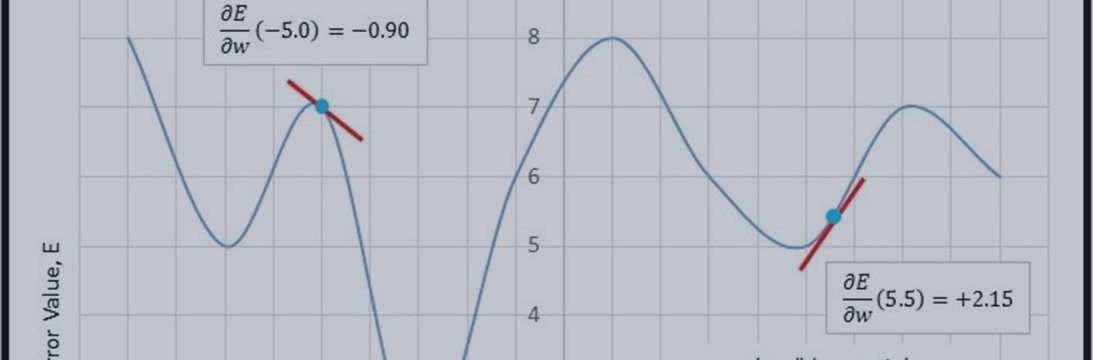

The demo program is coded in C#, but you shouldn't have too much trouble refactoring the demo code to another language.

Understanding Gradients and the Rprop Algorithm

Many machine learning algorithms, including Rprop, are based on a mathematical concept called the gradient. I think using a picture is the best way to explain what a gradient is. Take a look at the graph in Figure 2. The curve plots error vs. the value of a single weight.

The idea here is that you must have some measure of error (there are several), and that the value of the error will change as the value of one weight changes, assuming you hold the values of the other weights and biases the same. For a neural network with many weights and biases, there'd be graphs like the one in Figure 2 for every weight and bias.
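The idea of varying one weight while holding the others fixed can also be illustrated numerically. The sketch below is Python rather than the demo's C#, and it does not use a neural network at all: `toy_error` is a made-up two-weight error function standing in for a real error measure, and `partial_derivative` is a hypothetical helper that estimates the slope of the error curve for one weight using a central difference.

```python
def toy_error(weights):
    """Made-up error function of two weights, for illustration only.
    Its minimum is at weights = [1.0, -2.0]."""
    return (weights[0] - 1.0) ** 2 + (weights[1] + 2.0) ** 2


def partial_derivative(error_fn, weights, index, step=1e-5):
    """Estimate the slope of the error with respect to weights[index],
    holding every other weight fixed (central difference)."""
    higher = list(weights)
    lower = list(weights)
    higher[index] += step
    lower[index] -= step
    return (error_fn(higher) - error_fn(lower)) / (2.0 * step)


weights = [3.0, 0.0]
slope_w0 = partial_derivative(toy_error, weights, 0)
slope_w1 = partial_derivative(toy_error, weights, 1)
print(round(slope_w0, 4), round(slope_w1, 4))  # prints 4.0 4.0
```

A positive slope means that increasing the weight increases the error, so the weight should be decreased; a negative slope means the opposite. The collection of these slopes, one per weight and bias, is the gradient.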